论文阅读笔记 | (ICCV 2015) BoxSup

(ICCV 2015) BoxSup: Exploiting Bounding Boxes to Supervise Convolutional Networks for Semantic Segmentation

paper下载

Abstract

pixel-accurate监督需要大量的标签工作量,并限制深度网络的性能,而这些网络通常受益于更多的训练数据。

文章提出generating region proposals和训练CNN交替进行的方法,使用bounding box来部分代替mask进行图像语义分割的训练。

These two steps gradually recover segmentation masks for improving the networks, and vise versa. Our method, called “BoxSup”.

1. Introduction

pixel-level mask annotations非常耗时,令人沮丧,并且商业上获得成本昂贵。相反,bounding box annotations 比masks更经济。

尽管这些 box-level annotations不如pixel-level masks那么精确,就语义分割而言,它们的数量可能有助于改进训练深度网络。

文章采取无监督 region proposal方法来生成candidate segmentation masks。

CNN在这些approximate masks的监督下进行训练,更新的网络反过来改进了用于训练的estimated masks。

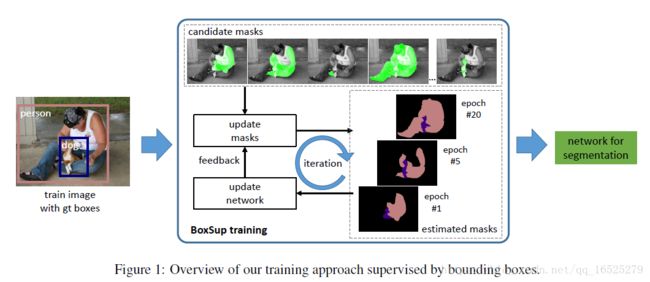

这个过程是迭代的。训练算法如图1所示。

该方法首先利用物体的位置框和MCG方法得到物体粗略的前景区域,并以此作为监督信息,结合FCN框架对网络的参数进行更新。进而,基于训练出的语义分割网络对物体框中的前景区域进行预测,提升前景区域的精度,并再次结合FCN框架对网络参数进行更新。BoxSup的核心思想就是通过这种迭代过程不断提升网络的语义分割能力。

此外,我们的半监督变体(其中9/10 mask annotations被bounding box annotations替代)与完全mask-supervised的对应物相比具有可比较的准确性。

成就:使用有限提供的mask annotations和额外大量的bounding box annotations,我们的方法在PASCAL VOC 2012和PASCAL-CONTEXT基准测试中实现了最新的结果。

2. Related Work

当前可用于对象检测,语义分割和许多其他视觉任务的可用数据集大都具有较少数量级的标记样本。而大量实验证明了数据集大小对于任务特定的网络训练的重要性。

R-CNN提出在大规模ImageNet数据集上,预训练深度网络作为分类器,并在训练数据有限的其他任务上继续进行训练(微调)。

对于语义分割,已有文献研究利用bounding box annotations代替masks。但是在这些工作中,box-level annotations并未用于监督深度卷积网络。

3. Baseline

在本文中,我们采用CRF精化的FCN方法作为mask-supervised基线。FCN用于分割 的情况,CRF用于后处理。

FCN的网络训练被formulate为ground-truth segmentation masks的逐像素(perpixel)回归问题。

objective function :

The objective function in Eqn.(1) demands pixel-level segmentation masks l(p) as supervision. It is not directly applicable if only bounding box annotations are given as supervision. Next we introduce our method for addressing this problem.

4. Approach

4.1. Unsupervised Segmentation for Supervised Training

为了利用bounding box annotations,期望从它们估计segmentation masks。

我们提出使用region proposal方法生成一组候选片段(candidate segments)。

candidate segments用于更新深度卷积网络。然后使用网络学习的语义特征来选择更好的candidates。这个过程是迭代的。

注意: region proposal仅用于网络训练,不会影响测试时间效率。

4.2. Formulation

作为预处理,我们使用region proposal方法来生成segmentation masks。 默认采用多尺度组合分组(Multiscale Combinatorial Grouping,MCG)。proposal candidate masks在整个训练练过程中都是固定的。 但是在训练期间,每个candidate mask将被分配一个可以是语义类别或背景的标签。 分配给masks的标签将被更新。

使用ground-truth bounding box annotation,我们希望它能尽可能多地挑选与box重叠的 candidate mask。

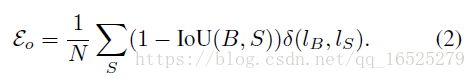

overlapping objective function :

利用candidate masks及其估计的语义标签,我们可以像在等式(1)中那样监督深度卷积网络。

regression objective function :

这个目标函数与公式(1)相同,除了其回归目标是estimated candidate segment。

我们最小化结合上述两个公式的objective function:

λ = 3 is a fixed weighting parameter

4.3. Training Algorithm

公式(4)中的目标函数涉及将标签分配给candidate segments的问题。接下来,我们提出一种贪婪迭代解法(greedy iterative solution)来寻找局部最优值。

1. 在网络参数λ固定的情况下,我们更新所有candidate segments的语义标签{lS}。我们只考虑一个ground-truth bounding box可以“激活”(即分配一个非背景标签)的情况,并且只有一个candidate。

为了增加样本方差以更好地进行随机训练,我们进一步采用随机抽样(random sampling)方法为每个ground-truth bounding box选择candidate segment。从largest costs的前k个segments 中随机抽样一个segment。设置k=5。

2. 在所有candidate segments的语义标签{lS}固定的情况下,我们更新网络参数λ。 在这种情况下,问题就变成了方程式(1)中的FCN问题。 利用SGD最小化目标函数。

我们迭代执行上述两个步骤,固定一组变量并解决另一组变量。 对于每次迭代,我们使用一个训练epoch来更新网络参数(即,所有训练图像都被访问一次),然后我们更新所有图像的segment labeling。该网络由在ImageNet分类数据集中预先训练的模型初始化,并且我们的算法从更新segment labels的步骤开始。

我们的方法适用于半监督情况(ground-truth annotations是segmentation masks 和 bounding boxes的混合)。 如果一个样本只有ground-truth boxes,那么标签l(p) 由上述candidate proposals给出,如果样本具有ground-truth masks,则简单地将其分配为真实标签。

5. Experiments

5.1. Experiments on PASCAL VOC 2012

We first evaluate our method on the PASCAL VOC 2012 semantic segmentation benchmark.This dataset involves 20 semantic categories of objects.

Comparisons of Supervision Strategies

Table 1 compares the results of using different strategies of supervision on the validation set.

This means that we can greatly reduce the labeling effort by dominantly using bounding box annotations.

The semi-supervised result (68.2) that uses VOC+COCO is considerably better than the strongly supervised result (63.8) that uses VOC only.

This comparison suggests a promising strategy - we may make use of the larger amount of existing bounding boxes annotations to improve the overall semantic segmentation results.

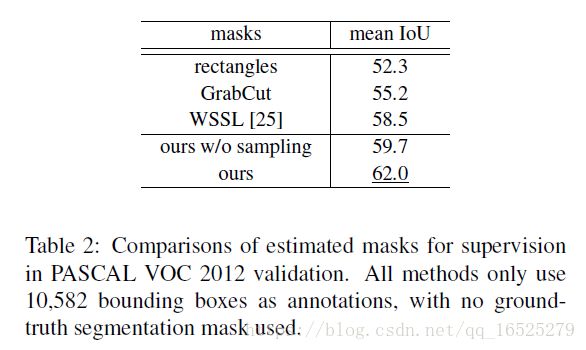

Comparisons of Estimated Masks for Supervision

In Table 2 we evaluate different methods of estimating masks from bounding boxes for supervision.

This result suggests that our method estimates more accurate masks than for supervision.

Comparisons of Region Proposals

Our method resorts to unsupervised region proposals for training. In Table 3, we compare the effects of various region proposals on our method: Selective Search (SS),Geodesic Object Proposals (GOP), and MCG.

Note that at test-time our method does not need region proposals. So the better accuracy of using MCG implies that our method effectively makes use of the higher quality segmentation masks to train a better network.

Comparisons on the Test Set

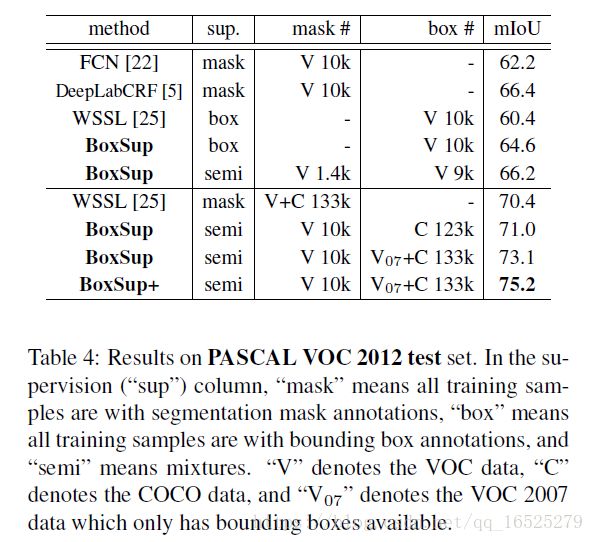

Next we compare with the state-of-the-art methods on the PASCAL VOC 2012 test set.In Table 4, the methods are based on the same FCN baseline and thus fair comparisons are made to evaluate the impact of mask/box/semisupervision.

This comparison demonstrates the power of our method that exploits large-scale bounding box annotations to improve accuracy.

Exploiting Boxes in PASCAL VOC 2007

To further demonstrate the effect of BoxSup, we exploit the bounding boxes in the PASCAL VOC 2007 dataset.

We train a BoxSup model using the union set of VOC 2007 boxes, COCO boxes, and the augmented VOC 2012 training set. The score improves from 71.0 to 73.1 (Table 4) because of the extra box training data. It is reasonable for us to expect further improvement if more bounding box annotations are available.

5.2 Experiments on PASCALCONTEXT

We further perform experiments on the recently labeled PASCAL-CONTEXT dataset.This dataset provides ground-truth semantic labels for the whole scene, including object and stuff (e.g., grass, sky, water).

To train a BoxSup model for this dataset, we first use the box annotations from all 80 object categories in the COCO dataset to train the FCN (using VGG-16).

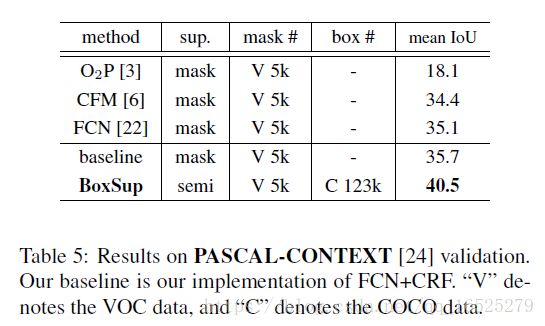

Table 5 shows the results in PASCAL-CONTEXT.

The 4.8% gain is solely because of the bounding box annotations in COCO that improve our network training.

6. Conclusion

The proposed BoxSup method can effectively harness bounding box annotations to train deep networks for semantic segmentation. Our BoxSup method that uses 133k bounding boxes and 10k masks achieves state-of-the-art results.Our error analysis suggests that semantic segmentation accuracy is hampered by the failure of recognizing objects, which large-scale data may help with.