Incompatibility when integrating Hive-1.2.1 with HBase-1.3.1

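When integrating Hive with HBase, the two communicate through hive-hbase-handler.jar, which makes this jar critical. If we use the hive-hbase-handler-x.y.z.jar that ships in $HIVE_HOME/lib, the integration usually fails with an error such as: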

19/01/29 21:44:54 [htable-pool1-t1]: DEBUG ipc.RpcClientImpl: Use SIMPLE authentication for service ClientService, sasl=false

19/01/29 21:44:54 [htable-pool1-t1]: DEBUG ipc.RpcClientImpl: Connecting to app-dev-cms/192.168.4.62:16020

19/01/29 21:44:54 [main]: ERROR exec.DDLTask: java.lang.NoSuchMethodError: org.apache.hadoop.hbase.HTableDescriptor.addFamily(Lorg/apache/hadoop/hbase/HColumnDescrip

at org.apache.hadoop.hive.hbase.HBaseStorageHandler.preCreateTable(HBaseStorageHandler.java:214)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.createTable(HiveMetaStoreClient.java:664)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.createTable(HiveMetaStoreClient.java:657)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.invoke(RetryingMetaStoreClient.java:156)

at com.sun.proxy.$Proxy9.createTable(Unknown Source)

at org.apache.hadoop.hive.ql.metadata.Hive.createTable(Hive.java:714)

at org.apache.hadoop.hive.ql.exec.DDLTask.createTable(DDLTask.java:4135)

at org.apache.hadoop.hive.ql.exec.DDLTask.execute(DDLTask.java:306)

at org.apache.hadoop.hive.ql.exec.Task.executeTask(Task.java:160)

at org.apache.hadoop.hive.ql.exec.TaskRunner.runSequential(TaskRunner.java:88)

at org.apache.hadoop.hive.ql.Driver.launchTask(Driver.java:1653)

at org.apache.hadoop.hive.ql.Driver.execute(Driver.java:1412)

at org.apache.hadoop.hive.ql.Driver.runInternal(Driver.java:1195)

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1059)

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1049)

at org.apache.hadoop.hive.cli.CliDriver.processLocalCmd(CliDriver.java:213)

at org.apache.hadoop.hive.cli.CliDriver.processCmd(CliDriver.java:165)

at org.apache.hadoop.hive.cli.CliDriver.processLine(CliDriver.java:376)

at org.apache.hadoop.hive.cli.CliDriver.executeDriver(CliDriver.java:736)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:681)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:621)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:226)

at org.apache.hadoop.util.RunJar.main(RunJar.java:141)

FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask. org.apache.hadoop.hbase.HTableDescriptor.addFamily(Lorg/apache/hadoop/hbase/HColu

19/01/29 21:44:54 [main]: ERROR ql.Driver: FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask. org.apache.hadoop.hbase.HTableDescriptase/HColumnDescriptor;)V

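This shows that the Hive and HBase versions are incompatible: the ";)V" at the end of the signature in the NoSuchMethodError means the handler was compiled against an HTableDescriptor.addFamily that returns void, and HBase 1.3.1 does not provide a method with that exact signature. We therefore have to recompile hive-hbase-handler.jar ourselves against the Hive and HBase versions we actually installed, i.e. build it with our own Hive and HBase jars as dependencies and repackage it.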
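In the error, (Lorg/apache/hadoop/hbase/HColumnDescriptor;)V is a JVM method descriptor: the part in parentheses lists the parameter types and whatever follows the closing parenthesis is the return type, where V means void. As a minimal bash sketch, the hypothetical helper `descriptor_return_type` below decodes that return type; it only handles primitive and plain object types, not arrays.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the Java return type encoded after the last ')'
# of a JVM method descriptor. Array types ("[...") are not handled.
descriptor_return_type() {
  local ret="${1##*)}"              # strip everything up to and including the last ')'
  case "$ret" in
    V) echo "void" ;;
    Z) echo "boolean" ;;
    B) echo "byte" ;;
    C) echo "char" ;;
    S) echo "short" ;;
    I) echo "int" ;;
    J) echo "long" ;;
    F) echo "float" ;;
    D) echo "double" ;;
    L*\;)                           # object type, written as Lpkg/Name;
      ret="${ret#L}"                # drop the leading 'L'
      ret="${ret%;}"                # drop the trailing ';'
      echo "${ret//\//.}" ;;        # turn '/' separators into '.'
    *) echo "unknown: $ret" ;;
  esac
}
```

For example, `descriptor_return_type 'addFamily(Lorg/apache/hadoop/hbase/HColumnDescriptor;)V'` prints `void`. To compare with what your HBase actually ships, `javap -s -cp "$HBASE_HOME/lib/hbase-client-1.3.1.jar" org.apache.hadoop.hbase.HTableDescriptor` lists each public method together with its descriptor (assuming HTableDescriptor is packaged in hbase-client in your build); if addFamily appears there with a descriptor that does not end in `)V`, the old handler cannot link against it.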

1. Recompile hive-hbase-handler.jar

(1) Download the source code

Download apache-hive-1.2.1-src.tar.gz and extract it.

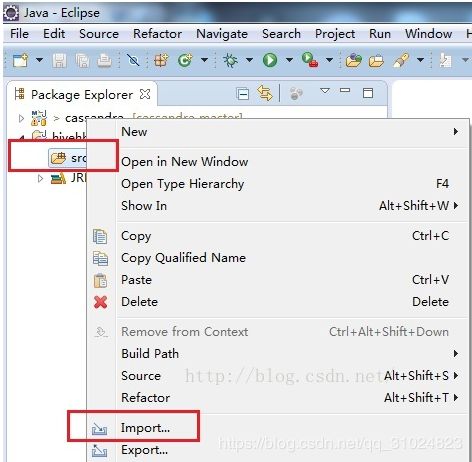
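The same step from the command line, as a minimal sketch: the archive URL pattern used by the hypothetical helpers `hive_src_url` and `fetch_hive_src` is an assumption about the Apache archive layout, so verify it or substitute a mirror before relying on it.

```bash
#!/usr/bin/env bash
# Hypothetical helpers. The archive URL pattern is an assumption about the
# Apache archive layout; verify it before use or substitute a mirror.
hive_src_url() {
  local version="$1"
  echo "https://archive.apache.org/dist/hive/hive-${version}/apache-hive-${version}-src.tar.gz"
}

# Download the source tarball into the current directory and extract it.
fetch_hive_src() {
  local version="$1" url
  url="$(hive_src_url "$version")"
  curl -fLO "$url" || return 1                   # -f: fail on HTTP errors, -L: follow redirects
  tar -zxf "apache-hive-${version}-src.tar.gz"   # expected to create apache-hive-<version>-src/
}
```

Usage: `fetch_hive_src 1.2.1`.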

(2) Import into Eclipse

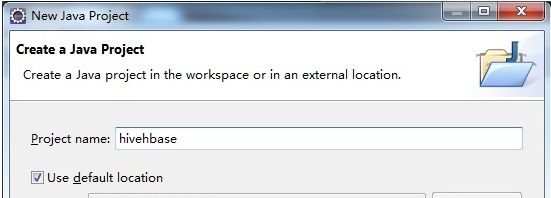

First, create a new Java project in Eclipse. Any project name will do, e.g. hivehbase.

In the Hive source tree, locate the hbase-handler source. Be sure to import the java directory under hbase-handler, not the hbase-handler module itself.

Then select the org directory.
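The Eclipse import amounts to placing the handler's org package tree in the project's source folder. A minimal sketch of the same thing at the file-system level, assuming the handler sources sit under hbase-handler/src/java in the extracted Hive tree and that the Eclipse project keeps its sources in src/; `copy_handler_sources` is a hypothetical name:

```bash
#!/usr/bin/env bash
# Hypothetical helper: copy the handler's "org" package tree from the Hive
# source into the src/ folder of an Eclipse project (e.g. hivehbase).
# Assumes the handler sources live under hbase-handler/src/java.
copy_handler_sources() {
  local hive_src="$1"      # e.g. apache-hive-1.2.1-src
  local project="$2"       # e.g. ~/workspace/hivehbase (hypothetical path)
  local from="$hive_src/hbase-handler/src/java/org"
  if [ ! -d "$from" ]; then
    echo "not found: $from" >&2
    return 1
  fi
  mkdir -p "$project/src"
  cp -R "$from" "$project/src/"   # yields $project/src/org/...
}
```

For example, `copy_handler_sources apache-hive-1.2.1-src ~/workspace/hivehbase`, then refresh the project in Eclipse.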

(3) Add the jar dependencies

Copy all jars from $HIVE_HOME/lib and $HBASE_HOME/lib into the project's lib directory and add them to the build path.
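Adding every jar to the build path is, on the command line, the same as handing javac one colon-separated classpath. A minimal sketch with a hypothetical `jar_classpath` helper, offered as an alternative to Eclipse rather than as the procedure this article follows:

```bash
#!/usr/bin/env bash
# Hypothetical helper: join every *.jar in the given directories into one
# colon-separated classpath, the command-line equivalent of "Build Path".
jar_classpath() {
  local classpath="" dir jar
  for dir in "$@"; do
    for jar in "$dir"/*.jar; do
      [ -e "$jar" ] || continue            # skip the literal pattern when no jar matches
      classpath="${classpath:+$classpath:}$jar"
    done
  done
  echo "$classpath"
}
```

For example: `javac -cp "$(jar_classpath "$HIVE_HOME/lib" "$HBASE_HOME/lib")" -d classes $(find src -name '*.java')`, with the classes directory created beforehand. When the same library appears in both directories in different versions, the entry listed first is the one javac resolves against, which is one reason the ZooKeeper jars have to agree.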

Note: the zookeeper-xxx.jar in $HIVE_HOME/lib, in $HBASE_HOME/lib, and in the project's lib directory must all be replaced with the version you are actually running.

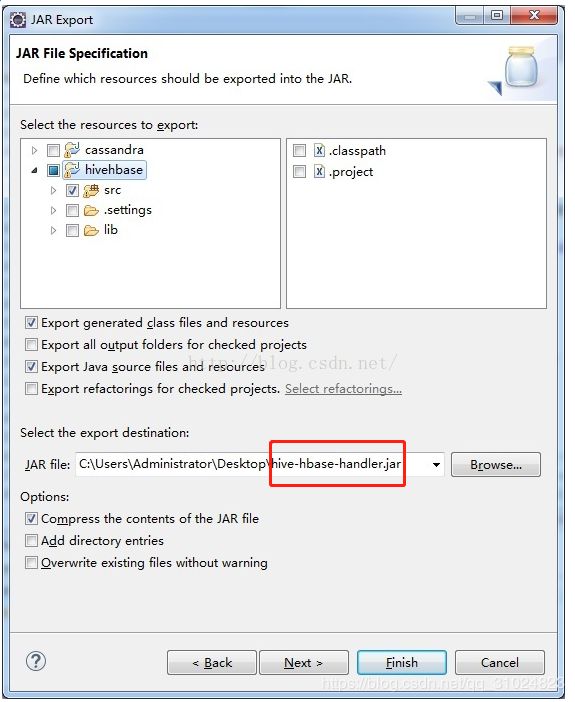
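To see which ZooKeeper jars each directory actually contains before and after swapping them, here is a minimal sketch with a hypothetical `check_zookeeper_jars` helper: it prints the zookeeper-*.jar names per directory and returns non-zero when the sets differ. It cannot know which ZooKeeper version your cluster runs; that still has to be checked by hand.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the zookeeper-*.jar file names in each directory
# and return 1 if the directories do not all contain the same set.
check_zookeeper_jars() {
  local dir names reference="" seen=0 status=0
  for dir in "$@"; do
    # File names only, sorted, space-separated ("" if the directory has none).
    names="$(cd "$dir" 2>/dev/null && ls zookeeper-*.jar 2>/dev/null | sort | tr '\n' ' ')"
    echo "$dir: ${names:-<none>}"
    if [ "$seen" -eq 0 ]; then
      reference="$names"; seen=1
    elif [ "$names" != "$reference" ]; then
      status=1
    fi
  done
  return "$status"
}
```

For example: `check_zookeeper_jars "$HIVE_HOME/lib" "$HBASE_HOME/lib" ~/workspace/hivehbase/lib` (the project path is hypothetical).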

(4) Export the jar and use it to replace the one in $HIVE_HOME/lib

Note: the exported jar must be named hive-hbase-handler.jar.
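Replacing the jar can be scripted so that no older handler is left next to the new one. A minimal sketch with a hypothetical `install_handler_jar` helper; as a design choice it moves the old hive-hbase-handler*.jar files into a backup directory outside $HIVE_HOME/lib instead of deleting them, which keeps them off Hive's classpath but recoverable:

```bash
#!/usr/bin/env bash
# Hypothetical helper: install a freshly exported handler jar as
# <hive_lib>/hive-hbase-handler.jar and move every older
# hive-hbase-handler*.jar out of <hive_lib> into <backup>.
# The new jar must NOT already live in <hive_lib>, or it would be moved away first.
install_handler_jar() {
  local new_jar="$1"     # jar exported from Eclipse
  local hive_lib="$2"    # normally $HIVE_HOME/lib
  local backup="$3"      # any directory outside $hive_lib
  local old
  [ -f "$new_jar" ] || { echo "missing: $new_jar" >&2; return 1; }
  mkdir -p "$backup"
  for old in "$hive_lib"/hive-hbase-handler*.jar; do
    [ -e "$old" ] && mv "$old" "$backup/"   # skip when the pattern matched nothing
  done
  cp "$new_jar" "$hive_lib/hive-hbase-handler.jar"
}
```

For example: `install_handler_jar ~/export/hive-hbase-handler.jar "$HIVE_HOME/lib" ~/hive-lib-backup` (both home-directory paths are hypothetical).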

2. Points to note:

1. Replace the zookeeper-xxx.jar in $HIVE_HOME/lib and $HBASE_HOME/lib with the zookeeper-xxx.jar you are currently using.

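2. In $HIVE_HOME/lib, replace the original handler jar with the hive-hbase-handler.jar you compiled. Be sure to delete the old one rather than leaving both in the same directory; otherwise you will still get the error ERROR exec.DDLTask: java.lang.NoSuchMethodError: org.apache.hadoop.hbase.HTableDescriptor.addFamily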

3. When running Hive statements, print the DEBUG log directly to the console so that errors are easier to locate:

bin/hive -hiveconf hive.root.logger=DEBUG,console

4. Hive needs some of HBase's jars at run time.

(Method 1) Manually copy the missing jars

Copy jars from the ./hbase/lib/ directory to the $HIVE_HOME/lib/ directory.

These include hbase*.jar, metrics*.jar, and htrace*.jar:

cp $HBASE_HOME/lib/hbase*.jar $HIVE_HOME/lib

cp $HBASE_HOME/lib/metrics*.jar $HIVE_HOME/lib

cp $HBASE_HOME/lib/htrace*.jar $HIVE_HOME/lib
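Before copying, it can help to see which of these jars Hive's lib directory is still missing. A minimal sketch with a hypothetical `missing_hbase_jars` helper; it compares by file name only, so a different version of the same library stored under another name is not detected and such duplicates still need to be checked by eye:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the hbase*, metrics* and htrace* jar names from
# the HBase lib directory that do not yet exist (by file name) in Hive's lib.
missing_hbase_jars() {
  local hbase_lib="$1" hive_lib="$2" jar
  for jar in "$hbase_lib"/hbase*.jar "$hbase_lib"/metrics*.jar "$hbase_lib"/htrace*.jar; do
    [ -e "$jar" ] || continue                      # pattern matched nothing
    [ -e "$hive_lib/$(basename "$jar")" ] || basename "$jar"
  done
}
```

For example: `missing_hbase_jars "$HBASE_HOME/lib" "$HIVE_HOME/lib"` lists the candidates for the cp commands above without copying anything.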

(Method 2) Set the classpath environment variables (an export only lasts for the current bash session):

export HBASE_HOME=/opt/module/hbase-1.3.1

export HADOOP_HOME=/opt/module/hadoop-2.7.7

export HADOOP_CLASSPATH=`${HBASE_HOME}/bin/hbase mapredcp`

export HIVE_HOME=/opt/module/hive

3. Restart Hive to verify:

bin/hive -hiveconf hive.root.logger=DEBUG,console

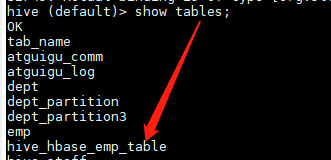

After running the following HQL in Hive, you will see that the table has been created in both HBase and Hive:

CREATE TABLE hive_hbase_emp_table(

empno int,

ename string,

job string,

mgr int,

hiredate string,

sal double,

comm double,

deptno int)

STORED BY 'org.apache.hadoop.hive.hbase.HBaseStorageHandler'

WITH SERDEPROPERTIES ("hbase.columns.mapping" = ":key,info:ename,info:job,info:mgr,info:hiredate,info:sal,info:comm,info:deptno")

TBLPROPERTIES ("hbase.table.name" = "hbase_emp_table");
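The mapping is positional: the n-th entry of hbase.columns.mapping belongs to the n-th Hive column, and :key marks the column used as the HBase row key, so the DDL above stores empno as the row key and the other seven columns in the info family. A minimal sketch with a hypothetical `columns_mapping` helper that builds such a string for the common case where the first column is the key and every other column keeps its own name as the qualifier:

```bash
#!/usr/bin/env bash
# Hypothetical helper: build an hbase.columns.mapping value in which the first
# Hive column is the HBase row key (":key") and each remaining column,
# given as an argument, is stored as <family>:<column name>.
columns_mapping() {
  local family="$1"; shift
  local mapping=":key" col
  for col in "$@"; do
    mapping="$mapping,$family:$col"
  done
  echo "$mapping"
}
```

`columns_mapping info ename job mgr hiredate sal comm deptno` prints exactly the mapping used in the CREATE TABLE statement above; the key column (empno) is not passed because it is always mapped to :key.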