Solving the HBase hotspot problem (pre-splitting)

I. Causes of hotspots

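1. Data in HBase is sorted by rowkey in lexicographic order. When a large number of consecutive rowkeys are written to a few regions, data becomes unevenly distributed across the regions;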

2. The table was created without pre-splitting. A new table has only one region by default, so all writes go to that single region;

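3. The table was pre-split, but the rowkeys follow no pattern that matches the split keys, so writes still pile up in a few regions. The rowkey should be composed of regionNo + messageId.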

II. How to solve hotspots

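The key is to design rowkeys that spread data evenly. As with a primary key in a relational database, the rowkey is what you use to look up a record: rows in an HBase table are accessed by rowkey. A rowkey can be an arbitrary string (the hard limit is 32,767 bytes, i.e. Short.MAX_VALUE, although 64KB is often quoted; in practice rowkeys are usually 10-100 bytes). Internally HBase stores the rowkey as a byte array, and rows are stored sorted by rowkey in lexicographic (byte) order.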
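To make the ordering concrete, here is a small sketch (plain Java 9+, no HBase dependency) that compares rowkeys the way HBase does: byte by byte, as unsigned values. The class and method names are hypothetical. It shows that numeric-looking keys sort as strings, and that monotonically increasing keys such as timestamps always sort after every existing key, which is why they keep hitting the region at the end of the key space.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper: orders rowkeys as unsigned byte arrays,
// which is how HBase compares row keys.
final class RowKeyOrder {

    static int compare(String a, String b) {
        byte[] x = a.getBytes(StandardCharsets.UTF_8);
        byte[] y = b.getBytes(StandardCharsets.UTF_8);
        // Lexicographic, unsigned comparison; a prefix sorts before longer keys (Java 9+)
        return Arrays.compareUnsigned(x, y);
    }

    static List<String> sorted(List<String> keys) {
        List<String> copy = new ArrayList<>(keys);
        copy.sort(RowKeyOrder::compare);
        return copy;
    }

    public static void main(String[] args) {
        // Keys are compared as bytes, not numbers: prints [10, 100, 9]
        System.out.println(sorted(Arrays.asList("9", "10", "100")));
        // Increasing timestamps always sort after the existing keys,
        // so new writes keep landing in the last region
        System.out.println(sorted(Arrays.asList("1545105002973", "1545105001972", "1545105003973")));
    }
}
```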

Table creation command:

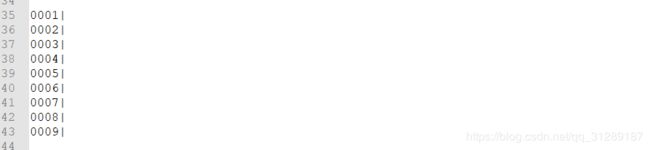

```
create 'testTable',{NAME => 'cf', DATA_BLOCK_ENCODING => 'NONE', BLOOMFILTER => 'ROW', REPLICATION_SCOPE=> '0', VERSIONS => '1', COMPRESSION => 'snappy', MIN_VERSIONS =>'0', TTL => '15552000', KEEP_DELETED_CELLS => 'false', BLOCKSIZE =>'65536', IN_MEMORY => 'false', BLOCKCACHE => 'true', METADATA =>{'ENCODE_ON_DISK' => 'true'}},{SPLITS_FILE=>'/app/soft/test/region.txt'}
```

Contents of region.txt:

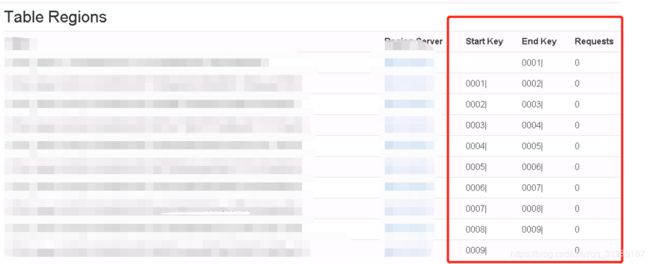

Here I pre-split the table into 10 regions. After running the command, the HBase console shows the new regions, which confirms that the pre-split worked.
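The contents of region.txt are only shown as an image. Judging from the text (split keys 0001| through 0009|, 10 regions), the file most likely holds one split key per line. The sketch below assumes exactly that and generates such a file; the class name, method names and default output path are hypothetical.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

// Hypothetical generator for an HBase SPLITS_FILE.
// Assumption: one split key per line, "0001|" ... "0009|".
final class SplitFileGenerator {

    // N regions need N - 1 split keys
    static List<String> splitKeys(int regionCount) {
        List<String> keys = new ArrayList<>();
        for (int i = 1; i < regionCount; i++) {
            // 4-digit zero-padded number followed by '|' (ASCII 124),
            // which sorts after every digit and letter
            keys.add(String.format("%04d|", i));
        }
        return keys;
    }

    public static void main(String[] args) throws IOException {
        Path out = Paths.get(args.length > 0 ? args[0] : "region.txt");
        Files.write(out, splitKeys(10), StandardCharsets.UTF_8);
        System.out.println("wrote " + out.toAbsolutePath());
    }
}
```

With these 9 split keys, the 10 prefixes 0001 to 0010 each land in a different region: 0001... sorts before 0001|, and 0010... sorts after 0009|.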

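1. First rowkey design: random number + messageId. If you want recent data to be fast to get, you can add a timestamp as well. My split keys run from 0001| to 0009|. Because HBase sorts data lexicographically, a rowkey such as 0002rer4343343422 is stored in the region [0001|, 0002|): my messageIds consist only of letters and digits, and the ASCII value of "|" (124) is greater than that of any letter or digit, so every key starting with 0002 sorts after 0001| and before 0002|.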
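The region a rowkey belongs to can be worked out without a cluster: a region holds keys from its start key (inclusive) up to its end key (exclusive), so the region index equals the number of split keys that are less than or equal to the rowkey. The sketch below uses hypothetical names and assumes the split keys 0001| to 0009|. It checks the example above and also shows that a bare timestamp rowkey (no prefix) would always land in the last region, which is cause 3 from section I.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical helper: finds which pre-split region a rowkey falls into.
final class RegionLocator {

    // Assumed split keys, as described in the text: 10 regions
    static final List<String> SPLITS = Arrays.asList(
            "0001|", "0002|", "0003|", "0004|", "0005|",
            "0006|", "0007|", "0008|", "0009|");

    // Region i covers [SPLITS[i-1], SPLITS[i]); region 0 starts at the empty key.
    // String.compareTo matches HBase's byte order here because the keys are ASCII.
    static int regionIndex(String rowKey) {
        int idx = 0;
        for (String split : SPLITS) {
            if (rowKey.compareTo(split) >= 0) {
                idx++;
            } else {
                break; // SPLITS is sorted, so no later split can be <= rowKey
            }
        }
        return idx;
    }

    public static void main(String[] args) {
        // The example from the text: region 1, i.e. [0001|, 0002|)
        System.out.println(regionIndex("0002rer4343343422"));
        // No prefix: '1' > '0', so the key sorts after every split key (region 9)
        System.out.println(regionIndex("1545105001972b998a8dfc05a4a819284213d4e727a85"));
    }
}
```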

Utility class that generates the regionNo: RegionUtils

```java
package com.cn.dl;

import java.util.concurrent.ThreadLocalRandom;

/**
 * Created by Tiger on 2018/4/18.
 */
public class RegionUtils {

    // Ten pre-split regions
    private static final int REGION_NUM = 10;

    // Holds the regionNo prefixes: 0001, 0002, ..., 0009, 0010
    private static final String[] REGION_ARRAY = new String[REGION_NUM];

    static {
        initRegionArray();
    }

    /**
     * Generate the 4-digit, zero-padded regionNo prefixes.
     */
    private static void initRegionArray() {
        for (int i = 1; i <= REGION_NUM; i++) {
            REGION_ARRAY[i - 1] = String.format("%04d", i);
        }
    }

    /**
     * Pick a regionNo at random.
     * @return regionNo
     */
    public static String getRegionNo() {
        // ThreadLocalRandom avoids creating a new Random on every call;
        // use REGION_NUM instead of a hard-coded 10
        return REGION_ARRAY[ThreadLocalRandom.current().nextInt(REGION_NUM)];
    }

    public static void main(String[] args) {
        for (int i = 0; i < 100; i++) {
            System.out.println(getRegionNo());
        }
    }
}
```
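A quick way to check that a random prefix spreads writes evenly is to draw many prefixes and count them per region. The sketch below mirrors the idea of RegionUtils.getRegionNo() but uses a seeded Random so the run is repeatable; the class name, seed and sample size are arbitrary illustrative choices.

```java
import java.util.Random;

// Hypothetical check: how evenly a random prefix spreads writes over the regions.
final class PrefixDistribution {

    static int[] countPerRegion(int regionCount, int samples, long seed) {
        Random random = new Random(seed); // seeded for a repeatable run
        int[] counts = new int[regionCount];
        for (int i = 0; i < samples; i++) {
            // Same idea as RegionUtils.getRegionNo(): pick one region uniformly
            counts[random.nextInt(regionCount)]++;
        }
        return counts;
    }

    public static void main(String[] args) {
        int[] counts = countPerRegion(10, 100_000, 42L);
        for (int i = 0; i < counts.length; i++) {
            System.out.printf("%04d -> %d%n", i + 1, counts[i]);
        }
    }
}
```

Each region should receive roughly a tenth of the samples; the same uniformity is what keeps write load balanced across the pre-split regions.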

Generating the rowKey:

```java
// TODO: 2018/12/18 just an example of generating a rowKey
// (a Storm bolt method: `collector` is the bolt's OutputCollector, JSONObject is fastjson's)
public void execute(Tuple tuple) {
    try {
        JSONObject json = JSONObject.parseObject(tuple.getStringByField("messageSpout"));
        String messageId = json.getString("messageId");
        // TODO: 2018/12/18 rowKey = regionNo + timestamp + messageId; adding the timestamp can improve query efficiency in HBase
        String rowKey = RegionUtils.getRegionNo() + System.currentTimeMillis() + messageId;
        json.put("rowKey", rowKey);
        System.out.println(json.toJSONString());
    } catch (Exception e) {
        e.printStackTrace();
    } finally {
        // Ack the tuple whether or not processing succeeded
        collector.ack(tuple);
    }
}
```

Printed output: rowkey = regionNo + timestamp + messageId, with a random prefix:

{"name":"name2","messageId":"b998a8dfc05a4a819284213d4e727a85","age":12,"rowKey":"00041545105001972b998a8dfc05a4a819284213d4e727a85"}

{"name":"name3","messageId":"799affbf346641e8a00bfeee78ffcdb4","age":13,"rowKey":"00031545105002973799affbf346641e8a00bfeee78ffcdb4"}

{"name":"name4","messageId":"bbdf16b9a12b4fa09b060402f9522fed","age":14,"rowKey":"00051545105003973bbdf16b9a12b4fa09b060402f9522fed"}

{"name":"name5","messageId":"03c119868cd742459464df53c3827147","age":15,"rowKey":"0009154510500497403c119868cd742459464df53c3827147"}

{"name":"name6","messageId":"84c682681cdc4ac09ad3d270741074d3","age":16,"rowKey":"0002154510500597484c682681cdc4ac09ad3d270741074d3"}

{"name":"name7","messageId":"aecbd65f3f434452ab4a924d8e42b947","age":17,"rowKey":"00091545105006976aecbd65f3f434452ab4a924d8e42b947"}

{"name":"name8","messageId":"3bcb23e414e5450898b6b0eefff4d80a","age":18,"rowKey":"000315451050079783bcb23e414e5450898b6b0eefff4d80a"}

{"name":"name9","messageId":"40be62bfcea24ea799ae6f191241c5e8","age":19,"rowKey":"0002154510500897840be62bfcea24ea799ae6f191241c5e8"}

{"name":"name10","messageId":"94c220cd10d141c89cf08e61d0a48e7f","age":20,"rowKey":"0007154510500997894c220cd10d141c89cf08e61d0a48e7f"}

{"name":"name11","messageId":"0796f735b1ba43beb7b15d63c4fd4ec8","age":21,"rowKey":"000515451050109780796f735b1ba43beb7b15d63c4fd4ec8"}

{"name":"name12","messageId":"05ac8417e52443f48bf2c56879b3e2c6","age":22,"rowKey":"0009154510501197805ac8417e52443f48bf2c56879b3e2c6"}

{"name":"name13","messageId":"b87484b633b747ba8320cdf69334459b","age":23,"rowKey":"00101545105012978b87484b633b747ba8320cdf69334459b"}

{"name":"name14","messageId":"84c6daf1cdfd4c0f977a8742ee528977","age":24,"rowKey":"0005154510501397984c6daf1cdfd4c0f977a8742ee528977"}

{"name":"name15","messageId":"8e01e5c53d024de18507ed2a98e38519","age":25,"rowKey":"000415451050149808e01e5c53d024de18507ed2a98e38519"}

{"name":"name16","messageId":"48939394581946e881b91e797659d6ca","age":26,"rowKey":"0005154510501598248939394581946e881b91e797659d6ca"}

{"name":"name17","messageId":"b5c2024c721642a5a2b4cc8682c7cd40","age":27,"rowKey":"00021545105016981b5c2024c721642a5a2b4cc8682c7cd40"}

{"name":"name18","messageId":"4efb10fc351947f6a78761e7b3bf1783","age":28,"rowKey":"000215451050179824efb10fc351947f6a78761e7b3bf1783"}

{"name":"name19","messageId":"a4a27bafd5f749d0b08d9eb832120737","age":29,"rowKey":"00041545105018983a4a27bafd5f749d0b08d9eb832120737"}

{"name":"name20","messageId":"1d90758cbcc2495197b2ef0a05e58610","age":30,"rowKey":"000815451050199831d90758cbcc2495197b2ef0a05e58610"}

{"name":"name21","messageId":"47ae3fb0e3914496b75445df92d0a133","age":31,"rowKey":"0002154510502098347ae3fb0e3914496b75445df92d0a133"}

{"name":"name22","messageId":"419dccbeb2b74484997bd5373f8347af","age":32,"rowKey":"00071545105021982419dccbeb2b74484997bd5373f8347af"}

{"name":"name23","messageId":"51e38da4c9c74542bcd38be704ff3fec","age":33,"rowKey":"0005154510502298351e38da4c9c74542bcd38be704ff3fec"}

{"name":"name24","messageId":"e99e783220d14f63be1f967f82bd69fb","age":34,"rowKey":"00081545105023983e99e783220d14f63be1f967f82bd69fb"}

{"name":"name25","messageId":"0e15f181464146598dfe44976676c706","age":35,"rowKey":"000915451050249840e15f181464146598dfe44976676c706"}

{"name":"name26","messageId":"d0d8c0e939b44a688915714b85d61b1f","age":36,"rowKey":"00101545105025985d0d8c0e939b44a688915714b85d61b1f"}

{"name":"name27","messageId":"4a7d3404871a4137b9db4e24209f1346","age":37,"rowKey":"000515451050269844a7d3404871a4137b9db4e24209f1346"}

{"name":"name28","messageId":"51a5f39f032241fcaa6e5956a9ddb474","age":38,"rowKey":"0002154510502798651a5f39f032241fcaa6e5956a9ddb474"}

{"name":"name29","messageId":"c54ec6f46fa047fdae6187afad55e8f0","age":39,"rowKey":"00011545105028986c54ec6f46fa047fdae6187afad55e8f0"}

{"name":"name30","messageId":"076322780dae4748997006d53aa246c1","age":40,"rowKey":"00021545105029987076322780dae4748997006d53aa246c1"}

{"name":"name31","messageId":"563c258840a84f12ad1a5341381b163c","age":41,"rowKey":"00101545105030986563c258840a84f12ad1a5341381b163c"}

{"name":"name32","messageId":"377f3fbe63634fc4aa93b1737dd462da","age":42,"rowKey":"00051545105031988377f3fbe63634fc4aa93b1737dd462da"}

{"name":"name33","messageId":"5c1af0f26cc94d0b85d0fbca617e57ad","age":43,"rowKey":"000715451050329895c1af0f26cc94d0b85d0fbca617e57ad"}

{"name":"name34","messageId":"2d3050a0b3dd42df97ef87d45dbc0d26","age":44,"rowKey":"000715451050339902d3050a0b3dd42df97ef87d45dbc0d26"}

{"name":"name35","messageId":"526401e1f5ab45aeb95421e0ac200e4b","age":45,"rowKey":"00041545105034990526401e1f5ab45aeb95421e0ac200e4b"}

Summary:

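This rowkey design solves the hotspot problem, but it requires a mapping table, e.g. storing the rowkey in a relational or NoSQL database, because the regionNo prefix is random and you cannot otherwise know which rowkey a record has in HBase. Also, because the prefix is random, one batch of data is spread over several regions, so you have to get it from several regions and cannot fetch it with a single startkey/endkey range.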
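Reading one time window back under this design therefore means one scan per prefix. The sketch below (hypothetical names, pure Java, no HBase client) computes a [startRow, stopRow) pair for each of the 10 prefixes, assuming the layout regionNo + 13-digit millisecond timestamp + messageId used above. Each pair could then serve as the start and stop row of a separate scan; the fixed 13-digit width is what makes string order match time order.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: per-prefix scan ranges for rowkey = regionNo + timestamp + messageId.
final class FanOutRanges {

    // Half-open row range [startRow, stopRow)
    static final class Range {
        final String startRow;
        final String stopRow;

        Range(String startRow, String stopRow) {
            this.startRow = startRow;
            this.stopRow = stopRow;
        }

        @Override
        public String toString() {
            return "[" + startRow + ", " + stopRow + ")";
        }
    }

    // Covers timestamps in [fromMillis, toMillis) for every prefix 0001..regionCount
    static List<Range> ranges(int regionCount, long fromMillis, long toMillis) {
        List<Range> result = new ArrayList<>();
        for (int i = 1; i <= regionCount; i++) {
            String prefix = String.format("%04d", i);
            // Assumes 13-digit millisecond timestamps, so string order equals time order
            result.add(new Range(prefix + fromMillis, prefix + toMillis));
        }
        return result;
    }

    public static void main(String[] args) {
        for (Range r : ranges(10, 1545105001000L, 1545105035000L)) {
            System.out.println(r);
        }
    }
}
```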

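2. Second rowkey design: derive the regionNo from the messageId. Data is still spread evenly over the regions, and because the prefix is computed from the messageId, the rowkey can be rebuilt from the messageId alone and a batch can be fetched with startkey and endkey. The messageId-to-regionNo mapping uses consistent hashing, which Karger et al. at MIT proposed in 1997 for distributed caching; its design goal was to relieve hot spots on the Internet.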

References:

https://baike.baidu.com/item/%E4%B8%80%E8%87%B4%E6%80%A7%E5%93%88%E5%B8%8C/2460889?fr=aladdin (Baidu Baike, 一致性哈希 / consistent hashing)

https://www.cnblogs.com/lpfuture/p/5796398.html

```java
import java.io.Serializable;
import java.text.DecimalFormat;
import java.util.Collection;
import java.util.SortedMap;
import java.util.TreeMap;

public class ConsistentHash implements Serializable {

    private static final long serialVersionUID = 1L;

    private final HashFunction hashFunction;

    // Number of virtual nodes per region
    private final int numberOfReplicas;

    // Maps the hash of each virtual node to its real node
    private final SortedMap<Long, String> circle = new TreeMap<>();

    public ConsistentHash(HashFunction hashFunction, int numberOfReplicas, Collection<String> nodes) {
        this.hashFunction = hashFunction;
        this.numberOfReplicas = numberOfReplicas;
        for (String node : nodes) {
            add(node);
        }
    }

    /**
     * Add a node.
     * @param node
     * @see java.util.TreeMap
     */
    public void add(String node) {
        for (int i = 0; i < numberOfReplicas; i++) {
            /*
             * Different virtual nodes (different i) have different hash values,
             * but all of them map to the same real node.
             * Virtual nodes are spread roughly evenly around the ring; a key is
             * stored on the first virtual node clockwise from its hash.
             */
            circle.put(hashFunction.getHashValue(node + i), node);
        }
    }

    /**
     * Remove a node.
     * @param node
     * @see java.util.TreeMap
     */
    public void remove(String node) {
        for (int i = 0; i < numberOfReplicas; i++) {
            circle.remove(hashFunction.getHashValue(node + i));
        }
    }

    /**
     * Hash the key, then find the real node that stores it.
     */
    public String get(Object key) {
        if (circle.isEmpty()) {
            return null;
        }
        long hash = hashFunction.getHashValue(key.toString());
        // If the hash falls between two virtual nodes, walk clockwise to the next one,
        // wrapping around to the first node on the ring
        if (!circle.containsKey(hash)) {
            SortedMap<Long, String> tailMap = circle.tailMap(hash);
            hash = tailMap.isEmpty() ? circle.firstKey() : tailMap.firstKey();
        }
        return circle.get(hash);
    }

    /**
     * Number of (virtual) nodes on the hash ring.
     */
    public long getSize() {
        return circle.size();
    }

    /**
     * Format a double with four decimal places.
     */
    public String getDecimalPoint(double num) {
        DecimalFormat df = new DecimalFormat("0.0000");
        return df.format(num);
    }
}
```

```java
import java.io.Serializable;

public class HashFunction implements Serializable {

    private static final long serialVersionUID = 1L;

    /**
     * Compute a non-negative hash value for a string.
     * @param key
     * @return hash value
     */
    public long getHashValue(String key) {
        // FNV-style loop: XOR in each char, then multiply by p
        // (the standard 32-bit FNV prime and offset basis are 16777619 and 2166136261)
        final int p = 1677761999;
        int hash = (int) 216613626111L;
        for (int i = 0; i < key.length(); i++) {
            hash = (hash ^ key.charAt(i)) * p;
        }
        // Extra bit mixing
        hash += hash << 13;
        hash ^= hash >> 8;
        hash += hash << 3;
        hash ^= hash >> 18;
        hash += hash << 5;
        // Take the absolute value if negative; widening to long first avoids
        // Math.abs(Integer.MIN_VALUE) staying negative
        return Math.abs((long) hash);
    }
}
```

I am currently happy with the second approach. On top of it I build an index table in Elasticsearch: to read data, I first get the rowkey from ES, then fetch the row from HBase. How you do this depends on your own business needs.
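To tie the second design together, here is a compact, self-contained sketch of the messageId-to-regionNo mapping and the resulting rowkey. It is not the author's class: it swaps in the standard 32-bit FNV-1a hash instead of HashFunction, and the names, the 100 virtual nodes per region and the rowkey layout regionNo + messageId are illustrative assumptions. What it shows is that the prefix is deterministic, so the same messageId always yields the same rowkey and a get by messageId needs no lookup table.

```java
import java.nio.charset.StandardCharsets;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical sketch: consistent-hash ring mapping messageId -> regionNo.
// Uses standard 32-bit FNV-1a instead of the author's HashFunction.
final class RegionRing {

    private final TreeMap<Long, String> ring = new TreeMap<>();

    RegionRing(int regionCount, int virtualNodesPerRegion) {
        for (int r = 1; r <= regionCount; r++) {
            String regionNo = String.format("%04d", r);
            for (int v = 0; v < virtualNodesPerRegion; v++) {
                // Each virtual node gets its own position on the ring
                ring.put(fnv1a32(regionNo + "#" + v), regionNo);
            }
        }
    }

    // 32-bit FNV-1a over UTF-8 bytes, returned as an unsigned value in a long
    static long fnv1a32(String s) {
        int hash = 0x811c9dc5;                 // offset basis 2166136261
        for (byte b : s.getBytes(StandardCharsets.UTF_8)) {
            hash ^= (b & 0xff);
            hash *= 0x01000193;                // FNV prime 16777619
        }
        return hash & 0xffffffffL;
    }

    // Walk clockwise: first virtual node at or after the key's hash, wrapping around
    String regionFor(String messageId) {
        Map.Entry<Long, String> e = ring.ceilingEntry(fnv1a32(messageId));
        return (e != null ? e : ring.firstEntry()).getValue();
    }

    // Assumed layout for design 2: regionNo + messageId
    String rowKeyFor(String messageId) {
        return regionFor(messageId) + messageId;
    }

    public static void main(String[] args) {
        RegionRing ring = new RegionRing(10, 100);
        String id = "b998a8dfc05a4a819284213d4e727a85";
        // Deterministic: rebuilding the ring and calling again yields the same rowkey
        System.out.println(ring.rowKeyFor(id));
    }
}
```

Virtual nodes are the design choice that matters here: with only one point per region, the arcs between points (and thus the share of keys per region) would be very uneven; many points per region average those arcs out.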

If you spot anything wrong, feel free to point it out and I will fix it promptly. Thanks!