Implementing Extractive Text Summarization

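1. Introduction

1. The automatic summarization implemented here is based on word-frequency statistics.

2. An article is made up of sentences, and all of its information is carried by those sentences. Some sentences carry a lot of information, others very little.

3. The amount of information in a sentence is measured by its "keywords": the more keywords a sentence contains, the more important it is.

4. "Automatic summarization" therefore means finding the sentences that carry the most information, that is, the sentences that contain the most keywords.

5. To do this, we count how often each keyword occurs and rank the words by frequency, use the ranked list to score every sentence in the document, and pick out the highest-scoring sentences. Those sentences are the summary.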
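As a minimal sketch of this idea (a simplification, not the implementation given later): assuming lowercase whitespace-separated tokens and a keyword set that is already known, a sentence's score can simply be the number of keyword tokens it contains. The names `count_keyword_score` and `pick_sentences` are hypothetical.

```python
def count_keyword_score(sentence_tokens, keywords):
    """Score a sentence by how many of its tokens are keywords."""
    return sum(1 for token in sentence_tokens if token in keywords)


def pick_sentences(sentences, keywords, k):
    """Return the k sentences with the most keywords, in their original order."""
    # Assumption: whitespace tokenization stands in for a real word segmenter
    tokenized = [s.lower().split() for s in sentences]
    ranked = sorted(range(len(sentences)),
                    key=lambda i: count_keyword_score(tokenized[i], keywords),
                    reverse=True)[:k]
    return [sentences[i] for i in sorted(ranked)]


sentences = ["Inflation fell to zero",
             "The committee met in August",
             "Inflation and growth remain weak"]
print(pick_sentences(sentences, {"inflation", "growth"}, 2))
# ['Inflation fell to zero', 'Inflation and growth remain weak']
```

Luhn's method, described in section 3, refines this raw count by also looking at how close together the keywords are.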

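2. Implementation steps

1. Load the stop words

2. Split the document into a list of sentences

3. Segment each sentence into words

4. Count word frequencies and keep the 100 most frequent words as keywords

5. Score each sentence using the keyword list

6. Return the 5 highest-scoring sentences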
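Steps 1 to 4 can be sketched with the standard library alone. This version rests on stated assumptions: sentences are split with a regular expression on the same punctuation set used later (`.!?。!?`), tokens are lowercase `\w+` runs standing in for a real segmenter such as jieba, and the stop-word set is passed in directly instead of being loaded from a file. `split_sentences` and `top_keywords` are hypothetical names.

```python
import re
from collections import Counter


def split_sentences(text):
    """Step 2: split after . ! ? 。 ! ? while keeping the punctuation."""
    # Zero-width split after each delimiter (requires Python 3.7+)
    parts = re.split(r'(?<=[.!?。!?])', text)
    return [p.strip() for p in parts if p.strip()]


def top_keywords(sentences, stopwords, n=100):
    """Steps 3-4: tokenize, drop stop words and 1-char tokens, keep the n most frequent."""
    words = [w for s in sentences for w in re.findall(r'\w+', s.lower())
             if w not in stopwords and len(w) > 1]
    return [w for w, _ in Counter(words).most_common(n)]


sents = split_sentences("Rates rose. Rates fell! Growth is slow.")
print(sents)                           # ['Rates rose.', 'Rates fell!', 'Growth is slow.']
print(top_keywords(sents, {"is"}, 1))  # ['rates']
```

`\w+` treats an unbroken run of Chinese characters as a single token, which is why the real implementations below rely on a word segmenter (jieba or IK Analyzer) instead.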

3. Principle

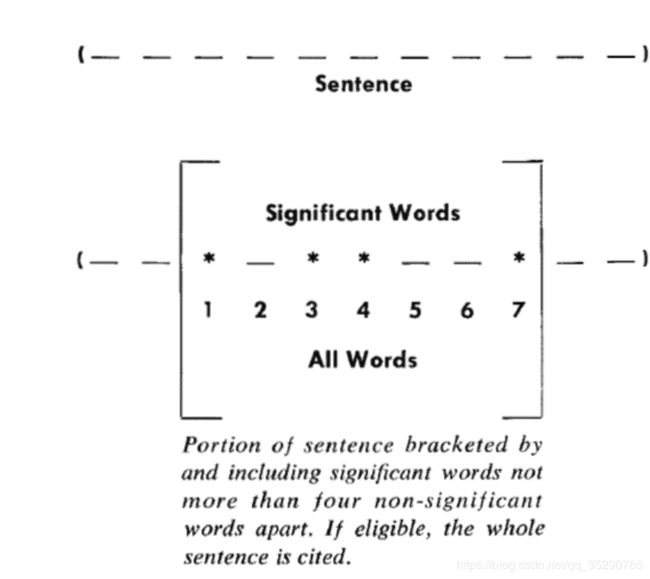

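This method goes back to the 1958 paper "The Automatic Creation of Literature Abstracts" by H.P. Luhn, a scientist at IBM. Luhn proposed using "clusters" to represent concentrations of keywords: a cluster is a fragment of a sentence that contains several keywords.

The figure above is the illustration from Luhn's original paper; the boxed part is a cluster. Keywords belong to the same cluster as long as the distance between them is below a threshold, for which Luhn suggested 4 or 5.

In other words, if two keywords are separated by 5 or more other words, they are placed in different clusters.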
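A sketch of the grouping rule, assuming the keyword positions (word offsets) in a sentence are already known and sorted, and reading the rule above literally: two neighbouring keywords stay in the same cluster when at most `max_gap` other words (4 by default here) lie between them. `group_clusters` and `max_gap` are hypothetical names.

```python
def group_clusters(positions, max_gap=4):
    """Group sorted keyword positions into Luhn-style clusters."""
    clusters = []
    for pos in positions:
        # Number of words strictly between this keyword and the previous one
        if clusters and pos - clusters[-1][-1] - 1 <= max_gap:
            clusters[-1].append(pos)
        else:
            clusters.append([pos])
    return clusters


print(group_clusters([0, 2, 3, 6, 12, 13]))  # [[0, 2, 3, 6], [12, 13]]
```

The Python code in section 4 instead tests `word_idx[i] - word_idx[i - 1] < CLUSTER_THRESHOLD` with `CLUSTER_THRESHOLD = 5`, which admits at most three intervening words, a slightly stricter cut.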

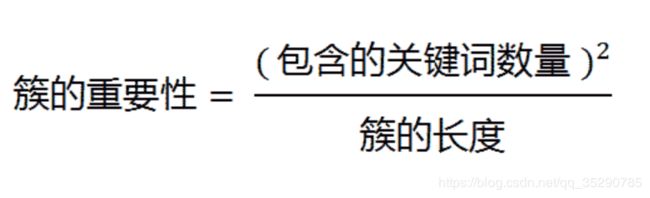

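The importance score of a cluster is computed as:

cluster score = (number of keywords in the cluster)² / (total number of words in the cluster)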

Taking the figure above as an example, the cluster contains 7 words, 4 of which are keywords, so its importance score is (4 × 4) / 7 ≈ 2.3.
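The formula and the worked example can be checked with a small function. `cluster_score` is a hypothetical name; a cluster is given as the sorted word offsets of its keywords, so the number of words it spans is last offset minus first offset plus one.

```python
def cluster_score(cluster):
    """Luhn score: (keywords in cluster)^2 / (words spanned by the cluster)."""
    significant = len(cluster)
    total = cluster[-1] - cluster[0] + 1
    return significant * significant / total


# The example cluster: 7 words long with 4 keywords, e.g. at offsets 0, 2, 3 and 6
print(round(cluster_score([0, 2, 3, 6]), 1))  # 2.3
```

A sentence's score is then the highest score among its clusters.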

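Then take the sentences that contain the highest-scoring clusters (for example, the top 5) and join them together; the result is the automatic summary of the article.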
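Assuming every sentence already has a score (its best cluster score), building the summary is a top-k selection that restores document order before joining. A minimal sketch; `build_summary` is a hypothetical name, and ties are broken in favour of the earlier sentence.

```python
def build_summary(sentences, scores, k=5):
    """Join the k highest-scoring sentences in their original order."""
    # Sort indices by descending score, then by position for ties
    best = sorted(range(len(sentences)), key=lambda i: (-scores[i], i))[:k]
    return "".join(sentences[i] for i in sorted(best))


print(build_summary(["A. ", "B. ", "C. "], [0.5, 2.3, 1.0], k=2))  # "B. C. "
```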

4. Code

Python implementation:

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
# Author: Jia ShiLin

import os

import jieba
import nltk
import numpy


class SummaryTxt:
    def __init__(self, stopwordspath):
        # Number of top-frequency keywords to keep
        self.N = 100
        # Distance threshold between keyword positions within one cluster
        self.CLUSTER_THRESHOLD = 5
        # Number of top sentences to return
        self.TOP_SENTENCES = 5
        self.stopwords = set()
        # Load the stop words (one per line) if the file exists
        if os.path.exists(stopwordspath):
            with open(stopwordspath, 'r', encoding='utf8') as f:
                self.stopwords = {line.strip() for line in f}

    def _split_sentences(self, texts):
        '''
        Split texts into sentences, using the punctuation marks .!?。!? as
        delimiters, and return them as a list.
        :param texts: the input text
        :return: list of sentences
        '''
        splitstr = '.!?。!?'
        start = 0
        sentences = []
        for index, char in enumerate(texts):
            # Split at a delimiter unless the next character is also a
            # delimiter, so a run such as "?!" stays with its sentence
            if char in splitstr and (index + 1 == len(texts) or texts[index + 1] not in splitstr):
                sentences.append(texts[start:index + 1])
                start = index + 1  # start now marks the beginning of the next sentence
        if start < len(texts):
            # Handle text that does not end with a delimiter
            sentences.append(texts[start:])
        return sentences

    def _top_n_words(self, sentences):
        # Segment every sentence, dropping stop words, single characters and whitespace
        words = [w for sentence in sentences for w in jieba.cut(sentence)
                 if w not in self.stopwords and len(w.strip()) > 1]
        # Count word frequencies and return the N most frequent words
        wordfre = nltk.FreqDist(words)
        return [w for w, _ in wordfre.most_common(self.N)]

    def _score_sentences(self, sentences, topn_words):
        '''
        Score each sentence using the top-N keywords.
        :param sentences: list of sentences
        :param topn_words: list of keywords
        :return: list of (sentence index, score) for sentences containing a keyword
        '''
        scores = []
        for sentence_idx, sentence in enumerate(sentences):
            s = list(jieba.cut(sentence))
            # Position of each keyword's first occurrence in this sentence
            word_idx = sorted(s.index(w) for w in topn_words if w in s)
            if not word_idx:
                continue
            # Group consecutive keywords into clusters using the distance threshold
            clusters = []
            cluster = [word_idx[0]]
            for prev, cur in zip(word_idx, word_idx[1:]):
                if cur - prev < self.CLUSTER_THRESHOLD:
                    cluster.append(cur)
                else:
                    clusters.append(cluster)
                    cluster = [cur]
            clusters.append(cluster)
            # Score each cluster; the highest cluster score is the sentence score
            max_cluster_score = 0
            for c in clusters:
                significant_words_in_cluster = len(c)
                total_words_in_cluster = c[-1] - c[0] + 1
                score = significant_words_in_cluster ** 2 / total_words_in_cluster
                max_cluster_score = max(max_cluster_score, score)
            scores.append((sentence_idx, max_cluster_score))
        return scores

    def summaryScoredtxt(self, text):
        # Split the text into sentences
        sentences = self._split_sentences(text)
        # Score the sentences using the top-N keywords
        scored_sentences = self._score_sentences(sentences, self._top_n_words(sentences))
        if not scored_sentences:
            return []
        # Use the mean and standard deviation to filter out unimportant sentences
        avg = numpy.mean([s[1] for s in scored_sentences])
        std = numpy.std([s[1] for s in scored_sentences])
        summarySentences = []
        for sent_idx, score in scored_sentences:
            if score > avg + 0.5 * std:
                summarySentences.append(sentences[sent_idx])
                print(sentences[sent_idx])
        return summarySentences

    def summaryTopNtxt(self, text):
        # Split the text into sentences
        sentences = self._split_sentences(text)
        # Score the sentences using the top-N keywords
        scored_sentences = self._score_sentences(sentences, self._top_n_words(sentences))
        # Take the TOP_SENTENCES highest-scoring sentences, then restore document order
        top_n_scored = sorted(scored_sentences, key=lambda s: s[1])[-self.TOP_SENTENCES:]
        top_n_scored = sorted(top_n_scored, key=lambda s: s[0])
        summarySentences = []
        for idx, score in top_n_scored:
            print(sentences[idx])
            summarySentences.append(sentences[idx])
        return summarySentences


if __name__ == '__main__':
    obj = SummaryTxt('stopword.txt')

    # Alternative sample inputs (uncomment one to try it):
    # txt=u'十八大以来的五年,是党和国家发展进程中极不平凡的五年。面对世界经济复苏乏力、局部冲突和动荡频发、全球性问题加剧的外部环境,面对我国经济发展进入新常态等一系列深刻变化,我们坚持稳中求进工作总基调,迎难而上,开拓进取,取得了改革开放和社会主义现代化建设的历史性成就。' \
    # u'为贯彻十八大精神,党中央召开七次全会,分别就政府机构改革和职能转变、全面深化改革、全面推进依法治国、制定“十三五”规划、全面从严治党等重大问题作出决定和部署。五年来,我们统筹推进“五位一体”总体布局、协调推进“四个全面”战略布局,“十二五”规划胜利完成,“十三五”规划顺利实施,党和国家事业全面开创新局面。' \
    # u'经济建设取得重大成就。坚定不移贯彻新发展理念,坚决端正发展观念、转变发展方式,发展质量和效益不断提升。经济保持中高速增长,在世界主要国家中名列前茅,国内生产总值从五十四万亿元增长到八十万亿元,稳居世界第二,对世界经济增长贡献率超过百分之三十。供给侧结构性改革深入推进,经济结构不断优化,数字经济等新兴产业蓬勃发展,高铁、公路、桥梁、港口、机场等基础设施建设快速推进。农业现代化稳步推进,粮食生产能力达到一万二千亿斤。城镇化率年均提高一点二个百分点,八千多万农业转移人口成为城镇居民。区域发展协调性增强,“一带一路”建设、京津冀协同发展、长江经济带发展成效显著。创新驱动发展战略大力实施,创新型国家建设成果丰硕,天宫、蛟龙、天眼、悟空、墨子、大飞机等重大科技成果相继问世。南海岛礁建设积极推进。开放型经济新体制逐步健全,对外贸易、对外投资、外汇储备稳居世界前列。' \
    # u'全面深化改革取得重大突破。蹄疾步稳推进全面深化改革,坚决破除各方面体制机制弊端。改革全面发力、多点突破、纵深推进,着力增强改革系统性、整体性、协同性,压茬拓展改革广度和深度,推出一千五百多项改革举措,重要领域和关键环节改革取得突破性进展,主要领域改革主体框架基本确立。中国特色社会主义制度更加完善,国家治理体系和治理能力现代化水平明显提高,全社会发展活力和创新活力明显增强。'
    # txt ='The information disclosed by the Film Funds Office of the State Administration of Press, Publication, Radio, Film and Television shows that, the total box office in China amounted to nearly 3 billion yuan during the first six days of the lunar year (February 8 - 13), an increase of 67% compared to the 1.797 billion yuan in the Chinese Spring Festival period in 2015, becoming the "Best Chinese Spring Festival Period in History".' \
    # 'During the Chinese Spring Festival period, "The Mermaid" contributed to a box office of 1.46 billion yuan. "The Man From Macau III" reached a box office of 680 million yuan. "The Journey to the West: The Monkey King 2" had a box office of 650 million yuan. "Kung Fu Panda 3" also had a box office of exceeding 130 million. These four blockbusters together contributed more than 95% of the total box office during the Chinese Spring Festival period.' \
    # 'There were many factors contributing to the popularity during the Chinese Spring Festival period. Apparently, the overall popular film market with good box office was driven by the emergence of a few blockbusters. In fact, apart from the appeal of the films, other factors like film ticket subsidy of online seat-selection companies, cinema channel sinking and the film-viewing heat in the middle and small cities driven by the home-returning wave were all main factors contributing to this blowout. A management of Shanghai Film Group told the 21st Century Business Herald.'

    # Triple quotes let the sample text span several lines
    txt = '''Monetary policy summary The Bank of England’s Monetary Policy Committee (MPC) sets monetary policy in order to meet the 2% inflation target and in a way that helps to sustain growth and employment. At its meeting ending on 5 August 2015, the MPC voted by a majority of 8-1 to maintain Bank Rate at 0.5%. The Committee voted unanimously to maintain the stock of purchased assets financed by the issuance of central bank reserves at £375 billion, and so to reinvest the £16.9 billion of cash flows associated with the redemption of the September 2015 gilt held in the Asset Purchase Facility. CPI inflation fell back to zero in June. As set out in the Governor’s open letter to the Chancellor, around three quarters of the deviation of inflation from the 2% target, or 1½ percentage points, reflects unusually low contributions from energy, food, and other imported goods prices. The remaining quarter of the deviation of inflation from target, or ½ a percentage point, reflects the past weakness of domestic cost growth, and unit labour costs in particular. The combined weakness in domestic costs and imported goods prices is evident in subdued core inflation, which on most measures is currently around 1%. With some underutilised resources remaining in the economy and with inflation below the target, the Committee intends to set monetary policy in order to ensure that growth is sufficient to absorb the remaining economic slack so as to return inflation to the target within two years. Conditional upon Bank Rate following the gently rising path implied by market yields, the Committee judges that this is likely to be achieved. In its latest economic projections, the Committee projects UK-weighted world demand to expand at a moderate pace. Growth in advanced economies is expected to be a touch faster, and growth in emerging economies a little slower, than in the past few years.
The support to UK exports from steady global demand growth is expected to be counterbalanced, however, by the effect of the past appreciation of sterling. Risks to global growth are judged to be skewed moderately to the downside reflecting, for example, risks to activity in the euro area and China. Private domestic demand growth in the United Kingdom is expected to remain robust. Household spending has been supported by the boost to real incomes from lower food and energy prices. Wage growth has picked up as the labour market has tightened and productivity has strengthened. Business and consumer confidence remain high, while credit conditions have continued to improve, with historically low mortgage rates providing support to activity in the housing market. Business investment has made a substantial contribution to growth in recent years. Firms have invested to expand capacity, supported by accommodative financial conditions. Despite weakening slightly, surveys suggest continued robust investment growth ahead. This will support the continuing increase of underlying productivity growth towards past average rates. Robust private domestic demand is expected to produce sufficient momentum to eliminate the margin of spare capacity over the next year or so, despite the continuing fiscal consolidation and modest global growth. This is judged likely to generate the rise in domestic costs expected to be necessary to return inflation to the target in the medium term. The near-term outlook for inflation is muted. The falls in energy prices of the past few months will continue to bear down on inflation at least until the middle of next year. Nonetheless, a range of measures suggest that medium-term inflation expectations remain well anchored. There is little evidence in wage settlements or spending patterns of any deflationary mindset among businesses and households. Sterling has appreciated by 3½% since May and 20% since its trough in March 2013.
The drag on import prices from this appreciation will continue to push down on inflation for some time to come, posing a downside risk to its path in the near term. Set against that, the degree of slack in the economy has diminished substantially over the past two and a half years. The unemployment rate has fallen by more than 2 percentage points since the middle of 2013, and the ratio of job vacancies to unemployment has returned from well below to around its pre-crisis average. The margin of spare capacity is currently judged to be around ½% of GDP, with a range of views among MPC members around that central estimate. A further modest tightening of the labour market is expected, supporting a continued firming in the growth of wages and unit labour costs over the next three years, counterbalancing the drag on inflation from sterling. Were Bank Rate to follow the gently rising path implied by market yields, the Committee judges that demand growth would be sufficient to return inflation to the target within two years. In its projections, inflation then moves slightly above the target in the third year of the forecast period as sustained growth leads to a degree of excess demand. Underlying those projections are significant judgements in a number of areas, as described in the August Inflation Report. In any one of these areas, developments might easily turn out differently than assumed with implications for the outlook for growth and inflation, and therefore for the appropriate stance of monetary policy. Reflecting that, there is a spread of views among MPC members about the balance of risks to inflation relative to the best collective judgement presented in the August Report. At the Committee’s meeting ending on 5 August, the majority of MPC members judged it appropriate to leave the stance of monetary policy unchanged at present.
Ian McCafferty preferred to increase Bank Rate by 25 basis points, given his view that demand growth and wage pressures were likely to be greater, and the margin of spare capacity smaller, than embodied in the Committee’s collective August projections. All members agree that, given the likely persistence of the headwinds weighing on the economy, when Bank Rate does begin to rise, it is expected to do so more gradually and to a lower level than in recent cycles. This guidance is an expectation, not a promise. The actual path Bank Rate will follow over the next few years will depend on the economic circumstances. The Committee will continue to monitor closely the incoming data.'''

    print(txt)
    print("--")
    obj.summaryScoredtxt(txt)
    print("----")
    obj.summaryTopNtxt(txt)
```

Java implementation:

```java
// import com.hankcs.hanlp.HanLP;           // optional alternative segmenter (HanLP)
// import com.hankcs.hanlp.seg.common.Term;
import java.io.BufferedReader;
import java.io.FileInputStream;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.Reader;
import java.io.StringReader;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Map.Entry;
import java.util.PriorityQueue;
import java.util.Set;
import java.util.TreeMap;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

import org.wltea.analyzer.core.IKSegmenter;
import org.wltea.analyzer.core.Lexeme;

/**
 * @Author: sks
 * @Description: Text summarization that extracts the key sentences of a document,
 * scoring each sentence by the proportion of top-n keywords it contains.
 * Sentence selection methods: 1. mean + standard deviation, 2. top-n sentences,
 * 3. top-n sentences by Maximal Marginal Relevance (MMR).
 * @Date: Created in 16:40 2017/12/22
 * @Modified by:
 **/
public class NewsSummary {

    // Number of keywords to keep
    int N = 50;
    // Distance threshold between keywords in the same cluster
    int CLUSTER_THRESHOLD = 5;
    // Number of top sentences to return
    int TOP_SENTENCES = 10;
    // MMR trade-off parameter λ
    double λ = 0.4;
    // Supported sentence-selection styles
    final Set<String> styleSet = new HashSet<>();
    // Stop words
    Set<String> stopWords = new HashSet<>();
    // Sentence index -> segmented words of that sentence
    Map<Integer, List<String>> sentSegmentWords = null;

    public NewsSummary() {
        this("D:\\work\\Solr\\solr-python\\CNstopwords.txt");
    }

    public NewsSummary(String stopWordsPath) {
        this.loadStopWords(stopWordsPath);
        styleSet.add("meanstd");
        styleSet.add("default");
        styleSet.add("MMR");
    }

    /** Load stop words, one per line (UTF-8). */
    private void loadStopWords(String path) {
        try (BufferedReader br = new BufferedReader(
                new InputStreamReader(new FileInputStream(path), StandardCharsets.UTF_8))) {
            String line;
            while ((line = br.readLine()) != null) {
                stopWords.add(line.trim());
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    /** Split the text into sentences with a regex, keeping each sentence's closing punctuation. */
    private List<String> SplitSentences(String text) {
        List<String> sentences = new ArrayList<>();
        Pattern p = Pattern.compile("[!?。!?.]");
        String[] sents = p.split(text);
        Matcher m = p.matcher(text);
        // Re-attach the closing punctuation mark to each sentence
        for (int index = 0; index < sents.length; index++) {
            if (m.find()) {
                sents[index] += m.group();
            }
        }
        for (String sentence : sents) {
            // Strip quotation marks and symbols left over from text copied from web pages
            sentence = sentence.replaceAll("[”“—‘’·\"↓•]", "");
            sentences.add(sentence.trim());
        }
        return sentences;
    }

    /** Segment text with IK Analyzer (smart mode), dropping stop words, "nbsp", single characters and tabs. */
    private List<String> IKSegment(String text) {
        List<String> wordList = new ArrayList<>();
        Reader reader = new StringReader(text);
        IKSegmenter ik = new IKSegmenter(reader, true);
        Lexeme lex;
        try {
            while ((lex = ik.next()) != null) {
                String word = lex.getLexemeText();
                if (word.equals("nbsp") || this.stopWords.contains(word))
                    continue;
                if (word.length() > 1 && !word.equals("\t"))
                    wordList.add(word);
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
        return wordList;
    }

    /** Segment the whole text and return its N most frequent words (the field N is not modified). */
    private List<String> topNWords(String text) {
        // Count word frequencies
        Map<String, Integer> wordsMap = new HashMap<>();
        for (String word : this.IKSegment(text)) {
            wordsMap.merge(word, 1, Integer::sum);
        }
        // Priority queue ordered by descending frequency
        PriorityQueue<Entry<String, Integer>> wordsQueue = new PriorityQueue<Entry<String, Integer>>(
                Math.max(1, wordsMap.size()), (o1, o2) -> Integer.compare(o2.getValue(), o1.getValue()));
        wordsQueue.addAll(wordsMap.entrySet());
        int n = Math.min(N, wordsQueue.size());
        List<String> wordsList = new ArrayList<>(n); // top-n keywords
        for (int i = 0; i < n; i++) {
            wordsList.add(wordsQueue.poll().getKey());
        }
        return wordsList;
    }

    /**
     * Score each sentence as keywordsLen * keywordsLen / totalWordsLen of its best cluster.
     * @param sentences the sentences
     * @param topnWords the top-n keywords
     * @return sentence index -> score, in document order
     */
    private Map<Integer, Double> scoreSentences(List<String> sentences, List<String> topnWords) {
        Map<Integer, Double> scoresMap = new LinkedHashMap<>(); // sentence index, score
        sentSegmentWords = new HashMap<>();
        for (int sentence_idx = 0; sentence_idx < sentences.size(); sentence_idx++) {
            List<String> words = this.IKSegment(sentences.get(sentence_idx)); // segment each sentence
            // List<Term> words = HanLP.segment(sentence);
            sentSegmentWords.put(sentence_idx, words);
            List<Integer> word_idx = new ArrayList<>(); // position of each keyword in this sentence
            for (String word : topnWords) {
                int pos = words.indexOf(word);
                if (pos >= 0)
                    word_idx.add(pos);
            }
            if (word_idx.isEmpty())
                continue;
            Collections.sort(word_idx);
            // Group consecutive keywords into clusters using the distance threshold
            List<List<Integer>> clusters = new ArrayList<>();
            List<Integer> cluster = new ArrayList<>();
            cluster.add(word_idx.get(0));
            for (int i = 1; i < word_idx.size(); i++) {
                if (word_idx.get(i) - word_idx.get(i - 1) < this.CLUSTER_THRESHOLD) {
                    cluster.add(word_idx.get(i));
                } else {
                    clusters.add(cluster);
                    cluster = new ArrayList<>();
                    cluster.add(word_idx.get(i));
                }
            }
            clusters.add(cluster);
            // Score each cluster; the highest cluster score is the sentence score
            double max_cluster_score = 0.0;
            for (List<Integer> clu : clusters) {
                int keywordsLen = clu.size(); // number of keywords
                int totalWordsLen = clu.get(keywordsLen - 1) - clu.get(0) + 1; // words spanned
                double score = 1.0 * keywordsLen * keywordsLen / totalWordsLen;
                if (score > max_cluster_score)
                    max_cluster_score = score;
            }
            scoresMap.put(sentence_idx, max_cluster_score);
        }
        return scoresMap;
    }

    /** Sort (index, score) entries by descending score; Double.compare avoids comparing boxed Doubles with ==. */
    private List<Entry<Integer, Double>> sortByScoreDesc(Map<Integer, Double> scores) {
        List<Entry<Integer, Double>> sorted = new ArrayList<>(scores.entrySet());
        sorted.sort((o1, o2) -> Double.compare(o2.getValue(), o1.getValue()));
        return sorted;
    }

    /** Automatic summary using the mean and standard deviation of the sentence scores. */
    public String SummaryMeanstdTxt(String text) {
        return summarize(text, "meanstd");
    }

    /** Default: return the top-n highest-scoring sentences. */
    public String SummaryTopNTxt(String text) {
        return summarize(text, "default");
    }

    /** Automatic summary using Maximal Marginal Relevance. */
    public String SummaryMMRNTxt(String text) {
        return summarize(text, "MMR");
    }

    /**
     * Compute the summary of a text.
     * @param text  the input text
     * @param style meanstd, default or MMR
     * @return the selected sentences, concatenated in document order
     */
    public String summarize(String text, String style) {
        if (text == null || text.trim().isEmpty() || !styleSet.contains(style))
            throw new IllegalArgumentException("summarize(String text, String style): text must not be empty "
                    + "and style must be meanstd, default or MMR");
        // Split the text into sentences and score them with the top-N keywords (document order)
        List<String> sentencesList = this.SplitSentences(text);
        Map<Integer, Double> scoresLinkedMap = scoreSentences(sentencesList, topNWords(text));
        Map<Integer, String> keySentence = new TreeMap<>(); // sorted by sentence index
        if (style.equals("meanstd")) {
            // approach 1: keep sentences scoring above mean + 0.5 * standard deviation
            double sentenceMean = 0.0;
            for (double value : scoresLinkedMap.values())
                sentenceMean += value;
            sentenceMean /= scoresLinkedMap.size();
            double sentenceStd = 0.0;
            for (double score : scoresLinkedMap.values())
                sentenceStd += Math.pow(score - sentenceMean, 2);
            sentenceStd = Math.sqrt(sentenceStd / scoresLinkedMap.size());
            for (Entry<Integer, Double> entry : scoresLinkedMap.entrySet()) {
                if (entry.getValue() > sentenceMean + 0.5 * sentenceStd)
                    keySentence.put(entry.getKey(), sentencesList.get(entry.getKey()));
            }
        } else if (style.equals("default")) {
            // approach 2: the TOP_SENTENCES highest-scoring sentences
            List<Entry<Integer, Double>> sortedSentList = sortByScoreDesc(scoresLinkedMap);
            for (Entry<Integer, Double> entry : sortedSentList.subList(0, Math.min(TOP_SENTENCES, sortedSentList.size())))
                keySentence.put(entry.getKey(), sentencesList.get(entry.getKey()));
        } else {
            // approach 3: top-n sentences selected by Maximal Marginal Relevance
            if (sentencesList.size() == 1)
                return sentencesList.get(0);
            if (sentencesList.size() == 2)
                return sentencesList.get(0) + sentencesList.get(1);
            Map<Integer, Double> MMR_SentScore = MMR(sortByScoreDesc(scoresLinkedMap));
            int count = 0;
            for (int sentIndex : MMR_SentScore.keySet()) {
                keySentence.put(sentIndex, sentencesList.get(sentIndex));
                if (++count == this.TOP_SENTENCES)
                    break;
            }
        }
        StringBuilder sb = new StringBuilder();
        for (String sentence : keySentence.values())
            sb.append(sentence);
        return sb.toString();
    }

    /**
     * Maximal Marginal Relevance: λ trades off accuracy (relevance) against diversity.
     * max[λ*score(i) - (1-λ)*max[similarity(i,j)]], where score(i) is the score of sentence i
     * and similarity(i,j) is the similarity between sentences i and j.
     * - High λ = higher accuracy
     * - Low λ = higher diversity
     * @param sortedSentList sentences sorted by score (index and score)
     * @return sentence index -> MMR score, in selection order
     */
    private Map<Integer, Double> MMR(List<Entry<Integer, Double>> sortedSentList) {
        Map<Integer, Double> MMR_SentScore = new LinkedHashMap<>(); // final selection (index, score)
        if (sortedSentList.isEmpty())
            return MMR_SentScore;
        double[][] simSentArray = sentJSimilarity(); // pairwise similarity of all sentences
        // Step 1: select the highest-scoring sentence
        Entry<Integer, Double> first = sortedSentList.get(0);
        MMR_SentScore.put(first.getKey(), first.getValue());
        while (MMR_SentScore.size() < sortedSentList.size()) {
            int index = -1;
            double maxScore = Double.NEGATIVE_INFINITY; // best MMR score in this iteration
            for (Entry<Integer, Double> entry : sortedSentList) {
                if (MMR_SentScore.containsKey(entry.getKey())) continue;
                // Highest similarity between this sentence and any already selected sentence
                double simSentence = 0.0;
                for (int selected : MMR_SentScore.keySet()) {
                    int i = Math.min(entry.getKey(), selected), j = Math.max(entry.getKey(), selected);
                    simSentence = Math.max(simSentence, simSentArray[i][j]);
                }
                double mmrScore = λ * entry.getValue() - (1 - λ) * simSentence;
                if (mmrScore > maxScore) {
                    maxScore = mmrScore;
                    index = entry.getKey(); // sentence index
                }
            }
            MMR_SentScore.put(index, maxScore);
        }
        return MMR_SentScore;
    }

    /** Pairwise sentence similarity (Jaccard coefficient of word sets); only the upper triangle i < j is filled. */
    private double[][] sentJSimilarity() {
        int size = sentSegmentWords.size();
        double[][] simSent = new double[size][size];
        for (int i = 0; i < size; i++) {
            Set<String> wordsI = new HashSet<>(sentSegmentWords.get(i));
            for (int j = i + 1; j < size; j++) {
                Set<String> union = new HashSet<>(wordsI);
                union.addAll(sentSegmentWords.get(j));
                if (union.isEmpty()) continue;
                Set<String> common = new HashSet<>(wordsI);
                common.retainAll(sentSegmentWords.get(j));
                simSent[i][j] = 1.0 * common.size() / union.size();
            }
        }
        return simSent;
    }

    public static void main(String[] args) {
        NewsSummary summary = new NewsSummary();
        String text = "我国古代历史演义小说的代表作。明代小说家罗贯中依据有关三国的历史、杂记,在广泛吸取民间传说和民间艺人创作成果的基础上,加工、再创作了这部长篇章回小说。" +
                "作品写的是汉末到晋初这一历史时期魏、蜀、吴三个封建统治集团间政治、军事、外交等各方面的复杂斗争。通过这些描写,揭露了社会的黑暗与腐朽,谴责了统治阶级的残暴与奸诈," +
                "反映了人民在动乱时代的苦难和明君仁政的愿望。小说也反映了作者对农民起义的偏见,以及因果报应和宿命论等思想。战争描写是《三国演义》突出的艺术成就。" +
                "这部小说通过惊心动魄的军事、政治斗争,运用夸张、对比、烘托、渲染等艺术手法,成功地塑造了诸葛亮、曹操、关羽、张飞等一批鲜明、生动的人物形象。" +
                "《三国演义》结构宏伟而又严密精巧,语言简洁、明快、生动。有的评论认为这部作品在艺术上的不足之处是人物性格缺乏发展变化,有的人物渲染夸张过分导致失真。" +
                "《三国演义》标志着历史演义小说的辉煌成就。在传播政治、军事斗争经验、推动历史演义创作的繁荣等方面都起过积极作用。" +
                "《三国演义》的版本主要有明嘉靖刻本《三国志通俗演义》和清毛宗岗增删评点的《三国志演义》";
        String keySentences = summary.SummaryMeanstdTxt(text);
        System.out.println("summary: " + keySentences);
        String topSentences = summary.SummaryTopNTxt(text);
        System.out.println("summary: " + topSentences);
        String mmrSentences = summary.SummaryMMRNTxt(text);
        System.out.println("summary: " + mmrSentences);
    }
}
```
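The MMR selection used by `SummaryMMRNTxt` can be illustrated with a short Python sketch. It follows the formula in the Java comments, λ·score(i) − (1−λ)·(highest similarity to an already selected sentence), with Jaccard similarity on word sets. `jaccard`, `mmr_select`, the default `lam=0.4` (mirroring the Java field) and the toy inputs are assumptions for illustration.

```python
def jaccard(a, b):
    """Jaccard similarity of two word collections."""
    a, b = set(a), set(b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def mmr_select(scores, words, k, lam=0.4):
    """Greedily pick k sentence indices by Maximal Marginal Relevance.

    scores: relevance score per sentence; words: token list per sentence.
    """
    candidates = set(range(len(scores)))
    selected = []
    while candidates and len(selected) < k:
        def mmr(i):
            # Highest similarity to any sentence already chosen (0 if none yet)
            redundancy = max((jaccard(words[i], words[j]) for j in selected), default=0.0)
            return lam * scores[i] - (1 - lam) * redundancy
        # max() keeps the first maximum, so ties go to the lower index
        best = max(sorted(candidates), key=mmr)
        selected.append(best)
        candidates.remove(best)
    return selected


words = [["rate", "rise"], ["rate", "rise"], ["growth"]]
print(mmr_select([3.0, 2.5, 2.0], words, k=2))  # [0, 2]
```

Sentence 1 scores higher than sentence 2 but duplicates sentence 0 word for word, so the diversity penalty lets sentence 2 win the second slot; raising `lam` towards 1 makes the selection follow the raw scores.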

Alternatively, you can use HanLP, a Chinese language-processing package that includes a summarization method:

```java
import com.hankcs.hanlp.HanLP;

// Summary; 200 is the maximum length of the summary
String summary = HanLP.getSummary(txt, 200);
```

This requires hanlp-portable-1.5.2.jar and hanlp-solr-plugin-1.1.2.jar.