sparkRDD

- Apache Spark

背景介绍

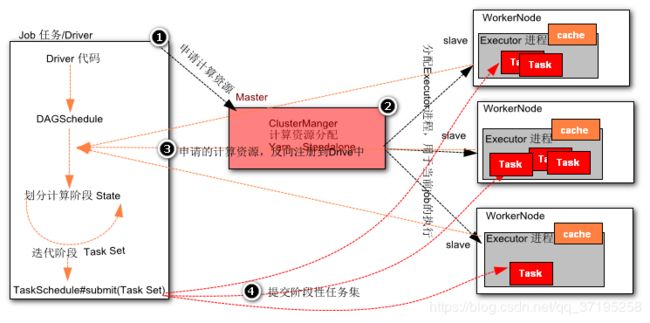

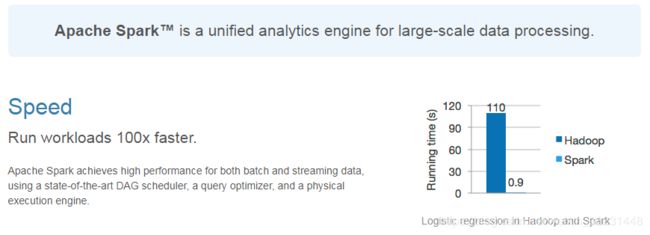

Spark是一个快如闪电的统一分析引擎(计算框架)用于大规模数据集的处理。Spark在做数据的批处理计算,计算性能大约是Hadoop MapReduce的10~100倍,因为Spark使用比较先进的基于DAG 任务调度,可以将一个任务拆分成若干个阶段,然后将这些阶段分批次交给集群计算节点处理。

MapReduce VS Spark

MapReduce作为第一代大数据处理框架,在设计初期只是为了满足基于海量数据级的海量数据计算的迫切需求。自2006年剥离自Nutch(Java搜索引擎)工程,主要解决的是早期人们对大数据的初级认知所面临的问题。

整个MapReduce的计算实现的是基于磁盘的IO计算,随着大数据技术的不断普及,人们开始重新定义大数据的处理方式,不仅仅满足于能在合理的时间范围内完成对大数据的计算,还对计算的实效性提出了更苛刻的要求,因为人们开始探索使用Map Reduce计算框架完成一些复杂的高阶算法,往往这些算法通常不能通过1次性的Map Reduce迭代计算完成。由于Map Reduce计算模型总是把结果存储到磁盘中,每次迭代都需要将数据磁盘加载到内存,这就为后续的迭代带来了更多延长。

2009年Spark在加州伯克利AMP实验室诞生,2010首次开源后该项目就受到很多开发人员的喜爱,2013年6月份开始在Apache孵化,2014年2月份正式成为Apache的顶级项目。Spark发展如此之快是因为Spark在计算层方面明显优于Hadoop的Map Reduce这磁盘迭代计算,因为Spark可以使用内存对数据做计算,而且计算的中间结果也可以缓存在内存中,这就为后续的迭代计算节省了时间,大幅度的提升了针对于海量数据的计算效率。

Spark也给出了在使用MapReduce和Spark做线性回归计算(算法实现需要n次迭代)上,Spark的速率几乎是MapReduce计算10~100倍这种计算速度。

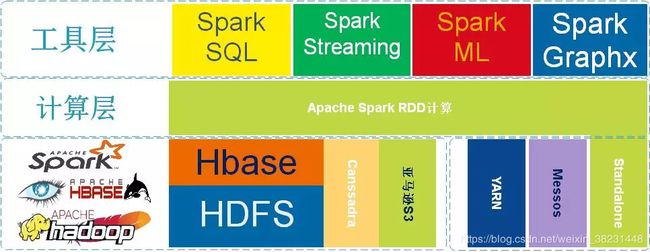

不仅如此Spark在设计理念中也提出了One stack ruled them all战略,并且提供了基于Spark批处理至上的计算服务分支例如:实现基于Spark的交互查询、近实时流处理、机器学习、Grahx 图形关系存储等。

从图中不难看出Apache Spark处于计算层,Spark项目在战略上启到了承上启下的作用,并没有废弃原有以hadoop为主体的大数据解决方案。因为Spark向下可以计算来自于HDFS、HBase、Cassandra和亚马逊S3文件服务器的数据,也就意味着使用Spark作为计算层,用户原有的存储层架构无需改动。

计算流程

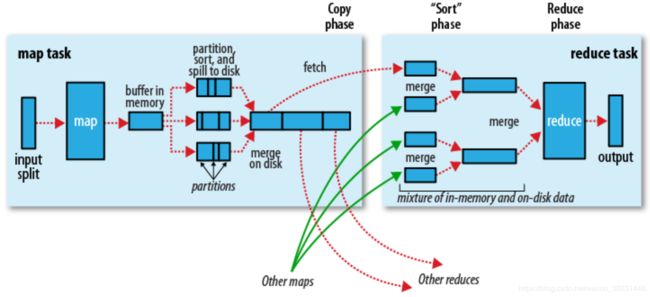

因为Spark计算是在MapReduce计算之后诞生,吸取了MapReduce设计经验,极大地规避了MapReduce计算过程中的诟病,先来回顾一下MapReduce计算的流程。

总结一下几点缺点:

1)MapReduce虽然基于矢量编程思想,但是计算状态过于简单,只是简单的将任务分为Map state和Reduce State,没有考虑到迭代计算场景。

2)在Map任务计算的中间结果存储到本地磁盘,IO调用过多,数据读写效率差。

3)MapReduce是先提交任务,然后在计算过程中申请资源。并且计算方式过于笨重。每个并行度都是由一个JVM进程来实现计算。

通过简单的罗列不难发现MapReduce计算的诟病和问题,因此Spark在计算层面上借鉴了MapReduce计算设计的经验,提出了DGASchedule和TaskSchedual概念,打破了在MapReduce任务中一个job只用Map State和Reduce State的两个阶段,并不适合一些迭代计算次数比较多的场景。因此Spark 提出了一个比较先进的设计理念,任务状态拆分,Spark在任务计算初期首先通过DGASchedule计算任务的State,将每个阶段的Sate封装成一个TaskSet,然后由TaskSchedual将TaskSet提交集群进行计算。可以尝试将Spark计算的流程使用一下的流程图描述如下:

相比较于MapReduce计算,Spark计算有以下优点:

1)智能DAG任务拆分,将一个复杂计算拆分成若干个State,满足迭代计算场景

2)Spark提供了计算的缓存和容错策略,将计算结果存储在内存或者磁盘,加速每个state的运行,提升运行效率

3)Spark在计算初期,就已经申请好计算资源。任务并行度是通过在Executor进程中启动线程实现,相比较于MapReduce计算更加轻快。

目前Spark提供了Cluster Manager的实现由Yarn、Standalone、Messso、kubernates等实现。其中企业常用的有Yarn和Standalone方式的管理。

环境搭建

Standalone

Hadoop环境

- 设置CentOS进程数和文件数(重启)

[root@CentOS ~]# vi /etc/security/limits.conf

* soft nofile 204800

* hard nofile 204800

* soft nproc 204800

* hard nproc 204800

[root@CentOS ~]# reboot

- 配置主机名(重启)

[root@CentOS ~]# vi /etc/sysconfig/network

NETWORKING=yes

HOSTNAME=CentOS

[root@CentOS ~]# reboot

- 设置IP映射

[root@CentOS ~]# vi /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.111.132 CentOS

- 防火墙服务

[root@CentOS ~]# service iptables stop

iptables: Setting chains to policy ACCEPT: filter [ OK ]

iptables: Flushing firewall rules: [ OK ]

iptables: Unloading modules: [ OK ]

[root@CentOS ~]# chkconfig iptables off

- 安装JDK1.8+

[root@CentOS ~]# rpm -ivh jdk-8u191-linux-x64.rpm

warning: jdk-8u191-linux-x64.rpm: Header V3 RSA/SHA256 Signature, key ID ec551f03: NOKEY

Preparing... ########################################### [100%]

1:jdk1.8 ########################################### [100%]

Unpacking JAR files...

tools.jar...

plugin.jar...

javaws.jar...

deploy.jar...

rt.jar...

jsse.jar...

charsets.jar...

localedata.jar...

[root@CentOS ~]# vi .bashrc

JAVA_HOME=/usr/java/latest

PATH=$PATH:$JAVA_HOME/bin

CLASSPATH=.

export JAVA_HOME

export PATH

export CLASSPATH

[root@CentOS ~]# source ~/.bashrc

- SSH配置免密

[root@CentOS ~]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

a5:2d:f5:c3:22:83:cf:13:25:59:fb:c1:f4:63:06:d4 root@CentOS

The key's randomart image is:

+--[ RSA 2048]----+

| ..+. |

| o + oE |

| o = o = |

| . B + + . |

| . S o = |

| o = . . |

| + |

| . |

| |

+-----------------+

[root@CentOS ~]# ssh-copy-id CentOS

The authenticity of host 'centos (192.168.111.132)' can't be established.

RSA key fingerprint is fa:1b:c0:23:86:ff:08:5e:83:ba:65:4c:e6:f2:1f:3b.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'centos,192.168.111.132' (RSA) to the list of known hosts.

root@centos's password:`需要输入密码`

Now try logging into the machine, with "ssh 'CentOS'", and check in:

.ssh/authorized_keys

to make sure we haven't added extra keys that you weren't expecting.

- 配置HDFS

将hadoop-2.9.2.tar.gz解压到系统的/usr目录下然后配置[core|hdfs]-site.xml配置文件。

[root@CentOS ~]# vi /usr/hadoop-2.9.2/etc/hadoop/core-site.xml

<property>

<name>fs.defaultFSname>

<value>hdfs://CentOS:9000value>

property>

<property>

<name>hadoop.tmp.dirname>

<value>/usr/hadoop-2.9.2/hadoop-${user.name}value>

property>

[root@CentOS ~]# vi /usr/hadoop-2.9.2/etc/hadoop/hdfs-site.xml

<property>

<name>dfs.replicationname>

<value>1value>

property>

<property>

<name>dfs.namenode.secondary.http-addressname>

<value>CentOS:50090value>

property>

<property>

<name>dfs.datanode.max.xcieversname>

<value>4096value>

property>

<property>

<name>dfs.datanode.handler.countname>

<value>6value>

property>

[root@CentOS ~]# vi /usr/hadoop-2.9.2/etc/hadoop/slaves

CentOS

- 配置hadoop环境变量

[root@CentOS ~]# vi .bashrc

HADOOP_HOME=/usr/hadoop-2.9.2

JAVA_HOME=/usr/java/latest

PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

CLASSPATH=.

export JAVA_HOME

export PATH

export CLASSPATH

export HADOOP_HOME

[root@CentOS ~]# source .bashrc

- 启动Hadoop服务

[root@CentOS ~]# hdfs namenode -format # 创建初始化所需的fsimage文件

[root@CentOS ~]# start-dfs.sh

- Spark环境

下载spark-2.4.3-bin-without-hadoop.tgz解压到/usr目录,并且将Spark目录修改名字为spark-2.4.3然后修改spark-env.sh和spark-default.conf文件.

下载地址:http://mirrors.tuna.tsinghua.edu.cn/apache/spark/spark-2.4.3/spark-2.4.3-bin-without-hadoop.tgz

- 解压Spark安装包,并且修改解压文件名

[root@CentOS ~]# tar -zxf spark-2.4.3-bin-without-hadoop.tgz -C /usr/

[root@CentOS ~]# mv /usr/spark-2.4.3-bin-without-hadoop/ /usr/spark-2.4.3

[root@CentOS ~]# vi .bashrc

SPARK_HOME=/usr/spark-2.4.3

HADOOP_HOME=/usr/hadoop-2.9.2

JAVA_HOME=/usr/java/latest

PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$SPARK_HOME/bin:$SPARK_HOME/sbin

CLASSPATH=.

export JAVA_HOME

export PATH

export CLASSPATH

export HADOOP_HOME

export SPARK_HOME

[root@CentOS ~]# source .bashrc

- 配置Spark服务

[root@CentOS spark-2.4.3]# cd /usr/spark-2.4.3/conf/

[root@CentOS conf]# mv spark-env.sh.template spark-env.sh

[root@CentOS conf]# mv slaves.template slaves

[root@CentOS conf]# vi slaves

CentOS

[root@CentOS conf]# vi spark-env.sh

SPARK_MASTER_HOST=CentOS

SPARK_MASTER_PORT=7077

SPARK_WORKER_CORES=4

SPARK_WORKER_MEMORY=2g

LD_LIBRARY_PATH=/usr/hadoop-2.9.2/lib/native

SPARK_DIST_CLASSPATH=$(hadoop classpath)

export SPARK_MASTER_HOST

export SPARK_MASTER_PORT

export SPARK_WORKER_CORES

export SPARK_WORKER_MEMORY

export LD_LIBRARY_PATH

export SPARK_DIST_CLASSPATH

- 启动Spark进程

[root@CentOS ~]# cd /usr/spark-2.4.3/

[root@CentOS spark-2.4.3]# ./sbin/start-all.sh

starting org.apache.spark.deploy.master.Master, logging to /usr/spark-2.4.3/logs/spark-root-org.apache.spark.deploy.master.Master-1-CentOS.out

CentOS: starting org.apache.spark.deploy.worker.Worker, logging to /usr/spark-2.4.3/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-CentOS.out

- 测试Spark

[root@CentOS spark-2.4.3]# ./bin/spark-shell

--master spark://CentOS:7077

--deploy-mode client

--executor-cores 2

executor-cores:在standalone模式表示程序每个Worker节点分配资源数。不能超过单台自大core个数,如果不清每台能够分配的最大core的个数,可以使用--total-executor-cores,该种分配会尽最大可能分配。

scala> sc.textFile("hdfs:///words/t_words",5)

.flatMap(_.split(" "))

.map((_,1))

.reduceByKey(_+_)

.sortBy(_._1,true,3)

.saveAsTextFile("hdfs:///results")

[外链图片转存失败(img-4UO1zS9f-1562290253342)(assets/1561713568413.png)]

Spark On Yarn

Hadoop环境

- 设置CentOS进程数和文件数(重启)

[root@CentOS ~]# vi /etc/security/limits.conf

* soft nofile 204800

* hard nofile 204800

* soft nproc 204800

* hard nproc 204800

[root@CentOS ~]# reboot

- 配置主机名(重启)

[root@CentOS ~]# vi /etc/sysconfig/network

NETWORKING=yes

HOSTNAME=CentOS

[root@CentOS ~]# reboot

- 设置IP映射

[root@CentOS ~]# vi /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.111.132 CentOS

- 防火墙服务

[root@CentOS ~]# service iptables stop

iptables: Setting chains to policy ACCEPT: filter [ OK ]

iptables: Flushing firewall rules: [ OK ]

iptables: Unloading modules: [ OK ]

[root@CentOS ~]# chkconfig iptables off

- 安装JDK1.8+

[root@CentOS ~]# rpm -ivh jdk-8u191-linux-x64.rpm

warning: jdk-8u191-linux-x64.rpm: Header V3 RSA/SHA256 Signature, key ID ec551f03: NOKEY

Preparing... ########################################### [100%]

1:jdk1.8 ########################################### [100%]

Unpacking JAR files...

tools.jar...

plugin.jar...

javaws.jar...

deploy.jar...

rt.jar...

jsse.jar...

charsets.jar...

localedata.jar...

[root@CentOS ~]# vi .bashrc

JAVA_HOME=/usr/java/latest

PATH=$PATH:$JAVA_HOME/bin

CLASSPATH=.

export JAVA_HOME

export PATH

export CLASSPATH

[root@CentOS ~]# source ~/.bashrc

- SSH配置免密

[root@CentOS ~]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

a5:2d:f5:c3:22:83:cf:13:25:59:fb:c1:f4:63:06:d4 root@CentOS

The key's randomart image is:

+--[ RSA 2048]----+

| ..+. |

| o + oE |

| o = o = |

| . B + + . |

| . S o = |

| o = . . |

| + |

| . |

| |

+-----------------+

[root@CentOS ~]# ssh-copy-id CentOS

The authenticity of host 'centos (192.168.111.132)' can't be established.

RSA key fingerprint is fa:1b:c0:23:86:ff:08:5e:83:ba:65:4c:e6:f2:1f:3b.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'centos,192.168.111.132' (RSA) to the list of known hosts.

root@centos's password:`需要输入密码`

Now try logging into the machine, with "ssh 'CentOS'", and check in:

.ssh/authorized_keys

to make sure we haven't added extra keys that you weren't expecting.

- 配置HDFS

将hadoop-2.9.2.tar.gz解压到系统的/usr目录下然后配置[core|hdfs]-site.xml配置文件。

[root@CentOS ~]# vi /usr/hadoop-2.9.2/etc/hadoop/core-site.xml

<property>

<name>fs.defaultFSname>

<value>hdfs://CentOS:9000value>

property>

<property>

<name>hadoop.tmp.dirname>

<value>/usr/hadoop-2.9.2/hadoop-${user.name}value>

property>

[root@CentOS ~]# vi /usr/hadoop-2.9.2/etc/hadoop/hdfs-site.xml

<property>

<name>dfs.replicationname>

<value>1value>

property>

<property>

<name>dfs.namenode.secondary.http-addressname>

<value>CentOS:50090value>

property>

<property>

<name>dfs.datanode.max.xcieversname>

<value>4096value>

property>

<property>

<name>dfs.datanode.handler.countname>

<value>6value>

property>

[root@CentOS ~]# vi /usr/hadoop-2.9.2/etc/hadoop/slaves

CentOS

[root@CentOS ~]# vi /usr/hadoop-2.9.2/etc/hadoop/yarn-site.xml

<property>

<name>yarn.nodemanager.aux-servicesname>

<value>mapreduce_shufflevalue>

property>

<property>

<name>yarn.resourcemanager.hostnamename>

<value>CentOSvalue>

property>

<property>

<name>yarn.nodemanager.pmem-check-enabledname>

<value>falsevalue>

property>

<property>

<name>yarn.nodemanager.vmem-check-enabledname>

<value>falsevalue>

property>

[root@CentOS ~]# vi /usr/hadoop-2.9.2/etc/hadoop/mapred-site.xml

<property>

<name>mapreduce.framework.namename>

<value>yarnvalue>

property>

- 配置hadoop环境变量

[root@CentOS ~]# vi .bashrc

HADOOP_HOME=/usr/hadoop-2.9.2

JAVA_HOME=/usr/java/latest

PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

CLASSPATH=.

export JAVA_HOME

export PATH

export CLASSPATH

export HADOOP_HOME

[root@CentOS ~]# source .bashrc

- 启动Hadoop服务

[root@CentOS ~]# hdfs namenode -format # 创建初始化所需的fsimage文件

[root@CentOS ~]# start-dfs.sh

[root@CentOS ~]# start-yarn.sh

- Spark环境

下载spark-2.4.3-bin-without-hadoop.tgz解压到/usr目录,并且将Spark目录修改名字为spark-2.4.3然后修改spark-env.sh和spark-default.conf文件.

下载地址:http://mirrors.tuna.tsinghua.edu.cn/apache/spark/spark-2.4.3/spark-2.4.3-bin-without-hadoop.tgz

- 解压Spark安装包,并且修改解压文件名

[root@CentOS ~]# tar -zxf spark-2.4.3-bin-without-hadoop.tgz -C /usr/

[root@CentOS ~]# mv /usr/spark-2.4.3-bin-without-hadoop/ /usr/spark-2.4.3

[root@CentOS ~]# vi .bashrc

SPARK_HOME=/usr/spark-2.4.3

HADOOP_HOME=/usr/hadoop-2.9.2

JAVA_HOME=/usr/java/latest

PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$SPARK_HOME/bin:$SPARK_HOME/sbin

CLASSPATH=.

export JAVA_HOME

export PATH

export CLASSPATH

export HADOOP_HOME

export SPARK_HOME

[root@CentOS ~]# source .bashrc

- 配置Spark服务

[root@CentOS spark-2.4.3]# cd /usr/spark-2.4.3/conf/

[root@CentOS conf]# mv spark-env.sh.template spark-env.sh

[root@CentOS conf]# vi spark-env.sh

HADOOP_CONF_DIR=/usr/hadoop-2.9.2/etc/hadoop

YARN_CONF_DIR=/usr/hadoop-2.9.2/etc/hadoop

SPARK_EXECUTOR_CORES=4

SPARK_EXECUTOR_MEMORY=2G

SPARK_DRIVER_MEMORY=1G

LD_LIBRARY_PATH=/usr/hadoop-2.9.2/lib/native

SPARK_DIST_CLASSPATH=$(hadoop classpath):$SPARK_DIST_CLASSPATH

SPARK_HISTORY_OPTS="-Dspark.history.fs.logDirectory=hdfs:///spark-logs"

export HADOOP_CONF_DIR

export YARN_CONF_DIR

export SPARK_EXECUTOR_CORES

export SPARK_DRIVER_MEMORY

export SPARK_EXECUTOR_MEMORY

export LD_LIBRARY_PATH

export SPARK_DIST_CLASSPATH

export SPARK_HISTORY_OPTS

[root@CentOS conf]# mv spark-defaults.conf.template spark-defaults.conf

[root@CentOS conf]# vi spark-defaults.conf

spark.eventLog.enabled=true

spark.eventLog.dir=hdfs:///spark-logs

在HDFS上创建spark-logs目录,用于作为Sparkhistory服务器存储数据的地方。

[root@CentOS ~]# hdfs dfs -mkdir /spark-logs

- 启动Spark历史服务器(可选)

[root@CentOS spark-2.4.3]# ./sbin/start-history-server.sh

starting org.apache.spark.deploy.history.HistoryServer, logging to /usr/spark-2.4.3/logs/spark-root-org.apache.spark.deploy.history.HistoryServer-1-CentOS.out

[root@CentOS spark-2.4.3]# jps

5728 NodeManager

5090 NameNode

5235 DataNode

10531 Jps

5623 ResourceManager

5416 SecondaryNameNode

10459 HistoryServer

改进程启动一个内嵌的web ui端口是18080,用户可以访问改页面查看任务执行计划、历史。

- 测试Spark

./bin/spark-shell

--master yarn

--deploy-mode client

--num-executors 2

--executor-cores 3

--num-executors:在Yarn模式下,表示向NodeManager申请的资源数进程,--executor-cores表示每个进程所能运行线程数。真个任务计算资源= num-executors * executor-core

scala> sc.textFile("hdfs:///words/t_words",5)

.flatMap(_.split(" "))

.map((_,1))

.reduceByKey(_+_)

.sortBy(_._1,true,3)

.saveAsTextFile("hdfs:///results")

[外链图片转存失败(img-DuyBQG3x-1562290253343)(assets/1561713568413.png)]

本地仿真

在该种模式下,无需安装yarn、无需启动Stanalone,一切都是模拟。

[root@CentOS spark-2.4.3]# ./bin/spark-shell --master local[5]

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

Spark context Web UI available at http://CentOS:4040

Spark context available as 'sc' (master = local[5], app id = local-1561742649329).

Spark session available as 'spark'.

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/___/ .__/\_,_/_/ /_/\_\ version 2.4.3

/_/

Using Scala version 2.11.12 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_191)

Type in expressions to have them evaluated.

Type :help for more information.

scala> sc.textFile("hdfs:///words/t_words").flatMap(_.split(" ")).map((_,1)).reduceByKey(_+_).sortBy(_._1,false,3).saveAsTextFile("hdfs:///results1/")

scala>

Spark 开发环境构建

- 引入开发所需依赖

<dependencies>

<dependency>

<groupId>org.apache.sparkgroupId>

<artifactId>spark-core_2.11artifactId>

<version>2.4.3version>

dependency>

dependencies>

<build>

<plugins>

<plugin>

<groupId>net.alchim31.mavengroupId>

<artifactId>scala-maven-pluginartifactId>

<version>4.0.1version>

<executions>

<execution>

<id>scala-compile-firstid>

<phase>process-resourcesphase>

<goals>

<goal>add-sourcegoal>

<goal>compilegoal>

goals>

execution>

executions>

plugin>

plugins>

build>

SparkRDDWordCount(本地)

//1.创建SparkContext

val conf = new SparkConf().setMaster("local[10]").setAppName("wordcount")

val sc = new SparkContext(conf)

val lineRDD: RDD[String] = sc.textFile("file:///E:/demo/words/t_word.txt")

lineRDD.flatMap(line=>line.split(" "))

.map(word=>(word,1))

.groupByKey()

.map(tuple=>(tuple._1,tuple._2.sum))

.sortBy(tuple=>tuple._2,false,1)

.collect()

.foreach(tuple=>println(tuple._1+"->"+tuple._2))

//3.关闭sc

sc.stop()

集群(yarn)

//1.创建SparkContext

val conf = new SparkConf().setMaster("yarn").setAppName("wordcount")

val sc = new SparkContext(conf)

val lineRDD: RDD[String] = sc.textFile("hdfs:///words/t_words")

lineRDD.flatMap(line=>line.split(" "))

.map(word=>(word,1))

.groupByKey()

.map(tuple=>(tuple._1,tuple._2.sum))

.sortBy(tuple=>tuple._2,false,1)

.collect()

.foreach(tuple=>println(tuple._1+"->"+tuple._2))

//3.关闭sc

sc.stop()

发布:

[root@CentOS spark-2.4.3]# ./bin/spark-submit --master yarn --deploy-mode client --class com.baizhi.demo02.SparkRDDWordCount --num-executors 3 --executor-cores 4 /root/sparkrdd-1.0-SNAPSHOT.jar

集群(standalone)

//1.创建SparkContext

val conf = new SparkConf().setMaster("spark://CentOS:7077").setAppName("wordcount")

val sc = new SparkContext(conf)

val lineRDD: RDD[String] = sc.textFile("hdfs:///words/t_words")

lineRDD.flatMap(line=>line.split(" "))

.map(word=>(word,1))

.groupByKey()

.map(tuple=>(tuple._1,tuple._2.sum))

.sortBy(tuple=>tuple._2,false,1)

.collect()

.foreach(tuple=>println(tuple._1+"->"+tuple._2))

//3.关闭sc

sc.stop()

发布:

[root@CentOS spark-2.4.3]# ./bin/spark-submit --master spark://CentOS:7077 --deploy-mode client --class com.baizhi.demo02.SparkRDDWordCount --num-executors 3 --total-executor-cores 4 /root/sparkrdd-1.0-SNAPSHOT.jar

RDD详解 (理论-面试)

Spark计算中一个重要的概念就是可以跨越多个节点的可伸缩分布式数据集 RDD(resilient distributed

dataset) Spark的内存计算的核心就是RDD的并行计算。RDD可以理解是一个弹性的,分布式、不可变的、带有分区的数据集合,所谓的Spark的批处理,实际上就是正对RDD的集合操作,RDD有以下特点:

- 任意一个RDD都包含分区数(决定程序某个阶段计算并行度)

- RDD所谓的分布式计算是在分区内部计算的

- 因为RDD是只读的,RDD之间的变换存着依赖关系(宽依赖、窄依赖)

- 针对于k-v类型的RDD,一般可以指定分区策略(一般系统提供)

- 针对于存储在HDFS上的文件,系统可以计算最优位置,计算每个切片。(了解)

如下案例:

[外链图片转存失败(img-abHO2qNQ-1562290253344)(assets/1561948333785.png)]

通过上诉的代码中不难发现,Spark的整个任务的计算无外乎围绕RDD的三种类型操作RDD创建、RDD转换、RDD Action.通常习惯性的将flatMap/map/reduceByKey称为RDD的转换算子,collect触发任务执行,因此被人们称为动作算子。在Spark中所有的Transform算子都是lazy执行的,只有在Action算子的时候,Spark才会真正的运行任务,也就是说只有遇到Action算子的时候,SparkContext才会对任务做DAG状态拆分,系统才会计算每个状态下任务的TaskSet,继而TaskSchedule才会将任务提交给Executors执行。现将以上字符统计计算流程描述如下:

[外链图片转存失败(img-4c63gyuI-1562290253344)(assets/1561949521439.png)]

textFile(“路径”,分区数) -> flatMap -> map -> reduceByKey -> sortBy在这些转换中其中flatMap/map

、reduceByKey、sotBy都是转换算子,所有的转换算子都是Lazy执行的。程序在遇到collect(Action 算子)系统会触发job执行。Spark底层会按照RDD的依赖关系将整个计算拆分成若干个阶段,我们通常将RDD的依赖关系称为RDD的血统-lineage。血统的依赖通常包含:宽依赖、窄依赖。

RDD容错

在理解DAGSchedule如何做状态划分的前提是需要大家了解一个专业术语lineage通常被人们称为RDD的血统。在了解什么是RDD的血统之前,先来看看程序猿进化过程。

[外链图片转存失败(img-OeYbcCjt-1562290253345)(assets/20190423150135519.png)]

上图中描述了一个程序猿起源变化的过程,我们可以近似的理解类似于RDD的转换也是一样的,Spark的计算本质就是对RDD做各种转换,因为RDD是一个不可变只读的集合,因此每次的转换都需要上一次的RDD作为本次转换的输入,因此RDD的lineage描述的是RDD间的相互依赖关系。为了保证RDD中数据的健壮性,RDD数据集通过所谓的血统关系(Lineage)记住了它是如何从其它RDD中演变过来的。Spark将RDD之间的关系归类为宽依赖和窄依赖。Spark会根据Lineage存储的RDD的依赖关系对RDD计算做故障容错,目前Saprk的容错策略更具RDD依赖关系重新计算、对RDD做Cache、对RDD做Checkpoint手段完成RDD计算的故障容错。

宽依赖|窄依赖

RDD在Lineage依赖方面分为两种Narrow Dependencies与Wide Dependencies用来解决数据容错的高效性。Narrow Dependencies是指父RDD的每一个分区最多被一个子RDD的分区所用,表现为一个父RDD的分区对应于一个子RDD的分区或多个父RDD的分区对应于子RDD的一个分区,也就是说一个父RDD的一个分区不可能对应一个子RDD的多个分区。Wide Dependencies父RDD的一个分区对应一个子RDD的多个分区。

对于Wide Dependencies这种计算的输入和输出在不同的节点上,一般需要夸节点做Shuffle,因此如果是RDD在做宽依赖恢复的时候需要多个节点重新计算成本较高。相对于Narrow Dependencies RDD间的计算是在同一个Task当中实现的是线程内部的的计算,因此在RDD分区数据丢失的的时候,也非常容易恢复。

Sage划分(重点)

Spark任务阶段的划分是按照RDD的lineage关系逆向生成的这么一个过程,Spark任务提交的流程大致如下图所示:

[外链图片转存失败(img-FvgDjMPN-1562290253345)(assets/20190423150251657.png)]

这里可以分析一下DAGScheduel中对State拆分的逻辑代码片段如下所示:

- DAGScheduler.scala 第

719行

def runJob[T, U](

rdd: RDD[T],

func: (TaskContext, Iterator[T]) => U,

partitions: Seq[Int],

callSite: CallSite,

resultHandler: (Int, U) => Unit,

properties: Properties): Unit = {

val start = System.nanoTime

val waiter = submitJob(rdd, func, partitions, callSite, resultHandler, properties)

//...

}

- DAGScheduler - 675行

def submitJob[T, U](

rdd: RDD[T],

func: (TaskContext, Iterator[T]) => U,

partitions: Seq[Int],

callSite: CallSite,

resultHandler: (Int, U) => Unit,

properties: Properties): JobWaiter[U] = {

//eventProcessLoop 实现的是一个队列,系统底层会调用 doOnReceive -> case JobSubmitted -> dagScheduler.handleJobSubmitted(951行)

eventProcessLoop.post(JobSubmitted(

jobId, rdd, func2, partitions.toArray, callSite, waiter,

SerializationUtils.clone(properties)))

waiter

}

- DAGScheduler - 951行

private[scheduler] def handleJobSubmitted(jobId: Int,

finalRDD: RDD[_],

func: (TaskContext, Iterator[_]) => _,

partitions: Array[Int],

callSite: CallSite,

listener: JobListener,

properties: Properties) {

var finalStage: ResultStage = null

try {

//...

finalStage = createResultStage(finalRDD, func, partitions, jobId, callSite)

} catch {

//...

}

submitStage(finalStage)

}

- DAGScheduler - 1060行

private def submitStage(stage: Stage) {

val jobId = activeJobForStage(stage)

if (jobId.isDefined) {

logDebug("submitStage(" + stage + ")")

if (!waitingStages(stage) && !runningStages(stage) && !failedStages(stage)) {

//计算当前State的父Stage

val missing = getMissingParentStages(stage).sortBy(_.id)

logDebug("missing: " + missing)

if (missing.isEmpty) {

logInfo("Submitting " + stage + " (" + stage.rdd + "), which has no missing parents")

//如果当前的State没有父Stage,就提交当前Stage中的Task

submitMissingTasks(stage, jobId.get)

} else {

for (parent <- missing) {

//递归查找当前父Stage的父Stage

submitStage(parent)

}

waitingStages += stage

}

}

} else {

abortStage(stage, "No active job for stage " + stage.id, None)

}

}

- DAGScheduler - 549行 (获取当前State的父State)

private def getMissingParentStages(stage: Stage): List[Stage] = {

val missing = new HashSet[Stage]

val visited = new HashSet[RDD[_]]

// We are manually maintaining a stack here to prevent StackOverflowError

// caused by recursively visiting

val waitingForVisit = new ArrayStack[RDD[_]]//栈

def visit(rdd: RDD[_]) {

if (!visited(rdd)) {

visited += rdd

val rddHasUncachedPartitions = getCacheLocs(rdd).contains(Nil)

if (rddHasUncachedPartitions) {

for (dep <- rdd.dependencies) {

dep match {

//如果是宽依赖ShuffleDependency,就添加一个Stage

case shufDep: ShuffleDependency,[_, _, _] =>

val mapStage = getOrCreateShuffleMapStage(shufDep, stage.firstJobId)

if (!mapStage.isAvailable) {

missing += mapStage

}

//如果是窄依赖NarrowDependency,将当前的父RDD添加到栈中

case narrowDep: NarrowDependency[_] =>

waitingForVisit.push(narrowDep.rdd)

}

}

}

}

}

waitingForVisit.push(stage.rdd)

while (waitingForVisit.nonEmpty) {//循环遍历栈,计算 stage

visit(waitingForVisit.pop())

}

missing.toList

}

- DAGScheduler - 1083行 (提交当前Stage的TaskSet)

private def submitMissingTasks(stage: Stage, jobId: Int) {

logDebug("submitMissingTasks(" + stage + ")")

// First figure out the indexes of partition ids to compute.

val partitionsToCompute: Seq[Int] = stage.findMissingPartitions()

// Use the scheduling pool, job group, description, etc. from an ActiveJob associated

// with this Stage

val properties = jobIdToActiveJob(jobId).properties

runningStages += stage

// SparkListenerStageSubmitted should be posted before testing whether tasks are

// serializable. If tasks are not serializable, a SparkListenerStageCompleted event

// will be posted, which should always come after a corresponding SparkListenerStageSubmitted

// event.

stage match {

case s: ShuffleMapStage =>

outputCommitCoordinator.stageStart(stage = s.id, maxPartitionId = s.numPartitions - 1)

case s: ResultStage =>

outputCommitCoordinator.stageStart(

stage = s.id, maxPartitionId = s.rdd.partitions.length - 1)

}

val taskIdToLocations: Map[Int, Seq[TaskLocation]] = try {

stage match {

case s: ShuffleMapStage =>

partitionsToCompute.map { id => (id, getPreferredLocs(stage.rdd, id))}.toMap

case s: ResultStage =>

partitionsToCompute.map { id =>

val p = s.partitions(id)

(id, getPreferredLocs(stage.rdd, p))

}.toMap

}

} catch {

case NonFatal(e) =>

stage.makeNewStageAttempt(partitionsToCompute.size)

listenerBus.post(SparkListenerStageSubmitted(stage.latestInfo, properties))

abortStage(stage, s"Task creation failed: $e\n${Utils.exceptionString(e)}", Some(e))

runningStages -= stage

return

}

stage.makeNewStageAttempt(partitionsToCompute.size, taskIdToLocations.values.toSeq)

// If there are tasks to execute, record the submission time of the stage. Otherwise,

// post the even without the submission time, which indicates that this stage was

// skipped.

if (partitionsToCompute.nonEmpty) {

stage.latestInfo.submissionTime = Some(clock.getTimeMillis())

}

listenerBus.post(SparkListenerStageSubmitted(stage.latestInfo, properties))

// TODO: Maybe we can keep the taskBinary in Stage to avoid serializing it multiple times.

// Broadcasted binary for the task, used to dispatch tasks to executors. Note that we broadcast

// the serialized copy of the RDD and for each task we will deserialize it, which means each

// task gets a different copy of the RDD. This provides stronger isolation between tasks that

// might modify state of objects referenced in their closures. This is necessary in Hadoop

// where the JobConf/Configuration object is not thread-safe.

var taskBinary: Broadcast[Array[Byte]] = null

var partitions: Array[Partition] = null

try {

// For ShuffleMapTask, serialize and broadcast (rdd, shuffleDep).

// For ResultTask, serialize and broadcast (rdd, func).

var taskBinaryBytes: Array[Byte] = null

// taskBinaryBytes and partitions are both effected by the checkpoint status. We need

// this synchronization in case another concurrent job is checkpointing this RDD, so we get a

// consistent view of both variables.

RDDCheckpointData.synchronized {

taskBinaryBytes = stage match {

case stage: ShuffleMapStage =>

JavaUtils.bufferToArray(

closureSerializer.serialize((stage.rdd, stage.shuffleDep): AnyRef))

case stage: ResultStage =>

JavaUtils.bufferToArray(closureSerializer.serialize((stage.rdd, stage.func): AnyRef))

}

partitions = stage.rdd.partitions

}

taskBinary = sc.broadcast(taskBinaryBytes)

} catch {

// In the case of a failure during serialization, abort the stage.

case e: NotSerializableException =>

abortStage(stage, "Task not serializable: " + e.toString, Some(e))

runningStages -= stage

// Abort execution

return

case e: Throwable =>

abortStage(stage, s"Task serialization failed: $e\n${Utils.exceptionString(e)}", Some(e))

runningStages -= stage

// Abort execution

return

}

val tasks: Seq[Task[_]] = try {

val serializedTaskMetrics = closureSerializer.serialize(stage.latestInfo.taskMetrics).array()

stage match {

case stage: ShuffleMapStage =>

stage.pendingPartitions.clear()

partitionsToCompute.map { id =>

val locs = taskIdToLocations(id)

val part = partitions(id)

stage.pendingPartitions += id

new ShuffleMapTask(stage.id, stage.latestInfo.attemptNumber,

taskBinary, part, locs, properties, serializedTaskMetrics, Option(jobId),

Option(sc.applicationId), sc.applicationAttemptId, stage.rdd.isBarrier())

}

case stage: ResultStage =>

partitionsToCompute.map { id =>

val p: Int = stage.partitions(id)

val part = partitions(p)

val locs = taskIdToLocations(id)

new ResultTask(stage.id, stage.latestInfo.attemptNumber,

taskBinary, part, locs, id, properties, serializedTaskMetrics,

Option(jobId), Option(sc.applicationId), sc.applicationAttemptId,

stage.rdd.isBarrier())

}

}

} catch {

case NonFatal(e) =>

abortStage(stage, s"Task creation failed: $e\n${Utils.exceptionString(e)}", Some(e))

runningStages -= stage

return

}

if (tasks.size > 0) {

logInfo(s"Submitting ${tasks.size} missing tasks from $stage (${stage.rdd}) (first 15 " +

s"tasks are for partitions ${tasks.take(15).map(_.partitionId)})")

taskScheduler.submitTasks(new TaskSet(

tasks.toArray, stage.id, stage.latestInfo.attemptNumber, jobId, properties))

} else {

// Because we posted SparkListenerStageSubmitted earlier, we should mark

// the stage as completed here in case there are no tasks to run

markStageAsFinished(stage, None)

stage match {

case stage: ShuffleMapStage =>

logDebug(s"Stage ${stage} is actually done; " +

s"(available: ${stage.isAvailable}," +

s"available outputs: ${stage.numAvailableOutputs}," +

s"partitions: ${stage.numPartitions})")

markMapStageJobsAsFinished(stage)

case stage : ResultStage =>

logDebug(s"Stage ${stage} is actually done; (partitions: ${stage.numPartitions})")

}

submitWaitingChildStages(stage)

}

}

状态小节

通过以上源码分析,可以得出Spark所谓宽窄依赖事实上指的是ShuffleDependency或者是NarrowDependency如果是ShuffleDependency系统会生成一个ShuffeMapStage,如果是NarrowDependency则忽略,归为当前Stage。当系统回推到起始RDD的时候因为发现当前RDD或者ShuffleMapStage没有父Stage的时候,当前系统会将当前State下的Task封装成ShuffleMapTask(如果是ResultStage就是ResultTask),当前Task的数目等于当前state分区的分区数。然后将Task封装成TaskSet通过调用taskScheduler.submitTasks将任务提交给集群。

RDD缓存

缓存是一种RDD计算容错的一种手段,程序在RDD数据丢失的时候,可以通过缓存快速计算当前RDD的值,而不需要反推出所有的RDD重新计算,因此Spark在需要对某个RDD多次使用的时候,为了提高程序的执行效率用户可以考虑使用RDD的cache。如下测试:

val conf = new SparkConf()

.setAppName("word-count")

.setMaster("local[2]")

val sc = new SparkContext(conf)

val value: RDD[String] = sc.textFile("file:///D:/demo/words/")

.cache()

value.count()

var begin=System.currentTimeMillis()

value.count()

var end=System.currentTimeMillis()

println("耗时:"+ (end-begin))//耗时:253

//失效缓存

value.unpersist()

begin=System.currentTimeMillis()

value.count()

end=System.currentTimeMillis()

println("不使用缓存耗时:"+ (end-begin))//2029

sc.stop()

除了调用cache之外,Spark提供了更细粒度的RDD缓存方案,用户可以根据集群的内存状态选择合适的缓存策略。用户可以使用persist方法指定缓存级别。缓存级别有如下可选项:

val NONE = new StorageLevel(false, false, false, false)

val DISK_ONLY = new StorageLevel(true, false, false, false)

val DISK_ONLY_2 = new StorageLevel(true, false, false, false, 2)

val MEMORY_ONLY = new StorageLevel(false, true, false, true)

val MEMORY_ONLY_2 = new StorageLevel(false, true, false, true, 2)

val MEMORY_ONLY_SER = new StorageLevel(false, true, false, false)

val MEMORY_ONLY_SER_2 = new StorageLevel(false, true, false, false, 2)

val MEMORY_AND_DISK = new StorageLevel(true, true, false, true)

val MEMORY_AND_DISK_2 = new StorageLevel(true, true, false, true, 2)

val MEMORY_AND_DISK_SER = new StorageLevel(true, true, false, false)

val MEMORY_AND_DISK_SER_2 = new StorageLevel(true, true, false, false, 2)

val OFF_HEAP = new StorageLevel(true, true, true, false, 1)

xxRDD.persist(StorageLevel.MEMORY_AND_DISK_SER_2)

其中:

MEMORY_ONLY:表示数据完全不经过序列化存储在内存中,效率高,但是有可能导致内存溢出.

MEMORY_ONLY_SER和MEMORY_ONLY一样,只不过需要对RDD的数据做序列化,牺牲CPU节省内存,同样会导致内存溢出可能。

其中

_2表示缓存结果有备份,如果大家不确定该使用哪种级别,一般推荐MEMORY_AND_DISK_SER_2

Check Point 机制

除了使用缓存机制可以有效的保证RDD的故障恢复,但是如果缓存失效还是会在导致系统重新计算RDD的结果,所以对于一些RDD的lineage较长的场景,计算比较耗时,用户可以尝试使用checkpoint机制存储RDD的计算结果,该种机制和缓存最大的不同在于,使用checkpoint之后被checkpoint的RDD数据直接持久化在文件系统中,一般推荐将结果写在hdfs中,这种checpoint并不会自动清空。注意checkpoint在计算的过程中先是对RDD做mark,在任务执行结束后,再对mark的RDD实行checkpoint,也就是要重新计算被Mark之后的rdd的依赖和结果,因此为了避免Mark RDD重复计算,推荐使用策略

val conf = new SparkConf().setMaster("yarn").setAppName("wordcount")

val sc = new SparkContext(conf)

sc.setCheckpointDir("hdfs:///checkpoints")

val lineRDD: RDD[String] = sc.textFile("hdfs:///words/t_word.txt")

val cacheRdd = lineRDD.flatMap(line => line.split(" "))

.map(word => (word, 1))

.groupByKey()

.map(tuple => (tuple._1, tuple._2.sum))

.sortBy(tuple => tuple._2, false, 1)

.cache()

cacheRdd.checkpoint()

cacheRdd.collect().foreach(tuple=>println(tuple._1+"->"+tuple._2))

cacheRdd.unpersist()

//3.关闭sc

sc.stop()

RDD算子实战

转换算子

map(function)

传入的集合元素进行RDD[T]转换 def map(f: T => U): org.apache.spark.rdd.RDD[U]

scala> sc.parallelize(List(1,2,3,4,5),3).map(item => item*2+" " )

res1: org.apache.spark.rdd.RDD[String] = MapPartitionsRDD[2] at map at :25

scala> sc.parallelize(List(1,2,3,4,5),3).map(item => item*2+" " ).collect

res2: Array[String] = Array("2 ", "4 ", "6 ", "8 ", "10 ")

filter(func)

将满足条件结果记录 def filter(f: T=> Boolean): org.apache.spark.rdd.RDD[T]

scala> sc.parallelize(List(1,2,3,4,5),3).filter(item=> item%2==0).collect

res3: Array[Int] = Array(2, 4)

flatMap(func)

将一个元素转换成元素的数组,然后对数组展开。def flatMap[U](f: T=> TraversableOnce[U]): org.apache.spark.rdd.RDD[U]

scala> sc.parallelize(List("ni hao","hello spark"),3).flatMap(line=>line.split("\\s+")).collect

res4: Array[String] = Array(ni, hao, hello, spark)

mapPartitions(func)

与map类似,但在RDD的每个分区(块)上单独运行,因此当在类型T的RDD上运行时,func必须是Iterator 类型

def mapPartitions[U](f: Iterator[Int] => Iterator[U],preservesPartitioning: Boolean): org.apache.spark.rdd.RDD[U]

scala> sc.parallelize(List(1,2,3,4,5),3).mapPartitions(items=> for(i<-items;if(i%2==0)) yield i*2 ).collect()

res7: Array[Int] = Array(4, 8)

mapPartitionsWithIndex(func)

与mapPartitions类似,但也为func提供了表示分区索引的整数值,因此当在类型T的RDD上运行时,func必须是类型(Int,Iterator 。

def mapPartitionsWithIndex[U](f: (Int, Iterator[T]) => Iterator[U],preservesPartitioning: Boolean): org.apache.spark.rdd.RDD[U]

scala> sc.parallelize(List(1,2,3,4,5),3).mapPartitionsWithIndex((p,items)=> for(i<-items) yield (p,i)).collect

res11: Array[(Int, Int)] = Array((0,1), (1,2), (1,3), (2,4), (2,5))

sample(withReplacement, fraction, seed)

对数据进行一定比例的采样,使用withReplacement参数控制是否允许重复采样。

def sample(withReplacement: Boolean,fraction: Double,seed: Long): org.apache.spark.rdd.RDD[T]

scala> sc.parallelize(List(1,2,3,4,5,6,7),3).sample(false,0.7,1L).collect

res13: Array[Int] = Array(1, 4, 6, 7)

union(otherDataset)

返回一个新数据集,其中包含源数据集和参数中元素的并集。

def union(other: org.apache.spark.rdd.RDD[T]): org.apache.spark.rdd.RDD[T]

scala> var rdd1=sc.parallelize(Array(("张三",1000),("李四",100),("赵六",300)))

scala> var rdd2=sc.parallelize(Array(("张三",1000),("王五",100),("温七",300)))

scala> rdd1.union(rdd2).collect

res16: Array[(String, Int)] = Array((张三,1000), (李四,100), (赵六,300), (张三,1000), (王五,100), (温七,300))

intersection(otherDataset)

返回包含源数据集和参数中元素交集的新RDD。

def intersection(other: org.apache.spark.rdd.RDD[T],numPartitions: Int): org.apache.spark.rdd.RDD[T]

scala> var rdd1=sc.parallelize(Array(("张三",1000),("李四",100),("赵六",300)))

scala> var rdd2=sc.parallelize(Array(("张三",1000),("王五",100),("温七",300)))

scala> rdd1.intersection(rdd2).collect

res17: Array[(String, Int)] = Array((张三,1000))

distinct([numPartitions]))

返回包含源数据集的不同元素的新数据集。

scala> sc.parallelize(List(1,2,3,3,5,7,2),3).distinct.collect

res19: Array[Int] = Array(3, 1, 7, 5, 2)

groupByKey([numPartitions])

在(K,V)对的数据集上调用时,返回(K,Iterable 对的数据集。 注意:如果要对每个键执行聚合(例如总和或平均值)进行分组,则使用reduceByKey或aggregateByKey将产生更好的性能。 注意:默认情况下,输出中的并行级别取决于父RDD的分区数。您可以传递可选的numPartitions参数来设置不同数量的任务。

scala> sc.parallelize(List("ni hao","hello spark"),3).flatMap(line=>line.split("\\s+")).map(word=>(word,1)).groupByKey(3).map(tuple=>(tuple._1,tuple._2.sum)).collect

reduceByKey(func, [numPartitions])

当调用(K,V)对的数据集时,返回(K,V)对的数据集,其中使用给定的reduce函数func聚合每个键的值,该函数必须是类型(V,V)=> V.

scala> sc.parallelize(List("ni hao","hello spark"),3).flatMap(line=>line.split("\\s+")).map(word=>(word,1)).reduceByKey((v1,v2)=>v1+v2).collect()

res33: Array[(String, Int)] = Array((hao,1), (hello,1), (spark,1), (ni,1))

scala> sc.parallelize(List("ni hao","hello spark"),3).flatMap(line=>line.split("\\s+")).map(word=>(word,1)).reduceByKey(_+_).collect()

res34: Array[(String, Int)] = Array((hao,1), (hello,1), (spark,1), (ni,1))

aggregateByKey(zeroValue)(seqOp, combOp, [numPartitions])

当调用(K,V)对的数据集时,返回(K,U)对的数据集,其中使用给定的组合函数和中性“零”值聚合每个键的值。允许与输入值类型不同的聚合值类型,同时避免不必要的分配。

scala> sc.parallelize(List("ni hao","hello spark"),3).flatMap(line=>line.split("\\s+")).map(word=>(word,1)).aggregateByKey(0L)((z,v)=>z+v,(u1,u2)=>u1+u2).collect

res35: Array[(String, Long)] = Array((hao,1), (hello,1), (spark,1), (ni,1))

sortByKey([ascending], [numPartitions])

当调用K实现Ordered的(K,V)对数据集时,返回按键升序或降序排序的(K,V)对数据集,如布尔升序参数中所指定。

scala> sc.parallelize(List("ni hao","hello spark"),3).flatMap(line=>line.split("\\s+")).map(word=>(word,1)).aggregateByKey(0L)((z,v)=>z+v,(u1,u2)=>u1+u2).sortByKey(false).collect()

res37: Array[(String, Long)] = Array((spark,1), (ni,1), (hello,1), (hao,1))

sortBy(func,[ascending], [numPartitions])**

对(K,V)数据集调用sortBy时,用户可以通过指定func指定排序规则,T => U 要求U必须实现Ordered接口

scala> sc.parallelize(List("ni hao","hello spark"),3).flatMap(line=>line.split("\\s+")).map(word=>(word,1)).aggregateByKey(0L)((z,v)=>z+v,(u1,u2)=>u1+u2).sortBy(_._2,true,2).collect

res42: Array[(String, Long)] = Array((hao,1), (hello,1), (spark,1), (ni,1))

join

当调用类型(K,V)和(K,W)的数据集时,返回(K,(V,W))对的数据集以及每个键的所有元素对。通过leftOuterJoin,rightOuterJoin和fullOuterJoin支持外连接。

scala> var rdd1=sc.parallelize(Array(("001","张三"),("002","李四"),("003","王五")))

scala> var rdd2=sc.parallelize(Array(("001",("apple",18.0)),("001",("orange",18.0))))

scala> rdd1.join(rdd2).collect

res43: Array[(String, (String, (String, Double)))] = Array((001,(张三,(apple,18.0))), (001,(张三,(orange,18.0))))

cogroup

当调用类型(K,V)和(K,W)的数据集时,返回(K,(Iterable ,Iterable ))元组的数据集。此操作也称为groupWith。

scala> var rdd1=sc.parallelize(Array(("001","张三"),("002","李四"),("003","王五")))

scala> var rdd2=sc.parallelize(Array(("001","apple"),("001","orange"),("002","book")))

scala> rdd1.cogroup(rdd2).collect()

res46: Array[(String, (Iterable[String], Iterable[String]))] = Array((001,(CompactBuffer(张三),CompactBuffer(apple, orange))), (002,(CompactBuffer(李四),CompactBuffer(book))), (003,(CompactBuffer(王五),CompactBuffer())))

cartesian

当调用类型为T和U的数据集时,返回(T,U)对的数据集(所有元素对)。

scala> var rdd1=sc.parallelize(List("a","b","c"))

scala> var rdd2=sc.parallelize(List(1,2,3,4))

scala> rdd1.cartesian(rdd2).collect()

res47: Array[(String, Int)] = Array((a,1), (a,2), (a,3), (a,4), (b,1), (b,2), (b,3), (b,4), (c,1), (c,2), (c,3), (c,4))

coalesce(numPartitions)

将RDD中的分区数减少为numPartitions。过滤大型数据集后,可以使用概算子减少分区数。

scala> sc.parallelize(List("ni hao","hello spark"),3).coalesce(1).partitions.length

res50: Int = 1

scala> sc.parallelize(List("ni hao","hello spark"),3).coalesce(1).getNumPartitions

res51: Int = 1

repartition

随机重新调整RDD中的数据以创建更多或更少的分区。

scala> sc.parallelize(List("a","b","c"),3).mapPartitionsWithIndex((index,values)=>for(i<-values) yield (index,i) ).collect

res52: Array[(Int, String)] = Array((0,a), (1,b), (2,c))

scala> sc.parallelize(List("a","b","c"),3).repartition(2).mapPartitionsWithIndex((index,values)=>for(i<-values) yield (index,i) ).collect

res53: Array[(Int, String)] = Array((0,a), (0,c), (1,b))

动作算子

collect

用在测试环境下,通常使用collect算子将远程计算的结果拿到Drvier端,注意一般数据量比较小,用于测试。

scala> var rdd1=sc.parallelize(List(1,2,3,4,5),3).collect().foreach(println)

saveAsTextFile

将计算结果存储在文件系统中,一般存储在HDFS上

scala> sc.parallelize(List("ni hao","hello spark"),3).flatMap(_.split("\\s+")).map((_,1)).reduceByKey(_+_).sortBy(_._2,false,3).saveAsTextFile("hdfs:///wordcounts")

foreach

迭代遍历所有的RDD中的元素,通常是将foreach传递的数据写到外围系统中,比如说可以将数据写入到Hbase中。

scala> sc.parallelize(List(“ni hao”,“hello spark”),3).flatMap(.split("\s+")).map((,1)).reduceByKey(+).sortBy(_._2,false,3).foreach(println)

(hao,1)

(hello,1)

(spark,1)

(ni,1)

注意如果使用以上代码写数据到外围系统,会因为不断创建和关闭连接影响写入效率,一般推荐使用foreachPartition

val lineRDD: RDD[String] = sc.textFile("file:///E:/demo/words/t_word.txt")

lineRDD.flatMap(line=>line.split(" "))

.map(word=>(word,1))

.groupByKey()

.map(tuple=>(tuple._1,tuple._2.sum))

.sortBy(tuple=>tuple._2,false,3)

.foreachPartition(items=>{

//创建连接

items.foreach(t=>println("存储到数据库"+t))

//关闭连接

})

共享变量

变量广播

通常情况下,当一个RDD的很多操作都需要使用driver中定义的变量时,每次操作,driver都要把变量发送给worker节点一次,如果这个变量中的数据很大的话,会产生很高的传输负载,导致执行效率降低。使用广播变量可以使程序高效地将一个很大的只读数据发送给多个worker节点,而且对每个worker节点只需要传输一次,每次操作时executor可以直接获取本地保存的数据副本,不需要多次传输。

val conf = new SparkConf().setAppName("demo").setMaster("local[2]")

val sc = new SparkContext(conf)

val userList = List(

"001,张三,28,0",

"002,李四,18,1",

"003,王五,38,0",

"004,zhaoliu,38,-1"

)

val genderMap = Map("0" -> "女", "1" -> "男")

val bcMap = sc.broadcast(genderMap)

sc.parallelize(userList,3)

.map(info=>{

val prefix = info.substring(0, info.lastIndexOf(","))

val gender = info.substring(info.lastIndexOf(",") + 1)

val genderMapValue = bcMap.value

val newGender = genderMapValue.getOrElse(gender, "未知")

prefix + "," + newGender

}).collect().foreach(println)

sc.stop()

累加器

Spark提供的Accumulator,主要用于多个节点对一个变量进行共享性的操作。Accumulator只提供了累加的功能。但是确给我们提供了多个task对一个变量并行操作的功能。但是task只能对Accumulator进行累加操作,不能读取它的值。只有Driver程序可以读取Accumulator的值。

scala> var count=sc.longAccumulator("count")

scala> sc.parallelize(List(1,2,3,4,5,6),3).foreach(item=> count.add(item))

scala> count.value

res1: Long = 21