1 The hwservicemanager startup process and its relationship to the binder driver

```cpp
int main() {
    configureRpcThreadpool(1, true /* callerWillJoin */);

    ServiceManager *manager = new ServiceManager();
    if (!manager->add(serviceName, manager)) {
        ALOGE("Failed to register hwservicemanager with itself.");
    }

    TokenManager *tokenManager = new TokenManager();
    if (!manager->add(serviceName, tokenManager)) {
        ALOGE("Failed to register ITokenManager with hwservicemanager.");
    }

    // Tell IPCThreadState we're the service manager
    sp<BnHwServiceManager> service = new BnHwServiceManager(manager);
    IPCThreadState::self()->setTheContextObject(service);

    // Then tell the kernel
    ProcessState::self()->becomeContextManager(nullptr, nullptr);

    int rc = property_set("hwservicemanager.ready", "true");
    if (rc) {
        ALOGE("Failed to set \"hwservicemanager.ready\" (error %d). "\
              "HAL services will not start!\n", rc);
    }

    joinRpcThreadpool();
    return 0;
}
```

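Step 1:

```cpp
IPCThreadState::self()->setTheContextObject(service);
```

`IPCThreadState::self()` creates the calling thread's IPCThreadState. Its constructor initializes `mProcess` with `ProcessState::self()`:

```cpp
IPCThreadState::IPCThreadState()
    : mProcess(ProcessState::self()),
      // ...
```

Creating the ProcessState calls open_driver, which mainly opens /dev/hwbinder:

```cpp
int fd = open("/dev/hwbinder", O_RDWR | O_CLOEXEC);
```

The ProcessState then maps the driver's buffer into the process. The default size, DEFAULT_BINDER_VM_SIZE, is about 1 MB:

```cpp
mVMStart = mmap(0, mMmapSize, PROT_READ, MAP_PRIVATE | MAP_NORESERVE, mDriverFD, 0);
```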

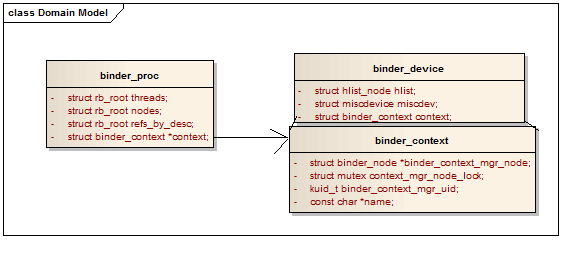

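In the driver, binder_open mainly does three things. It allocates a binder_proc, associates it with the binder_device being opened, and stores the proc in the file's private_data. The figure above shows how these structures relate.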

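Setting up the mapping invokes the driver's binder_mmap. Its main operations are:

```c
static int binder_mmap(struct file *filp, struct vm_area_struct *vma)
{
    /* ... */
    vma->vm_flags = (vma->vm_flags | VM_DONTCOPY) & ~VM_MAYWRITE;
    vma->vm_ops = &binder_vm_ops;
    vma->vm_private_data = proc;

    ret = binder_alloc_mmap_handler(&proc->alloc, vma);
    /* ... */
}
```

binder_alloc_mmap_handler performs these steps:

- It allocates the page array.
- It initializes the alloc structure.
- It creates a single binder_buffer covering the whole mapping, marks it free, and adds it to the alloc->buffers list.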
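The sketch below is a small user-space model of the allocator idea: the mapping starts as one free buffer and is split on demand. As far as I know, the driver keeps free buffers in a red-black tree keyed by size and picks a best fit. The sketch approximates that tree with `std::multimap`. `AllocSketch`, `mmap_handler` and `alloc_buf` are hypothetical names. Offsets stand in for addresses, and alignment, freeing and merging are left out.

```cpp
#include <cassert>
#include <cstddef>
#include <map>

// Hypothetical model of a binder_alloc region: one mapped area carved into buffers.
// Offsets stand in for addresses; alignment and the offsets area are ignored.
struct AllocSketch {
    std::map<std::size_t, std::size_t> buffers;            // offset -> size, address order (like alloc->buffers)
    std::multimap<std::size_t, std::size_t> free_by_size;  // size -> offset, free buffers only

    // Like binder_alloc_mmap_handler: one free buffer spans the whole mapping.
    void mmap_handler(std::size_t mapping_size) {
        buffers.clear();
        free_by_size.clear();
        buffers[0] = mapping_size;
        free_by_size.emplace(mapping_size, 0);
    }

    // Best fit: take the smallest free buffer that is large enough and split off
    // the remainder as a new free buffer. Returns the offset, or -1 if nothing fits.
    long long alloc_buf(std::size_t want) {
        auto it = free_by_size.lower_bound(want);  // smallest size >= want
        if (it == free_by_size.end()) return -1;
        const std::size_t size = it->first;
        const std::size_t off = it->second;
        free_by_size.erase(it);
        buffers[off] = want;
        if (size > want) {
            buffers[off + want] = size - want;
            free_by_size.emplace(size - want, off + want);
        }
        return static_cast<long long>(off);
    }
};
```

For example, after `mmap_handler(1024)`, two calls to `alloc_buf(100)` return offsets 0 and 100. The remaining free buffer is then 824 bytes at offset 200.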

Step 2:

becomeContextManager calls:

```cpp
status_t result = ioctl(mDriverFD, BINDER_SET_CONTEXT_MGR, &dummy);
```

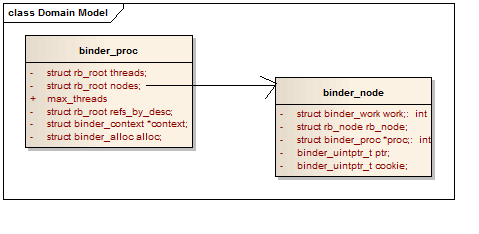

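binder_ioctl first calls binder_get_thread. This looks up the binder_thread for the calling thread's pid, creating one if needed, and inserts it into the proc's threads red-black tree.

Inside the driver, this ioctl is handled by binder_ioctl_set_ctx_mgr. It allocates a binder_node, inserts it into the proc's node red-black tree, and records it as the context's binder_context_mgr_node.

The call is new_node = binder_new_node(proc, NULL). Because fp is NULL, both ptr and cookie in the new node are 0.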
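The rule that a context has exactly one manager, whose node has ptr and cookie equal to 0, can be modeled as below. This is a hypothetical sketch, not driver code. The -EBUSY on a second attempt matches my reading of binder_ioctl_set_ctx_mgr. The security and euid checks the driver also performs are omitted. All type and function names are made up for illustration.

```cpp
#include <cassert>
#include <cerrno>
#include <cstdint>
#include <map>
#include <memory>

struct CtxNodeSketch { std::uint64_t ptr; std::uint64_t cookie; };

struct CtxProcSketch {
    std::map<std::uint64_t, std::shared_ptr<CtxNodeSketch>> nodes;  // like proc->nodes, keyed by ptr
};

struct ContextSketch {
    std::shared_ptr<CtxNodeSketch> context_mgr_node;  // like context->binder_context_mgr_node
};

// Hypothetical model of BINDER_SET_CONTEXT_MGR: only the first caller succeeds.
int set_ctx_mgr_sketch(ContextSketch& ctx, CtxProcSketch& proc) {
    if (ctx.context_mgr_node) return -EBUSY;  // a manager is already registered
    // binder_new_node(proc, NULL): no flat object, so ptr and cookie are both 0.
    auto node = std::make_shared<CtxNodeSketch>(CtxNodeSketch{0, 0});
    proc.nodes[node->ptr] = node;  // insert into the proc's node tree
    ctx.context_mgr_node = node;
    return 0;
}
```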

2 Adding a service

Next, a service is added to hwservicemanager. First, the registration interface:

```cpp
::android::status_t ILight::registerAsService(const std::string &serviceName) {
    ::android::hardware::details::onRegistration("android.hardware.light@2.0", "ILight", serviceName);

    const ::android::sp<::android::hidl::manager::V1_0::IServiceManager> sm
        = ::android::hardware::defaultServiceManager();
    if (sm == nullptr) {
        return ::android::INVALID_OPERATION;
    }
    ::android::hardware::Return<bool> ret = sm->add(serviceName.c_str(), this);
    return ret.isOk() && ret ? ::android::OK : ::android::UNKNOWN_ERROR;
}
```

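Step 1:

Call defaultServiceManager to obtain a BpHwServiceManager:

```cpp
sp<IServiceManager1_1> defaultServiceManager1_1() {
    {
        // ... (locking and waiting for hwservicemanager elided)
        details::gDefaultServiceManager =
            fromBinder<IServiceManager1_1, BpHwServiceManager, BnHwServiceManager>(
                ProcessState::self()->getContextObject(nullptr));
        // ...
    }
    return details::gDefaultServiceManager;
}
```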

ProcessState::self()->getContextObject(nullptr) runs as follows:

```cpp
sp<IBinder> ProcessState::getContextObject(const sp<IBinder>& /*caller*/)
{
    return getStrongProxyForHandle(0);
}

sp<IBinder> ProcessState::getStrongProxyForHandle(int32_t handle)
{
    // ...
    b = new BpHwBinder(handle);
    e->binder = b;
    if (b) e->refs = b->getWeakRefs();
    result = b;
    // ...
}
```

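fromBinder finally returns new BpHwServiceManager(BpHwBinder).

While the BpHwBinder is being constructed, it sets its lifetime to OBJECT_LIFETIME_WEAK. It then calls incWeakHandle, which queues a BC_INCREFS command for the handle in mOut. That command is not sent on its own. It reaches the driver ahead of the transaction data on the next transact.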

```cpp
BpHwBinder::BpHwBinder(int32_t handle)
    : mHandle(handle)
    , mAlive(1)
    , mObitsSent(0)
    , mObituaries(NULL)
{
    ALOGV("Creating BpHwBinder %p handle %d\n", this, mHandle);

    extendObjectLifetime(OBJECT_LIFETIME_WEAK);
    IPCThreadState::self()->incWeakHandle(handle);
}

void IPCThreadState::incWeakHandle(int32_t handle)
{
    LOG_REMOTEREFS("IPCThreadState::incWeakHandle(%d)\n", handle);
    mOut.writeInt32(BC_INCREFS);
    mOut.writeInt32(handle);
}
```
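The key point is that incWeakHandle only queues bytes, and they travel together with the next transaction. A minimal sketch of that ordering follows. `OutQueueSketch` is hypothetical, and `kIncRefs`/`kTransaction` are placeholder values, not the real BC_* encodings from the kernel UAPI header. The sketch also empties the whole queue on send. The real mOut only drops the bytes the driver reports as consumed.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Placeholder command codes; the real BC_* values are ioctl-style encodings.
enum : std::uint32_t { kIncRefs = 1, kTransaction = 2 };

struct OutQueueSketch {
    std::vector<std::uint32_t> out;  // stands in for IPCThreadState::mOut

    // Like incWeakHandle: queue the command; nothing is sent to the driver yet.
    void inc_weak_handle(std::int32_t handle) {
        out.push_back(kIncRefs);
        out.push_back(static_cast<std::uint32_t>(handle));
    }

    // Like writeTransactionData followed by talkWithDriver: append the transaction,
    // then hand everything queued so far to the driver in one write.
    std::vector<std::uint32_t> transact(std::int32_t handle, std::uint32_t code) {
        out.push_back(kTransaction);
        out.push_back(static_cast<std::uint32_t>(handle));
        out.push_back(code);
        std::vector<std::uint32_t> sent;
        sent.swap(out);
        return sent;
    }
};
```

With this model, `inc_weak_handle(0)` followed by `transact(0, 2)` sends `[kIncRefs, 0, kTransaction, 0, 2]`. The pending BC_INCREFS therefore goes out first.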

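In the driver, BC_INCREFS is handled in binder_thread_write. A target handle of 0 means the caller is talking to the service manager (the context manager). In that case the reference is taken directly on the context manager's node. Otherwise the ref is looked up by handle in binder_update_ref_for_handle:

```c
if (increment && !target) {
    struct binder_node *ctx_mgr_node;

    mutex_lock(&context->context_mgr_node_lock);
    ctx_mgr_node = context->binder_context_mgr_node;
    if (ctx_mgr_node)
        ret = binder_inc_ref_for_node(
                proc, ctx_mgr_node,
                strong, NULL, &rdata);
    mutex_unlock(&context->context_mgr_node_lock);
}
if (ret)
    ret = binder_update_ref_for_handle(
            proc, target, increment, strong,
            &rdata);
```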

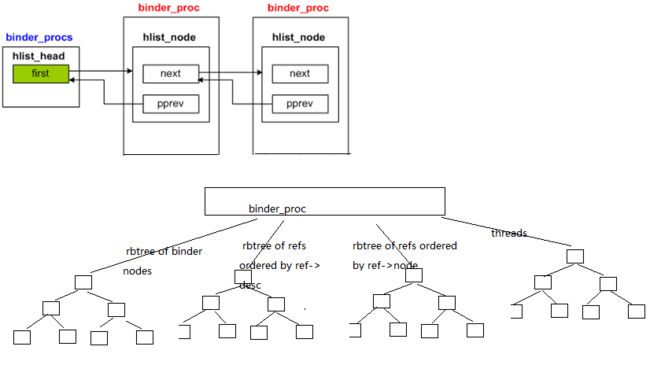

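Refs and nodes are best explained starting from binder_proc, which has four red-black trees:

1. binder_ref objects keyed by node (refs_by_node)
2. binder_ref objects keyed by desc, i.e. the handle (refs_by_desc)
3. binder_node structures keyed by node->ptr (nodes)
4. binder_thread structures (threads)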

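Taking or dropping a reference essentially just increments or decrements the corresponding counters in binder_node and binder_ref_data:

```c
struct binder_node {
    /* ... */
    int internal_strong_refs;
    int local_weak_refs;
    int local_strong_refs;
    /* ... */
};

struct binder_ref_data {
    int debug_id;
    uint32_t desc;
    int strong;
    int weak;
};
```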
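The sketch below shows how a process's handles (descs) come out of refs_by_desc, based on my reading of the driver. The context manager's node always gets desc 0. Any other node gets the smallest unused desc of 1 or more, found by walking the existing descs in ascending order. `ProcRefsSketch` is hypothetical, and integer node ids stand in for `binder_node` pointers. The node-side counters (e.g. `internal_strong_refs` on the first strong ref) are not modeled.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <memory>
#include <utility>

struct RefSketch {
    std::uint32_t desc;
    int strong = 0;
    int weak = 0;
};

// Hypothetical per-process ref tables mirroring refs_by_node and refs_by_desc.
struct ProcRefsSketch {
    std::map<std::uint32_t, std::unique_ptr<RefSketch>> refs_by_desc;  // owns the refs
    std::map<int, RefSketch*> refs_by_node;                            // node id -> ref

    // Find or create the ref for a node; desc 0 is reserved for the context manager.
    RefSketch* get_ref_for_node(int node_id, bool is_ctx_mgr) {
        auto found = refs_by_node.find(node_id);
        if (found != refs_by_node.end()) return found->second;
        std::uint32_t desc = is_ctx_mgr ? 0 : 1;
        for (const auto& kv : refs_by_desc) {  // ascending, like walking the rbtree
            if (kv.first > desc) break;        // gap found
            desc = kv.first + 1;
        }
        auto ref = std::make_unique<RefSketch>();
        ref->desc = desc;
        RefSketch* raw = ref.get();
        refs_by_desc[desc] = std::move(ref);
        refs_by_node[node_id] = raw;
        return raw;
    }

    // Like binder_inc_ref_for_node: bump the strong or weak count, return the handle.
    std::uint32_t inc_ref_for_node(int node_id, bool is_ctx_mgr, bool strong) {
        RefSketch* ref = get_ref_for_node(node_id, is_ctx_mgr);
        if (strong) ++ref->strong; else ++ref->weak;
        return ref->desc;
    }
};
```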

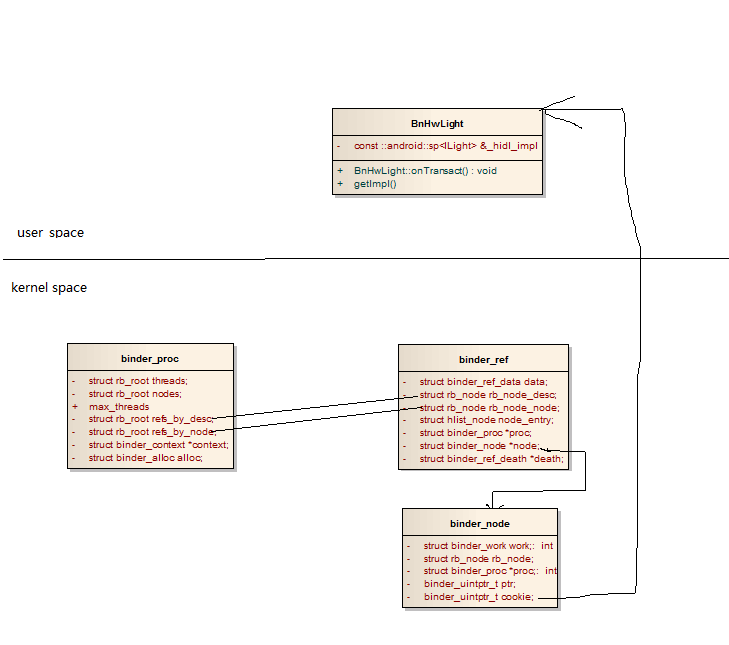

Step 2: call BpHwServiceManager->add(serviceName.c_str(), this) to add the service:

```cpp
// BpHwServiceManager::_hidl_add(...)
::android::sp<::android::hardware::IBinder> _hidl_binder = ::android::hardware::toBinder<
        ::android::hidl::base::V1_0::IBase>(service);
if (_hidl_binder.get() != nullptr) {
    _hidl_err = _hidl_data.writeStrongBinder(_hidl_binder);
    // ...
}
```

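Here service is the ILight. Because it is a local object, toBinder creates a new BnHwLight for it (`return new BnHwLight(static_cast…`). writeStrongBinder then flattens the binder into a flat_binder_object.

The excerpt below is the weak-reference overload of flatten_binder. For a local object it produces BINDER_TYPE_WEAK_BINDER. The strong overload used by writeStrongBinder produces BINDER_TYPE_BINDER in the same branch.

```cpp
status_t flatten_binder(const sp<ProcessState>& /*proc*/,
                        const wp<IBinder>& binder, Parcel* out)
{
    // ...
    if (binder != NULL) {
        sp<IBinder> real = binder.promote();
        if (real != NULL) {
            IBinder *local = real->localBinder();
            if (!local) {
                // remote proxy: flattened as BINDER_TYPE_WEAK_HANDLE (elided)
            } else {
                obj.hdr.type = BINDER_TYPE_WEAK_BINDER;
                obj.binder = reinterpret_cast<uintptr_t>(binder.get_refs());
                obj.cookie = reinterpret_cast<uintptr_t>(binder.unsafe_get());
            }
            // ...
        }
    }
    // ...
}
```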

In the driver, binder_transaction handles BINDER_TYPE_BINDER and BINDER_TYPE_WEAK_BINDER objects by calling binder_translate_binder:

```c
switch (hdr->type) {
case BINDER_TYPE_BINDER:
case BINDER_TYPE_WEAK_BINDER: {
    struct flat_binder_object *fp;

    fp = to_flat_binder_object(hdr);
    ret = binder_translate_binder(fp, t, thread);
    if (ret < 0) {
        return_error = BR_FAILED_REPLY;
        return_error_param = ret;
        return_error_line = __LINE__;
        goto err_translate_failed;
    }
} break;
/* ... other object types ... */
}
```

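binder_translate_binder first looks up the binder_node for this binder in the sending process and allocates one if it does not exist yet. It then creates a binder_ref to that node in the target process and inserts the ref into both of that process's ref trees (refs_by_node and refs_by_desc):

```c
static int binder_translate_binder(struct flat_binder_object *fp,
                                   struct binder_transaction *t,
                                   struct binder_thread *thread)
{
    struct binder_node *node;
    struct binder_proc *proc = thread->proc;
    struct binder_proc *target_proc = t->to_proc;
    struct binder_ref_data rdata;
    int ret = 0;

    node = binder_get_node(proc, fp->binder);
    if (!node) {
        node = binder_new_node(proc, fp);
        if (!node)
            return -ENOMEM;
    }
    /* ... */
}
```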

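In other words, the driver creates a binder_node on the HIDL server side and a binder_ref for it in hwservicemanager. The node's cookie points to the server's BnHwBase-derived stub, which here is the BnHwLight.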
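The sketch below models the whole effect of binder_translate_binder: the sender owns the node, the receiver gets a ref, and the flat object is rewritten in place into a handle. It is a hypothetical model with made-up names. Handles are allocated from a simple counter instead of the desc search, and reference counting and security checks are omitted.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <memory>

enum class TbType { Binder, WeakBinder, Handle, WeakHandle };

// Simplified flat_binder_object (binder and handle share a union in the real struct).
struct TbFlatObj {
    TbType type;
    std::uint64_t binder;  // sender's weakref pointer for a local object
    std::uint32_t handle;  // handle for a remote object
    std::uint64_t cookie;  // sender's object pointer for a local object
};

struct TbNode { int owner_pid; std::uint64_t ptr; std::uint64_t cookie; };

struct TbProc {
    int pid;
    std::map<std::uint64_t, std::shared_ptr<TbNode>> nodes;  // like proc->nodes, keyed by ptr
    std::map<const TbNode*, std::uint32_t> refs_by_node;     // node -> handle in this proc
    std::uint32_t next_desc = 1;                              // simplified handle allocation
};

// Hypothetical model of binder_translate_binder.
void translate_binder_sketch(TbFlatObj& fp, TbProc& sender, TbProc& target) {
    std::shared_ptr<TbNode>& node = sender.nodes[fp.binder];  // binder_get_node ...
    if (!node)                                                 // ... or binder_new_node
        node = std::make_shared<TbNode>(TbNode{sender.pid, fp.binder, fp.cookie});
    std::uint32_t desc;
    auto it = target.refs_by_node.find(node.get());
    if (it != target.refs_by_node.end()) {
        desc = it->second;                  // receiver already has a ref to this node
    } else {
        desc = target.next_desc++;          // new ref in the receiver
        target.refs_by_node[node.get()] = desc;
    }
    // Rewrite the object in place: the receiver only ever sees a handle.
    fp.type = (fp.type == TbType::Binder) ? TbType::Handle : TbType::WeakHandle;
    fp.binder = 0;
    fp.handle = desc;
    fp.cookie = 0;
}
```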

Once the Parcel is filled in, the proxy sends it:

```cpp
_hidl_err = ::android::hardware::IInterface::asBinder(_hidl_this)->transact(2 /* add */, _hidl_data, &_hidl_reply);
```

This ends up in BpHwBinder::transact:

```cpp
status_t BpHwBinder::transact(
    uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags, TransactCallback /*callback*/)
{
    // Once a binder has died, it will never come back to life.
    if (mAlive) {
        status_t status = IPCThreadState::self()->transact(
            mHandle, code, data, reply, flags);
        if (status == DEAD_OBJECT) mAlive = 0;
        return status;
    }

    return DEAD_OBJECT;
}
```

IPCThreadState::transact then does the following:

```cpp
err = writeTransactionData(BC_TRANSACTION_SG, flags, handle, code, data, NULL);
// ...
if (reply) {
    err = waitForResponse(reply);
} else {
    Parcel fakeReply;
    err = waitForResponse(&fakeReply);
}
```

writeTransactionData fills in mOut:

```cpp
status_t IPCThreadState::writeTransactionData(int32_t cmd, uint32_t binderFlags,
    int32_t handle, uint32_t code, const Parcel& data, status_t* statusBuffer)
{
    binder_transaction_data_sg tr_sg;
    /* Don't pass uninitialized stack data to a remote process */
    tr_sg.transaction_data.target.ptr = 0;
    tr_sg.transaction_data.target.handle = handle;
    tr_sg.transaction_data.code = code;
    tr_sg.transaction_data.flags = binderFlags;
    tr_sg.transaction_data.cookie = 0;
    tr_sg.transaction_data.sender_pid = 0;
    tr_sg.transaction_data.sender_euid = 0;

    const status_t err = data.errorCheck();
    if (err == NO_ERROR) {
        tr_sg.transaction_data.data_size = data.ipcDataSize();
        tr_sg.transaction_data.data.ptr.buffer = data.ipcData();
        tr_sg.transaction_data.offsets_size = data.ipcObjectsCount()*sizeof(binder_size_t);
        tr_sg.transaction_data.data.ptr.offsets = data.ipcObjects();
        tr_sg.buffers_size = data.ipcBufferSize();
    } else if (statusBuffer) {
        tr_sg.transaction_data.flags |= TF_STATUS_CODE;
        *statusBuffer = err;
        tr_sg.transaction_data.data_size = sizeof(status_t);
        tr_sg.transaction_data.data.ptr.buffer = reinterpret_cast<uintptr_t>(statusBuffer);
        tr_sg.transaction_data.offsets_size = 0;
        tr_sg.transaction_data.data.ptr.offsets = 0;
        tr_sg.buffers_size = 0;
    } else {
        return (mLastError = err);
    }

    mOut.writeInt32(cmd);
    mOut.write(&tr_sg, sizeof(tr_sg));

    return NO_ERROR;
}
```
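The wire format written here is a 32-bit command immediately followed by the raw bytes of the transaction struct. The driver reads it back in the same order. The sketch below illustrates that layout with `TxSketch`, a hypothetical subset of the fields, not the real `binder_transaction_data_sg`. The helper names are also made up.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical subset of binder_transaction_data_sg; the real struct has more fields.
struct TxSketch {
    std::uint32_t handle;
    std::uint32_t code;
    std::uint32_t flags;
    std::uint64_t data_ptr;   // user-space address of the Parcel data
    std::uint64_t data_size;
};

// Append raw bytes, like Parcel::write on mOut.
void write_bytes(std::vector<std::uint8_t>& out, const void* p, std::size_t n) {
    const auto* b = static_cast<const std::uint8_t*>(p);
    out.insert(out.end(), b, b + n);
}

// Like the tail of writeTransactionData: command first, then the struct, byte for byte.
void write_transaction_sketch(std::vector<std::uint8_t>& out, std::uint32_t cmd, const TxSketch& tr) {
    write_bytes(out, &cmd, sizeof(cmd));
    write_bytes(out, &tr, sizeof(tr));
}

// Driver-side view of the same bytes (cf. get_user for cmd, copy_from_user for the struct).
TxSketch read_transaction_sketch(const std::vector<std::uint8_t>& in, std::uint32_t* cmd) {
    TxSketch tr{};
    std::memcpy(cmd, in.data(), sizeof(*cmd));
    std::memcpy(&tr, in.data() + sizeof(*cmd), sizeof(tr));
    return tr;
}
```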

waitForResponse calls talkWithDriver. talkWithDriver fills in a binder_write_read (bwr) and issues ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr):

```cpp
status_t IPCThreadState::talkWithDriver(bool doReceive)
{
    // ...
    bwr.write_size = outAvail;
    bwr.write_buffer = (uintptr_t)mOut.data();
    // ...
    bwr.write_consumed = 0;
    bwr.read_consumed = 0;
    // ...
    if (ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) >= 0)
        err = NO_ERROR;
    else
        err = -errno;
    // ...
}
```

This is where the driver's BINDER_WRITE_READ command comes in. The binder_write_read structure is:

```c
struct binder_write_read {
    binder_size_t    write_size;     /* bytes to write */
    binder_size_t    write_consumed; /* bytes consumed by driver */
    binder_uintptr_t write_buffer;
    binder_size_t    read_size;      /* bytes to read */
    binder_size_t    read_consumed;  /* bytes consumed by driver */
    binder_uintptr_t read_buffer;
};
```

```c
static int binder_ioctl_write_read(struct file *filp,
                                   unsigned int cmd, unsigned long arg,
                                   struct binder_thread *thread)
{
    /* ... */
    if (copy_from_user(&bwr, ubuf, sizeof(bwr))) {
        ret = -EFAULT;
        goto out;
    } /* first copy: bring the proxy side's binder_write_read into the kernel */

    if (bwr.write_size > 0) {
        ret = binder_thread_write(proc, thread, /* process the write buffer */
                                  bwr.write_buffer,
                                  bwr.write_size,
                                  &bwr.write_consumed);
        /* ... */
    }
    /* ... */
}
```

Specifically, binder_thread_write handles the scatter-gather transaction commands like this:

```c
static int binder_thread_write(struct binder_proc *proc,
                               struct binder_thread *thread,
                               binder_uintptr_t binder_buffer, size_t size,
                               binder_size_t *consumed)
{
    /* ... */
    case BC_TRANSACTION_SG:
    case BC_REPLY_SG: {
        struct binder_transaction_data_sg tr;

        if (copy_from_user(&tr, ptr, sizeof(tr)))
            return -EFAULT;
        ptr += sizeof(tr);
        binder_transaction(proc, thread, &tr.transaction_data,
                           cmd == BC_REPLY_SG, tr.buffers_size);
        break;
    }
    /* ... */
}
```
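binder_thread_write walks the write buffer as a sequence of records: it reads a command, reads that command's payload, and updates `*consumed` after each one. The sketch below models that loop. It is hypothetical: `kIncRefsSk` and `kTransactionSk` are placeholder codes, the payload sizes are simplified, and the truncation check exists only in the sketch. As far as I know, the real driver returns -EINVAL for an unknown command.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <string>
#include <vector>

// Placeholder command codes (not the real BC_* encodings).
enum : std::uint32_t { kIncRefsSk = 1, kTransactionSk = 2 };

// Hypothetical model of binder_thread_write's loop over a write buffer.
int thread_write_sketch(const std::vector<std::uint8_t>& buf, std::size_t* consumed,
                        std::vector<std::string>* log) {
    std::size_t pos = *consumed;
    while (pos < buf.size()) {
        std::uint32_t cmd;
        if (buf.size() - pos < sizeof(cmd)) return -1;  // truncated input (sketch-only check)
        std::memcpy(&cmd, buf.data() + pos, sizeof(cmd));
        pos += sizeof(cmd);
        std::size_t payload;
        switch (cmd) {
            case kIncRefsSk:     payload = sizeof(std::uint32_t);     log->push_back("INCREFS"); break;
            case kTransactionSk: payload = 3 * sizeof(std::uint32_t); log->push_back("TRANSACTION"); break;
            default:             return -1;  // unknown command
        }
        if (buf.size() - pos < payload) return -1;
        pos += payload;
        *consumed = pos;  // progress is recorded after every command
    }
    return 0;
}

// Test helper: append a 32-bit value in host byte order.
void put_u32(std::vector<std::uint8_t>& b, std::uint32_t v) {
    std::uint8_t tmp[4];
    std::memcpy(tmp, &v, sizeof(tmp));
    b.insert(b.end(), tmp, tmp + 4);
}
```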

The write path does four main things:

- It fills in a binder_transaction.
- It copies the data into a buffer allocated in the target process.
- It translates any binder objects the data carries, for example with binder_translate_handle.
- It hands the transaction to the target thread.

The binder_transaction structure is:

```c
struct binder_transaction {
    int debug_id;
    struct binder_work work;
    struct binder_thread *from;              /* sending thread */
    struct binder_transaction *from_parent;
    struct binder_proc *to_proc;             /* here: hwservicemanager's proc */
    struct binder_thread *to_thread;         /* hwservicemanager's thread */
    struct binder_transaction *to_parent;
    unsigned need_reply:1;
    /* unsigned is_dead:1; */ /* not used at the moment */

    struct binder_buffer *buffer;            /* allocated in the target; the single user-to-kernel copy lands here */
    unsigned int code;
    unsigned int flags;
    struct binder_priority priority;
    struct binder_priority saved_priority;
    bool set_priority_called;
    kuid_t sender_euid;
    /**
     * @lock:  protects @from, @to_proc, and @to_thread
     *
     * @from, @to_proc, and @to_thread can be set to NULL
     * during thread teardown
     */
    spinlock_t lock;
};
```

```c
case BINDER_TYPE_HANDLE:
case BINDER_TYPE_WEAK_HANDLE: {
    struct flat_binder_object *fp;

    fp = to_flat_binder_object(hdr);
    ret = binder_translate_handle(fp, t, thread);
    if (ret < 0) {
        return_error = BR_FAILED_REPLY;
        return_error_param = ret;
        return_error_line = __LINE__;
        goto err_translate_failed;
    }
} break;
```

binder_translate_handle looks up the handle and rewrites the object's handle and cookie fields accordingly. The flat_binder_object structure is:

```c
struct flat_binder_object {
    struct binder_object_header hdr;
    __u32 flags;

    /* 8 bytes of data. */
    union {
        binder_uintptr_t binder; /* local object */
        __u32 handle;            /* remote object */
    };

    /* extra data associated with local object */
    binder_uintptr_t cookie;
};
```

The most important step is binder_proc_transaction. It adds the binder_transaction to the target thread's todo list and wakes up hwservicemanager, which completes the write:

```c
binder_enqueue_work_ilocked(&t->work, &target_thread->todo);
binder_inner_proc_unlock(target_proc);
wake_up_interruptible_sync(&target_thread->wait);
```

Once hwservicemanager has added the service, it sends its reply through the same write path. The reply is added to the registering thread's todo list and that thread is woken. By then the registering thread should be blocked in read.

In binder_ioctl_write_read, once the write has finished, the caller moves on to the read half and blocks there until something comes back:

```c
static int binder_ioctl_write_read(struct file *filp,
                                   unsigned int cmd, unsigned long arg,
                                   struct binder_thread *thread)
{
    /* ... */
    if (bwr.write_size > 0) {
        ret = binder_thread_write(proc, thread,
                                  bwr.write_buffer,
                                  bwr.write_size,
                                  &bwr.write_consumed);
        trace_binder_write_done(ret);
        if (ret < 0) {
            bwr.read_consumed = 0;
            if (copy_to_user(ubuf, &bwr, sizeof(bwr)))
                ret = -EFAULT;
            goto out;
        }
    }
    if (bwr.read_size > 0) {
        ret = binder_thread_read(proc, thread, bwr.read_buffer,
                                 bwr.read_size,
                                 &bwr.read_consumed,
                                 filp->f_flags & O_NONBLOCK);
        trace_binder_read_done(ret);
        binder_inner_proc_lock(proc);
        if (!binder_worklist_empty_ilocked(&proc->todo))
            binder_wakeup_proc_ilocked(proc);
        binder_inner_proc_unlock(proc);
        if (ret < 0) {
            if (copy_to_user(ubuf, &bwr, sizeof(bwr)))
                ret = -EFAULT;
            goto out;
        }
    }
    /* ... */
}
```

binder_thread_read puts the current thread on thread->wait until it is woken. The waiting loop is binder_wait_for_work:

```c
static int binder_wait_for_work(struct binder_thread *thread,
                                bool do_proc_work)
{
    DEFINE_WAIT(wait);
    struct binder_proc *proc = thread->proc;
    int ret = 0;

    freezer_do_not_count();
    binder_inner_proc_lock(proc);
    for (;;) {
        prepare_to_wait(&thread->wait, &wait, TASK_INTERRUPTIBLE);
        if (binder_has_work_ilocked(thread, do_proc_work))
            break;
        if (do_proc_work)
            list_add(&thread->waiting_thread_node,
                     &proc->waiting_threads);
        binder_inner_proc_unlock(proc);
        schedule();
        binder_inner_proc_lock(proc);
        list_del_init(&thread->waiting_thread_node);
        if (signal_pending(current)) {
            ret = -ERESTARTSYS;
            break;
        }
    }
    finish_wait(&thread->wait, &wait);
    binder_inner_proc_unlock(proc);
    freezer_count();

    return ret;
}
```
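In user-space terms, the enqueue-and-wake on the sender side and this wait loop on the receiver side form a classic condition-variable handshake. The sketch below is a hypothetical analogy, not kernel code. `std::mutex` stands in for the proc's inner lock, `std::condition_variable` for `thread->wait`, and a `std::deque` for `thread->todo`. The predicate passed to `wait` plays the role of `binder_has_work_ilocked`, re-checked after every wakeup. Signals and the proc-wide waiting_threads list are not modeled.

```cpp
#include <cassert>
#include <condition_variable>
#include <deque>
#include <mutex>
#include <string>
#include <utility>

// Hypothetical model of a binder_thread's todo list plus its wait queue.
struct ThreadTodoSketch {
    std::mutex lock;               // stands in for the proc's inner lock
    std::condition_variable wait;  // stands in for thread->wait
    std::deque<std::string> todo;  // stands in for thread->todo

    // Sender side, like binder_enqueue_work_ilocked + wake_up_interruptible_sync.
    void enqueue_and_wake(std::string work) {
        {
            std::lock_guard<std::mutex> g(lock);
            todo.push_back(std::move(work));
        }
        wait.notify_one();
    }

    // Receiver side, like binder_wait_for_work + dequeuing the head of the list:
    // sleep until there is work, re-checking the condition after each wakeup.
    std::string wait_and_dequeue() {
        std::unique_lock<std::mutex> g(lock);
        wait.wait(g, [this] { return !todo.empty(); });
        std::string w = std::move(todo.front());
        todo.pop_front();
        return w;
    }
};
```

In a real run, the receiver calls `wait_and_dequeue` on its own thread and sleeps until the sender's `enqueue_and_wake`. If work is already queued, it returns immediately without sleeping.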

Step 3: hwservicemanager adds the service

Meanwhile hwservicemanager is also blocked in binder_thread_read. When the registering thread wakes it, it dequeues the binder_transaction:

```c
w = binder_dequeue_work_head_ilocked(list);
/* ... */
switch (w->type) {
case BINDER_WORK_TRANSACTION: {
    binder_inner_proc_unlock(proc);
    t = container_of(w, struct binder_transaction, work);
} break;
/* ... */
}
```

With the binder_transaction in hand, it fills in the remaining fields:

```c
t->buffer->allow_user_free = 1;
if (cmd == BR_TRANSACTION && !(t->flags & TF_ONE_WAY)) {
    binder_inner_proc_lock(thread->proc);
    t->to_parent = thread->transaction_stack;
    t->to_thread = thread;
    thread->transaction_stack = t;
    binder_inner_proc_unlock(thread->proc);
} /* ... */
```

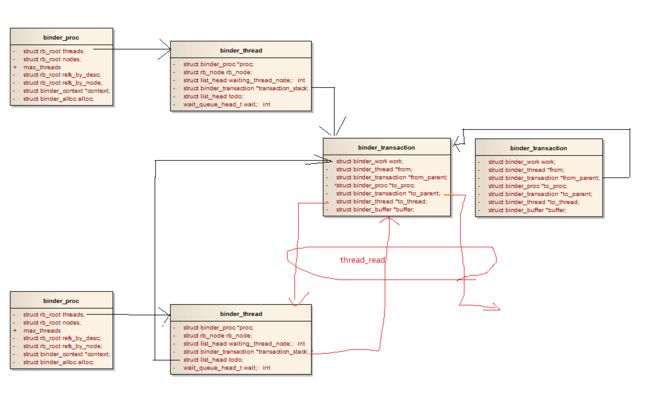

This sets up the relationships shown in the figure: hwservicemanager's transaction_stack now also points to this binder_transaction.

Next, a binder_transaction_data is initialized from the binder_transaction:

```c
struct binder_transaction_data {
    /* The first two are only used for bcTRANSACTION and brTRANSACTION,
     * identifying the target and contents of the transaction.
     */
    union {
        /* target descriptor of command transaction */
        __u32 handle;
        /* target descriptor of return transaction */
        binder_uintptr_t ptr;
    } target;
    binder_uintptr_t cookie; /* target object cookie */
    __u32 code;              /* transaction command */

    /* General information about the transaction. */
    __u32 flags;
    pid_t sender_pid;
    uid_t sender_euid;
    binder_size_t data_size;    /* number of bytes of data */
    binder_size_t offsets_size; /* number of bytes of offsets */

    /* If this transaction is inline, the data immediately
     * follows here; otherwise, it ends with a pointer to
     * the data buffer.
     */
    union {
        struct {
            /* transaction data */
            binder_uintptr_t buffer;
            /* offsets from buffer to flat_binder_object structs */
            binder_uintptr_t offsets;
        } ptr;
        __u8 buf[8];
    } data;
};
```

```c
tr.target.ptr = target_node->ptr;
tr.cookie = target_node->cookie;
tr.code = t->code;
tr.flags = t->flags;
tr.data_size = t->buffer->data_size;
tr.offsets_size = t->buffer->offsets_size;
tr.data.ptr.buffer = (binder_uintptr_t)
    ((uintptr_t)t->buffer->data +
     binder_alloc_get_user_buffer_offset(&proc->alloc));
tr.data.ptr.offsets = tr.data.ptr.buffer +
    ALIGN(t->buffer->data_size,
          sizeof(void *));

ptr += sizeof(uint32_t);
if (copy_to_user(ptr, &tr, sizeof(tr))) {
    if (t_from)
        binder_thread_dec_tmpref(t_from);
    /* ... */
}
```
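The two pointer assignments above are plain arithmetic. In the kernel versions this code comes from, the buffer's pages are mapped both in the kernel and in the receiver's mmap area at a fixed distance (the user buffer offset). The receiver's address is therefore the kernel address plus that offset, with no second copy. The offsets array then starts right after the data, rounded up to pointer alignment. The helper names below are hypothetical. `align_up` reproduces what `ALIGN` computes for a power-of-two alignment.

```cpp
#include <cassert>
#include <cstdint>

// ALIGN(x, a) for a power-of-two a: round x up to the next multiple of a.
constexpr std::uint64_t align_up(std::uint64_t x, std::uint64_t a) {
    return (x + a - 1) & ~(a - 1);
}

struct UserPtrsSketch { std::uint64_t buffer; std::uint64_t offsets; };

// Hypothetical helper mirroring the two assignments in binder_thread_read.
UserPtrsSketch user_ptrs_sketch(std::uint64_t kernel_data, std::uint64_t user_offset,
                                std::uint64_t data_size, std::uint64_t ptr_size) {
    UserPtrsSketch p;
    p.buffer = kernel_data + user_offset;                  // same pages, receiver's view
    p.offsets = p.buffer + align_up(data_size, ptr_size);  // offsets array follows the data
    return p;
}
```

For instance, 13 bytes of data on a 64-bit system (`sizeof(void *) == 8`) place the offsets array 16 bytes after the start of the buffer.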

Finally, the data is returned to the reading thread, i.e. hwservicemanager:

```c
if (copy_to_user(ubuf, &bwr, sizeof(bwr))) {
    ret = -EFAULT;
    goto out;
}
```

Back in user space, hwservicemanager's thread reads the binder_transaction_data and dispatches it:

```cpp
if (tr.target.ptr) { // ordinary HIDL servers take this branch
    // We only have a weak reference on the target object, so we must first try to
    // safely acquire a strong reference before doing anything else with it.
    if (reinterpret_cast<RefBase::weakref_type*>(
            tr.target.ptr)->attemptIncStrong(this)) {
        error = reinterpret_cast<BHwBinder*>(tr.cookie)->transact(tr.code, buffer,
                &reply, tr.flags, reply_callback);
        reinterpret_cast<BHwBinder*>(tr.cookie)->decStrong(this);
    } else {
        error = UNKNOWN_TRANSACTION;
    }
} else {
    // target.ptr == 0: hwservicemanager's context object takes this branch
    error = mContextObject->transact(tr.code, buffer, &reply, tr.flags, reply_callback);
}
```
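The dispatch rule is simple. A non-zero `target.ptr` means the transaction is for one of this process's own objects, whose address arrives in `cookie`. A zero `target.ptr` means it was sent to handle 0, so the context object handles it. The hypothetical sketch below models only that choice. `StubSketch` stands in for a `BHwBinder`. The weak-to-strong promotion through `attemptIncStrong` is omitted.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

// Hypothetical stand-in for a BHwBinder-style object that reports what it handled.
struct StubSketch {
    std::string name;
    std::string transact(std::uint32_t code) const { return name + ":" + std::to_string(code); }
};

// Models the target.ptr test in the user-space BR_TRANSACTION handling.
std::string dispatch_sketch(std::uint64_t target_ptr, std::uint64_t cookie, std::uint32_t code,
                            const StubSketch& context_object) {
    if (target_ptr != 0) {
        // The real code first promotes the weak reference in target.ptr and
        // fails with UNKNOWN_TRANSACTION if that is impossible; omitted here.
        const auto* local = reinterpret_cast<const StubSketch*>(static_cast<std::uintptr_t>(cookie));
        return local->transact(code);
    }
    return context_object.transact(code);  // handle 0 -> context object
}
```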

For hwservicemanager, mContextObject is the BnHwServiceManager set at startup, so this call lands in BnHwServiceManager::onTransact:

```cpp
::android::status_t BnHwServiceManager::onTransact(
        uint32_t _hidl_code,
        const ::android::hardware::Parcel &_hidl_data,
        ::android::hardware::Parcel *_hidl_reply,
        uint32_t _hidl_flags,
        TransactCallback _hidl_cb) {
    ::android::status_t _hidl_err = ::android::OK;

    switch (_hidl_code) {
        case 1 /* get */:
        {
            bool _hidl_is_oneway = _hidl_flags & 1 /* oneway */;
            if (_hidl_is_oneway != false) {
                return ::android::UNKNOWN_ERROR;
            }
            _hidl_err = ::android::hidl::manager::V1_0::BnHwServiceManager::_hidl_get(this, _hidl_data, _hidl_reply, _hidl_cb);
            break;
        }

        case 2 /* add */:
        {
            bool _hidl_is_oneway = _hidl_flags & 1 /* oneway */;
            if (_hidl_is_oneway != false) {
                return ::android::UNKNOWN_ERROR;
            }
            _hidl_err = ::android::hidl::manager::V1_0::BnHwServiceManager::_hidl_add(this, _hidl_data, _hidl_reply, _hidl_cb);
            break;
        }

        case 3 /* getTransport */:
        {
            bool _hidl_is_oneway = _hidl_flags & 1 /* oneway */;
            if (_hidl_is_oneway != false) {
                return ::android::UNKNOWN_ERROR;
            }
            _hidl_err = ::android::hidl::manager::V1_0::BnHwServiceManager::_hidl_getTransport(this, _hidl_data, _hidl_reply, _hidl_cb);
            break;
        }

        case 4 /* list */:
        {
            bool _hidl_is_oneway = _hidl_flags & 1 /* oneway */;
            if (_hidl_is_oneway != false) {
                return ::android::UNKNOWN_ERROR;
            }
            _hidl_err = ::android::hidl::manager::V1_0::BnHwServiceManager::_hidl_list(this, _hidl_data, _hidl_reply, _hidl_cb);
            break;
        }

        case 5 /* listByInterface */:
        {
            bool _hidl_is_oneway = _hidl_flags & 1 /* oneway */;
            if (_hidl_is_oneway != false) {
                return ::android::UNKNOWN_ERROR;
            }
            _hidl_err = ::android::hidl::manager::V1_0::BnHwServiceManager::_hidl_listByInterface(this, _hidl_data, _hidl_reply, _hidl_cb);
            break;
        }

        case 6 /* registerForNotifications */:
        {
            bool _hidl_is_oneway = _hidl_flags & 1 /* oneway */;
            if (_hidl_is_oneway != false) {
                return ::android::UNKNOWN_ERROR;
            }
            _hidl_err = ::android::hidl::manager::V1_0::BnHwServiceManager::_hidl_registerForNotifications(this, _hidl_data, _hidl_reply, _hidl_cb);
            break;
        }

        case 7 /* debugDump */:
        {
            bool _hidl_is_oneway = _hidl_flags & 1 /* oneway */;
            if (_hidl_is_oneway != false) {
                return ::android::UNKNOWN_ERROR;
            }
            _hidl_err = ::android::hidl::manager::V1_0::BnHwServiceManager::_hidl_debugDump(this, _hidl_data, _hidl_reply, _hidl_cb);
            break;
        }

        case 8 /* registerPassthroughClient */:
        {
            bool _hidl_is_oneway = _hidl_flags & 1 /* oneway */;
            if (_hidl_is_oneway != false) {
                return ::android::UNKNOWN_ERROR;
            }
            _hidl_err = ::android::hidl::manager::V1_0::BnHwServiceManager::_hidl_registerPassthroughClient(this, _hidl_data, _hidl_reply, _hidl_cb);
            break;
        }

        default:
        {
            return ::android::hidl::base::V1_0::BnHwBase::onTransact(
                    _hidl_code, _hidl_data, _hidl_reply, _hidl_flags, _hidl_cb);
        }
    }

    if (_hidl_err == ::android::UNEXPECTED_NULL) {
        _hidl_err = ::android::hardware::writeToParcel(
                ::android::hardware::Status::fromExceptionCode(::android::hardware::Status::EX_NULL_POINTER),
                _hidl_reply);
    }
    return _hidl_err;
}
```
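Stripped of the generated boilerplate, the switch above is a table lookup from transaction code to method. Each case first rejects oneway calls, because all eight IServiceManager methods listed are two-way. Unknown codes fall through to BnHwBase. The condensed sketch below is hypothetical. `kOkSketch` and `kUnknownErrorSketch` are placeholder values, not the real `android::OK` / `UNKNOWN_ERROR` constants.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

constexpr std::uint32_t kFlagOnewaySketch = 1;          // the "_hidl_flags & 1 /* oneway */" bit
constexpr int kOkSketch = 0;                            // placeholder status values
constexpr int kUnknownErrorSketch = -1;

// Hypothetical condensed form of the generated onTransact switch.
int on_transact_sketch(std::uint32_t code, std::uint32_t flags, std::string* handled) {
    static const char* const kMethods[] = {
        nullptr, "get", "add", "getTransport", "list", "listByInterface",
        "registerForNotifications", "debugDump", "registerPassthroughClient"};
    if (code >= 1 && code <= 8) {
        if (flags & kFlagOnewaySketch) return kUnknownErrorSketch;  // two-way methods only
        *handled = kMethods[code];
        return kOkSketch;
    }
    *handled = "BnHwBase";  // default: delegate to the base interface
    return kOkSketch;
}
```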

After the service has been added, the result is returned to the registering thread:

```cpp
if ((tr.flags & TF_ONE_WAY) == 0) {
    if (!reply_sent) {
        // Should have been a reply but there wasn't, so there
        // must have been an error instead.
        reply.setError(error);
        sendReply(reply, 0);
    } else {
        // ...
    }
}
```

sendReply writes a BC_REPLY_SG command to mOut and flushes it through waitForResponse:

```cpp
status_t IPCThreadState::sendReply(const Parcel& reply, uint32_t flags)
{
    status_t err;
    status_t statusBuffer;
    err = writeTransactionData(BC_REPLY_SG, flags, -1, 0, reply, &statusBuffer);
    if (err < NO_ERROR) return err;

    return waitForResponse(NULL, NULL);
}
```

In the driver, the reply again goes through binder_thread_write:

```c
case BC_TRANSACTION_SG:
case BC_REPLY_SG: {
    struct binder_transaction_data_sg tr;

    if (copy_from_user(&tr, ptr, sizeof(tr)))
        return -EFAULT;
    ptr += sizeof(tr);
    binder_transaction(proc, thread, &tr.transaction_data,
                       cmd == BC_REPLY_SG, tr.buffers_size);
    break;
}
```

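This time binder_transaction is called with reply set to true. It takes the transaction being answered from the top of the thread's transaction_stack and derives target_thread and target_proc from it:

```c
static void binder_transaction(struct binder_proc *proc,
                               struct binder_thread *thread,
                               struct binder_transaction_data *tr, int reply,
                               binder_size_t extra_buffers_size)
{
    /* ... */
    if (reply) {
        binder_inner_proc_lock(proc);
        in_reply_to = thread->transaction_stack;
        /* ... */
    }
    /* ... */
}
```

The rest is the same as in step 2. The reply is queued on the registering thread's todo list and that thread is woken. It then returns to user space with the registration result.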
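The transaction_stack is what lets a reply find its way back without carrying a handle. On a two-way BR_TRANSACTION, the receiver pushes the transaction. On BC_REPLY, it pops the top and routes the reply to that transaction's `from` thread. The hypothetical sketch below models only this bookkeeping. Its structs keep just the fields involved, and priority handling, error paths and the sender-side stack are omitted.

```cpp
#include <cassert>
#include <string>

struct ThreadSk;

// Simplified binder_transaction: who sent it and what it was stacked on.
struct TransactionSk {
    ThreadSk* from;            // sending thread
    TransactionSk* to_parent;  // previous top of the receiver's stack
};

struct ThreadSk {
    std::string name;
    TransactionSk* transaction_stack = nullptr;
};

// Receiver side of a two-way BR_TRANSACTION: push t onto the stack.
void on_receive_sketch(ThreadSk& receiver, TransactionSk& t) {
    t.to_parent = receiver.transaction_stack;
    receiver.transaction_stack = &t;
}

// Receiver side of BC_REPLY: the transaction being answered is on top of the
// stack; pop it and return the thread the reply must go to.
ThreadSk* reply_target_sketch(ThreadSk& receiver) {
    TransactionSk* in_reply_to = receiver.transaction_stack;
    if (!in_reply_to) return nullptr;  // nothing to reply to
    receiver.transaction_stack = in_reply_to->to_parent;
    return in_reply_to->from;
}
```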

Step 4: the client calls getService

4.1 The IBinder registered with hwservicemanager

```cpp
::android::status_t ILight::registerAsService(const std::string &serviceName) {
    ::android::hardware::details::onRegistration("android.hardware.light@2.0", "ILight", serviceName);

    const ::android::sp<::android::hidl::manager::V1_0::IServiceManager> sm
        = ::android::hardware::defaultServiceManager();
    if (sm == nullptr) {
        return ::android::INVALID_OPERATION;
    }
    ::android::hardware::Return<bool> ret = sm->add(serviceName.c_str(), this);
    return ret.isOk() && ret ? ::android::OK : ::android::UNKNOWN_ERROR;
}
```

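What gets registered is the ILight object itself, whose isRemote() returns false. _hidl_add converts it with toBinder before writing it into the Parcel:

```cpp
::android::sp<::android::hardware::IBinder> _hidl_binder = ::android::hardware::toBinder<
        ::android::hidl::base::V1_0::IBase>(service);
if (_hidl_binder.get() != nullptr) {
    _hidl_err = _hidl_data.writeStrongBinder(_hidl_binder);
    // ...
}
```

Because the interface is local, toBinder creates a new BnHwLight for the ILight and returns it as an IBinder that points to the BnHwLight.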


After the driver's translation, hwservicemanager receives a BINDER_TYPE_HANDLE object. Its handle is the desc of the new binder_ref:

```c
if (fp->hdr.type == BINDER_TYPE_BINDER)
    fp->hdr.type = BINDER_TYPE_HANDLE;
else
    fp->hdr.type = BINDER_TYPE_WEAK_HANDLE;
fp->binder = 0;
fp->handle = rdata.desc;
fp->cookie = 0;
```

BnHwServiceManager::_hidl_add reads it back out:

```cpp
::android::sp<::android::hardware::IBinder> _hidl_binder;
_hidl_err = _hidl_data.readNullableStrongBinder(&_hidl_binder);
// ...
service = ::android::hardware::fromBinder<::android::hidl::base::V1_0::IBase,::android::hidl::base::V1_0::BpHwBase,::android::hidl::base::V1_0::BnHwBase>(_hidl_binder);
```

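readNullableStrongBinder unflattens the object into _hidl_binder. A BINDER_TYPE_BINDER object yields the local object stored in cookie, and a handle yields a proxy.

The excerpt below is the weak-reference overload of unflatten_binder. The strong overload calls getStrongProxyForHandle instead, as shown in 4.2.

```cpp
status_t unflatten_binder(const sp<ProcessState>& proc,
                          const Parcel& in, wp<IBinder>* out)
{
    const flat_binder_object* flat = in.readObject();

    if (flat) {
        switch (flat->hdr.type) {
            case BINDER_TYPE_BINDER:
                *out = reinterpret_cast<IBinder*>(flat->cookie);
                return finish_unflatten_binder(NULL, *flat, in);
            case BINDER_TYPE_HANDLE:
            case BINDER_TYPE_WEAK_HANDLE:
                *out = proc->getWeakProxyForHandle(flat->handle);
                return finish_unflatten_binder(
                    static_cast<BpHwBinder*>(out->unsafe_get()), *flat, in);
            // ...
        }
    }
    // ...
}
```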

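For a handle, this creates a new BpHwBinder.

In fromBinder, localBinder() of this proxy is null, so the proxy is wrapped as new BpHwBase(BpHwBinder). What hwservicemanager actually stores is therefore a BpHwBase.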
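Taken together, unflatten_binder and fromBinder make one decision on the receiving side. Either the object is one of ours, recovered from `cookie`, or it is only a handle and must be wrapped in a proxy. The hypothetical sketch below models that decision with plain values. The `UfFlat` and `UfResult` types are made up, and the strings stand in for the real BnHw*/BpHw* objects.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

enum class UfType { Binder, Handle };

// Simplified flat_binder_object as seen by the receiver.
struct UfFlat { UfType type; std::uint32_t handle; std::uint64_t cookie; };

// Result of unflattening: our own object, or a handle that needs a proxy.
struct UfResult {
    bool local;
    std::uintptr_t object;  // valid when local: the pointer that travelled in cookie
    std::uint32_t handle;   // valid when !local
};

// Hypothetical model of unflatten_binder's two main cases.
UfResult unflatten_sketch(const UfFlat& flat) {
    if (flat.type == UfType::Binder)
        return UfResult{true, static_cast<std::uintptr_t>(flat.cookie), 0};
    return UfResult{false, 0, flat.handle};
}

// Hypothetical model of fromBinder's choice: a local binder is used as is,
// a remote one is wrapped (BpHwBase around a BpHwBinder for the handle).
std::string from_binder_sketch(const UfResult& b) {
    if (b.local) return "local stub";
    return "BpHwBase(BpHwBinder handle " + std::to_string(b.handle) + ")";
}
```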

bool _hidl_out_success = static_cast

4.2 The getService flow

When a client asks for the service, hwservicemanager looks up the stored BpHwBase. BnHwServiceManager::_hidl_get then writes it into the reply:

```cpp
// BnHwServiceManager::_hidl_get
{
    // ...
    ::android::sp<::android::hardware::IBinder> _hidl_binder = ::android::hardware::toBinder<
            ::android::hidl::base::V1_0::IBase>(_hidl_out_service);
    if (_hidl_binder.get() != nullptr) {
        _hidl_err = _hidl_reply->writeStrongBinder(_hidl_binder);
    }
    // ...
}
```

Because this object is a proxy, toBinder returns its BpHwBinder. writeStrongBinder then flattens it as a BINDER_TYPE_HANDLE object carrying the handle value:

```cpp
status_t flatten_binder(const sp<ProcessState>& /*proc*/,
                        const sp<IBinder>& binder, Parcel* out)
{
    flat_binder_object obj;
    // ...
    if (binder != NULL) {
        BHwBinder *local = binder->localBinder();
        if (!local) {
            BpHwBinder *proxy = binder->remoteBinder();
            if (proxy == NULL) {
                ALOGE("null proxy");
            }
            const int32_t handle = proxy ? proxy->handle() : 0;
            obj.hdr.type = BINDER_TYPE_HANDLE;
            obj.flags = FLAT_BINDER_FLAG_ACCEPTS_FDS;
            obj.binder = 0; /* Don't pass uninitialized stack data to a remote process */
            obj.handle = handle;
            obj.cookie = 0;
        }
        // ...
    }
    // ...
}
```

In the driver, the BINDER_TYPE_HANDLE object is handled by binder_translate_handle:

```c
case BINDER_TYPE_HANDLE:
case BINDER_TYPE_WEAK_HANDLE: {
    struct flat_binder_object *fp;

    fp = to_flat_binder_object(hdr);
    ret = binder_translate_handle(fp, t, thread);
    /* ... */
} break;
```

```c
static int binder_translate_handle(struct flat_binder_object *fp,
                                   struct binder_transaction *t,
                                   struct binder_thread *thread)
{
    /* ... */
    if (node->proc == target_proc) {
        /* ... */
    } else {
        /* Here the two differ (the node belongs to the HIDL service, the
         * target is the client), so give target_proc its own binder_ref. */
        int ret;
        struct binder_ref_data dest_rdata;

        binder_node_unlock(node);
        ret = binder_inc_ref_for_node(target_proc, node,
                fp->hdr.type == BINDER_TYPE_HANDLE,
                NULL, &dest_rdata);
        if (ret)
            goto done;

        fp->binder = 0;
        fp->handle = dest_rdata.desc;
        fp->cookie = 0;
        trace_binder_transaction_ref_to_ref(t, node, &src_rdata,
                                            &dest_rdata);
    }
    /* ... */
}
```

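So what reaches the client in user space is a newly allocated handle bound to the server's node. Once the transfer completes, the client's proxy can talk to the HIDL service's node through that handle.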
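binder_translate_handle has two outcomes, and both can be modeled in a few lines. If the node behind the sender's handle belongs to the receiver, the receiver gets its own local object back (ptr and cookie). Otherwise the receiver gets a handle of its own for the same node. The sketch below is hypothetical: the `ProcSk` tables, `get_or_add_ref` and the `false` return for an unknown handle are simplifications, and reference counting is left out.

```cpp
#include <cassert>
#include <cstdint>
#include <map>

enum class KindSk { Binder, Handle };

struct NodeSk { int owner_pid; std::uint64_t ptr; std::uint64_t cookie; };

// Simplified flat_binder_object (binder/handle are a union in the real struct).
struct ObjSk { KindSk kind; std::uint64_t binder; std::uint32_t handle; std::uint64_t cookie; };

// Per-process handle table (refs_by_desc) plus reverse index (refs_by_node), simplified.
struct ProcSk {
    int pid;
    std::map<std::uint32_t, NodeSk*> refs_by_desc;
    std::map<NodeSk*, std::uint32_t> refs_by_node;

    std::uint32_t get_or_add_ref(NodeSk* n) {
        auto it = refs_by_node.find(n);
        if (it != refs_by_node.end()) return it->second;
        std::uint32_t desc = 1;
        while (refs_by_desc.count(desc)) ++desc;  // smallest unused handle >= 1
        refs_by_desc[desc] = n;
        refs_by_node[n] = desc;
        return desc;
    }
};

// Hypothetical model of binder_translate_handle.
bool translate_handle_sketch(ObjSk& fp, ProcSk& sender, ProcSk& target) {
    auto it = sender.refs_by_desc.find(fp.handle);
    if (it == sender.refs_by_desc.end()) return false;  // unknown handle: transaction fails
    NodeSk* node = it->second;
    if (node->owner_pid == target.pid) {  // node->proc == target_proc
        fp.kind = KindSk::Binder;
        fp.binder = node->ptr;
        fp.cookie = node->cookie;
    } else {                              // give the receiver its own ref
        fp.binder = 0;
        fp.handle = target.get_or_add_ref(node);
        fp.cookie = 0;
    }
    return true;
}
```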

On the client side, BpHwServiceManager::_hidl_get reads the binder out of the reply:

```cpp
::android::hardware::Return<::android::sp<::android::hidl::base::V1_0::IBase>> BpHwServiceManager::_hidl_get(/* ... */)
{
    // ...
    ::android::sp<::android::hardware::IBinder> _hidl_binder;
    _hidl_err = _hidl_reply.readNullableStrongBinder(&_hidl_binder);
    if (_hidl_err != ::android::OK) { goto _hidl_error; }
    // ...
}
```

unflatten_binder then handles the BINDER_TYPE_HANDLE case:

```cpp
case BINDER_TYPE_HANDLE:
    *out = proc->getStrongProxyForHandle(flat->handle);
    return finish_unflatten_binder(
        static_cast<BpHwBinder*>(out->get()), *flat, in);
```

getStrongProxyForHandle creates a new BpHwBinder for the handle:

```cpp
sp<IBinder> ProcessState::getStrongProxyForHandle(int32_t handle)
{
    sp<IBinder> result;

    AutoMutex _l(mLock);

    handle_entry* e = lookupHandleLocked(handle);

    if (e != NULL) {
        // We need to create a new BpHwBinder if there isn't currently one, OR we
        // are unable to acquire a weak reference on this current one. See comment
        // in getWeakProxyForHandle() for more info about this.
        IBinder* b = e->binder;
        if (b == NULL || !e->refs->attemptIncWeak(this)) {
            b = new BpHwBinder(handle);
            // ...
        }
        // ...
    }
    return result;
}
```
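The handle table behaves like a cache with one slot per handle. An existing proxy is reused while it is still alive, and a new one is created when the slot is empty or the old proxy is going away. The sketch below approximates that with `std::weak_ptr::lock` in place of `attemptIncWeak`. `HandleTableSketch` and `ProxySk2` are hypothetical names. The table grows on demand, as lookupHandleLocked does.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <memory>
#include <vector>

struct ProxySk2 { std::int32_t handle; };  // stands in for BpHwBinder

// Hypothetical model of ProcessState's handle table: weak slots, so the table
// itself does not keep proxies alive.
class HandleTableSketch {
public:
    std::shared_ptr<ProxySk2> get_strong_proxy(std::int32_t handle) {
        if (handle < 0) return nullptr;
        const std::size_t idx = static_cast<std::size_t>(handle);
        if (idx >= entries_.size()) entries_.resize(idx + 1);  // grow on demand
        std::weak_ptr<ProxySk2>& e = entries_[idx];
        if (auto existing = e.lock()) return existing;         // reuse a live proxy
        auto fresh = std::make_shared<ProxySk2>(ProxySk2{handle});  // "new BpHwBinder(handle)"
        e = fresh;
        return fresh;
    }

private:
    std::vector<std::weak_ptr<ProxySk2>> entries_;
};
```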

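5 Summary

1) HIDL service: writeStrongBinder flattens the local BnHwLight as a binder object (BINDER_TYPE_BINDER, or BINDER_TYPE_WEAK_BINDER for a weak reference). The driver creates a binder_node on the HIDL service side, where node->proc is the service's own proc. It also creates a ref to that node in target_proc, i.e. hwservicemanager's proc.

2) After the driver finishes, hwservicemanager receives the handle of a newly allocated binder_ref:

```c
ret = binder_inc_ref_for_node(target_proc, node,
        fp->hdr.type == BINDER_TYPE_BINDER,
        &thread->todo, &rdata);
```

3) hwservicemanager takes this handle and registers new BpHwBase(BpHwBinder) for it.

4) The HIDL client calls get.

5) hwservicemanager writes the proxy with writeStrongBinder, producing a BINDER_TYPE_HANDLE object, and sends it back with sendReply(reply, 0). This again goes through the binder write path. This time, however, the driver allocates a binder_ref in the HIDL client's proc that points to the same node. The handle returned to the HIDL client is therefore valid in the client's own process.

6) The HIDL client uses this handle to create its BpHwBinder.

I found a diagram online that explains this quite clearly.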