Building a web search engine in a JDK 1.8 environment.

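The basic functions of a search engine are text acquisition, text transformation, index creation, user interaction, ranking, and evaluation. Building a search engine starts from these same functions.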

Frameworks used: Crawler4j, Lucene

- Text acquisition

This step crawls data from the web: a web crawler finds and downloads pages by following the hyperlinks on pages it has already fetched.
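The link-following idea can be sketched without any network access. The example below is only an illustration, not how Crawler4j is implemented: the class name `LinkFollowSketch` and the in-memory link map are assumptions made for this sketch, and a real crawler would download each page where the comment indicates.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Queue;
import java.util.Set;

class LinkFollowSketch {

    // Visits pages breadth-first from the seed, following every out-link exactly once.
    static List<String> crawl(String seed, Map<String, List<String>> links) {
        List<String> visited = new ArrayList<>();
        Set<String> seen = new HashSet<>();
        Queue<String> frontier = new ArrayDeque<>();
        frontier.add(seed);
        seen.add(seed);
        while (!frontier.isEmpty()) {
            String page = frontier.remove();
            visited.add(page); // a real crawler would download and store the page here
            for (String next : links.getOrDefault(page, Collections.<String>emptyList())) {
                if (seen.add(next)) { // add() returns false for pages already queued
                    frontier.add(next);
                }
            }
        }
        return visited;
    }

    public static void main(String[] args) {
        // Hypothetical site structure: page -> links found on that page.
        Map<String, List<String>> links = new HashMap<>();
        links.put("/", Arrays.asList("/news", "/about"));
        links.put("/news", Arrays.asList("/", "/news/1"));
        links.put("/about", Arrays.asList("/"));
        System.out.println(crawl("/", links)); // prints [/, /news, /about, /news/1]
    }
}
```

The `seen` set is what keeps the crawl from looping forever when pages link back to each other.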

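Because disk space was limited, Python was not set up, and this is only a simple implementation, I chose the lightweight Crawler4j as the crawling framework. First, create a Maven project in IntelliJ IDEA. Crawler4j: https://github.com/yasserg/crawler4j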

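Following the code provided in the Git repository, the crawler needs the classes below (using the university website http://www.cumtb.edu.cn/ as the example).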

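To keep things simple, only each page's title and link are crawled for now and saved to a txt file, which is used later to build the index.

[In a proper setup, this data should be stored in a database.]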
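For reference, here is a minimal sketch of reading such a file back, assuming the layout the crawler code below produces: one title line followed by one URL line per page. The class name `CrawlDataReader` and the sample lines are hypothetical, and a title that itself contains a line break would break this simple format.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class CrawlDataReader {

    // Pairs up alternating title / URL lines; a trailing unpaired line is ignored.
    static List<String[]> parse(List<String> lines) {
        List<String[]> records = new ArrayList<>();
        for (int i = 0; i + 1 < lines.size(); i += 2) {
            records.add(new String[] {lines.get(i), lines.get(i + 1)});
        }
        return records;
    }

    public static void main(String[] args) {
        // Hypothetical sample content; in practice the lines would come from
        // Files.readAllLines(Paths.get("src/crawldata/data.txt")).
        List<String> lines = Arrays.asList(
                "Home", "http://www.cumtb.edu.cn/",
                "News", "http://www.cumtb.edu.cn/news");
        for (String[] record : parse(lines)) {
            System.out.println(record[1] + " -> " + record[0]);
        }
    }
}
```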

    import edu.uci.ics.crawler4j.crawler.Page;
    import edu.uci.ics.crawler4j.crawler.WebCrawler;
    import edu.uci.ics.crawler4j.parser.HtmlParseData;
    import edu.uci.ics.crawler4j.url.WebURL;

    import java.io.File;
    import java.io.FileWriter;
    import java.io.IOException;
    import java.util.regex.Pattern;

    public class Crawler extends WebCrawler {

        private static final Pattern FILTERS = Pattern.compile(".*(\\.(css|js|gif|jpg"
                + "|png|mp3|mp4|zip|gz))$");

        // crawler4j creates one Crawler instance per crawler thread, so the writer is
        // shared and opened only once; otherwise every instance would truncate data.txt.
        private static FileWriter writer;

        public Crawler() throws IOException {
            synchronized (Crawler.class) {
                if (writer == null) {
                    File file = new File("src/crawldata/data.txt");
                    file.getParentFile().mkdirs(); // make sure src/crawldata exists
                    writer = new FileWriter(file);
                }
            }
        }

        /**
         * This method receives two parameters. The first parameter is the page
         * in which we have discovered this new url and the second parameter is
         * the new url. You should implement this function to specify whether
         * the given url should be crawled or not (based on your crawling logic).
         * In this example, we are instructing the crawler to ignore urls that
         * have css, js, gif, ... extensions and to only accept urls that start
         * with "http://www.cumtb.edu.cn/". In this case, we didn't need the
         * referringPage parameter to make the decision.
         */
        @Override
        public boolean shouldVisit(Page referringPage, WebURL url) {
            String href = url.getURL().toLowerCase();
            return !FILTERS.matcher(href).matches()
                    && href.startsWith("http://www.cumtb.edu.cn/");
        }

        /**
         * This function is called when a page is fetched and ready
         * to be processed by your program.
         */
        @Override
        public void visit(Page page) {
            String url = page.getWebURL().getURL();
            if (page.getParseData() instanceof HtmlParseData) {
                HtmlParseData htmlParseData = (HtmlParseData) page.getParseData();
                try {
                    //System.out.println(">>>>>>>" + htmlParseData.getTitle() + '\n');
                    //System.out.println(">>>>>>>" + url + '\n');
                    // Write title and URL as one unit so lines from different threads do not interleave.
                    synchronized (Crawler.class) {
                        writer.write(htmlParseData.getTitle() + '\n');
                        writer.write(url + '\n');
                        writer.flush();
                    }
                } catch (Exception e) {
                    System.out.println("******************* An error occurred");
                    e.printStackTrace();
                }
            }
        }
    }
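The filtering rule in `shouldVisit` can be tried in isolation. The sketch below copies the same regular expression and URL prefix into a standalone class (the name `UrlFilterSketch` and the sample URLs are assumptions for illustration) so the behaviour is easy to check without running a crawl.

```java
import java.util.regex.Pattern;

class UrlFilterSketch {

    private static final Pattern FILTERS = Pattern.compile(".*(\\.(css|js|gif|jpg"
            + "|png|mp3|mp4|zip|gz))$");
    private static final String PREFIX = "http://www.cumtb.edu.cn/";

    // Same decision as Crawler.shouldVisit, but on a plain String.
    static boolean shouldVisit(String rawUrl) {
        String href = rawUrl.toLowerCase();
        return !FILTERS.matcher(href).matches() && href.startsWith(PREFIX);
    }

    public static void main(String[] args) {
        System.out.println(shouldVisit("http://www.cumtb.edu.cn/index.html")); // true
        System.out.println(shouldVisit("http://www.cumtb.edu.cn/logo.PNG"));   // false: image extension
        System.out.println(shouldVisit("http://example.com/index.html"));      // false: other site
    }
}
```

Note that the pattern only looks at the very end of the URL, so a static file requested with a query string (for example `style.css?v=1`) would still be visited.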

    import edu.uci.ics.crawler4j.crawler.CrawlConfig;
    import edu.uci.ics.crawler4j.crawler.CrawlController;
    import edu.uci.ics.crawler4j.fetcher.PageFetcher;
    import edu.uci.ics.crawler4j.robotstxt.RobotstxtConfig;
    import edu.uci.ics.crawler4j.robotstxt.RobotstxtServer;

    public class Controller {

        public void startCrawl(String crawlStorageFolder) throws Exception {
            int numberOfCrawlers = 7;

            CrawlConfig config = new CrawlConfig();
            config.setCrawlStorageFolder(crawlStorageFolder);

            /*
             * Instantiate the controller for this crawl.
             */
            PageFetcher pageFetcher = new PageFetcher(config);
            RobotstxtConfig robotstxtConfig = new RobotstxtConfig();
            RobotstxtServer robotstxtServer = new RobotstxtServer(robotstxtConfig, pageFetcher);
            CrawlController controller = new CrawlController(config, pageFetcher, robotstxtServer);

            /*
             * For each crawl, you need to add some seed urls. These are the first
             * URLs that are fetched and then the crawler starts following links
             * which are found in these pages
             */
            controller.addSeed("http://www.cumtb.edu.cn/");

            /*
             * Start the crawl. This is a blocking operation, meaning that your code
             * will reach the line after this only when crawling is finished.
             */
            controller.start(Crawler.class, numberOfCrawlers);
        }
    }

    public class test {

        public static void main(String[] args) throws Exception {
            new Controller().startCrawl("src/crawldata");
        }
    }

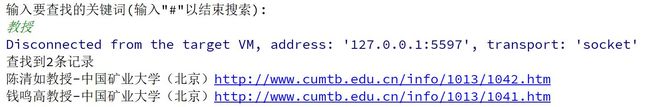

[Crawl results]

- Index creation

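The index is the core of a modern search engine. Building an index means processing the source data into index files that are very convenient to query.

Lucene uses a mechanism called an inverted index. An inverted index maintains a table of words/phrases, and for every word or phrase in that table a linked list records which documents contain it.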
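To make the idea concrete, here is a minimal in-memory sketch of an inverted index. It is not Lucene's implementation: the class name `InvertedIndexSketch` is made up for this example, and splitting on whitespace is an assumption that stands in for a real analyzer (Chinese text needs proper word segmentation).

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class InvertedIndexSketch {

    // Maps each lower-cased term to the ascending list of document ids that contain it.
    static Map<String, List<Integer>> build(List<String> docs) {
        Map<String, List<Integer>> index = new TreeMap<>();
        for (int id = 0; id < docs.size(); id++) {
            for (String term : docs.get(id).toLowerCase().split("\\s+")) {
                if (term.isEmpty()) {
                    continue;
                }
                List<Integer> postings = index.computeIfAbsent(term, k -> new ArrayList<>());
                // Record each document at most once per term.
                if (postings.isEmpty() || postings.get(postings.size() - 1) != id) {
                    postings.add(id);
                }
            }
        }
        return index;
    }

    public static void main(String[] args) {
        List<String> docs = Arrays.asList("campus news", "admission news", "campus map");
        System.out.println(build(docs));
        // prints {admission=[1], campus=[0, 2], map=[2], news=[0, 1]}
    }
}
```

Looking up a word is then a single map access instead of a scan over every document.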

Add the required packages to the pom:

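    <dependency>
        <groupId>org.apache.lucene</groupId>
        <artifactId>lucene-core</artifactId>
        <version>5.3.1</version>
    </dependency>
    <dependency>
        <groupId>org.apache.lucene</groupId>
        <artifactId>lucene-analyzers-common</artifactId>
        <version>5.3.1</version>
    </dependency>
    <dependency>
        <groupId>org.apache.lucene</groupId>
        <artifactId>lucene-queryparser</artifactId>
        <version>5.3.1</version>
    </dependency>
    <dependency>
        <groupId>org.apache.lucene</groupId>
        <artifactId>lucene-analyzers-smartcn</artifactId>
        <version>5.3.1</version>
    </dependency>
    <dependency>
        <groupId>org.apache.lucene</groupId>
        <artifactId>lucene-highlighter</artifactId>
        <version>5.3.1</version>
    </dependency>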

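Since there is not much content, the Crawler code is modified to also collect the crawled data in memory, and the index is built directly from it.

Add the following to Crawler (the two `writer` lines already exist; the `Indexer` lines are new):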

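    writer.write(url + '\n');
    writer.flush();
    // visit() runs on several crawler threads; add both entries under one lock
    // so that titles and URLs stay aligned by position.
    synchronized (Indexer.class) {
        Indexer.titles.add(htmlParseData.getTitle());
        Indexer.contents.add(url);
    }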

Create the index:

    import org.apache.lucene.analysis.Analyzer;
    import org.apache.lucene.analysis.cn.smart.SmartChineseAnalyzer;
    import org.apache.lucene.document.Document;
    import org.apache.lucene.document.Field;
    import org.apache.lucene.document.IntField;
    import org.apache.lucene.document.TextField;
    import org.apache.lucene.index.IndexWriter;
    import org.apache.lucene.index.IndexWriterConfig;
    import org.apache.lucene.store.Directory;
    import org.apache.lucene.store.FSDirectory;

    import java.nio.file.Paths;
    import java.util.ArrayList;
    import java.util.List;

    public class Indexer {

        // Filled by Crawler.visit(); titles.get(i) and contents.get(i) describe the same page.
        public static List<String> titles = new ArrayList<>();
        public static List<String> contents = new ArrayList<>();

        private static final String INDEX_DIR = "src/crawldata/lucene";

        public void createIndex() throws Exception {
            // SmartChineseAnalyzer segments Chinese text into words before indexing.
            Analyzer analyzer = new SmartChineseAnalyzer();
            Directory directory = FSDirectory.open(Paths.get(INDEX_DIR));
            IndexWriterConfig config = new IndexWriterConfig(analyzer);
            IndexWriter indexWriter = new IndexWriter(directory, config);

            // Build the index once crawling has finished.
            for (int i = 0; i < contents.size(); ++i) {
                Document document = new Document();
                document.add(new IntField("id", i, Field.Store.YES));
                document.add(new TextField("title", titles.get(i), Field.Store.YES));
                document.add(new TextField("url", contents.get(i), Field.Store.YES));
                indexWriter.addDocument(document);
            }

            indexWriter.commit();
            indexWriter.close();
        }
    }

Run the following to crawl the site and create the index:

    public static void main(String[] args) throws Exception {
        new Controller().startCrawl("src/crawldata");
        new Indexer().createIndex();
    }

- User interaction

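Once the documents are indexed, searches can run against the index. The search engine first parses the search keywords, then looks them up in the index it built, and finally returns the documents related to the keywords the user entered.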
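The parse, look up, return sequence can be sketched on a toy inverted index. This is a simplification and not Lucene's query parser or scoring: the class name `QueryLookupSketch` is hypothetical, the query is split on whitespace, and documents are ranked only by how many distinct query terms they contain.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;

class QueryLookupSketch {

    // Returns ids of documents containing at least one query term, ordered by
    // the number of distinct query terms matched (most first), ties broken by id.
    static List<Integer> search(Map<String, List<Integer>> index, String query) {
        Map<Integer, Integer> hits = new HashMap<>();
        // Step 1: parse the query into distinct lower-cased terms.
        for (String term : new LinkedHashSet<>(Arrays.asList(query.toLowerCase().trim().split("\\s+")))) {
            // Step 2: look each term up in the index and count matches per document.
            for (int id : index.getOrDefault(term, Collections.<Integer>emptyList())) {
                hits.merge(id, 1, Integer::sum);
            }
        }
        // Step 3: return the matching documents, best match first.
        List<Integer> ranked = new ArrayList<>(hits.keySet());
        ranked.sort((a, b) -> {
            int byHits = Integer.compare(hits.get(b), hits.get(a));
            return byHits != 0 ? byHits : Integer.compare(a, b);
        });
        return ranked;
    }

    public static void main(String[] args) {
        // Hypothetical index: term -> ids of the documents that contain it.
        Map<String, List<Integer>> index = new HashMap<>();
        index.put("campus", Arrays.asList(0, 2));
        index.put("news", Arrays.asList(0, 1));
        index.put("map", Arrays.asList(2));
        System.out.println(search(index, "campus news")); // prints [0, 1, 2]
        System.out.println(search(index, "library"));     // prints []
    }
}
```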

This is implemented in the console; no front end was written.

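    import java.util.Scanner;

    public class test {

        public static void main(String[] args) throws Exception {
            Scanner scanner = new Scanner(System.in);
            System.out.println("Enter a keyword to search for (enter \"#\" to quit):");
            String s = scanner.nextLine();
            // Compare string contents with equals(); != only compares object references.
            while (!"#".equals(s)) {
                // SearchBuilder is the search helper class; its code is not shown in this post.
                SearchBuilder.search("src/crawldata/lucene", s);
                System.out.println("Enter a keyword to search for (enter \"#\" to quit):");
                s = scanner.nextLine();
            }
        }
    }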

References

https://blog.csdn.net/yyhui95/article/details/72526193

https://www.ibm.com/developerworks/cn/java/j-lo-lucene1/index.html

https://blog.csdn.net/u014427391/article/details/80006401