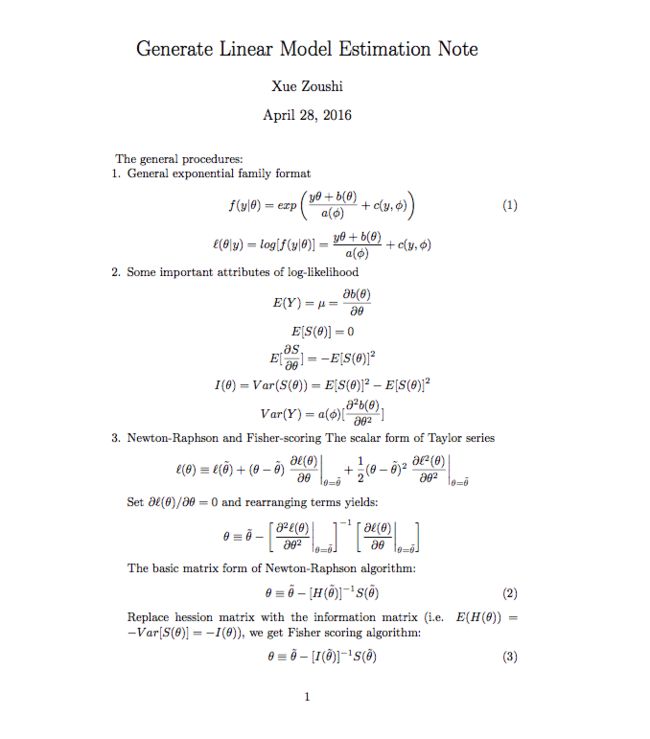

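A generalized linear model (GLM) uses a link function to relate a response variable, assumed to follow one of several distributions (normal, binomial, Poisson), to the explanatory variables. GLMs are very widely used. Below are my earlier notes, including the LaTeX code and the corresponding PDF file.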

LaTeX code:

'''

\documentclass{article}

\usepackage{paralist}

\begin{document}

\title{Generalized Linear Model Estimation Notes}

\author{Xue Zoushi }

\date{April 28, 2016}

\maketitle

The general procedures:

\begin{compactenum}

\item General exponential family format

\begin{equation}
f(y|\theta) = \exp \left( \frac{y\theta - b(\theta)}{a(\phi)} + c(y,\phi) \right)
\end{equation}

\[ \ell(\theta|y) = \log[f(y|\theta)] = \frac{y\theta - b(\theta)}{a(\phi)} + c(y,\phi) \]

\item Some important attributes of log-likelihood

\[ E(Y) = \mu = \frac{\partial b(\theta)} {\partial \theta} \]

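With the score function \( S(\theta) = \partial \ell(\theta|y) / \partial \theta \):

\[ E[S(\theta)] = 0 \]

\[ E\left[\frac{\partial S}{\partial \theta}\right] = -E[S(\theta)^2] \]

\[ I(\theta) = Var(S(\theta)) = E[S(\theta)^2] - \{E[S(\theta)]\}^2 = E[S(\theta)^2] \]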

\[ Var(Y) = a(\phi)[\frac{\partial^2 b(\theta)}{\partial \theta ^ 2}] \]
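
% Added illustration (an assumed special case, not part of the original derivation):
% a Poisson response checked against the mean and variance formulas above.
For example, assuming \( Y \sim \mathrm{Poisson}(\mu) \), the density
\( f(y|\mu) = \mu^{y} e^{-\mu} / y! \) can be written as

\[ f(y|\theta) = \exp \left( y\theta - e^{\theta} - \log y! \right), \]

which has the exponential family form \( \exp \{ (y\theta - b(\theta))/a(\phi) + c(y,\phi) \} \) with
\( \theta = \log \mu \), \( b(\theta) = e^{\theta} \), \( a(\phi) = 1 \), and \( c(y,\phi) = -\log y! \). Then

\[ E(Y) = \frac{\partial b(\theta)}{\partial \theta} = e^{\theta} = \mu, \qquad
Var(Y) = a(\phi) \frac{\partial^2 b(\theta)}{\partial \theta^2} = e^{\theta} = \mu . \]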

\item Newton-Raphson and Fisher-scoring

The scalar form of the second-order Taylor series expansion around \( \tilde{\theta} \):

\[ \ell(\theta) \approx \ell(\tilde{\theta}) + (\theta - \tilde{\theta})
\left. \frac{\partial \ell (\theta)}{\partial \theta} \right |_{\theta = \tilde{\theta}} +
\frac{1}{2} (\theta - \tilde{\theta})^2 \left. \frac{\partial^2 \ell (\theta)}{\partial \theta^2}
\right |_{\theta = \tilde{\theta}} \]

Differentiating with respect to \( \theta \), setting \( \partial \ell (\theta) / \partial \theta = 0 \), and rearranging terms yields:

\[ \theta \approx \tilde{\theta} -
\left [ \left . \frac{\partial ^2 \ell (\theta)}{\partial \theta ^2} \right |_{\theta=\tilde{\theta}}\right ]^{-1}
\left [ \left . \frac{\partial \ell (\theta)}{\partial \theta} \right |_{\theta=\tilde{\theta}} \right ] \]

The basic matrix form of the Newton-Raphson algorithm, with score vector \( S \) and Hessian matrix \( H \):

\begin{equation}
\theta \approx \tilde{\theta} - [H(\tilde{\theta})]^{-1} S(\tilde{\theta})
\end{equation}

Replacing the Hessian matrix with its expectation, the negative information matrix (i.e. \( E[H(\theta)] = -Var[S(\theta)] = -I(\theta) \)),
we get the Fisher scoring algorithm:

\begin{equation}
\theta \approx \tilde{\theta} + [I(\tilde{\theta})]^{-1} S(\tilde{\theta})
\end{equation}

\item Estimate the coefficient \( \beta \).

Scalar form

\begin{equation}
\frac{\partial \ell (\beta)}{\partial \beta} =
\frac{\partial \ell (\theta) }{ \partial \theta} \frac{\partial \theta }{ \partial \mu }
\frac{\partial \mu }{ \partial \eta } \frac{\partial \eta }{ \partial \beta }
\end{equation}

Some results:

\begin{itemize}

\item

\[ \frac{\partial \ell (\theta)}{\partial \theta} = \frac{y-\mu}{a(\phi)} \]

\item

\[\frac{\partial\theta}{\partial\mu}=\left(\frac{\partial\mu}{\partial\theta}\right)^{-1}=\frac{1}{V(\mu)}\]

\item

\[ \frac{\partial \eta}{\partial \beta} = \frac{\partial (X \beta)}{\partial \beta} = X \]

\item

\[ \frac{\partial \ell(\beta)}{\partial \beta} =
(y-\mu)\left( \frac{1}{Var(y)} \right)\left(\frac{\partial \mu}{\partial \eta} \right) X,
\quad \mbox{since } Var(y) = a(\phi) V(\mu) \]

\end{itemize}

Matrix form

\begin{equation}
\frac{\partial\ell(\theta)}{\partial \beta} = X^{'} D^{-1} V^{-1}(y-\mu)
\end{equation}

where $y$ is the $n\times1$ vector of observations, $\ell(\theta)$ is the $n\times 1$ vector of log-likelihood
values associated with observations, $V = diag[Var(y_{i})]$ is the $n \times n$ variance matrix of the
observations, $D=diag[\partial \eta_{i} / \partial \mu_{i}]$ is the $n \times n$ matrix of derivatives, and $\mu$
is the $n \times 1$ mean vector.\\

Letting $W=(DVD)^{-1}$, we get:

\[ S(\beta) = \frac{\partial \ell (\theta)}{\partial \beta}
= X^{'} D^{-1}V^{-1}(D^{-1}D)(y-\mu) = X^{'}WD(y-\mu) \]

\[ Var[S(\beta)] =X^{'}WD[Var(y-\mu)]DWX =X^{'}WDVDWX=X^{'}WX \]
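
% Added illustration (an assumed special case, not part of the original derivation):
% the matrices D, V, and W written out for a Poisson response with the log link.
For example, assuming a Poisson response with the log link \( \eta_{i} = \log \mu_{i} \), we have
\( \partial \eta_{i} / \partial \mu_{i} = 1/\mu_{i} \) and \( Var(y_{i}) = \mu_{i} \), so

\[ D = diag[1/\mu_{i}], \quad V = diag[\mu_{i}], \quad W = (DVD)^{-1} = diag[\mu_{i}], \]

\[ S(\beta) = X^{'}WD(y-\mu) = X^{'}(y-\mu), \qquad Var[S(\beta)] = X^{'}WX = X^{'} diag[\mu_{i}] X . \]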

\item Pseudo-Likelihood for GLM \\

Using the Fisher scoring equation yields
$\beta = \tilde{\beta} +(X^{'}\tilde{W}X)^{-1}X^{'}\tilde{W}\tilde{D}(y-\tilde{\mu})$,
where $\tilde{W}$, $\tilde{D}$, and $\tilde{\mu}$ are evaluated at $\tilde{\beta}$. Multiplying both sides by $X^{'}\tilde{W}X$ gives the GLM estimating equations:

\begin{equation}
X^{'}\tilde{W}X\beta = X^{'}\tilde{W}y^{*}
\end{equation}

where $y^{*} = X\tilde{\beta} + \tilde{D}(y-\tilde{\mu}) = \tilde{\eta} + \tilde{D}(y-\tilde{\mu})$, and
$y^{*}$ is called the pseudo-variable.

\[ E(y^{*}) =E[X\tilde{\beta} + \tilde{D}(y - \tilde{\mu})] = X\beta \]

\[ Var(y^{*}) = Var[X\tilde{\beta} + \tilde{D}(y - \tilde{\mu})] = \tilde{D}\tilde{V}\tilde{D}=\tilde{W}^{-1} \]

\begin{equation}
X^{'}[Var(y^{*})]^{-1}X\beta = X^{'}[Var(y^{*})]^{-1}y^{*} \Rightarrow X^{'}\tilde{W}X\beta = X^{'}\tilde{W}y^{*}
\end{equation}

\end{compactenum}

\end{document}

'''
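
The pseudo-variable form of Fisher scoring derived in the notes can also be sketched in code. The following is a minimal pure-Python sketch, assuming a Poisson response with the log link, so that D = diag(1/mu_i), V = diag(mu_i), and W = diag(mu_i). The function names solve_linear_system and fit_poisson_irls, the starting value eta = log(y + 0.5), and the toy data are illustrative assumptions and are not part of the original notes.

```python
import math


def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Work on an augmented copy [a | b] so the inputs are not modified.
    m = [list(a[i]) + [b[i]] for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def fit_poisson_irls(X, y, max_iter=50, tol=1e-10):
    """Fisher scoring for a Poisson GLM with log link, via the pseudo-variable y*."""
    p = len(X[0])
    beta = [0.0] * p
    # Assumed starting point: eta = log(y + 0.5), which avoids log(0) for zero counts.
    eta = [math.log(yi + 0.5) for yi in y]
    for _ in range(max_iter):
        mu = [math.exp(e) for e in eta]
        # Log link: D = diag(1 / mu) and V = diag(mu), so W = (D V D)^{-1} = diag(mu).
        w = mu
        # Pseudo-variable y* = eta + D (y - mu).
        y_star = [e + (yi - m) / m for e, yi, m in zip(eta, y, mu)]
        # Weighted normal equations X' W X beta = X' W y*.
        xtwx = [[sum(wi * row[j] * row[k] for wi, row in zip(w, X))
                 for k in range(p)] for j in range(p)]
        xtwy = [sum(wi * row[j] * ys for wi, row, ys in zip(w, X, y_star))
                for j in range(p)]
        new_beta = solve_linear_system(xtwx, xtwy)
        step = max(abs(nb - b) for nb, b in zip(new_beta, beta))
        beta = new_beta
        eta = [sum(xij * bj for xij, bj in zip(row, beta)) for row in X]
        if step < tol:
            break
    return beta


# Toy data (hypothetical): an intercept column plus one covariate.
X = [[1.0, 0.0], [1.0, 1.0], [1.0, 2.0], [1.0, 3.0], [1.0, 4.0]]
y = [1.0, 2.0, 4.0, 7.0, 12.0]
beta_hat = fit_poisson_irls(X, y)

# For the log link the score reduces to X'(y - mu); it should vanish at the estimate.
mu_hat = [math.exp(sum(x * b for x, b in zip(row, beta_hat))) for row in X]
score = [sum(row[j] * (yi - m) for row, yi, m in zip(X, y, mu_hat)) for j in range(2)]
print(beta_hat, score)
```

Each pass through the loop solves one weighted least-squares problem, which is why this scheme is often called iteratively reweighted least squares. The final score check is a simple way to confirm that the iteration has reached a root of the estimating equations.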

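I edited the LaTeX source in Emacs and then generated the PDF with the command pdflatex glm_estimation.tex; the generated file is in the file attachments of my cnblogs blog.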

Below is a screenshot of the generated PDF file:

Note: this post was migrated from my cnblogs article: http://www.cnblogs.com/xuezoushi/p/5461293.html