Table of Contents

- 1. Time and Media Representations

- 1.1. Representation of Assets

- 2. Representations of Time

- 2.0.1. CMTime Represents a Length of Time

- 2.0.1.1. Using CMTime

- 2.0.1.2. Special Values of CMTime

- 2.0.1.3. Representing CMTime as an Object

- 2.0.1.4. Epochs

- 2.0.2. CMTimeRange Represents a Time Range

- 2.0.2.1. Working with Time Ranges

- 2.0.2.2. Special Values of CMTimeRange

- 2.0.2.3. Representing a CMTimeRange Structure as an Object

- 2.1. Representations of Media

- 2.2. Converting CMSampleBuffer to a UIImage Object

Time and Media Representations

Time-based audiovisual data, such as a movie file or a video stream, is represented in the AV Foundation framework by AVAsset. Its structure dictates much of how the framework works. Several low-level data structures that AV Foundation uses to represent time and media, such as sample buffers, come from the Core Media framework.


Representation of Assets

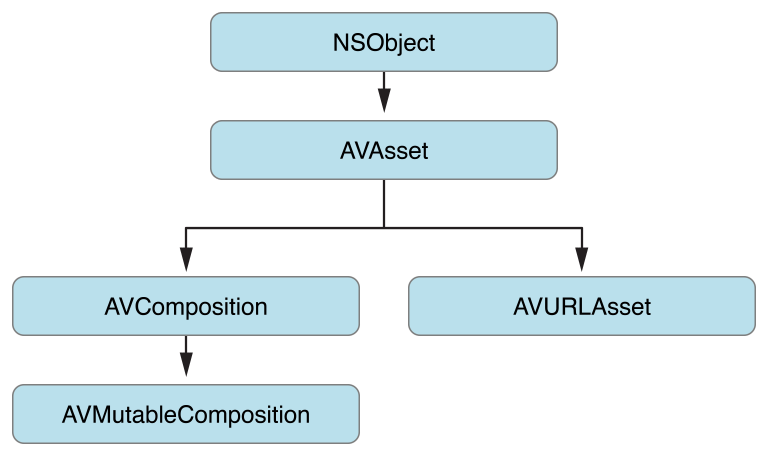

AVAsset is the core class in the AV Foundation framework. It provides a format-independent abstraction of time-based audiovisual data, such as a movie file or a video stream. The primary relationships are shown in Figure 6-1. In many cases, you work with one of its subclasses: You use the composition subclasses when you create new assets (see Editing), and you use AVURLAsset to create a new asset instance from media at a given URL (including assets from the MPMedia framework or the Asset Library framework—see Using Assets).


An asset contains a collection of tracks that are intended to be presented or processed together, each of a uniform media type, including (but not limited to) audio, video, text, closed captions, and subtitles. The asset object provides information about the whole resource, such as its duration or title, as well as hints for presentation, such as its natural size. Assets may also have metadata, represented by instances of AVMetadataItem.


A track is represented by an instance of AVAssetTrack, as shown in Figure 6-2. In a typical simple case, one track represents the audio component and another represents the video component; in a complex composition, there may be multiple overlapping tracks of audio and video.


A track has a number of properties, such as its type (video or audio), visual and/or audible characteristics (as appropriate), metadata, and timeline (expressed in terms of its parent asset). A track also has an array of format descriptions. The array contains CMFormatDescription objects (see CMFormatDescriptionRef), each of which describes the format of media samples referenced by the track. A track that contains uniform media (for example, all encoded using the same settings) provides an array with a count of 1.


A track may itself be divided into segments, represented by instances of AVAssetTrackSegment. A segment is a time mapping from the source to the asset track timeline.


Representations of Time

Time in AV Foundation is represented by primitive structures from the Core Media framework.


CMTime Represents a Length of Time

CMTime is a C structure that represents time as a rational number, with a numerator (an int64_t value), and a denominator (an int32_t timescale). Conceptually, the timescale specifies the fraction of a second each unit in the numerator occupies. Thus if the timescale is 4, each unit represents a quarter of a second; if the timescale is 10, each unit represents a tenth of a second, and so on. You frequently use a timescale of 600, because this is a multiple of several commonly used frame rates: 24 fps for film, 30 fps for NTSC (used for TV in North America and Japan), and 25 fps for PAL (used for TV in Europe). Using a timescale of 600, you can exactly represent any number of frames in these systems.

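To make the timescale arithmetic concrete, here is a minimal C sketch (C is a subset of the Objective-C used elsewhere in this document). `units_per_frame` is a hypothetical helper, not a Core Media function. It only checks whether one frame at a given rate lasts a whole number of timescale units, which is what "exactly represent" means here.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical helper (not a Core Media function): how many timescale units
   one frame lasts at a given frame rate. Returns false when a frame would not
   be a whole number of units, i.e. when frame times could not be exact. */
static bool units_per_frame(int32_t timescale, int32_t fps, int64_t *units_out)
{
    assert(timescale > 0 && fps > 0);
    if (timescale % fps != 0) {
        return false;
    }
    *units_out = timescale / fps;
    return true;
}
```

With a timescale of 600, a 24 fps frame lasts exactly 25 units, so frame n starts at value 25 * n. Frames at 25 fps and 30 fps last 24 and 20 units respectively.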

In addition to a simple time value, a CMTime structure can represent nonnumeric values: +infinity, -infinity, and indefinite. It can also indicate whether the time has been rounded at some point, and it maintains an epoch number.


Using CMTime

You create a time using CMTimeMake or one of the related functions such as CMTimeMakeWithSeconds (which allows you to create a time using a float value and specify a preferred timescale). There are several functions for time-based arithmetic and for comparing times, as illustrated in the following example:


```objc
#import <Foundation/Foundation.h>
#import <CoreMedia/CoreMedia.h>

int main(void) {
    @autoreleasepool {
        CMTime time1 = CMTimeMake(200, 2); // 200 half-seconds
        CMTime time2 = CMTimeMake(400, 4); // 400 quarter-seconds

        // time1 and time2 both represent 100 seconds, but using different timescales.
        if (CMTimeCompare(time1, time2) == 0) {
            NSLog(@"time1 and time2 are the same");
        }

        Float64 float64Seconds = 200.0 / 3;
        CMTime time3 = CMTimeMakeWithSeconds(float64Seconds, 3); // 66.66... seconds, stored as 200 third-seconds
        time3 = CMTimeMultiply(time3, 3);
        // time3 now represents 200 seconds; next subtract time1 (100 seconds).
        time3 = CMTimeSubtract(time3, time1);
        CMTimeShow(time3);

        if (CMTIME_COMPARE_INLINE(time2, ==, time3)) {
            NSLog(@"time2 and time3 are the same");
        }
    }
    return 0;
}
```

For a list of all the available functions, see CMTime Reference.

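The comparisons in the example succeed even though the timescales differ, because times are compared as rational numbers. The sketch below models that idea in plain C. It uses a hypothetical `rational_time` struct with cross-multiplication. It illustrates the arithmetic only; Core Media does not necessarily implement CMTimeCompare or CMTimeSubtract this way. It also assumes positive timescales and values small enough that the products fit in 64 bits.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical stand-in for the numeric part of a CMTime (not the real
   struct): the time in seconds is value / timescale. */
typedef struct {
    int64_t value;
    int32_t timescale;
} rational_time;

/* Returns -1, 0, or 1. Cross-multiplying compares the seconds each time
   represents, regardless of timescale. */
static int rational_time_compare(rational_time a, rational_time b)
{
    assert(a.timescale > 0 && b.timescale > 0);
    int64_t lhs = a.value * (int64_t)b.timescale;
    int64_t rhs = b.value * (int64_t)a.timescale;
    return (lhs > rhs) - (lhs < rhs);
}

/* a - b, expressed over the product of the two timescales. */
static rational_time rational_time_subtract(rational_time a, rational_time b)
{
    rational_time r;
    r.value = a.value * b.timescale - b.value * a.timescale;
    r.timescale = a.timescale * b.timescale;
    return r;
}
```

Replaying the example: 200/2 and 400/4 compare equal. Then (600/3) - (200/2) yields 600/6, which also equals 100 seconds.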

Special Values of CMTime

Core Media provides constants for special values: kCMTimeZero, kCMTimeInvalid, kCMTimePositiveInfinity, and kCMTimeNegativeInfinity. There are many ways in which a CMTime structure can represent, for example, an invalid time. To test whether a CMTime is valid or holds a nonnumeric value, use an appropriate macro, such as CMTIME_IS_INVALID, CMTIME_IS_POSITIVE_INFINITY, or CMTIME_IS_INDEFINITE.


```objc
CMTime myTime = <#Get a CMTime#>;
if (CMTIME_IS_INVALID(myTime)) {
    // Perhaps treat this as an error; display a suitable alert to the user.
}
```

You should not compare the value of an arbitrary CMTime structure with kCMTimeInvalid.

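The reason for that rule is that validity is recorded in a flag bit, so many different field combinations are all invalid. The hypothetical `model_time` below is not the real CMTime layout. It shows a time that is invalid but not field-for-field equal to the invalid constant, so only the flag test catches it. Macros such as CMTIME_IS_INVALID likewise test the flags rather than comparing whole structures.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical model (not the real CMTime): validity lives in one flag bit. */
enum { MODEL_FLAG_VALID = 1u << 0 };

typedef struct {
    int64_t  value;
    int32_t  timescale;
    uint32_t flags;
} model_time;

static const model_time MODEL_TIME_INVALID = { 0, 0, 0 };

/* Correct test: inspect only the validity flag. */
static bool model_time_is_invalid(model_time t)
{
    return (t.flags & MODEL_FLAG_VALID) == 0;
}

/* Wrong test: field-by-field equality with the invalid constant. */
static bool model_time_equals_invalid_constant(model_time t)
{
    return t.value == MODEL_TIME_INVALID.value &&
           t.timescale == MODEL_TIME_INVALID.timescale &&
           t.flags == MODEL_TIME_INVALID.flags;
}
```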

Representing CMTime as an Object

If you need to use CMTime structures in annotations or Core Foundation containers, you can convert a CMTime structure to and from a CFDictionary opaque type (see CFDictionaryRef) using the CMTimeCopyAsDictionary and CMTimeMakeFromDictionary functions, respectively. You can also get a string representation of a CMTime structure using the CMTimeCopyDescription function.


Epochs

The epoch number of a CMTime structure is usually set to 0, but you can use it to distinguish unrelated timelines. For example, in a presentation loop the epoch could be incremented on each cycle, to differentiate between time N in loop 0 and time N in loop 1.

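Here is a plain C sketch of the looping example. The `looped_time` struct and `looped_time_from_position` helper are hypothetical; neither is Core Media API. The helper maps an ever-increasing playback position onto a loop and records the cycle count as the epoch. As a result, the same offset in two different cycles never counts as the same instant. The sketch assumes nonnegative positions and a positive loop length.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical model: a rational time plus the loop cycle it belongs to. */
typedef struct {
    int64_t value;
    int32_t timescale;
    int64_t epoch;
} looped_time;

/* Two times are the same instant only if they share an epoch and represent
   the same number of seconds. */
static bool looped_time_same_instant(looped_time a, looped_time b)
{
    return a.epoch == b.epoch &&
           a.value * (int64_t)b.timescale == b.value * (int64_t)a.timescale;
}

/* Splits an absolute position (in timescale units) into an offset within the
   loop and the number of completed cycles, used as the epoch. */
static looped_time looped_time_from_position(int64_t position, int64_t loop_length,
                                             int32_t timescale)
{
    assert(position >= 0 && loop_length > 0 && timescale > 0);
    looped_time t = { position % loop_length, timescale, position / loop_length };
    return t;
}
```

For a 10-second loop at timescale 600 (6000 units), positions 600 and 6600 both map to offset 600 (1 second). They fall in epochs 0 and 1, so they stay distinguishable.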

CMTimeRange Represents a Time Range

CMTimeRange is a C structure that has a start time and duration, both expressed as CMTime structures. A time range does not include the time that is the start time plus the duration; in other words, it includes its start time but not its end time.

You create a time range using CMTimeRangeMake or CMTimeRangeFromTimeToTime. There are constraints on the value of the CMTime epochs:

- CMTimeRange structures cannot span different epochs.

- The epoch in a CMTime structure that represents a timestamp may be nonzero, but you can only perform range operations (such as CMTimeRangeGetUnion) on ranges whose start fields have the same epoch.

- The epoch in a CMTime structure that represents a duration should always be 0, and the value must be nonnegative.

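The epoch and duration rules can be illustrated with a small C model of a union operation. `model_range` and `model_range_union` are hypothetical. They keep all values in one shared timescale so integer arithmetic is exact. They refuse inputs whose starts have different epochs or whose durations are negative. What CMTimeRangeGetUnion itself returns for such inputs is not modeled here.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical model (not CMTimeRange): all values share one timescale. */
typedef struct {
    int64_t start;        /* timestamp; may belong to a nonzero epoch */
    int64_t start_epoch;
    int64_t duration;     /* must be nonnegative */
} model_range;

/* Smallest range covering both inputs. Returns false instead of combining
   ranges whose starts are in different epochs or that have negative durations. */
static bool model_range_union(model_range a, model_range b, model_range *out)
{
    if (a.start_epoch != b.start_epoch || a.duration < 0 || b.duration < 0) {
        return false;
    }
    int64_t a_end = a.start + a.duration;
    int64_t b_end = b.start + b.duration;
    int64_t start = a.start < b.start ? a.start : b.start;
    int64_t end = a_end > b_end ? a_end : b_end;
    out->start = start;
    out->start_epoch = a.start_epoch;
    out->duration = end - start;
    return true;
}
```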

Working with Time Ranges

Core Media provides functions you can use to determine whether a time range contains a given time or another time range, to determine whether two time ranges are equal, and to calculate unions and intersections of time ranges: for example, CMTimeRangeContainsTime, CMTimeRangeContainsTimeRange, CMTimeRangeEqual, CMTimeRangeGetUnion, and CMTimeRangeGetIntersection.


Given that a time range does not include the time that is the start time plus the duration, the following expression always evaluates to false:


```objc
CMTimeRangeContainsTime(range, CMTimeRangeGetEnd(range));
```

For a list of all the available functions, see CMTimeRange Reference.

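The half-open convention is easy to see with plain integers. The hypothetical `tick_range` below is not CMTimeRange; it stores a start and a duration in one shared timescale. Containment uses `start <= t < start + duration`, so the end point is never inside the range and an empty range contains nothing.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical model (not CMTimeRange): start and duration in one timescale. */
typedef struct {
    int64_t start;
    int64_t duration;
} tick_range;

static int64_t tick_range_end(tick_range r)
{
    return r.start + r.duration;
}

/* Half-open containment: the start is included, the end is not. */
static bool tick_range_contains(tick_range r, int64_t t)
{
    return t >= r.start && t < tick_range_end(r);
}

static bool tick_range_is_empty(tick_range r)
{
    return r.duration == 0;
}
```

One practical consequence is that back-to-back ranges such as [0, 600) and [600, 1200) share the boundary 600 without both containing it.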

Special Values of CMTimeRange

Core Media provides constants for a zero-length range and an invalid range, kCMTimeRangeZero and kCMTimeRangeInvalid, respectively. There are many ways, though, in which a CMTimeRange structure can be invalid, zero, or indefinite (if one of its CMTime structures is indefinite). If you need to test whether a CMTimeRange structure is valid, zero, or indefinite, you should use an appropriate macro: CMTIMERANGE_IS_VALID, CMTIMERANGE_IS_INVALID, CMTIMERANGE_IS_EMPTY, or CMTIMERANGE_IS_INDEFINITE.


```objc
CMTimeRange myTimeRange = <#Get a CMTimeRange#>;
if (CMTIMERANGE_IS_EMPTY(myTimeRange)) {
    // The time range has zero duration.
}
```

You should not compare the value of an arbitrary CMTimeRange structure with kCMTimeRangeInvalid.


Representing a CMTimeRange Structure as an Object

If you need to use CMTimeRange structures in annotations or Core Foundation containers, you can convert a CMTimeRange structure to and from a CFDictionary opaque type (see CFDictionaryRef) using CMTimeRangeCopyAsDictionary and CMTimeRangeMakeFromDictionary, respectively. You can also get a string representation of a CMTimeRange structure using the CMTimeRangeCopyDescription function.


Representations of Media

Video data and its associated metadata are represented in AV Foundation by opaque objects from the Core Media framework. Core Media represents video data using CMSampleBuffer (see CMSampleBufferRef). CMSampleBuffer is a Core Foundation-style opaque type; an instance contains the sample buffer for a frame of video data as a Core Video pixel buffer (see CVPixelBufferRef). You access the pixel buffer from a sample buffer using CMSampleBufferGetImageBuffer:


```objc
CVPixelBufferRef pixelBuffer = CMSampleBufferGetImageBuffer(<#A CMSampleBuffer#>);
```

From the pixel buffer, you can access the actual video data. For an example, see Converting CMSampleBuffer to a UIImage Object.

In addition to the video data, you can retrieve a number of other aspects of the video frame:

- Timing information. You get accurate timestamps for both the original presentation time and the decode time using CMSampleBufferGetPresentationTimeStamp and CMSampleBufferGetDecodeTimeStamp, respectively.

- Format information. The format information is encapsulated in a CMFormatDescription object (see CMFormatDescriptionRef). From the format description, you can get, for example, the pixel type and video dimensions using CMVideoFormatDescriptionGetCodecType and CMVideoFormatDescriptionGetDimensions, respectively.

- Metadata. Metadata are stored in a dictionary as an attachment. You use CMGetAttachment to retrieve the dictionary:


```objc
CMSampleBufferRef sampleBuffer = <#Get a sample buffer#>;
CFDictionaryRef metadataDictionary =
    (CFDictionaryRef)CMGetAttachment(sampleBuffer, CFSTR("MetadataDictionary"), NULL);
if (metadataDictionary) {
    // Do something with the metadata.
}
```
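Presentation timestamps are also what you would use to pick only some frames for expensive processing, such as converting roughly one frame per second to a still image. The following C sketch is hypothetical; `snapshot_throttle` is not part of any Apple framework. It receives each frame's presentation time as a value and timescale, which you could read from the `value` and `timescale` fields of the CMTime returned by CMSampleBufferGetPresentationTimeStamp. It compares elapsed time by integer cross-multiplication and assumes timestamps arrive in increasing order.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical throttle state: the presentation time of the last frame that
   was selected for conversion. */
typedef struct {
    bool    has_last;
    int64_t last_value;
    int32_t last_timescale;
} snapshot_throttle;

/* Returns true (and records the frame) when at least interval_seconds have
   elapsed since the last selected frame. Elapsed time is
   value/timescale - last_value/last_timescale, compared without floats. */
static bool snapshot_throttle_should_convert(snapshot_throttle *t, int64_t value,
                                             int32_t timescale, int64_t interval_seconds)
{
    assert(timescale > 0);
    if (t->has_last) {
        int64_t elapsed_num = value * t->last_timescale - t->last_value * timescale;
        int64_t interval_num = interval_seconds * timescale * t->last_timescale;
        if (elapsed_num < interval_num) {
            return false;
        }
    }
    t->has_last = true;
    t->last_value = value;
    t->last_timescale = timescale;
    return true;
}
```

For 30 fps video at timescale 600 (frames 20 units apart), three seconds of frames yield three selected frames, at 0, 1, and 2 seconds.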

Converting CMSampleBuffer to a UIImage Object

The following code shows how you can convert a CMSampleBuffer to a UIImage object. You should consider your requirements carefully before using it. Performing the conversion is a comparatively expensive operation. It is appropriate to, for example, create a still image from a frame of video data taken every second or so. You should not use this as a means to manipulate every frame of video coming from a capture device in real time.


```objc
#import <UIKit/UIKit.h>
#import <CoreMedia/CoreMedia.h>
#import <CoreVideo/CoreVideo.h>

// Create a UIImage from sample buffer data.
// Assumes the pixel buffer uses 32-bit BGRA (kCVPixelFormatType_32BGRA).
- (UIImage *)imageFromSampleBuffer:(CMSampleBufferRef)sampleBuffer
{
    // Get a CMSampleBuffer's Core Video image buffer for the media data
    CVImageBufferRef imageBuffer = CMSampleBufferGetImageBuffer(sampleBuffer);
    if (imageBuffer == NULL) {
        return nil; // The sample buffer carries no image data (for example, audio).
    }

    // Lock the base address of the pixel buffer
    CVPixelBufferLockBaseAddress(imageBuffer, 0);

    // Get the base address of the pixel buffer
    void *baseAddress = CVPixelBufferGetBaseAddress(imageBuffer);

    // Get the number of bytes per row for the pixel buffer
    size_t bytesPerRow = CVPixelBufferGetBytesPerRow(imageBuffer);

    // Get the pixel buffer width and height
    size_t width = CVPixelBufferGetWidth(imageBuffer);
    size_t height = CVPixelBufferGetHeight(imageBuffer);

    // Create a device-dependent RGB color space
    CGColorSpaceRef colorSpace = CGColorSpaceCreateDeviceRGB();

    // Create a bitmap graphics context with the sample buffer data
    CGContextRef context = CGBitmapContextCreate(baseAddress, width, height, 8,
        bytesPerRow, colorSpace, kCGBitmapByteOrder32Little | kCGImageAlphaPremultipliedFirst);

    // Create a Quartz image from the pixel data in the bitmap graphics context
    CGImageRef quartzImage = CGBitmapContextCreateImage(context);

    // Unlock the pixel buffer
    CVPixelBufferUnlockBaseAddress(imageBuffer, 0);

    // Free up the context and color space
    CGContextRelease(context);
    CGColorSpaceRelease(colorSpace);

    // Create an image object from the Quartz image
    UIImage *image = [UIImage imageWithCGImage:quartzImage];

    // Release the Quartz image
    CGImageRelease(quartzImage);

    return image;
}
```
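The bitmap context in `imageFromSampleBuffer:` is created with `kCGBitmapByteOrder32Little | kCGImageAlphaPremultipliedFirst`, which describes pixels laid out in memory as B, G, R, A. It also receives `bytesPerRow` rather than `width * 4`, because pixel buffer rows may be padded. The hypothetical C helper below (not part of Core Video) shows how one pixel is addressed under those two assumptions.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef struct {
    uint8_t r, g, b, a;
} rgba8;

/* Hypothetical helper: read pixel (x, y) from a 32-bit BGRA buffer whose rows
   are bytes_per_row bytes long (possibly more than width * 4). */
static rgba8 bgra_pixel_at(const uint8_t *base, size_t bytes_per_row, size_t x, size_t y)
{
    const uint8_t *p = base + y * bytes_per_row + x * 4;
    rgba8 px = { p[2], p[1], p[0], p[3] }; /* memory order is B, G, R, A */
    return px;
}
```

For a 2 x 2 image with 16-byte rows (8 bytes of padding per row), pixel (1, 1) starts at byte 1 * 16 + 1 * 4 = 20, not at byte 12 as unpadded arithmetic would suggest.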

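Afterword: I finished this translation on August 7, 2016, at 16:38. It is based on the 2015-06-30 version of the official documentation, the latest version at the time. Many parts of the translation still need proofreading, and I hope readers who run into problems will send me feedback. Over the next few days I will proofread it again while writing a demo. Thanks to my mentor and my team lead for giving me the opportunity to do this work.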

References:

Yofer Zhang's blog

Apple's official AVFoundation documentation