New features in iOS 10:

- For the first time ever, your app can capture and edit Live Photos.
- Your app can respond to different kinds of photo capture.

1. Showing the camera feed (input)

- Create a project named PhotoMe, set it to iPhone only, and restrict it to portrait orientation.

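- Authorization: add the following keys to Info.plist, each with a usage description string (iOS 10 terminates an app that accesses the camera, microphone or photo library without the matching key):

  - NSCameraUsageDescription: PhotoMe needs the camera to take photos. Duh!
  - NSMicrophoneUsageDescription: PhotoMe needs the microphone to record audio with Live Photos.
  - NSPhotoLibraryUsageDescription: PhotoMe will save photos in the Photo Library.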

- Custom view: create a UIView subclass named CameraPreviewView:

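```swift
import UIKit
import AVFoundation
import Photos

class CameraPreviewView: UIView {

    // 1: Back this view with an AVCaptureVideoPreviewLayer instead of a plain CALayer.
    override class var layerClass: AnyClass {
        return AVCaptureVideoPreviewLayer.self
    }

    // 2: Typed access to the backing layer (the cast is safe because of layerClass).
    var cameraPreviewLayer: AVCaptureVideoPreviewLayer {
        return layer as! AVCaptureVideoPreviewLayer
    }

    // 3: Forward the capture session to the preview layer.
    var session: AVCaptureSession? {
        get {
            return cameraPreviewLayer.session
        }
        set {
            cameraPreviewLayer.session = newValue
        }
    }
}
```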
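The `layerClass` override works because a view asks its own dynamic type which layer class to create as its backing layer. The sketch below models that idea in plain Swift so it can run anywhere; `Layer`, `VideoPreviewLayer`, `View` and `PreviewView` are hypothetical stand-ins, not the UIKit or AVFoundation types, and the lazy layer creation is a simplification rather than a description of UIKit's internals.

```swift
// Hypothetical stand-ins for CALayer and UIView; these are NOT the UIKit types.
class Layer {
    required init() {}
}

final class VideoPreviewLayer: Layer {}

class View {
    // Subclasses override this to choose their backing layer type.
    class var layerClass: Layer.Type {
        return Layer.self
    }

    // The layer is created from the *dynamic* type's layerClass on first access.
    lazy var layer: Layer = type(of: self).layerClass.init()
}

final class PreviewView: View {
    override class var layerClass: Layer.Type {
        return VideoPreviewLayer.self
    }

    // The force cast cannot fail, because layerClass fixes the layer's type.
    var previewLayer: VideoPreviewLayer {
        return layer as! VideoPreviewLayer
    }
}

let view = PreviewView()
print(type(of: view.previewLayer)) // VideoPreviewLayer
```

This is also why `cameraPreviewLayer` in CameraPreviewView can use `as!` safely: the override guarantees the type of the backing layer.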

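- Build the UI: in Main.storyboard, drag in a UIView and pin its top, leading and trailing edges to the superview, with a 3:4 (width:height) aspect ratio. Set its custom class to CameraPreviewView and connect it to ViewController as an outlet named cameraPreviewView.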
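With a 3:4 width-to-height ratio the preview is taller than it is wide, which matches a 4:3 photo held in portrait. A quick check of the arithmetic; `previewHeight(forWidth:)` is a hypothetical helper and 375 pt is just an example width:

```swift
// Hypothetical helper: height (in points) that a 3:4 width:height constraint
// gives a preview view of the given width.
func previewHeight(forWidth width: Double) -> Double {
    return width * 4.0 / 3.0
}

print(previewHeight(forWidth: 375)) // 500.0
```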

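- In ViewController:

Import AVFoundation:

```swift
import AVFoundation
```

Add the properties:

```swift
fileprivate let session = AVCaptureSession()
// Serial queue for all session work, so the main thread never blocks on it
fileprivate let sessionQueue = DispatchQueue(label: "com.razeware.PhotoMe.session-queue")
var videoDeviceInput: AVCaptureDeviceInput!
```

Add the method: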

```swift
private func prepareCaptureSession() {
    // 1: Batch the configuration changes; they take effect together on commit.
    session.beginConfiguration()
    session.sessionPreset = AVCaptureSessionPresetPhoto

    do {
        // 2: Pick the front wide-angle camera.
        let videoDevice = AVCaptureDevice.defaultDevice(
            withDeviceType: .builtInWideAngleCamera,
            mediaType: AVMediaTypeVideo,
            position: .front)

        // 3: Wrap the device in an input (throws if the device can't be opened).
        let videoDeviceInput = try AVCaptureDeviceInput(device: videoDevice)

        // 4: Attach the input to the session and keep a reference to it.
        if session.canAddInput(videoDeviceInput) {
            session.addInput(videoDeviceInput)
            self.videoDeviceInput = videoDeviceInput

            // 5: The preview layer is UI, so update it on the main queue.
            DispatchQueue.main.async {
                self.cameraPreviewView.cameraPreviewLayer
                    .connection.videoOrientation = .portrait
            }
        } else {
            print("Couldn't add device to the session")
            return
        }
    } catch {
        print("Couldn't create video device input: \(error)")
        return
    }

    // 6: Apply all of the changes at once.
    session.commitConfiguration()
}
```

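In viewDidLoad(), request authorization and configure the session:

```swift
override func viewDidLoad() {
    super.viewDidLoad()

    // 1: Connect the preview view to the session.
    cameraPreviewView.session = session

    // 2: Suspend the session queue so no session work runs before authorization.
    sessionQueue.suspend()

    // 3: Ask for camera access.
    AVCaptureDevice.requestAccess(forMediaType: AVMediaTypeVideo) { success in
        if !success {
            print("Come on, it's a camera app!")
            return
        }
        // 4: Access granted: let the queued session work run.
        self.sessionQueue.resume()
    }

    // Enqueued now, but only runs once the queue has been resumed.
    sessionQueue.async { [unowned self] in
        self.prepareCaptureSession()
    }
}
```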
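The suspend/resume trick means setup work can be enqueued immediately and still wait for the permission answer. A minimal sketch with plain Dispatch, runnable outside iOS; the queue label and the `events` log are hypothetical, and the permission callback is simulated by calling `resume()` directly:

```swift
import Dispatch

// Hypothetical stand-in for ViewController.sessionQueue.
let sessionQueue = DispatchQueue(label: "com.example.PhotoMe.session-queue")
var events: [String] = []  // only mutated on sessionQueue

// As in viewDidLoad(): hold back all session work until permission is known.
sessionQueue.suspend()

// Work enqueued now (setup in viewDidLoad, startRunning in viewWillAppear)
// cannot start while the queue is suspended.
sessionQueue.async { events.append("prepareCaptureSession") }
sessionQueue.async { events.append("startRunning") }

// Simulated "access granted"; in the app this happens inside the
// AVCaptureDevice.requestAccess(forMediaType:) completion handler.
sessionQueue.resume()

// Block until the queued work has drained, then read the log.
let log = sessionQueue.sync { events }
print(log) // ["prepareCaptureSession", "startRunning"]
```

If access is denied the queue simply stays suspended, so none of the session work ever runs.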

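In viewWillAppear(_:), start the session on the session queue (startRunning() blocks until the session is running, so keep it off the main thread):

```swift
override func viewWillAppear(_ animated: Bool) {
    super.viewWillAppear(animated)
    sessionQueue.async {
        self.session.startRunning()
    }
}
```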

2. Taking photos (output)

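- In ViewController:

Add the property:

```swift
fileprivate let photoOutput = AVCapturePhotoOutput()
```

In prepareCaptureSession(), just before session.commitConfiguration(), add:

```swift
if session.canAddOutput(photoOutput) {
    session.addOutput(photoOutput)
    // Must be set before the session starts running; changing it later
    // forces the capture pipeline to reconfigure, causing a visible flicker.
    photoOutput.isHighResolutionCaptureEnabled = true
} else {
    print("Unable to add photo output")
    return
}
```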

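- Build the UI:

  - In Main.storyboard, set the background color of both the main view and the camera preview view to black, and set the main view's tint color to orange.
  - Drag a Visual Effect View with Blur into the main view and pin its leading, bottom and trailing edges to the superview. Set Blur Style to Dark.
  - Drag a button into the Visual Effect View, title it "Take Photo!", set its font size to 20, and embed it in a stack view.
  - Give the stack view a top constraint of 5 and a bottom constraint of 20 to its superview.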

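- Connect the button to ViewController:

```swift
@IBOutlet weak var shutterButton: UIButton!

@IBAction func handleShutterButtonTap(_ sender: UIButton) {
    capturePhoto()
}
```

Then add the capture method in an extension:

```swift
extension ViewController {
    fileprivate func capturePhoto() {
        // 1: Read the preview orientation on the main thread (it belongs to a UI layer).
        let cameraPreviewLayerOrientation = cameraPreviewView
            .cameraPreviewLayer.connection.videoOrientation

        // 2: On the session queue, give the photo output the same orientation.
        sessionQueue.async {
            if let connection = self.photoOutput
                .connection(withMediaType: AVMediaTypeVideo) {
                connection.videoOrientation = cameraPreviewLayerOrientation
            }

            // 3: Per-shot settings: flash off, high-resolution capture on.
            //    (The actual capture call is added in a later step.)
            let photoSettings = AVCapturePhotoSettings()
            photoSettings.flashMode = .off
            photoSettings.isHighResolutionPhotoEnabled = true
        }
    }
}
```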

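- Create the delegate: a new photo can be taken before the previous one has finished processing, and AVCapturePhotoOutput only keeps a weak reference to its delegate. ViewController therefore holds a dictionary of delegates keyed by the uniqueID of each AVCapturePhotoSettings. Create a new file with a PhotoCaptureDelegate class:

```swift
import AVFoundation
import Photos

class PhotoCaptureDelegate: NSObject {
    // 1: Optional callbacks for the stages of a capture, plus the required completion handler
    var photoCaptureBegins: (() -> ())? = .none
    var photoCaptured: (() -> ())? = .none
    fileprivate let completionHandler: (PhotoCaptureDelegate, PHAsset?) -> ()

    // 2: JPEG data produced by the photo output
    fileprivate var photoData: Data? = .none

    // 3: Only the completion handler has to be supplied up front.
    init(completionHandler: @escaping (PhotoCaptureDelegate, PHAsset?) -> ()) {
        self.completionHandler = completionHandler
    }

    // 4: Always called at the end, whether or not an asset was saved,
    //    so the owner can release this delegate.
    fileprivate func cleanup(asset: PHAsset? = .none) {
        completionHandler(self, asset)
    }
}
```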

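- How it works: for each capture request, AVCapturePhotoOutput calls its delegate in a fixed order: willBeginCapture, willCapturePhoto, didCapturePhoto, didFinishProcessingPhoto, then (for Live Photos) the movie callbacks, and finally didFinishCapture, which is always the last call.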

- Implement the delegate methods in an extension in the same file (photoData and cleanup(asset:) are fileprivate):

```swift
extension PhotoCaptureDelegate: AVCapturePhotoCaptureDelegate {
    // Processing of the photo data has finished
    func capture(_ captureOutput: AVCapturePhotoOutput,
                 didFinishProcessingPhotoSampleBuffer photoSampleBuffer: CMSampleBuffer?,
                 previewPhotoSampleBuffer: CMSampleBuffer?,
                 resolvedSettings: AVCaptureResolvedPhotoSettings,
                 bracketSettings: AVCaptureBracketedStillImageSettings?,
                 error: Error?) {
        guard let photoSampleBuffer = photoSampleBuffer else {
            print("Error capturing photo: \(String(describing: error))")
            return
        }
        // Convert the sample buffer into JPEG data.
        photoData = AVCapturePhotoOutput.jpegPhotoDataRepresentation(
            forJPEGSampleBuffer: photoSampleBuffer,
            previewPhotoSampleBuffer: previewPhotoSampleBuffer)
    }

    // The entire capture process has finished
    func capture(_ captureOutput: AVCapturePhotoOutput,
                 didFinishCaptureForResolvedSettings resolvedSettings: AVCaptureResolvedPhotoSettings,
                 error: Error?) {
        // 1: Bail out (but still clean up) if capture failed or produced no data.
        guard error == nil, let photoData = photoData else {
            print("Error \(String(describing: error)) or no data")
            cleanup()
            return
        }

        // 2: Ask for permission to write to the photo library.
        PHPhotoLibrary.requestAuthorization { [unowned self] status in
            // 3: Without authorization there is nothing to save.
            guard status == .authorized else {
                print("Need authorisation to write to the photo library")
                self.cleanup()
                return
            }

            // 4: Create the asset and remember its identifier so it can be fetched afterwards.
            var assetIdentifier: String?
            PHPhotoLibrary.shared().performChanges({
                let creationRequest = PHAssetCreationRequest.forAsset()
                let placeholder = creationRequest.placeholderForCreatedAsset
                creationRequest.addResource(with: .photo, data: photoData, options: .none)
                assetIdentifier = placeholder?.localIdentifier
            }, completionHandler: { (success, error) in
                if let error = error {
                    print("Error saving to the photo library: \(error)")
                }
                var asset: PHAsset? = .none
                if let assetIdentifier = assetIdentifier {
                    asset = PHAsset.fetchAssets(withLocalIdentifiers: [assetIdentifier],
                                                options: .none).firstObject
                }
                self.cleanup(asset: asset)
            })
        }
    }
}
```

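- In ViewController:

Add the property:

```swift
fileprivate var photoCaptureDelegates = [Int64 : PhotoCaptureDelegate]()
```

At the end of the sessionQueue closure in capturePhoto(), add:

```swift
// 1: Create a delegate that removes itself from the dictionary when it's done
//    (the dictionary is only touched on sessionQueue).
let uniqueID = photoSettings.uniqueID
let photoCaptureDelegate = PhotoCaptureDelegate() { [unowned self] (photoCaptureDelegate, asset) in
    self.sessionQueue.async { [unowned self] in
        self.photoCaptureDelegates[uniqueID] = .none
    }
}

// 2: Keep a strong reference to the delegate for the duration of the capture.
self.photoCaptureDelegates[uniqueID] = photoCaptureDelegate

// 3: Start the capture.
self.photoOutput.capturePhoto(with: photoSettings, delegate: photoCaptureDelegate)
```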
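The dictionary is what keeps each delegate alive while its capture is in flight, and the completion handler is what releases it. The bookkeeping can be modelled without AVFoundation; `CaptureDelegateStub` and `CaptureRegistry` are hypothetical names, the IDs are generated locally instead of coming from AVCapturePhotoSettings, and the model is single-threaded whereas the app does this work on sessionQueue:

```swift
// Hypothetical stand-in for PhotoCaptureDelegate: it knows its ID and the
// completion handler to call once the capture has completely finished.
final class CaptureDelegateStub {
    let uniqueID: Int64
    private let completionHandler: (CaptureDelegateStub) -> Void

    init(uniqueID: Int64, completionHandler: @escaping (CaptureDelegateStub) -> Void) {
        self.uniqueID = uniqueID
        self.completionHandler = completionHandler
    }

    // Plays the role of cleanup(asset:) in PhotoCaptureDelegate.
    func finish() {
        completionHandler(self)
    }
}

// Hypothetical stand-in for ViewController's bookkeeping.
final class CaptureRegistry {
    private(set) var inFlight: [Int64: CaptureDelegateStub] = [:]
    private var nextID: Int64 = 1  // in the app, AVCapturePhotoSettings supplies uniqueID

    func startCapture() -> CaptureDelegateStub {
        let uniqueID = nextID
        nextID += 1
        let delegate = CaptureDelegateStub(uniqueID: uniqueID) { [unowned self] _ in
            // In the app this drops the last strong reference to the delegate.
            self.inFlight[uniqueID] = nil
        }
        // The dictionary keeps the delegate alive while the capture runs.
        inFlight[uniqueID] = delegate
        return delegate
    }
}

let registry = CaptureRegistry()
let first = registry.startCapture()
_ = registry.startCapture()             // second shot before the first finishes
print(registry.inFlight.count)          // 2
first.finish()
print(registry.inFlight.keys.sorted())  // [2]
```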

3. Making it incredible

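- Add a shutter flash animation

In capturePhoto(), after the delegate is created (and before photoOutput.capturePhoto(with:delegate:) is called), add:

```swift
// Flash the preview and disable the shutter when the exposure starts.
photoCaptureDelegate.photoCaptureBegins = { [unowned self] in
    DispatchQueue.main.async {
        self.shutterButton.isEnabled = false
        self.cameraPreviewView.cameraPreviewLayer.opacity = 0
        UIView.animate(withDuration: 0.2) {
            self.cameraPreviewView.cameraPreviewLayer.opacity = 1
        }
    }
}

// Re-enable the shutter as soon as the photo has been captured.
photoCaptureDelegate.photoCaptured = { [unowned self] in
    DispatchQueue.main.async {
        self.shutterButton.isEnabled = true
    }
}
```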

In the PhotoCaptureDelegate extension, add:

```swift
// Called just before the photo is exposed
func capture(_ captureOutput: AVCapturePhotoOutput,
             willCapturePhotoForResolvedSettings resolvedSettings: AVCaptureResolvedPhotoSettings) {
    photoCaptureBegins?()
}

// Called right after the photo has been exposed
func capture(_ captureOutput: AVCapturePhotoOutput,
             didCapturePhotoForResolvedSettings resolvedSettings: AVCaptureResolvedPhotoSettings) {
    photoCaptured?()
}
```
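Because willCapturePhoto fires just before the exposure and didCapturePhoto right after it, the shutter is disabled only for the moment of capture; processing and saving continue afterwards, which is why another shot can start before the previous one is saved. A plain-Swift model of that state change; `ShutterModel` is hypothetical, and the app's versions of these closures also hop to the main queue for UIKit:

```swift
// Hypothetical model of the shutter UI; in the app these closures are
// photoCaptureBegins / photoCaptured and run their UIKit work on the main queue.
final class ShutterModel {
    private(set) var isShutterEnabled = true
    private(set) var flashCount = 0

    lazy var photoCaptureBegins: () -> Void = { [unowned self] in
        self.isShutterEnabled = false
        self.flashCount += 1  // stands in for the opacity 0 -> 1 "flash"
    }

    lazy var photoCaptured: () -> Void = { [unowned self] in
        self.isShutterEnabled = true
    }
}

let shutter = ShutterModel()
shutter.photoCaptureBegins()                          // willCapturePhotoFor...
let enabledDuringCapture = shutter.isShutterEnabled
shutter.photoCaptured()                               // didCapturePhotoFor...
print(enabledDuringCapture, shutter.isShutterEnabled) // false true
```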

- Show a thumbnail

- Get the thumbnail: add a property to PhotoCaptureDelegate:

```swift
var thumbnailCaptured: ((UIImage?) -> ())? = .none
```

At the end of the didFinishProcessingPhotoSampleBuffer delegate method, add:

```swift
// Turn the preview buffer into a UIImage, but only if a thumbnail was requested.
if let thumbnailCaptured = thumbnailCaptured,
    let previewPhotoSampleBuffer = previewPhotoSampleBuffer,
    let cvImageBuffer = CMSampleBufferGetImageBuffer(previewPhotoSampleBuffer) {
    let ciThumbnail = CIImage(cvImageBuffer: cvImageBuffer)
    let context = CIContext(options: [kCIContextUseSoftwareRenderer: false])
    // Wrap the rendered CGImage with an orientation so it displays upright in portrait.
    let thumbnail = UIImage(cgImage: context.createCGImage(ciThumbnail, from: ciThumbnail.extent)!,
                            scale: 2.0, orientation: .right)
    thumbnailCaptured(thumbnail)
}
```

-

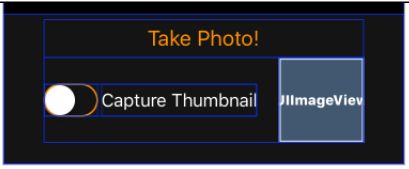

画界面:设置switch 为 off,label 的文本颜色为white,imageView 的 clip to bounds 为 true,content mode 为 Aspect Fill。

连线:

@IBOutlet weak var previewImageView: UIImageView!

@IBOutlet weak var thumbnailSwitch: UISwitch!

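- Set the thumbnail format: in capturePhoto(), before creating the delegate, add:

```swift
// Request a small preview image only when the switch is on and a format is available.
// Note: this reads a UIKit control on sessionQueue; reading isOn before
// sessionQueue.async and capturing the value would be safer.
if self.thumbnailSwitch.isOn
    && photoSettings.availablePreviewPhotoPixelFormatTypes.count > 0 {
    photoSettings.previewPhotoFormat = [
        kCVPixelBufferPixelFormatTypeKey as String:
            photoSettings.availablePreviewPhotoPixelFormatTypes.first!,
        kCVPixelBufferWidthKey as String: 160,
        kCVPixelBufferHeightKey as String: 160
    ]
}
```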

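- Display the thumbnail: after creating the delegate (before the capture call), add:

```swift
// UIKit work, so hop to the main queue.
photoCaptureDelegate.thumbnailCaptured = { [unowned self] image in
    DispatchQueue.main.async {
        self.previewImageView.image = image
    }
}
```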

- Live Photos

- Build the UI: in the Option Stack, add a Horizontal Stack View containing a switch and a label. Set the switch to off, the label's text to "Live Photo Mode" and its text color to white. Add a label to the Control Stack with the text "capturing...", font size 35, Hidden checked, and text color orange.

Connect the outlets:

```swift
@IBOutlet weak var capturingLabel: UILabel!
@IBOutlet weak var livePhotoSwitch: UISwitch!
```

- Add an audio input (Live Photos record sound): in prepareCaptureSession(), after the do/catch block that creates the video device input, add:

```swift
do {
    let audioDevice = AVCaptureDevice.defaultDevice(withMediaType: AVMediaTypeAudio)
    let audioDeviceInput = try AVCaptureDeviceInput(device: audioDevice)
    if session.canAddInput(audioDeviceInput) {
        session.addInput(audioDeviceInput)
    } else {
        print("Couldn't add audio device to the session")
        return
    }
} catch {
    print("Unable to create audio device input: \(error)")
    return
}
```

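- Configure the photo output: after photoOutput.isHighResolutionCaptureEnabled = true, add:

```swift
// Enable Live Photo capture only where the device supports it.
photoOutput.isLivePhotoCaptureEnabled =
    photoOutput.isLivePhotoCaptureSupported
// The switch is UI, so update it on the main queue.
DispatchQueue.main.async {
    self.livePhotoSwitch.isEnabled =
        self.photoOutput.isLivePhotoCaptureSupported
}
```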

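- Set the output path: in capturePhoto(), before creating the delegate, add:

```swift
// Each Live Photo needs its own movie file: a UUID-named .mov in the temp directory.
if self.livePhotoSwitch.isOn {
    let movieFileName = UUID().uuidString
    let moviePath = (NSTemporaryDirectory() as NSString)
        .appendingPathComponent("\(movieFileName).mov")
    photoSettings.livePhotoMovieFileURL = URL(fileURLWithPath: moviePath)
}
```

- Capture the Live Photo: add these properties to PhotoCaptureDelegate:

```swift
// Called with true when the Live Photo movie starts recording, false when it stops
var capturingLivePhoto: ((Bool) -> ())? = .none
// Location of the finished Live Photo movie
fileprivate var livePhotoMovieURL: URL? = .none
```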

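At the end of the willCapturePhotoForResolvedSettings delegate method, add:

```swift
// Non-zero movie dimensions mean this capture includes a Live Photo movie.
if resolvedSettings.livePhotoMovieDimensions.width > 0
    && resolvedSettings.livePhotoMovieDimensions.height > 0 {
    capturingLivePhoto?(true)
}
```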

Add the delegate methods:

```swift
// The Live Photo movie has stopped recording (the file is not finished yet)
func capture(_ captureOutput: AVCapturePhotoOutput,
             didFinishRecordingLivePhotoMovieForEventualFileAt outputFileURL: URL,
             resolvedSettings: AVCaptureResolvedPhotoSettings) {
    capturingLivePhoto?(false)
}

// The Live Photo movie file has been written
func capture(_ captureOutput: AVCapturePhotoOutput,
             didFinishProcessingLivePhotoToMovieFileAt outputFileURL: URL,
             duration: CMTime,
             photoDisplay photoDisplayTime: CMTime,
             resolvedSettings: AVCaptureResolvedPhotoSettings,
             error: Error?) {
    if let error = error {
        print("Error creating live photo video: \(error)")
        return
    }
    livePhotoMovieURL = outputFileURL
}
```

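- Add the movie to the photo library: in capture(_:didFinishCaptureForResolvedSettings:error:), right after the addResource(with: .photo, ...) call, add:

```swift
// Attach the movie as the photo's paired video; shouldMoveFile moves the
// temporary file into the library instead of copying it.
if let livePhotoMovieURL = self.livePhotoMovieURL {
    let movieResourceOptions = PHAssetResourceCreationOptions()
    movieResourceOptions.shouldMoveFile = true
    creationRequest.addResource(with: .pairedVideo,
                                fileURL: livePhotoMovieURL,
                                options: movieResourceOptions)
}
```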
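Each Live Photo capture writes its movie to a fresh, UUID-named file in the temporary directory (set up in capturePhoto()), and shouldMoveFile then hands that file over to the library. The URL construction can be checked on its own; `makeLivePhotoMovieURL()` is a hypothetical helper that builds the same kind of URL with URL APIs instead of NSString path methods:

```swift
import Foundation

// Hypothetical helper: a UUID-named .mov file in the temporary directory,
// the same kind of URL capturePhoto() assigns to livePhotoMovieFileURL.
func makeLivePhotoMovieURL() -> URL {
    let movieFileName = UUID().uuidString
    return URL(fileURLWithPath: NSTemporaryDirectory())
        .appendingPathComponent(movieFileName)
        .appendingPathExtension("mov")
}

let url = makeLivePhotoMovieURL()
print(url.pathExtension)              // mov
print(url == makeLivePhotoMovieURL()) // false: every capture gets its own file
```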

- Update the UI: add a property to ViewController:

```swift
fileprivate var currentLivePhotoCaptures: Int = 0
```

In capturePhoto(), after creating the delegate (before the capture call), add:

```swift
// Live photo UI updates
photoCaptureDelegate.capturingLivePhoto = { [unowned self] currentlyCapturing in
    DispatchQueue.main.async {
        // Count overlapping recordings; show the label while any is in progress.
        self.currentLivePhotoCaptures += currentlyCapturing ? 1 : -1
        UIView.animate(withDuration: 0.2) {
            self.capturingLabel.isHidden = self.currentLivePhotoCaptures == 0
        }
    }
}
```
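A counter is used instead of a Bool because the movie keeps recording briefly after the shot, so a second Live Photo can start before the first movie stops. A plain-Swift model of that logic; `LivePhotoIndicator` is a hypothetical name and `isLabelHidden` stands in for `capturingLabel.isHidden`:

```swift
// Hypothetical model of currentLivePhotoCaptures and the "capturing..." label.
final class LivePhotoIndicator {
    private(set) var currentLivePhotoCaptures = 0
    private(set) var isLabelHidden = true

    // Mirrors the capturingLivePhoto closure: true on start, false on finish.
    func capturingLivePhoto(_ currentlyCapturing: Bool) {
        currentLivePhotoCaptures += currentlyCapturing ? 1 : -1
        isLabelHidden = currentLivePhotoCaptures == 0
    }
}

let indicator = LivePhotoIndicator()
indicator.capturingLivePhoto(true)   // first Live Photo starts recording
indicator.capturingLivePhoto(true)   // second one overlaps
indicator.capturingLivePhoto(false)  // first movie stops recording
print(indicator.isLabelHidden)       // false: one recording still in progress
indicator.capturingLivePhoto(false)
print(indicator.isLabelHidden)       // true
```

With a single Bool, the first "stopped" callback would hide the label while the second recording was still running.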