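ELK is made up of three components: Elasticsearch, Logstash, and Kibana.

Elasticsearch is an open-source distributed search engine. Its features include distributed operation, zero configuration, automatic node discovery, automatic index sharding, index replication, a RESTful API, multiple data sources, and automatic search load balancing.

Logstash is a fully open-source tool that collects and parses your logs and stores them for later use.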

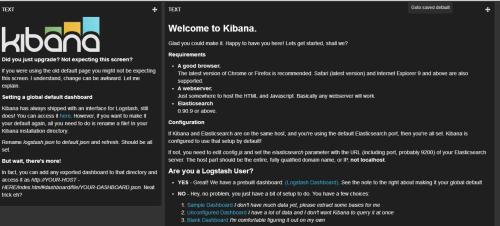

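Kibana is a free, open-source tool that provides a user-friendly web interface for analyzing the logs held by Logstash and Elasticsearch. It helps you aggregate, analyze, and search important log data.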

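The four components of a deployment

Logstash: the logstash server side, which collects the logs;

Elasticsearch: stores all kinds of logs;

Kibana: a web interface for searching and visualizing logs;

Logstash Forwarder: the logstash client side, which sends logs to the logstash server over the lumberjack network protocol;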

Test environment

centos6.5_x64

Software used

java-1.7.0-openjdk

elasticsearch-1.4.2.tar.gz

master.tar.gz (the elasticsearch-servicewrapper archive, unpacked below)

logstash-1.4.2.tar.gz

kibana-3.1.2.tar.gz

Installation

yum install -y java-1.7.0-openjdk*

java -version

java version "1.7.0_111"

tar zxvf elasticsearch-1.4.2.tar.gz

mv elasticsearch-1.4.2 /usr/local/

ln -s /usr/local/elasticsearch-1.4.2 /usr/local/elasticsearch

tar zxvf master.tar.gz

mv elasticsearch-servicewrapper-master/service/ /usr/local/elasticsearch/bin/

/usr/local/elasticsearch/bin/service/elasticsearch start

Starting Elasticsearch...

Waiting for Elasticsearch......

running: PID:2496
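The wrapper reports the PID once the JVM has been launched, but the HTTP port may need a few more seconds before it answers. A minimal sketch of a retry helper for that gap follows; `wait_until` is a hypothetical name (not part of Elasticsearch or the service wrapper), and the usage line assumes curl is installed.

```shell
# wait_until TRIES DELAY COMMAND...
# Runs COMMAND until it succeeds, at most TRIES times, sleeping DELAY
# seconds between attempts. Returns 0 on success, 1 if every attempt failed.
wait_until() {
  tries=$1
  delay=$2
  shift 2
  while [ "$tries" -gt 0 ]; do
    if "$@" >/dev/null 2>&1; then
      return 0
    fi
    tries=$((tries - 1))
    # Skip the sleep after the final failed attempt.
    if [ "$tries" -gt 0 ]; then
      sleep "$delay"
    fi
  done
  return 1
}

# Usage (-f makes curl treat HTTP errors as failures):
#   wait_until 30 1 curl -sf http://localhost:9200 && echo "Elasticsearch is up"
```

Passing the probe as a command keeps the helper independent of curl, so the same loop could wrap any other check.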

netstat -tuplna | grep 9300

tcp 0 0 :::9300 :::* LISTEN 2498/java

tcp 0 0 ::ffff:192.168.31.103:44390 ::ffff:192.168.31.103:9300 ESTABLISHED 2498/java

ps -ef | grep 9300

root 2566 2161 0 20:04 pts/0 00:00:00 grep 9300

curl -X GET http://localhost:9200

{

"status" : 200,

"name" : "Time Bomb",

"cluster_name" : "elasticsearch",

"version" : {

"number" : "1.4.2",

"build_hash" : "927caff6f05403e936c20bf4529f144f0c89fd8c",

"build_timestamp" : "2014-12-16T14:11:12Z",

"build_snapshot" : false,

"lucene_version" : "4.10.2"

},

"tagline" : "You Know, for Search"

}
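When scripting the install it can help to pull the version number out of that banner. The following is only a sketch: `es_version` is a hypothetical helper, and it uses plain text matching that relies on the banner layout shown above rather than a real JSON parser.

```shell
# Read the JSON banner on stdin and print the value of "number".
# Text matching only: it expects "number" and its value on the same line.
es_version() {
  sed -n 's/.*"number"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' | head -n 1
}

# Usage:
#   curl -s http://localhost:9200 | es_version
```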

tar zxvf logstash-1.4.2.tar.gz

mv logstash-1.4.2 /usr/local/

ln -s /usr/local/logstash-1.4.2 /usr/local/logstash

/usr/local/logstash/bin/logstash -e 'input { stdin { } } output { stdout {} }'

mkdir -p /usr/local/logstash/etc

vim /usr/local/logstash/etc/hello_search.conf (this config file does not exist by default, so create it by hand)

input {

stdin {

type => "human"

}

}

output {

stdout {

codec => rubydebug

}

elasticsearch {

host => "192.168.31.243"

port => 9300

}

}

/usr/local/logstash/bin/logstash -f /usr/local/logstash/etc/hello_search.conf &

{

"message" => "hello word",

"@version" => "1",

"@timestamp" => "2016-09-24T12:40:42.081Z",

"type" => "human",

"host" => "0.0.0.0"

}
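To confirm that the event also reached Elasticsearch, you can search the index it was written to. Since the config above sets no `index` option, the elasticsearch output should use its default daily index, `logstash-YYYY.MM.dd`, taken from the event's UTC `@timestamp`. A small sketch that derives the name; `logstash_index` is a hypothetical helper, and the host and query in the usage line are placeholders.

```shell
# Print the daily index name for an ISO 8601 UTC timestamp such as the
# "@timestamp" value above: keep the date part and turn "-" into ".".
logstash_index() {
  day=$(printf '%s\n' "$1" | cut -c1-10 | sed 's/-/./g')
  printf 'logstash-%s\n' "$day"
}

# Usage:
#   idx=$(logstash_index 2016-09-24T12:40:42.081Z)
#   curl -s "http://localhost:9200/$idx/_search?q=type:human&pretty"
```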

tar zxvf kibana-3.1.2.tar.gz

mv kibana-3.1.2 /var/www/html/kibana

vim /var/www/html/kibana/config.js

elasticsearch: "http://"+window.location.hostname+":9200"

Change the line above to:

elasticsearch: "http://192.168.31.243:9200",

vim /usr/local/elasticsearch/config/elasticsearch.yml

http.cors.enabled: true (append this line to the end of the config file)

/usr/local/elasticsearch/bin/service/elasticsearch restart (restart so the new configuration is loaded)

service httpd restart

vim /usr/local/logstash/etc/logstash_agent.conf

input {

file {

type => "http.access"

path => ["/var/log/httpd/access_log"]

}

file {

type => "http.error"

path => ["/var/log/httpd/error_log"]

}

file {

type => "messages"

path => ["/var/log/messages"]

}

}

output {

elasticsearch {

host => "192.168.31.243"

port => 9300

}

}
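A missing brace is an easy mistake in hand-written Logstash configs, so a quick count before starting the agent can save a failed launch. This is a rough sanity check under simple assumptions, not a config parser: `braces_balanced` is a hypothetical helper that only compares the number of `{` and `}` and ignores quoting and nesting order.

```shell
# Succeeds when the file given as $1 contains as many "{" as "}".
braces_balanced() {
  opens=$(tr -cd '{' < "$1" | wc -c)
  closes=$(tr -cd '}' < "$1" | wc -c)
  [ "$opens" -eq "$closes" ]
}

# Usage:
#   braces_balanced /usr/local/logstash/etc/logstash_agent.conf || echo "unbalanced braces"
```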

nohup /usr/local/logstash/bin/logstash -f /usr/local/logstash/etc/logstash_agent.conf &

ps -ef | grep 9292

root 10834 2682 0 21:03 pts/1 00:00:00 grep 9292

ps -ef | grep 9300

root 10838 2682 0 21:04 pts/1 00:00:00 grep 9300

ps -ef | grep 9200

root 10840 2682 0 21:04 pts/1 00:00:00 grep 9200
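Note that `ps` lists processes, not ports, so each `ps -ef | grep <port>` above matches only the grep command itself. The `netstat` call used earlier is the more direct way to see whether a port is open. A minimal sketch that filters its output; `port_listening` is a hypothetical helper and assumes the column layout of `netstat -tln` on this system (local address in column 4, state in column 6).

```shell
# Reads `netstat -tln` style output on stdin and succeeds when some
# line is in state LISTEN on the port given as $1.
port_listening() {
  awk -v suffix=":$1" '
    $6 == "LISTEN" {
      addr = $4
      # Compare the end of the local address with ":PORT".
      if (substr(addr, length(addr) - length(suffix) + 1) == suffix) found = 1
    }
    END { exit !found }
  '
}

# Usage:
#   netstat -tln | port_listening 9200 && echo "9200 is listening"
#   netstat -tln | port_listening 9300 && echo "9300 is listening"
```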

Finally, open http://serverip/kibana in a browser.