Basic usage flow

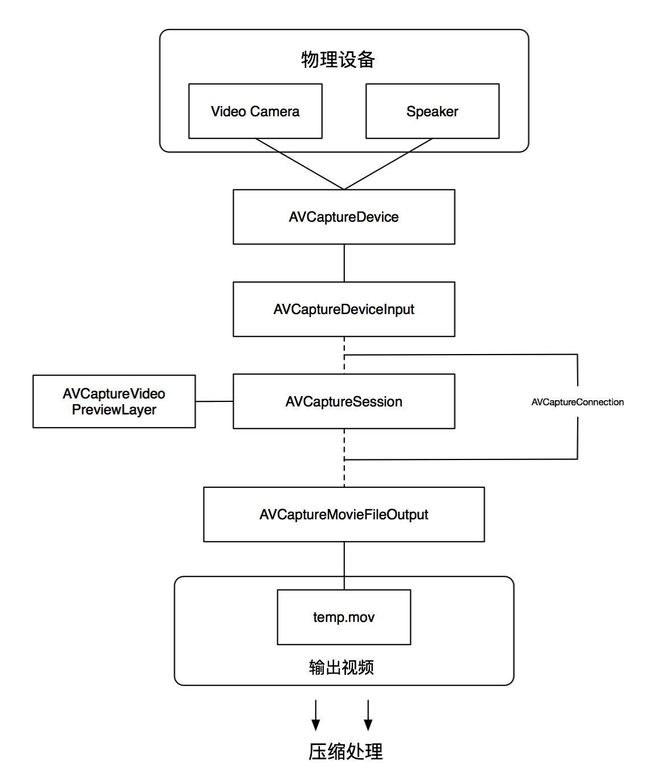

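AVCaptureSession is the central class of AVFoundation's capture pipeline: it captures video and audio and coordinates the flow of data from inputs to outputs. The diagram above, found online, shows the classes that surround AVCaptureSession.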

Overview of the core classes around AVCaptureSession


Common operations on a session:

1. Create an AVCaptureSession

Set the sessionPreset, which determines the quality level (resolution and bitrate) of the output streams.

// 1 Create the session

AVCaptureSession *session = [AVCaptureSession new];

// Set the session's capture resolution

if ([[UIDevice currentDevice] userInterfaceIdiom] == UIUserInterfaceIdiomPhone)

[session setSessionPreset:AVCaptureSessionPreset640x480];

else

[session setSessionPreset:AVCaptureSessionPresetPhoto];
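Not every device supports every preset, so a more defensive variant can ask the session before applying one. The sketch below is an illustration, not part of SquareCam: `ApplyPreferredPreset` is a hypothetical helper name, and falling back to AVCaptureSessionPresetHigh is an assumed choice. `canSetSessionPreset:` is the AVFoundation call that performs the check.

```objectivec
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper (not in SquareCam): apply `preferred` if the session
// supports it, otherwise fall back to AVCaptureSessionPresetHigh.
static void ApplyPreferredPreset(AVCaptureSession *session,
                                 AVCaptureSessionPreset preferred)
{
    // Batch the change so it is applied atomically.
    [session beginConfiguration];
    if ([session canSetSessionPreset:preferred]) {
        session.sessionPreset = preferred;
    } else if ([session canSetSessionPreset:AVCaptureSessionPresetHigh]) {
        // Assumed fallback for this sketch.
        session.sessionPreset = AVCaptureSessionPresetHigh;
    }
    [session commitConfiguration];
}
```

For example, `ApplyPreferredPreset(session, AVCaptureSessionPreset640x480);` would replace the unconditional `setSessionPreset:` call above.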

2. Add an input to the session

Inputs usually carry video or audio data, and you can add both. The input source for an AVCaptureSession is an AVCaptureDeviceInput.

// 2 Get the camera device (the back camera by default) and add it to the capture session

NSError *error = nil;

AVCaptureDevice *device = [AVCaptureDevice defaultDeviceWithMediaType:AVMediaTypeVideo];

AVCaptureDeviceInput *deviceInput = [AVCaptureDeviceInput deviceInputWithDevice:device error:&error];

isUsingFrontFacingCamera = NO;

if ([session canAddInput:deviceInput]){

[session addInput:deviceInput];

}
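The snippet above does not check whether a camera was found or whether creating the input failed. A minimal sketch of a stricter version follows, assuming iOS 10 or later for the device lookup by type and position. `AddCameraInput` is a hypothetical helper name and is not part of SquareCam.

```objectivec
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: attach the wide-angle camera at `position` to `session`.
// Returns the input on success, or nil on failure (*outError may then be set).
static AVCaptureDeviceInput *AddCameraInput(AVCaptureSession *session,
                                            AVCaptureDevicePosition position,
                                            NSError **outError)
{
    AVCaptureDevice *device =
        [AVCaptureDevice defaultDeviceWithDeviceType:AVCaptureDeviceTypeBuiltInWideAngleCamera
                                           mediaType:AVMediaTypeVideo
                                            position:position];
    if (device == nil) {
        return nil; // no matching camera, e.g. when running in the simulator
    }

    AVCaptureDeviceInput *input =
        [AVCaptureDeviceInput deviceInputWithDevice:device error:outError];
    if (input == nil || ![session canAddInput:input]) {
        return nil;
    }

    [session addInput:input];
    return input;
}
```

A caller could write `AddCameraInput(session, AVCaptureDevicePositionFront, &error)` to select the front camera instead of the default back camera.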

3. Add outputs to the session

Add an AVCaptureOutput, the output side of the AVCaptureSession. Outputs generally fall into three kinds: audio/video data, still images, and files.

- Audio and video data output: AVCaptureAudioDataOutput, AVCaptureVideoDataOutput.

- Still image output: AVCaptureStillImageOutput (deprecated in iOS 10 in favor of AVCapturePhotoOutput).

- File output: AVCaptureMovieFileOutput.

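If you need the raw audio or video frames, configure the output before adding it to the session: mainly the sample format (videoSettings for video, or the corresponding audio settings), the sample buffer delegate, and the dispatch queue the delegate is called on.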

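// 4 Create the AVCaptureStillImageOutput used for taking photos, observe its "capturingStillImage" key path via KVO, and add the output to the session. When "capturingStillImage" becomes YES a still capture is starting; the KVO callback uses this to show a flash effect.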

stillImageOutput = [AVCaptureStillImageOutput new];

[stillImageOutput addObserver:self forKeyPath:@"capturingStillImage" options:NSKeyValueObservingOptionNew context:(__bridge void * _Nullable)(AVCaptureStillImageIsCapturingStillImageContext)];

if ([session canAddOutput:stillImageOutput]){

[session addOutput:stillImageOutput];

}

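// 5 Create the video data output that delivers preview frames, configure its videoSettings, set the delegate and the queue its callbacks run on, then add the output to the session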

videoDataOutput = [AVCaptureVideoDataOutput new];

// we want BGRA, both CoreGraphics and OpenGL work well with 'BGRA'

NSDictionary *rgbOutputSettings = [NSDictionary dictionaryWithObject:

[NSNumber numberWithInt:kCMPixelFormat_32BGRA] forKey:(id)kCVPixelBufferPixelFormatTypeKey];

[videoDataOutput setVideoSettings:rgbOutputSettings];

[videoDataOutput setAlwaysDiscardsLateVideoFrames:YES]; // discard if the data output queue is blocked (as we process the still image)

// create a serial dispatch queue used for the sample buffer delegate as well as when a still image is captured

// a serial dispatch queue must be used to guarantee that video frames will be delivered in order

// see the header doc for setSampleBufferDelegate:queue: for more information

videoDataOutputQueue = dispatch_queue_create("VideoDataOutputQueue", DISPATCH_QUEUE_SERIAL);

[videoDataOutput setSampleBufferDelegate:self queue:videoDataOutputQueue];

if ([session canAddOutput:videoDataOutput]){

[session addOutput:videoDataOutput];

}
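Once the video data output is attached and its connection is enabled, frames arrive through the AVCaptureVideoDataOutputSampleBufferDelegate callback on the queue passed to `setSampleBufferDelegate:queue:`. The following is a minimal sketch of such a receiver. `FrameReceiver` is a hypothetical class name; SquareCam implements the method on its view controller instead, and logging the frame size is only a placeholder for real processing.

```objectivec
#import <AVFoundation/AVFoundation.h>

// Hypothetical receiver class for this sketch.
@interface FrameReceiver : NSObject <AVCaptureVideoDataOutputSampleBufferDelegate>
@end

@implementation FrameReceiver

// Called once per delivered frame, on the delegate queue.
- (void)captureOutput:(AVCaptureOutput *)output
didOutputSampleBuffer:(CMSampleBufferRef)sampleBuffer
       fromConnection:(AVCaptureConnection *)connection
{
    CVImageBufferRef pixelBuffer = CMSampleBufferGetImageBuffer(sampleBuffer);
    if (pixelBuffer == NULL) {
        return; // the sample buffer carries no image data
    }

    size_t width = CVPixelBufferGetWidth(pixelBuffer);
    size_t height = CVPixelBufferGetHeight(pixelBuffer);
    NSLog(@"Received frame %zu x %zu", width, height);
}

@end
```

Because `alwaysDiscardsLateVideoFrames` is YES, work done in this callback should stay short; frames that arrive while the queue is still busy are dropped rather than queued.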

4. Use AVCaptureConnection to set key properties of the input/output link

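Once inputs and outputs have been added to the AVCaptureSession, you can obtain an AVCaptureConnection from an audio or video output and use it to set key properties of that output's stream, such as the video orientation (videoOrientation). Note that videoOrientation is not the same as the device orientation: by default, recorded video is rotated by 90 degrees. This comes from how the camera sensor is mounted; search online for details.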

// The connection is disabled here, so the delegate receives no frames until it is enabled again

[[videoDataOutput connectionWithMediaType:AVMediaTypeVideo] setEnabled:NO];

AVCaptureConnection *videoCon = [videoDataOutput connectionWithMediaType:AVMediaTypeVideo];

// The original face-scan code did not set the orientation, so its recorded video was rotated by 90 degrees, which is the default behavior

if ([videoCon isVideoOrientationSupported]) {

// videoCon.videoOrientation = AVCaptureVideoOrientationPortrait;

// The line below matches the system default video orientation. To get upright images from the output sample buffers, set the orientation to portrait instead

//videoCon.videoOrientation = AVCaptureVideoOrientationLandscapeLeft;

}
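Because videoOrientation and the device orientation are separate enums, code that follows device rotation needs an explicit mapping, and the two landscape cases are swapped between them. The sketch below shows one such mapping. `VideoOrientationForDeviceOrientation` is a hypothetical helper name, and treating face-up, face-down, and unknown orientations as portrait is an assumption made for this example.

```objectivec
#import <AVFoundation/AVFoundation.h>
#import <UIKit/UIKit.h>

// Hypothetical helper: map a device orientation to the video orientation
// that produces upright frames.
static AVCaptureVideoOrientation
VideoOrientationForDeviceOrientation(UIDeviceOrientation deviceOrientation)
{
    switch (deviceOrientation) {
        case UIDeviceOrientationPortraitUpsideDown:
            return AVCaptureVideoOrientationPortraitUpsideDown;
        case UIDeviceOrientationLandscapeLeft:
            // Landscape cases are mirrored between the two enums.
            return AVCaptureVideoOrientationLandscapeRight;
        case UIDeviceOrientationLandscapeRight:
            return AVCaptureVideoOrientationLandscapeLeft;
        default:
            // Portrait, face up, face down, unknown: assumed portrait here.
            return AVCaptureVideoOrientationPortrait;
    }
}
```

The result can be assigned to `videoCon.videoOrientation` inside the `isVideoOrientationSupported` check shown above.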

5. The video preview layer, AVCaptureVideoPreviewLayer

After the inputs and outputs have been added to the session, you can create an AVCaptureVideoPreviewLayer from the session. This layer shows the live camera preview.

previewLayer = [[AVCaptureVideoPreviewLayer alloc] initWithSession:session];

[previewLayer setBackgroundColor:[[UIColor blackColor] CGColor]];

[previewLayer setVideoGravity:AVLayerVideoGravityResizeAspectFill]; // fill the layer while preserving the aspect ratio

CALayer *rootLayer = [previewView layer];

[rootLayer setMasksToBounds:YES];

[previewLayer setFrame:[rootLayer bounds]];

[rootLayer addSublayer:previewLayer];

6. Start the session

// 7 Start the session; outputs with an enabled connection begin receiving sample buffer callbacks

[session startRunning];
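`startRunning` blocks until the session has started, and capture only works once the user has granted camera access. A hedged sketch of one way to handle both follows. `StartSessionWhenAuthorized` and the `sessionQueue` parameter are hypothetical names introduced for this example, and the app is assumed to declare NSCameraUsageDescription in its Info.plist.

```objectivec
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: ask for camera permission, then start the session
// on a caller-supplied serial queue so the calling thread is not blocked.
static void StartSessionWhenAuthorized(AVCaptureSession *session,
                                       dispatch_queue_t sessionQueue)
{
    [AVCaptureDevice requestAccessForMediaType:AVMediaTypeVideo
                             completionHandler:^(BOOL granted) {
        if (!granted) {
            return; // the user denied access; nothing to start
        }
        dispatch_async(sessionQueue, ^{
            if (![session isRunning]) {
                [session startRunning];
            }
        });
    }];
}
```

A caller might create the queue once with `dispatch_queue_create("SessionQueue", DISPATCH_QUEUE_SERIAL)` and reuse it for later `stopRunning` calls, so that start and stop never overlap.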

Complete session initialization from Apple's SquareCam demo

See the comments for details.

/**

* Camera setup method

*/

- (void)setupAVCapture

{

NSError *error = nil;

// 1 Create the session

AVCaptureSession *session = [AVCaptureSession new];

// 2 Set the session's capture resolution

if ([[UIDevice currentDevice] userInterfaceIdiom] == UIUserInterfaceIdiomPhone)

[session setSessionPreset:AVCaptureSessionPreset640x480];

else

[session setSessionPreset:AVCaptureSessionPresetPhoto];

// 3 Get the camera device (the back camera by default) and add it to the capture session

AVCaptureDevice *device = [AVCaptureDevice defaultDeviceWithMediaType:AVMediaTypeVideo];

AVCaptureDeviceInput *deviceInput = [AVCaptureDeviceInput deviceInputWithDevice:device error:&error];

isUsingFrontFacingCamera = NO;

if ( [session canAddInput:deviceInput] )

[session addInput:deviceInput];

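// 4 Create the AVCaptureStillImageOutput used for taking photos, observe its "capturingStillImage" key path via KVO, and add the output to the session. When "capturingStillImage" becomes YES a still capture is starting; the KVO callback uses this to show a flash effect.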

stillImageOutput = [AVCaptureStillImageOutput new];

[stillImageOutput addObserver:self forKeyPath:@"capturingStillImage" options:NSKeyValueObservingOptionNew context:(__bridge void * _Nullable)(AVCaptureStillImageIsCapturingStillImageContext)];

if ( [session canAddOutput:stillImageOutput] )

[session addOutput:stillImageOutput];

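// 5 Create the video data output that delivers preview frames, configure its videoSettings, set the delegate and the queue its callbacks run on, then add the output to the session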

videoDataOutput = [AVCaptureVideoDataOutput new];

// we want BGRA, both CoreGraphics and OpenGL work well with 'BGRA'

NSDictionary *rgbOutputSettings = [NSDictionary dictionaryWithObject:

[NSNumber numberWithInt:kCMPixelFormat_32BGRA] forKey:(id)kCVPixelBufferPixelFormatTypeKey];

[videoDataOutput setVideoSettings:rgbOutputSettings];

[videoDataOutput setAlwaysDiscardsLateVideoFrames:YES]; // discard if the data output queue is blocked (as we process the still image)

// create a serial dispatch queue used for the sample buffer delegate as well as when a still image is captured

// a serial dispatch queue must be used to guarantee that video frames will be delivered in order

// see the header doc for setSampleBufferDelegate:queue: for more information

videoDataOutputQueue = dispatch_queue_create("VideoDataOutputQueue", DISPATCH_QUEUE_SERIAL);

[videoDataOutput setSampleBufferDelegate:self queue:videoDataOutputQueue];

if ( [session canAddOutput:videoDataOutput] )

[session addOutput:videoDataOutput];

// The connection is disabled here, so the delegate receives no frames until it is enabled again

[[videoDataOutput connectionWithMediaType:AVMediaTypeVideo] setEnabled:NO];

AVCaptureConnection *videoCon = [videoDataOutput connectionWithMediaType:AVMediaTypeVideo];

// The original face-scan code did not set the orientation, so its recorded video was rotated by 90 degrees, which is the default behavior

if ([videoCon isVideoOrientationSupported]) {

// videoCon.videoOrientation = AVCaptureVideoOrientationPortrait;

// The line below matches the system default video orientation. To get upright images from the output sample buffers, set the orientation to portrait instead

//videoCon.videoOrientation = AVCaptureVideoOrientationLandscapeLeft;

}

effectiveScale = 1.0;

// 6 Create the camera's live preview layer, set its video gravity to AVLayerVideoGravityResizeAspectFill, size it to the root layer's bounds, and add it to the view

previewLayer = [[AVCaptureVideoPreviewLayer alloc] initWithSession:session];

[previewLayer setBackgroundColor:[[UIColor blackColor] CGColor]];

[previewLayer setVideoGravity:AVLayerVideoGravityResizeAspectFill]; // fill the layer while preserving the aspect ratio

CALayer *rootLayer = [previewView layer];

[rootLayer setMasksToBounds:YES];

[previewLayer setFrame:[rootLayer bounds]];

[rootLayer addSublayer:previewLayer];

// 7 Start the session; outputs with an enabled connection begin receiving sample buffer callbacks

[session startRunning];

bail:

if (error) {

UIAlertView *alertView = [[UIAlertView alloc] initWithTitle:[NSString stringWithFormat:@"Failed with error %d", (int)[error code]]

message:[error localizedDescription]

delegate:nil

cancelButtonTitle:@"Dismiss"

otherButtonTitles:nil];

[alertView show];

[self teardownAVCapture];

}

}

For reference, see Apple's official demo: SquareCam