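Test environment

- Prepare three virtual machines (CentOS 7.5). It is strongly recommended that they carry no leftover configuration from docker, k8s, flannel, or similar tools!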

k8s-master: 10.25.151.100

k8s-node-1: 10.25.151.101

k8s-node-2: 10.25.151.102

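- For most of the preliminary information and configuration, refer to the previous post, 《学习kubernetes(一):kubeasz部署基于Flannel的k8s测试环境》 ("Learning Kubernetes (1): Deploying a Flannel-based k8s test environment with kubeasz")

- Pay attention, this one matters: the three virtual machines must have different hostnames! Run the matching command on each machine: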

hostnamectl --static set-hostname k8s-master

------------------

hostnamectl --static set-hostname k8s-node-1

------------------

hostnamectl --static set-hostname k8s-node-2

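The Calico node name is derived from the node's hostname. If two nodes share a hostname, they end up with a single configuration entry in etcd, and no BGP peering is established between them.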
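
Because a duplicated hostname fails quietly, it can be worth checking for collisions before running the playbooks. The following is a minimal sketch, not part of kubeasz: `find_duplicate_hostnames` is a hypothetical helper that reads one hostname per line and prints any name that appears more than once.

```shell
#!/bin/sh
# find_duplicate_hostnames (hypothetical helper): read one hostname per
# line on stdin and print every name that occurs more than once.
find_duplicate_hostnames() {
    sort | uniq -d
}

# The three hostnames used in this walkthrough are all distinct,
# so this call prints nothing.
printf '%s\n' k8s-master k8s-node-1 k8s-node-2 | find_duplicate_hostnames
```

On a real cluster the input could come from running `hostname` on each machine over ssh; that part is left out here because it depends on how the hosts are reachable.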

- On the master, configure the ansible hosts file (only the modified parts are listed)

# cat hosts

[etcd]

10.25.151.100 NODE_NAME=etcd1

[kube-master]

10.25.151.100

[kube-node]

10.25.151.101

10.25.151.102

CLUSTER_NETWORK="calico"

- The installation steps are the same as before

# ansible-playbook 01.prepare.yml

# ansible-playbook 02.etcd.yml

# ansible-playbook 03.docker.yml

# ansible-playbook 04.kube-master.yml

# ansible-playbook 05.kube-node.yml

# ansible-playbook 06.network.yml
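
The six playbooks have to run in this order, and there is little point in continuing once one of them fails. As a sketch under that assumption, the hypothetical helper `run_in_order` below calls a runner once per file and stops at the first non-zero exit status. The example uses `echo` as the runner, so it only prints the file names and deploys nothing.

```shell
#!/bin/sh
# run_in_order RUNNER FILE... (hypothetical helper): call RUNNER once per
# FILE, in the given order, and stop at the first failure.
run_in_order() {
    runner=$1
    shift
    for playbook in "$@"; do
        echo "==> $playbook"
        "$runner" "$playbook" || {
            echo "failed: $playbook" >&2
            return 1
        }
    done
}

# Dry run: 'echo' stands in for ansible-playbook.
run_in_order echo 01.prepare.yml 02.etcd.yml 03.docker.yml \
    04.kube-master.yml 05.kube-node.yml 06.network.yml
```

Replacing `echo` with `ansible-playbook` would run the real steps, assuming the command is executed from the directory that holds the playbooks.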

- Check the cluster status

# kubectl get nodes

NAME STATUS ROLES AGE VERSION

10.25.151.100 Ready master 27m v1.13.4

10.25.151.101 Ready node 26m v1.13.4

10.25.151.102 Ready node 26m v1.13.4

#

# kubectl get pod -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

calico-kube-controllers-6bfc4bd6bd-xlqld 1/1 Running 0 7m6s 10.25.151.100 10.25.151.100

calico-node-fhv5c 1/1 Running 0 7m6s 10.25.151.100 10.25.151.100

calico-node-hvgzx 1/1 Running 0 7m6s 10.25.151.102 10.25.151.102

calico-node-t7zbd 1/1 Running 0 7m6s 10.25.151.101 10.25.151.101

#
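
Reading the STATUS column by eye works for three nodes. For anything larger, a small filter helps. The sketch below assumes the plain-text layout of `kubectl get nodes` shown above (header line first, STATUS in the second column); `not_ready_nodes` is a hypothetical helper name.

```shell
#!/bin/sh
# not_ready_nodes (hypothetical helper): read `kubectl get nodes` text
# output on stdin and print the NAME of every node whose STATUS column is
# anything other than exactly "Ready".
not_ready_nodes() {
    awk 'NR > 1 && NF > 0 && $2 != "Ready" { print $1 }'
}

# Sample input copied from the node listing above; all three nodes are
# Ready, so this prints nothing.
not_ready_nodes <<'EOF'
NAME STATUS ROLES AGE VERSION
10.25.151.100 Ready master 27m v1.13.4
10.25.151.101 Ready node 26m v1.13.4
10.25.151.102 Ready node 26m v1.13.4
EOF
```

Against a live cluster the same function could be fed with `kubectl get nodes | not_ready_nodes`.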

Verifying the network

Start pods on the nodes

# kubectl run test --image=busybox --replicas=3 sleep 30000

kubectl run --generator=deployment/apps.v1 is DEPRECATED and will be removed in a future version. Use kubectl run --generator=run-pod/v1 or kubectl create instead.

deployment.apps/test created

#

# kubectl get pod --all-namespaces -o wide|head -n 4

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

default test-568866f478-58f6j 1/1 Running 0 20m 172.20.140.65 10.25.151.102

default test-568866f478-dfmhv 1/1 Running 0 20m 172.20.235.193 10.25.151.100

default test-568866f478-f9lrw 1/1 Running 0 20m 172.20.109.65 10.25.151.101

Inspecting the network

- Check the Calico node status and its BGP peers (run on the master here)

# calicoctl node status

Calico process is running.

IPv4 BGP status

+---------------+-------------------+-------+----------+-------------+

| PEER ADDRESS | PEER TYPE | STATE | SINCE | INFO |

+---------------+-------------------+-------+----------+-------------+

| 10.25.151.101 | node-to-node mesh | up | 06:29:58 | Established |

| 10.25.151.102 | node-to-node mesh | up | 06:35:24 | Established |

+---------------+-------------------+-------+----------+-------------+

#

# netstat -antlp|grep ESTABLISHED|grep 179

tcp 0 0 10.25.151.100:179 10.25.151.102:37227 ESTABLISHED 23851/bird

tcp 0 0 10.25.151.100:179 10.25.151.101:35681 ESTABLISHED 23851/bird

The master has established BGP sessions with both of the other nodes.
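
The same check can be scripted. The sketch below assumes the table layout that `calicoctl node status` printed above, with the peer address in the first column and the session info in the last. `bgp_peers_down` is a hypothetical helper that prints every peer whose INFO column is not `Established`.

```shell
#!/bin/sh
# bgp_peers_down (hypothetical helper): read `calicoctl node status` text
# output on stdin and print each peer address whose INFO column does not
# say "Established". Header and border lines are skipped because their
# first column is not an IPv4 address.
bgp_peers_down() {
    awk -F'|' '
        $2 ~ /^ *[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+ *$/ && $6 !~ /Established/ {
            gsub(/ /, "", $2)
            print $2
        }'
}

# Sample input copied from the output above; both peers are Established,
# so this prints nothing.
bgp_peers_down <<'EOF'
+---------------+-------------------+-------+----------+-------------+
| PEER ADDRESS | PEER TYPE | STATE | SINCE | INFO |
+---------------+-------------------+-------+----------+-------------+
| 10.25.151.101 | node-to-node mesh | up | 06:29:58 | Established |
| 10.25.151.102 | node-to-node mesh | up | 06:35:24 | Established |
+---------------+-------------------+-------+----------+-------------+
EOF
```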

- Check the address blocks Calico has allocated to each node

# ETCDCTL_API=3 etcdctl --endpoints="http://127.0.0.1:2379" get --prefix /calico/ipam/v2/host

/calico/ipam/v2/host/k8s-master/ipv4/block/172.20.235.192-26

{"state":"confirmed"}

/calico/ipam/v2/host/k8s-node-1/ipv4/block/172.20.109.64-26

{"state":"confirmed"}

/calico/ipam/v2/host/k8s-node-2/ipv4/block/172.20.140.64-26

{"state":"confirmed"}

#

- Check the host network (using the master as an example)

# ip addr

...

3: docker0: mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:ad:cf:d5:b1 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

4: tunl0@NONE: mtu 1440 qdisc noqueue state UNKNOWN group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

inet 172.20.235.192/32 brd 172.20.235.192 scope global tunl0

valid_lft forever preferred_lft forever

5: cali2fa278432d7@if4: mtu 1500 qdisc noqueue state UP group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::ecee:eeff:feee:eeee/64 scope link

valid_lft forever preferred_lft forever

#

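Verifying actual communication

- Take the communication between the busybox containers on the master and on node-1 as an example

In the container on the master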

/ # ip add

1: lo: mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2: tunl0@NONE: mtu 1480 qdisc noop qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

4: eth0@if5: mtu 1500 qdisc noqueue

link/ether 9e:56:f1:0d:a8:d5 brd ff:ff:ff:ff:ff:ff

inet 172.20.235.193/32 scope global eth0

valid_lft forever preferred_lft forever

/ #

/ # ip route

default via 169.254.1.1 dev eth0

169.254.1.1 dev eth0 scope link

-----------------------------------------------------------------

-----------------------------------------------------------------

In the container on node-1

/ # ip addr

1: lo: mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2: tunl0@NONE: mtu 1480 qdisc noop qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

4: eth0@if5: mtu 1500 qdisc noqueue

link/ether 06:e5:35:66:5b:84 brd ff:ff:ff:ff:ff:ff

inet 172.20.109.65/32 scope global eth0

valid_lft forever preferred_lft forever

/ #

/ # ip route

default via 169.254.1.1 dev eth0

169.254.1.1 dev eth0 scope link

/ #

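The container addresses (172.20.235.193 on the master, 172.20.109.65 on node-1) fall inside the blocks Calico allocated to the respective nodes.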
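
To make "falls inside the block" concrete, the sketch below checks a pod address against a block with plain shell arithmetic. The helper names `ip_to_int` and `in_block` are hypothetical, and the block is written as `a.b.c.d/26` rather than the `a.b.c.d-26` form used in the etcd keys.

```shell
#!/bin/sh
# ip_to_int A.B.C.D: print the IPv4 address as a 32-bit integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1            # split the address into its four octets
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# in_block IP NETWORK/LEN: succeed if IP lies inside the given block.
in_block() {
    ip=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")
    len=${2#*/}
    mask=$(( (0xFFFFFFFF << (32 - $len)) & 0xFFFFFFFF ))
    [ $(( $ip & $mask )) -eq $(( $net & $mask )) ]
}

# Pod addresses and blocks taken from the outputs above.
in_block 172.20.235.193 172.20.235.192/26 && echo "master pod is in the master block"
in_block 172.20.109.65 172.20.109.64/26 && echo "node-1 pod is in the node-1 block"
in_block 172.20.140.65 172.20.140.64/26 && echo "node-2 pod is in the node-2 block"
```

A /26 block holds 64 addresses, so each node here can hand out addresses from .192 to .255 or from .64 to .127 within its own block.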

- Trace the route: container (master) -> ens160 (master) -> tunl0 (node-1) -> container (node-1)

/ # traceroute 172.20.109.65

traceroute to 172.20.109.65 (172.20.109.65), 30 hops max, 46 byte packets

1 10.25.151.100 (10.25.151.100) 0.018 ms 0.014 ms 0.009 ms

2 172.20.109.64 (172.20.109.64) 0.978 ms 0.540 ms 0.583 ms

3 172.20.109.65 (172.20.109.65) 0.414 ms 0.843 ms 0.538 ms

/ #

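- Capture on the master's physical interface: the ICMP packets are encapsulated in IPIP (IP-in-IP) packets, as the ipip-proto-4 tag in the output shows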

# tcpdump -i ens160 -enn 'ip[2:2] > 1200 and ip[2:2] < 1500'

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on ens160, link-type EN10MB (Ethernet), capture size 262144 bytes

03:12:06.020274 00:50:56:a9:21:d2 > 00:50:56:a9:1e:4a, ethertype IPv4 (0x0800), length 1262: 10.25.151.100 > 10.25.151.101: 172.20.235.193 > 172.20.109.65: ICMP echo request, id 3840, seq 34, length 1208 (ipip-proto-4)

03:12:06.020680 00:50:56:a9:1e:4a > 00:50:56:a9:21:d2, ethertype IPv4 (0x0800), length 1262: 10.25.151.101 > 10.25.151.100: 172.20.109.65 > 172.20.235.193: ICMP echo reply, id 3840, seq 34, length 1208 (ipip-proto-4)

-

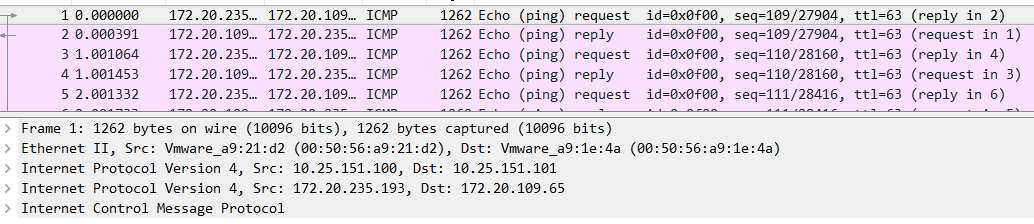

抓包后用wireshark解析,可以看到IPIP报文,外层IP是node的IP,内层是容器的IP

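Compared with the original ICMP packet, the only addition is one extra IP header (20 bytes for an IPv4 header without options), so the encapsulation overhead is small.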
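
The lengths in the tcpdump output can be cross-checked with a bit of arithmetic. The sketch below assumes an untagged Ethernet II frame (14-byte header) and IPv4 headers without options (20 bytes each). The ICMP length of 1208 is the value tcpdump reported.

```shell
#!/bin/sh
ETH_HEADER=14    # Ethernet II header, no VLAN tag (assumption)
IP_HEADER=20     # IPv4 header without options (assumption)
ICMP_LEN=1208    # "length 1208" from the capture: ICMP header + payload

# With IPIP: Ethernet + outer IP + inner IP + ICMP
frame_len=$(( $ETH_HEADER + $IP_HEADER + $IP_HEADER + $ICMP_LEN ))
# Without encapsulation: Ethernet + IP + ICMP
plain_len=$(( $ETH_HEADER + $IP_HEADER + $ICMP_LEN ))

echo "frame length with IPIP:    $frame_len"
echo "frame length without IPIP: $plain_len"
echo "IPIP overhead per packet:  $(( $frame_len - $plain_len )) bytes"
```

The first figure comes out at 1262, which matches the frame length tcpdump printed for both the echo request and the echo reply.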