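Background

After my first attempt at building JNI code with CMake, this time the CMake file gets an upgrade: I use code found online to build a simple video player. This post follows the article "Android+FFmpeg+ANativeWindow视频解码播放" (video decoding and playback with Android, FFmpeg and ANativeWindow) and rebuilds it under CMake. I'm sharing the process here.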

Gradle configuration

    apply plugin: 'com.android.application'

    android {
        compileSdkVersion 22
        buildToolsVersion "23.0.1"
        //sourceSets.main.jni.srcDirs = ['jniLibs']

        defaultConfig {
            applicationId "jonesx.videoplayer"
            minSdkVersion 9
            targetSdkVersion 22
            versionCode 1
            versionName "1.0"
            ndk {
                abiFilters 'armeabi'
            }
            externalNativeBuild {
                cmake {
                    arguments '-DANDROID_TOOLCHAIN=clang','-DANDROID_STL=gnustl_static'
                }
            }
        }

        buildTypes {
            release {
                minifyEnabled false
                proguardFiles getDefaultProguardFile('proguard-android.txt'), 'proguard-rules.pro'
            }
        }

        externalNativeBuild {
            cmake {
                path "src/main/cpp/CMakeLists.txt"
            }
        }
    }

    dependencies {
        compile fileTree(dir: 'libs', include: ['*.jar'])
        compile 'com.android.support:appcompat-v7:22.2.1'
        compile 'com.android.support:design:22.2.1'
    }

CMake configuration

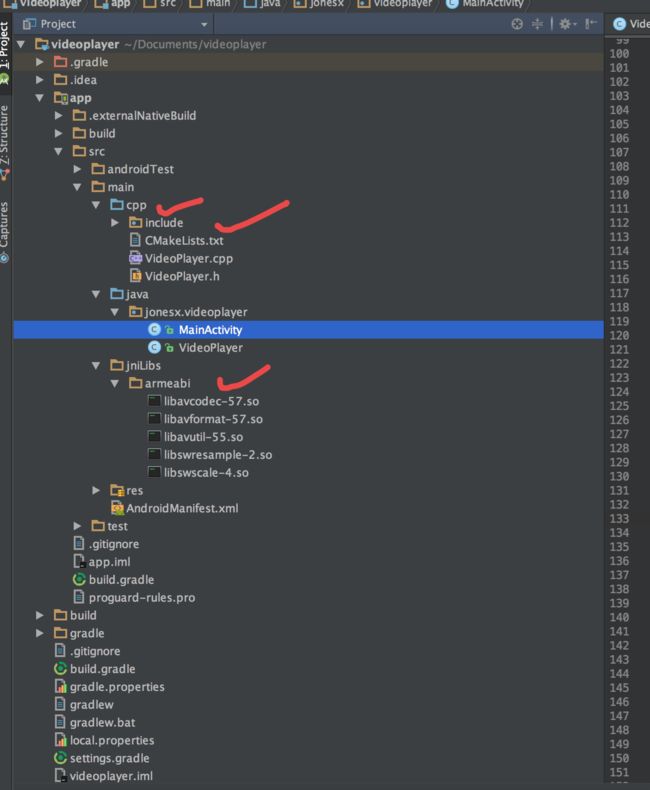

- The layout of the whole project.

- The CMake configuration file

CMakeLists.txt

    cmake_minimum_required(VERSION 3.4.1)

    set(lib_src_DIR ${CMAKE_SOURCE_DIR}/../jniLibs/${ANDROID_ABI})

    include_directories(
            ${CMAKE_SOURCE_DIR}/include
    )

    add_library(avcodec-57_lib SHARED IMPORTED)
    set_target_properties(avcodec-57_lib PROPERTIES IMPORTED_LOCATION
            ${lib_src_DIR}/libavcodec-57.so)

    add_library(avformat-57_lib SHARED IMPORTED)
    set_target_properties(avformat-57_lib PROPERTIES IMPORTED_LOCATION
            ${lib_src_DIR}/libavformat-57.so)

    add_library(avutil-55_lib SHARED IMPORTED)
    set_target_properties(avutil-55_lib PROPERTIES IMPORTED_LOCATION
            ${lib_src_DIR}/libavutil-55.so)

    add_library(swresample-2_lib SHARED IMPORTED)
    set_target_properties(swresample-2_lib PROPERTIES IMPORTED_LOCATION
            ${lib_src_DIR}/libswresample-2.so)

    add_library(swscale-4_lib SHARED IMPORTED)
    set_target_properties(swscale-4_lib PROPERTIES IMPORTED_LOCATION
            ${lib_src_DIR}/libswscale-4.so)

    # build application's shared lib
    add_library(VideoPlayer SHARED
            VideoPlayer.cpp)

    # Include libraries needed for VideoPlayer lib
    target_link_libraries(VideoPlayer
            log
            android
            avcodec-57_lib
            avformat-57_lib
            avutil-55_lib
            swresample-2_lib
            swscale-4_lib)

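A quick explanation: each prebuilt .so that the player depends on is declared as an imported library. Pay attention to the paths; I was stuck on this for quite a while, and luckily a colleague bailed me out.

    add_library(swscale-4_lib SHARED IMPORTED)
    set_target_properties(swscale-4_lib PROPERTIES IMPORTED_LOCATION
            ${lib_src_DIR}/libswscale-4.so)

Finally, don't forget to add the corresponding library to target_link_libraries.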

VideoPlayer.cpp

    //
    // Created by Jonesx on 2016/3/20.
    //
    #include <jni.h>
    #include "VideoPlayer.h"
    #include <android/log.h>
    #include <android/native_window.h>
    #include <android/native_window_jni.h>
    #include <cstring> // memcpy

    extern "C" {
    #include "libavcodec/avcodec.h"
    #include "libavformat/avformat.h"
    #include "libswscale/swscale.h"
    #include "libavutil/imgutils.h"
    }

    #define LOG_TAG "videoplayer"
    #define LOGD(...) __android_log_print(ANDROID_LOG_DEBUG, LOG_TAG, __VA_ARGS__)

    JNIEXPORT jint JNICALL Java_jonesx_videoplayer_VideoPlayer_play
            (JNIEnv *env, jclass clazz, jobject surface) {
        LOGD("play");

        // Path of the video file on the SD card; change it, or pass it in through JNI
        const char *file_name = "/sdcard/test.mp4";

        av_register_all();

        AVFormatContext *pFormatCtx = avformat_alloc_context();

        // Open video file
        if (avformat_open_input(&pFormatCtx, file_name, NULL, NULL) != 0) {
            LOGD("Couldn't open file:%s\n", file_name);
            return -1; // Couldn't open file
        }

        // Retrieve stream information
        if (avformat_find_stream_info(pFormatCtx, NULL) < 0) {
            LOGD("Couldn't find stream information.");
            return -1;
        }

        // Find the first video stream
        int videoStream = -1;
        for (unsigned int i = 0; i < pFormatCtx->nb_streams; i++) {
            if (pFormatCtx->streams[i]->codec->codec_type == AVMEDIA_TYPE_VIDEO
                && videoStream < 0) {
                videoStream = (int) i;
            }
        }
        if (videoStream == -1) {
            LOGD("Didn't find a video stream.");
            return -1; // Didn't find a video stream
        }

        // Get a pointer to the codec context for the video stream
        AVCodecContext *pCodecCtx = pFormatCtx->streams[videoStream]->codec;

        // Find the decoder for the video stream
        AVCodec *pCodec = avcodec_find_decoder(pCodecCtx->codec_id);
        if (pCodec == NULL) {
            LOGD("Codec not found.");
            return -1; // Codec not found
        }

        if (avcodec_open2(pCodecCtx, pCodec, NULL) < 0) {
            LOGD("Could not open codec.");
            return -1; // Could not open codec
        }

        // Get the native window
        ANativeWindow *nativeWindow = ANativeWindow_fromSurface(env, surface);

        // Get the video width and height
        int videoWidth = pCodecCtx->width;
        int videoHeight = pCodecCtx->height;

        // Set the native window's buffer size; the buffer is scaled automatically to fit the window
        ANativeWindow_setBuffersGeometry(nativeWindow, videoWidth, videoHeight,
                                         WINDOW_FORMAT_RGBA_8888);
        ANativeWindow_Buffer windowBuffer;

        // Allocate video frame
        AVFrame *pFrame = av_frame_alloc();

        // Frame used for rendering
        AVFrame *pFrameRGBA = av_frame_alloc();
        if (pFrameRGBA == NULL || pFrame == NULL) {
            LOGD("Could not allocate video frame.");
            return -1;
        }

        // Determine required buffer size and allocate buffer
        // The data in buffer is what gets rendered, in RGBA format
        int numBytes = av_image_get_buffer_size(AV_PIX_FMT_RGBA, pCodecCtx->width,
                                                pCodecCtx->height, 1);
        uint8_t *buffer = (uint8_t *) av_malloc(numBytes * sizeof(uint8_t));
        av_image_fill_arrays(pFrameRGBA->data, pFrameRGBA->linesize, buffer, AV_PIX_FMT_RGBA,
                             pCodecCtx->width, pCodecCtx->height, 1);

        // Decoded frames are not in RGBA, so they must be converted before rendering
        struct SwsContext *sws_ctx = sws_getContext(pCodecCtx->width,
                                                    pCodecCtx->height,
                                                    pCodecCtx->pix_fmt,
                                                    pCodecCtx->width,
                                                    pCodecCtx->height,
                                                    AV_PIX_FMT_RGBA,
                                                    SWS_BILINEAR,
                                                    NULL,
                                                    NULL,
                                                    NULL);

        int frameFinished;
        AVPacket packet;
        while (av_read_frame(pFormatCtx, &packet) >= 0) {
            // Is this a packet from the video stream?
            if (packet.stream_index == videoStream) {
                // Decode video frame
                avcodec_decode_video2(pCodecCtx, pFrame, &frameFinished, &packet);

                // A single decode call does not always produce a complete frame
                if (frameFinished) {
                    // lock native window buffer
                    ANativeWindow_lock(nativeWindow, &windowBuffer, 0);

                    // Convert the pixel format
                    sws_scale(sws_ctx, (uint8_t const *const *) pFrame->data,
                              pFrame->linesize, 0, pCodecCtx->height,
                              pFrameRGBA->data, pFrameRGBA->linesize);

                    // Get the strides (windowBuffer.stride is in pixels, 4 bytes per RGBA pixel)
                    uint8_t *dst = (uint8_t *) windowBuffer.bits;
                    int dstStride = windowBuffer.stride * 4;
                    uint8_t *src = (pFrameRGBA->data[0]);
                    int srcStride = pFrameRGBA->linesize[0];

                    // The window stride and the frame stride differ, so copy row by row
                    int h;
                    for (h = 0; h < videoHeight; h++) {
                        memcpy(dst + h * dstStride, src + h * srcStride, srcStride);
                    }

                    ANativeWindow_unlockAndPost(nativeWindow);
                }
            }
            av_packet_unref(&packet);
        }

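        av_free(buffer);
        // Frames from av_frame_alloc() must be released with av_frame_free()
        av_frame_free(&pFrameRGBA);

        // Free the YUV frame
        av_frame_free(&pFrame);

        // Release the scaler context and the native window reference
        sws_freeContext(sws_ctx);
        ANativeWindow_release(nativeWindow);

        // Close the codecs
        avcodec_close(pCodecCtx);

        // Close the video file
        avformat_close_input(&pFormatCtx);
        return 0;
    }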

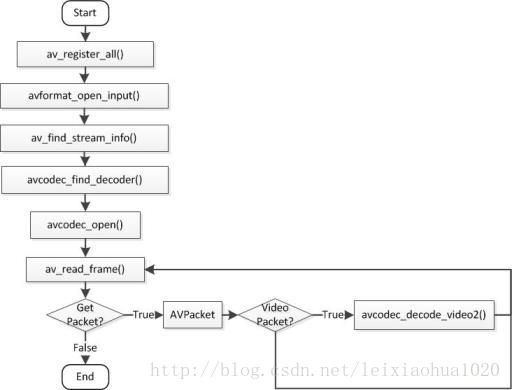

This is the standard FFmpeg decoding flow; see 雷神's articles for details.
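The row-by-row copy in the render loop is the one step of this flow that does not depend on FFmpeg or the NDK, so it can be looked at in isolation. Below is a minimal sketch, assuming a hypothetical helper named copy_rows (it is not part of the original source) whose strides are given in bytes:

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>

// Hypothetical helper, not from the original VideoPlayer.cpp.
// Copies `height` rows of `rowBytes` bytes each from `src` to `dst`.
// Both strides are in bytes and are assumed to be >= rowBytes, so the
// padding at the end of each destination row is left untouched.
void copy_rows(std::uint8_t *dst, std::size_t dstStride,
               const std::uint8_t *src, std::size_t srcStride,
               std::size_t rowBytes, int height) {
    for (int h = 0; h < height; h++) {
        std::memcpy(dst + h * dstStride, src + h * srcStride, rowBytes);
    }
}
```

In the player this would presumably be called with windowBuffer.stride * 4 as the destination stride (the window stride is reported in pixels), pFrameRGBA->linesize[0] as the source stride, and videoWidth * 4 as rowBytes. Copying only rowBytes per row, rather than a full source stride, avoids writing past the end of a destination row if the window stride ever turns out smaller than the frame stride.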

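There is another pitfall here. I am compiling with clang as a C++ compiler, while the FFmpeg .so libraries are all written in C, so the headers have to be wrapped like this:

    extern "C" {
    #include "libavcodec/avcodec.h"
    #include "libavformat/avformat.h"
    #include "libswscale/swscale.h"
    #include "libavutil/imgutils.h"
    }

Without the extern "C" {} wrapper the declarations are compiled as C++ rather than C, their names get mangled, and linking fails with undefined reference errors. The Java side that uses the library comes next.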
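As a brief aside before the Java code, the same linkage rule can be seen without FFmpeg. The sketch below assumes a hypothetical C function named c_add (purely illustrative) and uses the guard pattern that C headers commonly carry so that they can be included from both C and C++:

```cpp
// Hypothetical stand-in for a C library header; c_add is an illustrative name.
#ifdef __cplusplus
extern "C" {
#endif

int c_add(int a, int b);

#ifdef __cplusplus
}
#endif

// Definition with C linkage. In a real project this would live in a .c file
// or in a prebuilt .so, and the symbol would be exported unmangled as c_add.
extern "C" int c_add(int a, int b) {
    return a + b;
}
```

When a header does not carry this guard itself, wrapping the #include in extern "C" { } on the C++ side achieves the same thing.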

VideoPlayer.java

    package jonesx.videoplayer;

    /**
     * Created by Jonesx on 2016/3/12.
     */
    public class VideoPlayer {

        static {
            System.loadLibrary("VideoPlayer");
        }

        public static native int play(Object surface);
    }

MainActivity.java

A SurfaceView is used for playback here.

    package jonesx.videoplayer;

    import android.os.Bundle;
    import android.support.v7.app.AppCompatActivity;
    import android.view.SurfaceHolder;
    import android.view.SurfaceView;

    public class MainActivity extends AppCompatActivity implements SurfaceHolder.Callback {

        SurfaceHolder surfaceHolder;

        @Override
        protected void onCreate(Bundle savedInstanceState) {
            super.onCreate(savedInstanceState);
            setContentView(R.layout.activity_main);
            SurfaceView surfaceView = (SurfaceView) findViewById(R.id.surface_view);
            surfaceHolder = surfaceView.getHolder();
            surfaceHolder.addCallback(this);
        }

        @Override
        public void surfaceCreated(SurfaceHolder holder) {
            // Decode on a background thread so the UI thread is not blocked
            new Thread(new Runnable() {
                @Override
                public void run() {
                    VideoPlayer.play(surfaceHolder.getSurface());
                }
            }).start();
        }

        @Override
        public void surfaceChanged(SurfaceHolder holder, int format, int width, int height) {
        }

        @Override
        public void surfaceDestroyed(SurfaceHolder holder) {
        }
    }

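The layout XML is very simple.

That's a wrap; take a look at the result. It is very bare-bones, with no pause or other controls.

P.S. The source code is available at https://github.com/nothinglhw/cmakeDemo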