Kubernetes installation

192.168.1.101 k8s-node02

192.168.1.73 k8s-node01

192.168.1.23 k8s-master01
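The three lines above are the hostname mappings that every machine needs in /etc/hosts. Below is a minimal sketch that appends each mapping only if the hostname is not already present, so it is safe to rerun on all three servers. The function name `add_host_entries` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP hostname" pairs to a hosts file,
# skipping any hostname that is already present (idempotent).
add_host_entries() {
  local hosts_file="$1"; shift
  local entry ip name
  for entry in "$@"; do
    ip="${entry%% *}"      # text before the first space
    name="${entry##* }"    # text after the last space
    # -w: whole-word match, so k8s-node01 does not match k8s-node010
    if ! grep -qwF -- "$name" "$hosts_file" 2>/dev/null; then
      printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
  done
}

# Example (as root on every server):
#   add_host_entries /etc/hosts "192.168.1.23 k8s-master01" \
#     "192.168.1.73 k8s-node01" "192.168.1.101 k8s-node02"
```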

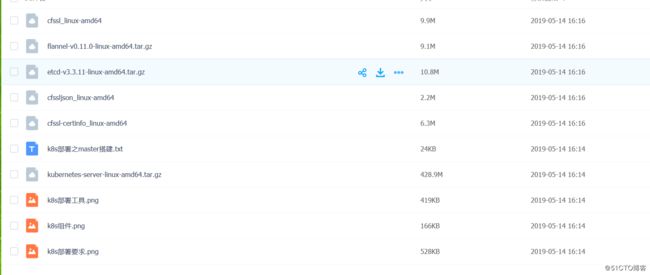

Download: https://pan.baidu.com/s/1e0aRFSykVHFTIp9OE4NgRA (extraction code: 22t3)

Environment initialization

Stop iptables (firewalld)

systemctl stop firewalld.service

systemctl disable firewalld.service

Disable SELinux

[root@k8s-node01 ~]# cat /etc/selinux/config

SELINUX=disabled

[root@k8s-node01 ~]# setenforce 0

Configure sysctl

[root@k8s-node01 ssl]# cat /etc/sysctl.conf

fs.file-max=1000000

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

vm.swappiness = 0

net.ipv4.tcp_max_tw_buckets = 6000

net.ipv4.tcp_sack = 1

net.ipv4.tcp_window_scaling = 1

net.ipv4.tcp_rmem = 4096 87380 4194304

net.ipv4.tcp_wmem = 4096 16384 4194304

net.ipv4.tcp_max_syn_backlog = 16384

net.core.netdev_max_backlog = 32768

net.core.somaxconn = 32768

net.core.wmem_default = 8388608

net.core.rmem_default = 8388608

net.core.rmem_max = 16777216

net.core.wmem_max = 16777216

net.ipv4.tcp_timestamps = 1

net.ipv4.tcp_fin_timeout = 20

net.ipv4.tcp_synack_retries = 2

net.ipv4.tcp_syn_retries = 2

net.ipv4.tcp_syncookies = 1

#net.ipv4.tcp_tw_len = 1

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_mem = 94500000 915000000 927000000

net.ipv4.tcp_max_orphans = 3276800

net.ipv4.ip_local_port_range = 1024 65000

net.nf_conntrack_max = 6553500

net.netfilter.nf_conntrack_max = 6553500

net.netfilter.nf_conntrack_tcp_timeout_close_wait = 60

net.netfilter.nf_conntrack_tcp_timeout_fin_wait = 120

net.netfilter.nf_conntrack_tcp_timeout_time_wait = 120

net.netfilter.nf_conntrack_tcp_timeout_established = 3600
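In a file this long it is easy to set the same key twice. `sysctl -p` applies the lines in order, so the last value silently wins. Below is a small sketch that reports keys assigned more than once in a sysctl-style file. The function name `find_duplicate_sysctl_keys` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every key that is assigned more than once
# in a sysctl-style file, ignoring blank lines and comments (# or ;).
find_duplicate_sysctl_keys() {
  awk -F'=' '
    /^[ \t]*$/    { next }              # skip blank lines
    /^[ \t]*[#;]/ { next }              # skip comments
    {
      key = $1
      gsub(/[ \t]/, "", key)            # trim whitespace around the key
      if (++seen[key] == 2) print key   # report each repeated key once
    }
  ' "$1"
}

# Example: find_duplicate_sysctl_keys /etc/sysctl.conf
```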

[root@k8s-node01 ssl]# modprobe br_netfilter

[root@k8s-node01 ssl]# sysctl -p

Load the IPVS kernel modules
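The ipvs.modules script in this step is incomplete in this post: the heredoc body after `<` is missing, so its exact contents are unknown. As an assumption, a file like this usually just loads the IPVS scheduler modules plus connection tracking. The sketch below writes such a script to a path you choose without loading anything. The function name `write_ipvs_modules` is hypothetical. The module list is also assumed: it suits the CentOS 7 3.10 kernel, where conntrack is `nf_conntrack_ipv4`; newer kernels use `nf_conntrack`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write an ipvs.modules-style boot script to $1.
# The module list is an assumption (common for CentOS 7 / kernel 3.10).
write_ipvs_modules() {
  local target="$1"
  cat > "$target" <<'EOF'
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF
  chmod 755 "$target"
}

# Example (as root, on every node):
#   write_ipvs_modules /etc/sysconfig/modules/ipvs.modules
#   bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack
```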

Run the following script on all Kubernetes nodes (node01 and node02):

cat > /etc/sysconfig/modules/ipvs.modules <

Install Docker

[root@k8s-node01 ~]# wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@k8s-node01 ~]# mv docker-ce.repo /etc/yum.repos.d/

[root@k8s-node01 yum.repos.d]# yum install -y docker-ce

[root@k8s-node01 ~]# systemctl daemon-reload

[root@k8s-node01 ~]# systemctl start docker

1: Configure TLS certificates

Component: certificates required

etcd: ca.pem server.pem server-key.pem

kube-apiserver: ca.pem server.pem server-key.pem

kubelet: ca.pem ca-key.pem

kube-proxy: ca.pem kube-proxy.pem kube-proxy-key.pem

kubectl: ca.pem admin.pem admin-key.pem

Install the certificate generation tool (cfssl)

[root@k8s-master01 ~]# wget http://pkg.cfssl.org/R1.2/cfssl_linux-amd64

[root@k8s-master01 ~]# wget http://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

[root@k8s-master01 ~]# wget http://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

[root@k8s-master01 ~]# chmod +x cfssl*

[root@k8s-master01 ~]# mv cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo

[root@k8s-master01 ~]# mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

[root@k8s-master01 ~]# mv cfssl_linux-amd64 /usr/local/bin/cfssl

[root@k8s-master01 ~]# mkdir /root/ssl

[root@k8s-master01 ~]# cd /root/ssl

Generate the CA certificate

[root@k8s-master01 ssl]# cat ca-config.json

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

[root@k8s-master01 ssl]# cat ca-csr.json

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Zhengzhou",

"ST": "Zhengzhou",

"O": "k8s",

"OU": "System"

}

]

}

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

Generate the server certificate

[root@k8s-master01 ssl]# cat server-csr.json

{

"CN": "kubernetes",

"hosts": [

"127.0.0.1",

"192.168.1.23",

"192.168.1.73",

"192.168.1.101",

"kubernetes",

"k8s-node01",

"k8s-master01",

"k8s-node02",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Zhengzhou",

"ST": "Zhengzhou",

"O": "k8s",

"OU": "System"

}

]

}
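Every address a client uses to reach etcd or kube-apiserver must appear in the hosts list above. Otherwise TLS verification fails with an x509 error, and adding a node later means regenerating server.pem. Below is a rough sketch for catching omissions before running cfssl. It uses plain `grep` rather than a JSON parser, so it only checks that each quoted string appears somewhere in the file. The function name `check_csr_hosts` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report which of the given names/IPs are missing
# from a cfssl CSR file. It greps for the quoted string, so this is a
# rough check, not a JSON-aware one. Returns non-zero if any are missing.
check_csr_hosts() {
  local csr="$1"; shift
  local h missing=0
  for h in "$@"; do
    if ! grep -qF -- "\"$h\"" "$csr"; then
      echo "missing: $h"
      missing=1
    fi
  done
  return "$missing"
}

# Example: check_csr_hosts server-csr.json 192.168.1.23 192.168.1.73 192.168.1.101
```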

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

Generate the admin certificate

[root@k8s-master01 ssl]# cat admin-csr.json

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Zhengzhou",

"ST": "Zhengzhou",

"O": "system:masters",

"OU": "System"

}

]

}

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

Generate the kube-proxy certificate

[root@k8s-master01 ssl]# cat kube-proxy-csr.json

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Zhengzhou",

"ST": "Zhengzhou",

"O": "k8s",

"OU": "System"

}

]

}

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy
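The admin and kube-proxy CSRs above differ only in CN (the user) and O (the group). Below is a minimal sketch that prints such a CSR from those two values, reusing the same names block. The function name `make_client_csr` is hypothetical. Kubernetes matches group names case-sensitively. The admin certificate's O therefore has to be exactly `system:masters` to pick up the built-in cluster-admin binding.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a client-certificate CSR in the same shape as
# admin-csr.json / kube-proxy-csr.json. $1 = CN (user), $2 = O (group).
make_client_csr() {
  local cn="$1" org="$2"
  cat <<EOF
{
  "CN": "${cn}",
  "hosts": [],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [
    { "C": "CN", "L": "Zhengzhou", "ST": "Zhengzhou", "O": "${org}", "OU": "System" }
  ]
}
EOF
}

# Example:
#   make_client_csr admin system:masters > admin-csr.json
#   cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json \
#     -profile=kubernetes admin-csr.json | cfssljson -bare admin
```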

Note: the generated certificates must be copied to every server.

[root@k8s-master01 ~]# scp -r /root/ssl k8s-node01:/root/

[root@k8s-master01 ~]# scp -r /root/ssl k8s-node02:/root/

Deploy the etcd storage cluster

[root@k8s-master01 ~]#wget https://github.com/etcd-io/etcd/releases/download/v3.3.11/etcd-v3.3.11-linux-amd64.tar.gz

[root@k8s-master01 ~]#tar xf etcd-v3.3.11-linux-amd64.tar.gz

[root@k8s-master01 ~]#mkdir /k8s/etcd/{bin,cfg} -p

[root@k8s-master01 ~]#mv etcd-v3.3.11-linux-amd64/etcd* /k8s/etcd/bin

[root@k8s-master01 ~]#vim /k8s/etcd/cfg/etcd

#[root@k8s-master01 etcd-v3.3.11-linux-amd64]# cat /k8s/etcd/cfg/etcd

#[Member]

ETCD_NAME="etcd01"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.1.23:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.1.23:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.1.23:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.1.23:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://192.168.1.23:2380,etcd02=https://192.168.1.73:2380,etcd03=https://192.168.1.101:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-clusters"

ETCD_INITIAL_CLUSTER_STATE="new"

[root@k8s-master01 etcd-v3.3.11-linux-amd64]# cat /usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=/k8s/etcd/cfg/etcd

ExecStart=/k8s/etcd/bin/etcd \
--name=${ETCD_NAME} \
--data-dir=${ETCD_DATA_DIR} \
--listen-peer-urls=${ETCD_LISTEN_PEER_URLS} \
--listen-client-urls=${ETCD_LISTEN_CLIENT_URLS},http://127.0.0.1:2379 \
--advertise-client-urls=${ETCD_ADVERTISE_CLIENT_URLS} \
--initial-advertise-peer-urls=${ETCD_INITIAL_ADVERTISE_PEER_URLS} \
--initial-cluster=${ETCD_INITIAL_CLUSTER} \
--initial-cluster-token=${ETCD_INITIAL_CLUSTER_TOKEN} \
--initial-cluster-state=new \
--cert-file=/root/ssl/server.pem \
--key-file=/root/ssl/server-key.pem \
--peer-cert-file=/root/ssl/server.pem \
--peer-key-file=/root/ssl/server-key.pem \
--trusted-ca-file=/root/ssl/ca.pem \
--peer-trusted-ca-file=/root/ssl/ca.pem

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

[root@k8s-master01 etcd-v3.3.11-linux-amd64]# systemctl daemon-reload

[root@k8s-master01 etcd-v3.3.11-linux-amd64]# systemctl restart etcd

Copy to the worker nodes

[root@k8s-master01 ~]# scp /usr/lib/systemd/system/etcd.service k8s-node01:/usr/lib/systemd/system/etcd.service

[root@k8s-master01 ~]# scp /usr/lib/systemd/system/etcd.service k8s-node02:/usr/lib/systemd/system/etcd.service

[root@k8s-master01 ~]# scp -r /k8s/etcd k8s-node01:/k8s/

[root@k8s-master01 ~]# scp -r /k8s/etcd k8s-node02:/k8s/

Note: edit the following on each node:

[root@k8s-master01 k8s]# cat /k8s/etcd/cfg/etcd

#[Member]

ETCD_NAME="etcd01" # change to this server's name from ETCD_INITIAL_CLUSTER (etcd0N)

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.1.23:2380" # change to this server's IP

ETCD_LISTEN_CLIENT_URLS="https://192.168.1.23:2379" # change to this server's IP

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.1.23:2380" # change to this server's IP

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.1.23:2379" # change to this server's IP

ETCD_INITIAL_CLUSTER="etcd01=https://192.168.1.23:2380,etcd02=https://192.168.1.73:2380,etcd03=https://192.168.1.101:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-clusters"

ETCD_INITIAL_CLUSTER_STATE="new"
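Only ETCD_NAME and the four URL lines change from node to node; ETCD_INITIAL_CLUSTER stays identical everywhere. Below is a sketch that prints the file for one member. For example, run `render_etcd_config etcd02 192.168.1.73 > /k8s/etcd/cfg/etcd` on k8s-node01. The function name `render_etcd_config` is hypothetical; the values come from this setup.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print /k8s/etcd/cfg/etcd for one cluster member.
# $1 = member name (must match an entry in ETCD_INITIAL_CLUSTER), $2 = this node's IP.
render_etcd_config() {
  local name="$1" ip="$2"
  cat <<EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="etcd01=https://192.168.1.23:2380,etcd02=https://192.168.1.73:2380,etcd03=https://192.168.1.101:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-clusters"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}
```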

Run on each of the three servers: systemctl daemon-reload && systemctl enable etcd && systemctl restart etcd && ps -ef | grep etcd

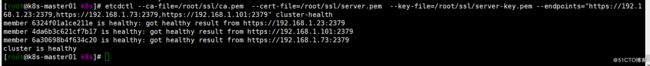

Check the cluster health

[root@k8s-master01 ~]# etcdctl --ca-file=/root/ssl/ca.pem --cert-file=/root/ssl/server.pem --key-file=/root/ssl/server-key.pem --endpoints="https://192.168.1.23:2379,https://192.168.1.73:2379,https://192.168.1.101:2379" cluster-health

Deploy the flannel network

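Flannel is an overlay network: it encapsulates the original packet inside another network's packets for routing, forwarding, and communication. It currently supports UDP, VXLAN, AWS VPC, GCE routes, and other forwarding backends.

Other mainstream approaches to multi-host container networking include tunneling (Weave, Open vSwitch) and routing (Calico).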
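Encapsulation explains why the flannel.1 interface shown later reports mtu 1450. With the VXLAN backend, each Pod packet is wrapped in four outer headers: Ethernet (14 bytes), IPv4 (20), UDP (8), and VXLAN (8). That totals 50 bytes, which flannel subtracts from the host interface MTU. The worked example below uses a hypothetical function name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: inner MTU left for Pod traffic over VXLAN.
# Overhead = outer Ethernet 14 + outer IPv4 20 + UDP 8 + VXLAN 8 = 50 bytes.
vxlan_inner_mtu() {
  local link_mtu="$1"
  echo $(( link_mtu - (14 + 20 + 8 + 8) ))
}

vxlan_inner_mtu 1500   # prints 1450, matching flannel.1 and --mtu=1450 in subnet.env
```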

[root@k8s-master01 ~]# wget https://github.com/coreos/flannel/releases/download/v0.11.0/flannel-v0.11.0-linux-amd64.tar.gz

[root@k8s-master01 ~]# tar xf flannel-v0.11.0-linux-amd64.tar.gz

[root@k8s-master01 ~]# mkdir /k8s/flanneld/{bin,cfg}

[root@k8s-master01 ~]# cd flannel-v0.11.0-linux-amd64

[root@k8s-master01 ~]# mv flanneld mk-docker-opts.sh /k8s/flanneld/bin

[root@k8s-master01 ~]# cat /etc/profile

export PATH=/k8s/etcd/bin:/k8s/flanneld/bin:$PATH

Write the cluster Pod network range into etcd

[root@k8s-master01 ~]# etcdctl --ca-file=/root/ssl/ca.pem --cert-file=/root/ssl/server.pem --key-file=/root/ssl/server-key.pem --endpoints="https://192.168.1.23:2379,https://192.168.1.73:2379,https://192.168.1.101:2379" set /coreos.com/network/config '{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}'

Create the flanneld configuration file and systemd unit

[root@k8s-master01 flanneld]# vim /k8s/flanneld/cfg/flanneld

FLANNEL_OPTIONS="--etcd-endpoints=https://192.168.1.23:2379,https://192.168.1.73:2379,https://192.168.1.101:2379 -etcd-cafile=/root/ssl/ca.pem -etcd-certfile=/root/ssl/server.pem -etcd-keyfile=/root/ssl/server-key.pem"

[root@k8s-master01 flanneld]# vim /usr/lib/systemd/system/flanneld.service

[Unit]

Description=Flanneld overlay address etcd agent

After=network-online.target network.target

Before=docker.service

[Service]

Type=notify

EnvironmentFile=/k8s/flanneld/cfg/flanneld

ExecStart=/k8s/flanneld/bin/flanneld --ip-masq $FLANNEL_OPTIONS

ExecStartPost=/k8s/flanneld/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env

Restart=on-failure

[Install]

WantedBy=multi-user.target

[root@k8s-master01 flanneld]#systemctl daemon-reload

[root@k8s-master01 flanneld]#systemctl enable flanneld

[root@k8s-master01 flanneld]#systemctl start flanneld

Verify the startup: run ifconfig and look for the flannel interface

flannel.1: flags=4163 mtu 1450

inet 172.17.39.0 netmask 255.255.255.255 broadcast 0.0.0.0

inet6 fe80::ec16:16ff:fe4b:cd1 prefixlen 64 scopeid 0x20

ether ee:16:16:4b:0c:d1 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 12 overruns 0 carrier 0 collisions 0

Check the subnet assigned to this host

[root@k8s-master01 flanneld]# vim /run/flannel/subnet.env

DOCKER_OPT_BIP="--bip=172.17.39.1/24"

DOCKER_OPT_IPMASQ="--ip-masq=false"

DOCKER_OPT_MTU="--mtu=1450"

DOCKER_NETWORK_OPTIONS=" --bip=172.17.39.1/24 --ip-masq=false --mtu=1450"

Configure Docker to start on the flannel subnet

[root@k8s-master01 flanneld]# mv /usr/lib/systemd/system/docker.service /usr/lib/systemd/system/docker.service_back

[root@k8s-master01 flanneld]# cat /usr/lib/systemd/system/docker.service

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=/run/flannel/subnet.env

ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS

ExecReload=/bin/kill -s HUP $MAINPID

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TimeoutStartSec=0

Delegate=yes

KillMode=process

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target

[root@k8s-master01 flanneld]# systemctl daemon-reload

[root@k8s-master01 flanneld]# systemctl restart docker

Then run ifconfig to check that docker0 got its IP address from the flannel subnet

[root@k8s-master01 flanneld]# ifconfig

docker0: flags=4099 mtu 1500

inet 172.17.39.1 netmask 255.255.255.0 broadcast 172.17.39.255

ether 02:42:f0:f7:a0:74 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

flannel.1: flags=4163 mtu 1450

inet 172.17.39.0 netmask 255.255.255.255 broadcast 0.0.0.0

inet6 fe80::ec16:16ff:fe4b:cd1 prefixlen 64 scopeid 0x20

ether ee:16:16:4b:0c:d1 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 13 overruns 0 carrier 0 collisions 0

Copy the configuration to the worker nodes

[root@k8s-master01 ~]# cd /k8s/

[root@k8s-master01 k8s]# scp -r flanneld k8s-node01:/k8s/

[root@k8s-master01 k8s]# scp -r flanneld k8s-node02:/k8s/

[root@k8s-master01 k8s]# scp /usr/lib/systemd/system/docker.service k8s-node01:/usr/lib/systemd/system/docker.service

[root@k8s-master01 k8s]# scp /usr/lib/systemd/system/docker.service k8s-node02:/usr/lib/systemd/system/docker.service

[root@k8s-master01 k8s]# scp /usr/lib/systemd/system/flanneld.service k8s-node01:/usr/lib/systemd/system/flanneld.service

[root@k8s-master01 k8s]# scp /usr/lib/systemd/system/flanneld.service k8s-node02:/usr/lib/systemd/system/flanneld.service

Run on node01:

[root@k8s-node01 cfg]# systemctl daemon-reload

[root@k8s-node01 cfg]# systemctl enable docker

[root@k8s-node01 cfg]# systemctl enable flanneld

Created symlink from /etc/systemd/system/multi-user.target.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.

[root@k8s-node01 cfg]# systemctl start flanneld

[root@k8s-node01 cfg]# systemctl start docker

Run on node02:

[root@k8s-node02 flanneld]# systemctl daemon-reload

[root@k8s-node02 flanneld]# systemctl enable docker

[root@k8s-node02 flanneld]# systemctl enable flanneld

[root@k8s-node02 flanneld]# systemctl restart flanneld

[root@k8s-node02 flanneld]# systemctl restart docker

As a result, flanneld leases a different subnet on each server, and Docker gives docker0 the .1 address of that subnet.

# List the subnets registered in etcd

[root@k8s-master01 k8s]# etcdctl --ca-file=/root/ssl/ca.pem --cert-file=/root/ssl/server.pem --key-file=/root/ssl/server-key.pem --endpoints="https://192.168.1.23:2379,https://192.168.1.73:2379,https://192.168.1.101:2379" ls /coreos.com/network/subnets

/coreos.com/network/subnets/172.17.89.0-24

/coreos.com/network/subnets/172.17.44.0-24

/coreos.com/network/subnets/172.17.39.0-24

[root@k8s-master01 k8s]# etcdctl --ca-file=/root/ssl/ca.pem --cert-file=/root/ssl/server.pem --key-file=/root/ssl/server-key.pem --endpoints="https://192.168.1.23:2379,https://192.168.1.73:2379,https://192.168.1.101:2379" get /coreos.com/network/subnets/172.17.39.0-24

{"PublicIP":"192.168.1.23","BackendType":"vxlan","BackendData":{"VtepMAC":"ee:16:16:4b:0c:d1"}}

PublicIP: the node's IP address

BackendType: the backend type

VtepMAC: the MAC address of the virtual VTEP interface (flannel.1)
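Each key under /coreos.com/network/subnets names one node's lease, with the prefix length after the dash. docker0 on that node takes the first host address of the lease. For example, the lease 172.17.39.0-24 belongs to 192.168.1.23, and the ifconfig output earlier showed docker0 there at 172.17.39.1/24. Below is a sketch of that mapping. The function name is hypothetical, and the sketch assumes the lease's network address ends in .0, which holds for the /24 leases here.

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn a flannel etcd subnet key such as
# /coreos.com/network/subnets/172.17.39.0-24 into the docker0 --bip value.
# Assumes the lease's network address ends in .0 (true for /24 leases).
subnet_key_to_bip() {
  local lease="${1##*/}"          # 172.17.39.0-24
  local net="${lease%-*}"         # 172.17.39.0
  local prefix="${lease##*-}"     # 24
  echo "${net%.*}.1/${prefix}"    # 172.17.39.1/24
}

subnet_key_to_bip /coreos.com/network/subnets/172.17.39.0-24   # prints 172.17.39.1/24
```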

Check the routing table.

### Create the kubeconfig files for the nodes on the master

Create the TLS Bootstrapping token

Generate the token.csv file

head -c 16 /dev/urandom |od -An -t x |tr -d ' ' > /k8s/kubenerets/token.csv
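The command above stores only the random 32-hex-digit token. kube-apiserver's --token-auth-file, however, expects a full CSV line of token, user name, user UID, and group, as in the file contents shown next. Below is a sketch that produces the complete line in one step. The function name `make_bootstrap_token_line` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a token.csv line for kubelet TLS bootstrapping.
# Format: token,user,uid,"group" (same shape as the file shown below).
make_bootstrap_token_line() {
  local token
  token=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' \n')
  printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$token"
}

# Example: make_bootstrap_token_line > /k8s/kubenerets/token.csv
# The first field is the value to use as BOOTSTRAP_TOKEN later:
#   BOOTSTRAP_TOKEN=$(cut -d, -f1 /k8s/kubenerets/token.csv)
```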

[root@k8s-master01 kubenerets]# cat token.csv

454b513c7148ab3a0d2579e8f0c4e884,kubelet-bootstrap,10001,"system:kubelet-bootstrap"

Set the apiserver address

[root@k8s-master01 kubenerets]# export KUBE_APISERVER="https://192.168.1.23:6443"

Create the kubelet bootstrapping kubeconfig

BOOTSTRAP_TOKEN=454b513c7148ab3a0d2579e8f0c4e884

KUBE_APISERVER="https://192.168.1.23:6443"

Set the cluster parameters

kubectl config set-cluster kubernetes \
--certificate-authority=/root/ssl/ca.pem \
--embed-certs=true \
--server=${KUBE_APISERVER} \
--kubeconfig=bootstrap.kubeconfig

Set the client authentication parameters

kubectl config set-credentials kubelet-bootstrap \
--token=${BOOTSTRAP_TOKEN} \
--kubeconfig=bootstrap.kubeconfig

Set the context parameters

kubectl config set-context default \
--cluster=kubernetes \
--user=kubelet-bootstrap \
--kubeconfig=bootstrap.kubeconfig

Set the default context

kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

Create the kube-proxy kubeconfig file

kubectl config set-cluster kubernetes \
--certificate-authority=/root/ssl/ca.pem \
--embed-certs=true \
--server=${KUBE_APISERVER} \
--kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \
--client-certificate=/root/ssl/kube-proxy.pem \
--client-key=/root/ssl/kube-proxy-key.pem \
--embed-certs=true \
--kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \
--cluster=kubernetes \
--user=kube-proxy \
--kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

Deploy kube-apiserver, kube-scheduler, and kube-controller-manager

Create the kube-apiserver configuration file

[root@k8s-master01 cfg]# cat /k8s/kubenerets/cfg/kube-apisever

KUBE_APISERVER_OPTS="--logtostderr=true \
--v=4 \
--etcd-servers=https://192.168.1.23:2379,https://192.168.1.73:2379,https://192.168.1.101:2379 \
--insecure-bind-address=0.0.0.0 \
--insecure-port=8080 \
--bind-address=192.168.1.23 \
--secure-port=6443 \
--advertise-address=192.168.1.23 \
--allow-privileged=true \
--service-cluster-ip-range=10.10.10.0/24 \
--enable-admission-plugins=NamespaceLifecycle,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota,NodeRestriction \
--authorization-mode=RBAC,Node \
--enable-bootstrap-token-auth \
--token-auth-file=/k8s/kubenerets/token.csv \
--service-node-port-range=30000-50000 \
--tls-cert-file=/root/ssl/server.pem \
--kubelet-https=true \
--tls-private-key-file=/root/ssl/server-key.pem \
--client-ca-file=/root/ssl/ca.pem \
--service-account-key-file=/root/ssl/ca-key.pem \
--etcd-cafile=/root/ssl/ca.pem \
--etcd-certfile=/root/ssl/server.pem \
--etcd-keyfile=/root/ssl/server-key.pem"

kube-apiserver systemd unit

[root@k8s-master01 cfg]# cat /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/k8s/kubenerets/cfg/kube-apisever

ExecStart=/k8s/kubenerets/bin/kube-apiserver $KUBE_APISERVER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

Deploy kube-scheduler

[root@k8s-master01 cfg]# cat kube-scheduler

KUBE_SCHEDULER_OPTS="--logtostderr=true --v=4 --master=127.0.0.1:8080 --leader-elect"

kube-scheduler systemd unit

[root@k8s-master01 cfg]# cat /usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/k8s/kubenerets/cfg/kube-scheduler

ExecStart=/k8s/kubenerets/bin/kube-scheduler $KUBE_SCHEDULER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

Deploy kube-controller-manager

[root@k8s-master01 cfg]# cat kube-controller-manager

KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=true \
--v=4 \
--master=127.0.0.1:8080 \
--leader-elect=true \
--address=127.0.0.1 \
--service-cluster-ip-range=10.10.10.0/24 \
--cluster-name=kubernetes \
--cluster-signing-cert-file=/root/ssl/ca.pem \
--cluster-signing-key-file=/root/ssl/ca-key.pem \
--root-ca-file=/root/ssl/ca.pem \
--service-account-private-key-file=/root/ssl/ca-key.pem"

kube-controller-manager systemd unit

[root@k8s-master01 cfg]# cat /usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/k8s/kubenerets/cfg/kube-controller-manager

ExecStart=/k8s/kubenerets/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

systemctl daemon-reload

systemctl enable kube-apiserver

systemctl enable kube-controller-manager

systemctl enable kube-scheduler

systemctl restart kube-apiserver

systemctl restart kube-controller-manager

systemctl restart kube-scheduler

# Check the status of the master components

[root@k8s-master01 cfg]# kubectl get cs,nodes
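`kubectl get cs` prints one row per component (scheduler, controller-manager, and each etcd member). Its STATUS column should read Healthy. Below is a small sketch that reads the output of `kubectl get cs` alone (not the combined `cs,nodes` listing) from stdin and prints any row that is not Healthy. The function name `unhealthy_components` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get cs` output on stdin and print
# every component whose STATUS column is not "Healthy".
# Returns non-zero if any component is unhealthy.
unhealthy_components() {
  awk 'NR > 1 && NF > 0 && $2 != "Healthy" { print; bad = 1 } END { exit bad }'
}

# Example: kubectl get cs | unhealthy_components && echo "all components healthy"
```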

Copy files to the worker nodes

Copy the certificate files to the nodes

[root@k8s-master01 cfg]# scp -r /root/ssl k8s-node01:/root/

[root@k8s-master01 cfg]# scp -r /root/ssl k8s-node02:/root/

Copy bootstrap.kubeconfig and kube-proxy.kubeconfig

[root@k8s-master01 kubenerets]# scp *.kubeconfig k8s-node01:/k8s/kubenerets/

bootstrap.kubeconfig 100% 2182 4.1MB/s 00:00

kube-proxy.kubeconfig 100% 6300 12.2MB/s 00:00

[root@k8s-master01 kubenerets]# scp *.kubeconfig k8s-node02:/k8s/kubenerets/

bootstrap.kubeconfig 100% 2182 4.1MB/s 00:00

kube-proxy.kubeconfig 100% 6300 12.2MB/s 00:00

Here I simply copy all the binaries to the test nodes:

[root@k8s-master01 bin]# scp ./* k8s-node01:/k8s/kubenerets/bin/ && scp ./* k8s-node02:/k8s/kubenerets/bin/

apiextensions-apiserver 100% 41MB 70.0MB/s 00:00

cloud-controller-manager 100% 96MB 95.7MB/s 00:01

hyperkube 100% 201MB 67.1MB/s 00:03

kubeadm 100% 38MB 55.9MB/s 00:00

kube-apiserver 100% 160MB 79.9MB/s 00:02

kube-controller-manager 100% 110MB 69.4MB/s 00:01

kubectl 100% 41MB 80.6MB/s 00:00

kubelet 100% 122MB 122.0MB/s 00:01

kube-proxy 100% 35MB 66.0MB/s 00:00

kube-scheduler 100% 37MB 78.5MB/s 00:00

mounter 100% 1610KB 17.9MB/s 00:00

Deploy the node components

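Kubernetes worker nodes run the following components:

docker (already deployed above)

kubelet

kube-proxy

Deploy the kubelet component

The kubelet runs on every worker node. It receives requests from kube-apiserver, manages Pod containers, and executes interactive commands such as exec, run, and logs.

On startup the kubelet automatically registers the node with kube-apiserver, and its built-in cAdvisor collects and monitors the node's resource usage.

For security, this document only enables the secure port that accepts HTTPS requests. Requests are authenticated and authorized, and unauthorized access (e.g. from apiserver or heapster) is rejected.

Create the kubelet configuration file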

[root@k8s-node01 cfg]# cat /k8s/kubenerets/cfg/kubelet

KUBELET_OPTS="--logtostderr=true \
--v=4 \
--address=192.168.1.73 \
--hostname-override=192.168.1.73 \
--kubeconfig=/k8s/kubenerets/cfg/kubelet.kubeconfig \
--experimental-bootstrap-kubeconfig=/k8s/kubenerets/bootstrap.kubeconfig \
--cert-dir=/root/ssl \
--allow-privileged=true \
--cluster-dns=10.10.10.2 \
--cluster-domain=cluster.local \
--fail-swap-on=false \
--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"

Note: do not create kubelet.kubeconfig by hand. The kubelet writes it itself once its bootstrap CSR has been approved.
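The --cluster-dns address (10.10.10.2) has to fall inside the --service-cluster-ip-range (10.10.10.0/24) given to kube-apiserver and kube-controller-manager. Cluster DNS is later exposed as a Service with that ClusterIP, and the apiserver only assigns ClusterIPs from that range. Below is a pure-bash sketch that checks an IPv4 address against a CIDR. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  local a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Hypothetical helper: succeed if IPv4 address $1 lies inside CIDR $2.
ip_in_cidr() {
  local ip="$1" net="${2%/*}" bits="${2#*/}"
  local mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}

ip_in_cidr 10.10.10.2 10.10.10.0/24 && echo "cluster-dns is inside the service range"
```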

# kubelet systemd unit

[root@k8s-node01 cfg]# cat /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

After=docker.service

Requires=docker.service

[Service]

EnvironmentFile=/k8s/kubenerets/cfg/kubelet

ExecStart=/k8s/kubenerets/bin/kubelet $KUBELET_OPTS

Restart=on-failure

KillMode=process

[Install]

WantedBy=multi-user.target

Deploy kube-proxy

kube-proxy runs on every node. It watches the apiserver for changes to Services and Endpoints and creates routing rules to load-balance Service traffic.

Create the kube-proxy configuration file

[root@k8s-node01 cfg]# vim /k8s/kubenerets/cfg/kube-proxy

KUBE_PROXY_OPTS="--logtostderr=true \
--v=4 \
--hostname-override=192.168.1.73 \
--kubeconfig=/k8s/kubenerets/kube-proxy.kubeconfig"

bindAddress: the listen address;

clientConnection.kubeconfig: the kubeconfig file used to connect to the apiserver;

clusterCIDR: kube-proxy uses --cluster-cidr to tell in-cluster traffic from external traffic. kube-proxy SNATs requests to Service IPs only when --cluster-cidr or --masquerade-all is specified;

hostnameOverride: must match the kubelet's value; otherwise kube-proxy cannot find this Node after starting and creates no ipvs rules;

mode: use ipvs mode;

Create the kube-proxy systemd unit file

[root@k8s-node01 cfg]# cat /usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Proxy

After=network.target

[Service]

EnvironmentFile=-/k8s/kubenerets/cfg/kube-proxy

ExecStart=/k8s/kubenerets/bin/kube-proxy $KUBE_PROXY_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target
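The field list above (bindAddress, clientConnection.kubeconfig, clusterCIDR, hostnameOverride, mode) refers to the KubeProxyConfiguration file format, not to the command-line flags used in KUBE_PROXY_OPTS. Those flags leave kube-proxy in its default iptables mode. The sketch below prints such a file and rests on several assumptions:

- the `kubeproxy.config.k8s.io/v1alpha1` API version accepted by kube-proxy 1.14;
- the Pod network 172.17.0.0/16 written to etcd earlier;
- the IPVS kernel modules being loaded.

kube-proxy would then be started with `--config` pointing at the file instead of the individual flags. The function name and output file name are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a KubeProxyConfiguration for one node.
# $1 = node IP, used for bindAddress and hostnameOverride (the latter must
# match the kubelet's --hostname-override). Paths follow this setup.
render_kube_proxy_config() {
  local ip="$1"
  cat <<EOF
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
bindAddress: ${ip}
clientConnection:
  kubeconfig: /k8s/kubenerets/kube-proxy.kubeconfig
clusterCIDR: 172.17.0.0/16
hostnameOverride: ${ip}
mode: "ipvs"
EOF
}

# Example (hypothetical file name):
#   render_kube_proxy_config 192.168.1.73 > /k8s/kubenerets/cfg/kube-proxy.yaml
#   KUBE_PROXY_OPTS="--config=/k8s/kubenerets/cfg/kube-proxy.yaml"
```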

systemctl daemon-reload

systemctl enable kubelet

systemctl start kubelet

systemctl enable kube-proxy

systemctl start kube-proxy

On the master, bind the bootstrap user to the node-bootstrapper role

kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

List the CSRs on the master

[root@k8s-master01 cfg]# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-YCL1SJyx3q0tSDCQuFLe4DmMdxUZgLA3-2EmDCOKiD4 19m kubelet-bootstrap Pending

Approve the node's CSR on the master

kubectl certificate approve node-csr-YCL1SJyx3q0tSDCQuFLe4DmMdxUZgLA3-2EmDCOKiD4

List the CSRs again: CONDITION has changed to Approved,Issued

Check on the master that the node has joined

[root@k8s-master01 cfg]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

192.168.1.73 Ready 48s v1.14.1

At this point node01 should have generated its kubelet certificates automatically:

[root@k8s-node01 cfg]# ls /root/ssl/kubelet*

/root/ssl/kubelet-client-2019-05-14-11-29-40.pem /root/ssl/kubelet-client-current.pem /root/ssl/kubelet.crt /root/ssl/kubelet.key

Other worker nodes join the cluster the same way

[root@k8s-node01 kubenerets]# scp /usr/lib/systemd/system/kube* k8s-node02:/usr/lib/systemd/system/

[root@k8s-node01 cfg]# cd /k8s/kubenerets/cfg

[root@k8s-node01 cfg]# scp kubelet kube-proxy k8s-node02:/k8s/kubenerets/cfg/

Modify kubelet and kube-proxy on node02

[root@k8s-node02 cfg]# cat kubelet

KUBELET_OPTS="--logtostderr=true \
--v=4 \
--address=192.168.1.101 \
--hostname-override=192.168.1.101 \
--kubeconfig=/k8s/kubenerets/cfg/kubelet.kubeconfig \
--experimental-bootstrap-kubeconfig=/k8s/kubenerets/bootstrap.kubeconfig \
--cert-dir=/root/ssl \
--allow-privileged=true \
--cluster-dns=10.10.10.2 \
--cluster-domain=cluster.local \
--fail-swap-on=false \
--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"
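node02's kubelet file above, and its kube-proxy file, are node01's copies with 192.168.1.73 replaced by 192.168.1.101. Below is a sketch that performs that substitution in place on a copied file. The function name `retarget_ip` is hypothetical, and it relies on GNU `sed -i` as shipped with CentOS.

```shell
#!/usr/bin/env bash
# Hypothetical helper: replace every occurrence of one IPv4 address with
# another in a config file, in place. Dots in the old address are escaped
# so they match literally instead of matching any character.
retarget_ip() {
  local file="$1" old="$2" new="$3"
  local old_re
  old_re=$(printf '%s' "$old" | sed 's/\./\\./g')
  sed -i "s/${old_re}/${new}/g" "$file"
}

# Example, on k8s-node02 after copying the files from node01:
#   retarget_ip /k8s/kubenerets/cfg/kubelet    192.168.1.73 192.168.1.101
#   retarget_ip /k8s/kubenerets/cfg/kube-proxy 192.168.1.73 192.168.1.101
```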

[root@k8s-node02 cfg]# cat kube-proxy

KUBE_PROXY_OPTS="--logtostderr=true \
--v=4 \
--hostname-override=192.168.1.101 \
--kubeconfig=/k8s/kubenerets/kube-proxy.kubeconfig"

Copy the systemd units

scp /usr/lib/systemd/system/kube* k8s-node02:/usr/lib/systemd/system/

Start the services

systemctl daemon-reload

systemctl enable kubelet

systemctl start kubelet

systemctl enable kube-proxy

systemctl start kube-proxy

Approve the CSR on the master

[root@k8s-master01 cfg]# kubectl get csr

[root@k8s-master01 cfg]# kubectl certificate approve node-csr-gHgQ5AYjpn6nFUMVEEYvIfyNqUK2ctmpA14YMecQtHY
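With several nodes joining, approving CSRs one by one gets tedious. Below is a sketch that reads `kubectl get csr` output on stdin and prints the names whose last column (CONDITION) is Pending, ready to feed to `kubectl certificate approve`. The function name `pending_csrs` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the names of CSRs whose CONDITION is Pending,
# reading `kubectl get csr` output on stdin (CONDITION is the last column).
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Example, on the master:
#   kubectl get csr | pending_csrs | xargs -r kubectl certificate approve
```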