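Put simply, this comes down to two programs: one builds the index (writing) and the other searches it (reading), so some knowledge of Java I/O is involved.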
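To make the write/read split concrete, here is a minimal, self-contained sketch of an inverted index in plain Java. It is a toy and not how Lucene actually stores an index. The class name ToyInvertedIndex, the naive regex tokenizer and the AND-only search are all illustrative assumptions. add() plays the indexer's role by mapping each term to the documents that contain it, and search() plays the searcher's role by looking those terms up.

```java
import java.util.*;

/**
 * Hypothetical toy inverted index (not Lucene's implementation):
 * add() is the "write" side, search() is the "read" side.
 */
class ToyInvertedIndex {
    // term -> ids of the documents that contain the term
    private final Map<String, Set<Integer>> postings = new HashMap<>();
    private final List<String> docs = new ArrayList<>();

    // Write: tokenize the text and record which document each term occurs in
    int add(String text) {
        int docId = docs.size();
        docs.add(text);
        for (String term : tokenize(text)) {
            postings.computeIfAbsent(term, k -> new TreeSet<>()).add(docId);
        }
        return docId;
    }

    // Read: return ids of documents containing every query term (AND semantics)
    Set<Integer> search(String query) {
        Set<Integer> result = null;
        for (String term : tokenize(query)) {
            Set<Integer> ids = postings.getOrDefault(term, Collections.emptySet());
            if (result == null) {
                result = new TreeSet<>(ids);
            } else {
                result.retainAll(ids);
            }
        }
        return result == null ? Collections.emptySet() : result;
    }

    // Naive tokenizer: lowercase, then split on anything that is not a letter or digit
    static List<String> tokenize(String text) {
        List<String> terms = new ArrayList<>();
        for (String t : text.toLowerCase().split("[^\\p{L}\\p{N}]+")) {
            if (!t.isEmpty()) {
                terms.add(t);
            }
        }
        return terms;
    }

    public static void main(String[] args) {
        ToyInvertedIndex index = new ToyInvertedIndex();
        index.add("Lucene builds an inverted index");
        index.add("The searcher reads the index");
        System.out.println(index.search("index"));          // [0, 1]
        System.out.println(index.search("inverted index")); // [0]
    }
}
```

Lucene's real index also keeps term frequencies, positions and stored fields, but the core idea of a term-to-documents lookup built once and queried many times is the same.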

```java
import java.io.*;
import java.nio.file.Paths;

import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.standard.StandardAnalyzer;
import org.apache.lucene.document.Document;
import org.apache.lucene.document.Field.Store;
import org.apache.lucene.document.LongField;
import org.apache.lucene.document.StringField;
import org.apache.lucene.document.TextField;
import org.apache.lucene.index.IndexWriter;
import org.apache.lucene.index.IndexWriterConfig;
import org.apache.lucene.store.Directory;
import org.apache.lucene.store.FSDirectory;

/**
 * This class demonstrates the process of creating a Lucene index
 * for text files (written against the Lucene 5.x API).
 */
public class TxtFileIndexer {

    public static void main(String[] args) throws Exception {
        // dataDir is the directory that holds the text files to be indexed
        File dataDir = new File("G:\\downloads\\LJParser_release\\LJParser_Packet\\训练分类用文本\\交通");
        File[] dataFiles = dataDir.listFiles(); // all training sample files
        if (dataFiles == null) {
            throw new IOException("Not a readable directory: " + dataDir);
        }

        Analyzer luceneAnalyzer = new StandardAnalyzer(); // create an analyzer (tokenizer) instance
        IndexWriterConfig config = new IndexWriterConfig(luceneAnalyzer);
        // Rebuild the index on every run instead of appending duplicate documents
        config.setOpenMode(IndexWriterConfig.OpenMode.CREATE);

        long startTime = System.currentTimeMillis();

        // indexDir is the directory that holds Lucene's index files;
        // try-with-resources closes the writer and directory even if indexing fails
        try (Directory indexDir = FSDirectory.open(Paths.get("G:\\luceneout"));
             IndexWriter indexWriter = new IndexWriter(indexDir, config)) {
            for (File file : dataFiles) {
                if (file.isFile() && file.getName().endsWith(".txt")) {
                    System.out.println("Indexing file " + file.getCanonicalPath()); // absolute path
                    Document document = new Document(); // each file becomes one Document

                    // FileReader decodes with the platform default charset
                    try (Reader txtReader = new FileReader(file)) {
                        // path: indexed as a single untokenized term, and stored
                        document.add(new StringField("path", file.getPath(), Store.YES));
                        // content: tokenized from the Reader; a Reader-based field is NOT stored
                        document.add(new TextField("content", txtReader));
                        // fileSize: numeric field, stored so it can be printed at search time
                        document.add(new LongField("fileSize", file.length(), Store.YES));
                        // filename: tokenized and stored
                        document.add(new TextField("filename", file.getName(), Store.YES));
                        indexWriter.addDocument(document); // write the document to the index
                    }
                }
            }
            // optimize() was removed in Lucene 4.0; forceMerge(1) is the nearest
            // equivalent and is rarely needed:
            // indexWriter.forceMerge(1);
        }

        long endTime = System.currentTimeMillis();
        System.out.println("It takes " + (endTime - startTime)
                + " milliseconds to create index for the files in directory "
                + dataDir.getPath());
    }
}
```
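The indexer collects files with java.io.File and reads them with FileReader, which always decodes with the platform default charset. If the corpus encoding differs from that default (the files may well be GBK, but that is an assumption worth checking), the text is mis-decoded before Lucene ever sees it. Below is a sketch of the same file-collection step using java.nio.file with an explicit charset; TxtFileLoader and loadTxtFiles are hypothetical names, and reading whole files into memory assumes the files are small.

```java
import java.io.IOException;
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical helper: reads every *.txt file in a directory with an explicit charset. */
class TxtFileLoader {

    // Returns file name -> full text for each regular *.txt file directly inside dir
    static Map<String, String> loadTxtFiles(Path dir, Charset charset) throws IOException {
        Map<String, String> result = new TreeMap<>();
        // A glob-filtered DirectoryStream replaces listFiles() + endsWith(".txt")
        try (DirectoryStream<Path> stream = Files.newDirectoryStream(dir, "*.txt")) {
            for (Path file : stream) {
                if (Files.isRegularFile(file)) {
                    // Decode with the given charset instead of the platform default
                    byte[] bytes = Files.readAllBytes(file);
                    result.put(file.getFileName().toString(), new String(bytes, charset));
                }
            }
        }
        return result;
    }

    public static void main(String[] args) throws IOException {
        Path dir = Files.createTempDirectory("txt-demo");
        Files.write(dir.resolve("a.txt"), "交通 新闻".getBytes(StandardCharsets.UTF_8));
        Files.write(dir.resolve("b.csv"), "ignored".getBytes(StandardCharsets.UTF_8));
        Map<String, String> loaded = loadTxtFiles(dir, StandardCharsets.UTF_8);
        System.out.println(loaded.keySet()); // [a.txt]
    }
}
```

For GBK files, Charset.forName("GBK") would be passed instead of UTF-8. Each returned string could then be indexed with new TextField("content", text, Store.YES); storing the text also lets document.get("content") return it at search time, at the cost of a larger index.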

Reading the index and searching it

```java
import java.nio.file.Paths;

import org.apache.lucene.analysis.standard.StandardAnalyzer;
import org.apache.lucene.document.Document;
import org.apache.lucene.index.DirectoryReader;
import org.apache.lucene.queryparser.classic.QueryParser;
import org.apache.lucene.search.IndexSearcher;
import org.apache.lucene.search.Query;
import org.apache.lucene.search.ScoreDoc;
import org.apache.lucene.search.TopDocs;
import org.apache.lucene.store.Directory;
import org.apache.lucene.store.FSDirectory;

/**
 * This class demonstrates the process of searching
 * an existing Lucene index (Lucene 5.x API).
 */
public class TxtFileSearcher {

    public static void main(String[] args) throws Exception {
        // indexDir holds the index files; the reader opens them for searching.
        // try-with-resources closes both when done
        try (Directory indexDir = FSDirectory.open(Paths.get("G:\\luceneout"));
             DirectoryReader ireader = DirectoryReader.open(indexDir)) {
            IndexSearcher searcher = new IndexSearcher(ireader);

            // The string to search for ("big data mining")
            String queryStr = "大数据挖掘";

            // Parse the query against the "content" field, using the same
            // analyzer that was used at indexing time
            QueryParser parser = new QueryParser("content", new StandardAnalyzer());
            Query query = parser.parse(queryStr);

            // Collect the 100 highest-scoring hits
            TopDocs docs = searcher.search(query, 100);
            System.out.println("Total hits: " + docs.totalHits);

            // Print information about each hit
            for (ScoreDoc scoreDoc : docs.scoreDocs) {
                Document document = searcher.doc(scoreDoc.doc);
                System.out.println("doc id: " + scoreDoc.doc
                        + " | score: " + scoreDoc.score
                        + " | path: " + document.get("path")
                        + " | file size: " + document.get("fileSize")
                        + " | file name: " + document.get("filename"));
                // "content" was indexed from a Reader and is not stored,
                // so document.get("content") would return null here
            }
        }
    }
}
```
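searcher.search(query, 100) puts at most the 100 best-scoring hits into docs.scoreDocs, while docs.totalHits counts every matching document. Conceptually this is top-N selection, which a bounded min-heap handles efficiently. The sketch below shows only that idea: TopNCollector and Hit are hypothetical stand-ins for Lucene's collector and ScoreDoc, the scores are made-up inputs rather than real Lucene scores, and tie-breaking is ignored.

```java
import java.util.*;

/** Hypothetical sketch of top-N hit collection with a bounded min-heap. */
class TopNCollector {

    // Minimal stand-in for a ScoreDoc: a document id and its score
    static final class Hit {
        final int doc;
        final float score;

        Hit(int doc, float score) {
            this.doc = doc;
            this.score = score;
        }

        @Override
        public String toString() {
            return doc + ":" + score;
        }
    }

    // Keeps the n highest-scoring hits and returns them best-first
    static List<Hit> topN(float[] scores, int n) {
        // Min-heap on score: the weakest kept hit sits at the head and is evicted first
        PriorityQueue<Hit> heap = new PriorityQueue<>(
                Comparator.comparingDouble((Hit h) -> h.score));
        for (int doc = 0; doc < scores.length; doc++) {
            if (scores[doc] <= 0) {
                continue; // treat a zero score as "document does not match"
            }
            heap.offer(new Hit(doc, scores[doc]));
            if (heap.size() > n) {
                heap.poll(); // drop the current lowest score
            }
        }
        List<Hit> result = new ArrayList<>(heap);
        result.sort(Comparator.comparingDouble((Hit h) -> h.score).reversed());
        return result;
    }

    public static void main(String[] args) {
        float[] scores = {0.5f, 0f, 2.0f, 1.2f, 0.1f};
        System.out.println(topN(scores, 2)); // [2:2.0, 3:1.2]
    }
}
```

The heap never holds more than n + 1 entries, so memory stays bounded no matter how many documents match, which is why asking for 100 hits is cheap even on a large index.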

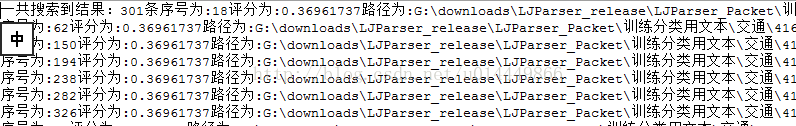

Run results

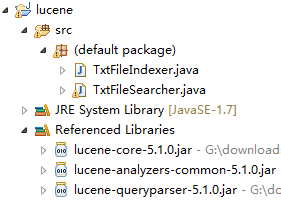

Below is the file directory.

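Both programs need an analyzer (tokenizer): the indexer passes it to IndexWriterConfig so documents are tokenized as they are written, and the searcher passes it to QueryParser so the query string is tokenized the same way. The two should use the same analyzer (here StandardAnalyzer); otherwise the query terms may not line up with the terms in the index.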
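One consequence for this example: StandardAnalyzer has no Chinese word dictionary, so it emits each Han character as a separate term, and QueryParser combines the resulting terms with OR by default. The query 大数据挖掘 is therefore expected to match any document containing even one of its five characters, which can make the result set very broad. The toy analyzer below mimics only that behaviour in plain Java; CjkUnigramDemo, analyze and matchesAny are hypothetical names, and its handling of non-Chinese words is a simplification of Lucene's tokenizer.

```java
import java.util.*;

/** Hypothetical toy analyzer mimicking how StandardAnalyzer splits Chinese text. */
class CjkUnigramDemo {

    // Each Han character becomes its own term; runs of other letters/digits become lowercased words
    static List<String> analyze(String text) {
        List<String> terms = new ArrayList<>();
        StringBuilder word = new StringBuilder();
        for (int i = 0; i < text.length(); ) {
            int cp = text.codePointAt(i);
            i += Character.charCount(cp);
            if (Character.UnicodeScript.of(cp) == Character.UnicodeScript.HAN) {
                flush(word, terms);
                terms.add(new String(Character.toChars(cp)));
            } else if (Character.isLetterOrDigit(cp)) {
                word.appendCodePoint(Character.toLowerCase(cp));
            } else {
                flush(word, terms); // whitespace or punctuation ends the current word
            }
        }
        flush(word, terms);
        return terms;
    }

    private static void flush(StringBuilder word, List<String> terms) {
        if (word.length() > 0) {
            terms.add(word.toString());
            word.setLength(0);
        }
    }

    // OR semantics (QueryParser's default operator): a document matches if it shares any term
    static boolean matchesAny(String doc, String query) {
        Set<String> docTerms = new HashSet<>(analyze(doc));
        for (String term : analyze(query)) {
            if (docTerms.contains(term)) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        System.out.println(analyze("大数据挖掘"));            // five single-character terms
        System.out.println(matchesAny("数据库", "大数据挖掘")); // true
    }
}
```

If word-level matching matters, a Chinese-aware analyzer such as SmartChineseAnalyzer (from the lucene-analyzers-smartcn module) is one option, provided the indexer and the searcher are switched to it together.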