Introduction to VRRP

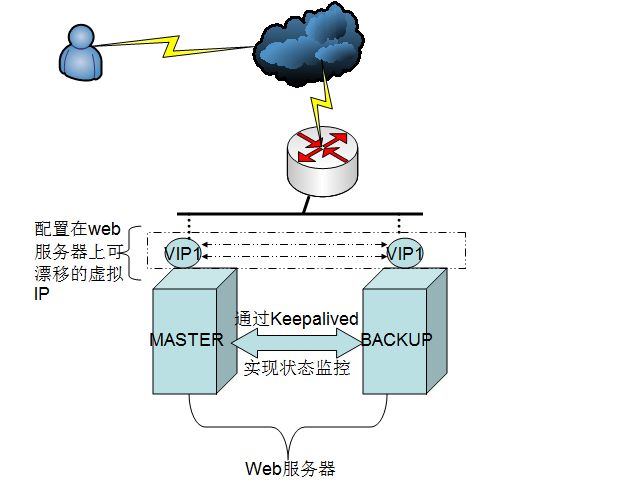

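VRRP (Virtual Router Redundancy Protocol) removes the single point of failure of a statically configured default gateway. Several routers are grouped into one virtual router (a VRRP group) that shares a virtual IP (VIP), and clients only need to use the VIP as their default gateway. Which physical router holds the VIP at any moment is decided by the VRRP mechanism, which is what lets the gateway address float between devices. The MASTER and BACKUP devices each have a priority, and a larger number means a higher priority. The nodes learn whether the MASTER is still online from the advertisements it sends at a fixed interval. When the MASTER goes down, the VIP moves to a backup router, which makes the front-end gateway highly available.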
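The priority rule can be illustrated with a small shell sketch. It is not how a VRRP daemon is implemented; the function name `elect_master` and the `NAME:PRIORITY` argument format are made up for this example, and ties simply go to the first node listed (real VRRP has its own tie-break rule).

```shell
#!/bin/sh
# elect_master NAME:PRIORITY...
# Hypothetical helper: prints the name of the node with the highest priority.
# Only nodes that are currently online should be passed in.
elect_master() (
    best_name=""
    best_prio=-1
    for entry in "$@"; do
        name=${entry%%:*}    # text before the first colon
        prio=${entry##*:}    # text after the last colon
        if [ "$prio" -gt "$best_prio" ]; then
            best_name=$name
            best_prio=$prio
        fi
    done
    printf '%s\n' "$best_name"
)

# Both nodes online: the node with priority 101 holds the VIP.
elect_master node1:101 node2:100
# node1 is down, so only node2 takes part and the VIP moves to it.
elect_master node2:100
```

The priorities 101 and 100 are the ones used for node1 and node2 later in this post.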

VRRP in one-master, multiple-backup mode

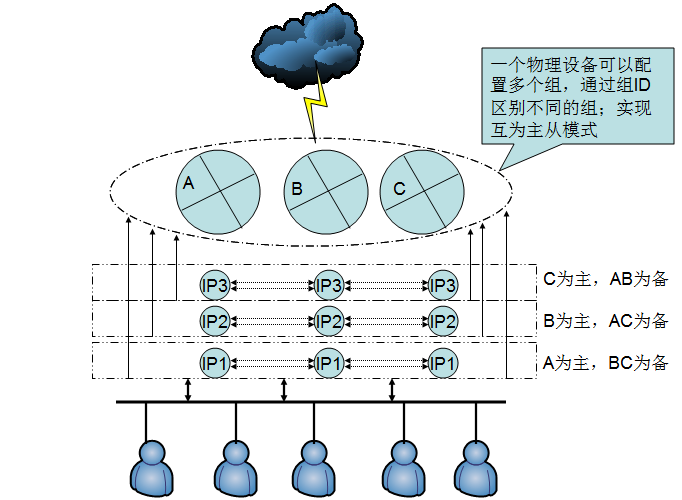

VRRP in multi-master mode

Introduction to Keepalived

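Keepalived was originally designed for LVS, to monitor the state of each Real Server node in the cluster. It checks every service node using Layer 3, 4 and 5 health checks. When a service node fails, Keepalived detects the failure and removes the node from the service. When the node returns to a healthy state, Keepalived automatically adds it back to the cluster's server list. VRRP support was added later, so today Keepalived provides both server health checking and HA cluster functionality.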
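The remove-on-failure, re-add-on-recovery behaviour can be sketched in shell. This is only an illustration of the idea, not Keepalived's code: `healthy_pool` and `http_ok` are hypothetical names, and using `curl` for the check is an assumption of this sketch.

```shell
#!/bin/sh
# healthy_pool CHECK SERVER...
# Hypothetical helper: runs CHECK against every server and prints the
# servers that passed, i.e. the pool that should currently receive traffic.
healthy_pool() (
    check=$1
    shift
    pool=""
    for server in "$@"; do
        if "$check" "$server" >/dev/null 2>&1; then
            pool="${pool:+$pool }$server"
        fi
    done
    printf '%s\n' "$pool"
)

# One possible check (assumes curl is installed): an HTTP GET that must
# succeed within 3 seconds.
http_ok() {
    curl -fs -o /dev/null --max-time 3 "http://$1/"
}
```

Calling `healthy_pool http_ok 172.16.51.77 172.16.51.78` in a loop would drop a failed server from the printed pool and list it again once it answers.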

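That covers the basics. Now let's look at how Keepalived is used in practice.

LVS high availability with Keepalived

LVS is deployed in DR mode here. The detailed DR configuration was covered in an earlier post on implementing the DR model.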

IP address plan

| | HA1 | HA2 | Real Server1 | Real Server2 |
| --- | --- | --- | --- | --- |
| FQDN | node1.magedu.com | node2.magedu.com | test1.magedu.com | test2.magedu.com |
| IP | 172.16.51.76 | 172.16.51.100 | 172.16.51.77 | 172.16.51.78 |

Preparing the Real Servers

Install the web service

# yum install httpd -y

Test the web service

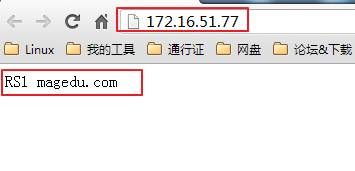

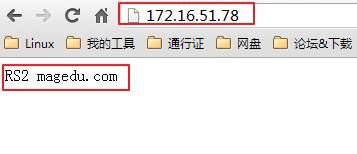

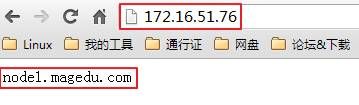

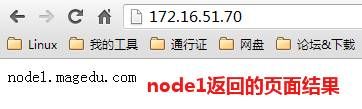

Real Server1

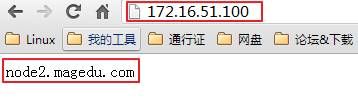

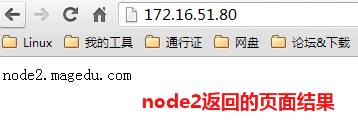

Real Server2

Configuring the LVS DR model

VIP configuration on Real Server1

# sysctl -w net.ipv4.conf.lo.arp_ignore=1

# sysctl -w net.ipv4.conf.all.arp_ignore=1

# sysctl -w net.ipv4.conf.lo.arp_announce=2

# sysctl -w net.ipv4.conf.all.arp_announce=2

# ifconfig lo:0 172.16.51.70 broadcast 172.16.51.70 netmask 255.255.255.255 up

# route add -host 172.16.51.70 dev lo:0

The VIP configuration on Real Server2 is identical to Real Server1, so it is not repeated here.
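Since the same commands have to be run on both Real Servers, they can be collected in a small script. The following is a sketch under a few assumptions: the function names `rs_start` and `rs_stop`, the `DRY_RUN` switch and the whole `rs_stop` teardown are additions for illustration and not part of the original setup. The commands in `rs_start` are exactly the ones listed above.

```shell
#!/bin/sh
# VIP used throughout this post; override with VIP=... if needed.
VIP=${VIP:-172.16.51.70}

# run CMD...: execute the command, or only print it when DRY_RUN is set.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Configure ARP behaviour and bind the VIP to lo:0 (needs root).
rs_start() {
    run sysctl -w net.ipv4.conf.lo.arp_ignore=1
    run sysctl -w net.ipv4.conf.all.arp_ignore=1
    run sysctl -w net.ipv4.conf.lo.arp_announce=2
    run sysctl -w net.ipv4.conf.all.arp_announce=2
    run ifconfig lo:0 "$VIP" broadcast "$VIP" netmask 255.255.255.255 up
    run route add -host "$VIP" dev lo:0
}

# Assumed teardown (not shown in the original): remove the VIP again and
# restore the kernel defaults for the ARP settings.
rs_stop() {
    run ifconfig lo:0 down
    run sysctl -w net.ipv4.conf.lo.arp_ignore=0
    run sysctl -w net.ipv4.conf.all.arp_ignore=0
    run sysctl -w net.ipv4.conf.lo.arp_announce=0
    run sysctl -w net.ipv4.conf.all.arp_announce=0
}
```

Setting `DRY_RUN=1` before calling `rs_start` prints the six commands without changing the system, which is a cheap way to review them before running as root.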

Install ipvsadm on Real Server1 and Real Server2

# yum -y install ipvsadm

Configuration on HA1 and HA2

Install keepalived on HA1 and HA2

# yum -y --nogpgcheck localinstall keepalived-1.2.7-5.el5.i386.rpm

Edit the configuration file (on HA1)

# cd /etc/keepalived/

# vim keepalived.conf

Global settings (the mail options are left mostly unchanged)

global_defs {
    notification_email {
        [email protected]
        [email protected]
        [email protected]
    }
    notification_email_from root@localhost
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id LVS_DEVEL
}

VRRP instance (virtual router group)

vrrp_instance VI_1 {
    state MASTER
    interface eth0
    virtual_router_id 51
    priority 101
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass password
    }
    virtual_ipaddress {
        172.16.51.70
    }
}

virtual_server and real_server settings

virtual_server 172.16.51.70 80 {
    delay_loop 6
    lb_algo wlc
    lb_kind DR
    nat_mask 255.255.0.0
    persistence_timeout 50
    protocol TCP

    real_server 172.16.51.77 80 {
        weight 1
        HTTP_GET {
            url {
                path /
                status_code 200
            }
            connect_timeout 3
            nb_get_retry 3
            delay_before_retry 3
        }
    }

    real_server 172.16.51.78 80 {
        weight 1
        HTTP_GET {
            url {
                path /
                status_code 200
            }
            connect_timeout 3
            nb_get_retry 3
            delay_before_retry 3
        }
    }
}
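To get a feel for what the `connect_timeout`, `nb_get_retry` and `delay_before_retry` settings ask for, here is a rough shell equivalent of a status-code check with retries. It is a sketch, not Keepalived's checker: `check_with_retry` and `http_status_is` are hypothetical names, `curl` is assumed to be installed, and treating the retry count as "extra attempts after the first one" is an assumption about the semantics.

```shell
#!/bin/sh
# check_with_retry RETRIES DELAY CMD...
# Runs CMD; on failure waits DELAY seconds and tries again, up to RETRIES
# extra attempts. Succeeds as soon as one attempt succeeds.
check_with_retry() (
    retries=$1
    delay=$2
    shift 2
    attempt=0
    while :; do
        "$@" && exit 0
        [ "$attempt" -ge "$retries" ] && exit 1
        attempt=$((attempt + 1))
        sleep "$delay"
    done
)

# http_status_is URL CODE
# Succeeds when a GET on URL returns the expected HTTP status code.
# The 3 second connect timeout mirrors connect_timeout 3 in the config.
http_status_is() {
    code=$(curl -s -o /dev/null -w '%{http_code}' --connect-timeout 3 "$1")
    [ "$code" = "$2" ]
}
```

For example, `check_with_retry 3 3 http_status_is http://172.16.51.77/ 200` probes Real Server1 the way the settings above describe, which is handy for testing a Real Server by hand before starting keepalived.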

When the changes are done, copy the same file to HA2

# scp /etc/keepalived/keepalived.conf node2:/etc/keepalived/

Edit the keepalived configuration file on node2 and change the following items

state BACKUP

priority 100
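The two changes can also be applied with `sed` instead of editing by hand. This is a minimal sketch assuming the file contains exactly the `state MASTER` and `priority 101` lines shown earlier; the function name `to_backup_conf` is made up for the example.

```shell
#!/bin/sh
# to_backup_conf: reads a keepalived.conf on stdin and writes a copy to
# stdout with "state MASTER" turned into "state BACKUP" and
# "priority 101" into "priority 100". Leading indentation is preserved,
# and comment lines are left alone because they do not start with the keyword.
to_backup_conf() {
    sed -e 's/^\([[:space:]]*state[[:space:]]\{1,\}\)MASTER/\1BACKUP/' \
        -e 's/^\([[:space:]]*priority[[:space:]]\{1,\}\)101/\1100/'
}
```

On node2 this could be used as `to_backup_conf < keepalived.conf > keepalived.conf.new`, followed by a review of the result before replacing the original file.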

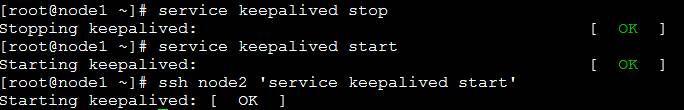

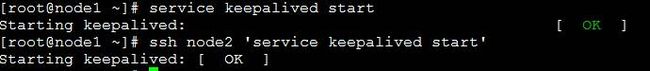

Start keepalived

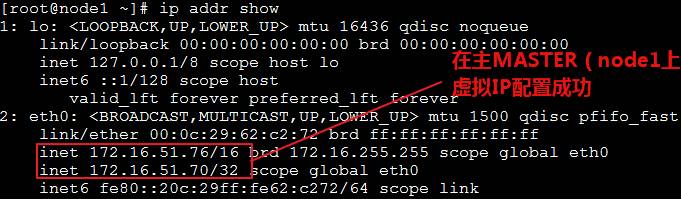

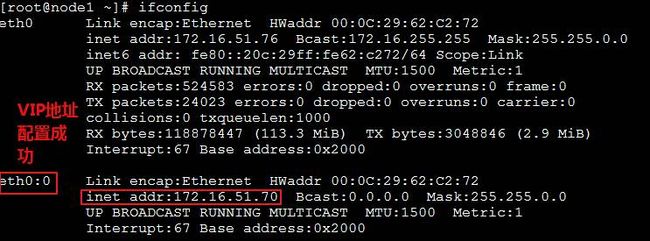

Check that the configuration has taken effect

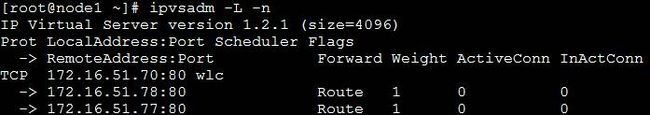

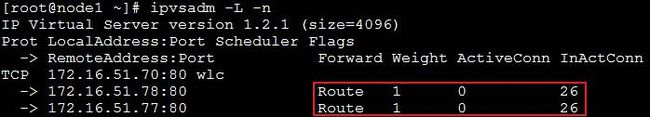

View the generated ipvsadm rules

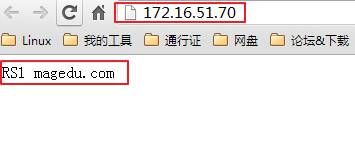

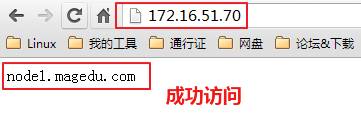
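The rules shown in the screenshots come from `ipvsadm -L -n`. For scripted checks, the real servers can be pulled out of that listing with a short filter. This is a sketch that assumes the usual column layout of the listing (`-> address:port  Forward  Weight ...`); `list_real_servers` is a hypothetical name.

```shell
#!/bin/sh
# list_real_servers: reads the output of "ipvsadm -L -n" on stdin and prints
# one line per real server with its address and weight.
# Real-server rows start with "->"; the header row is skipped.
list_real_servers() {
    awk '$1 == "->" && $2 != "RemoteAddress:Port" { print $2, "weight=" $4 }'
}
```

Run as root on the director: `ipvsadm -L -n | list_real_servers`. If a real server fails its health check, it should disappear from this list until it recovers.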

使用window主机浏览器进行访问测试

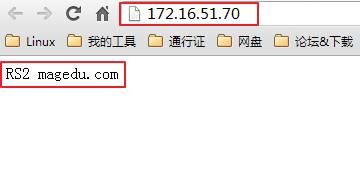

刷新一下

刷新多次后,查看相关匹配信息

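Web service high availability with Keepalived

With the experiment above finished, the next step makes the web service itself highly available on the HA1 and HA2 hosts.

Stop keepalived on node1 and node2

# service keepalived stop

Install the web service on node1 and node2

# yum -y install httpd

After starting the web service, prepare a web page on each node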

# echo "node1.magedu.com" >/var/www/html/index.html

# echo "node2.magedu.com" >/var/www/html/index.html

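Access the web pages to test

Prepare the configuration file. The rpm (packaged by MaGe himself) already ships a sample file, and the rpm has been uploaded as an attachment.

# cp keepalived.conf.haproxy_example keepalived.conf

# vim keepalived.conf

Changes

Define the check script for the httpd service (the whole configuration file is pasted here)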

! Configuration File for keepalived

global_defs {
    notification_email {
        [email protected]
        [email protected]
    }
    notification_email_from [email protected]
    smtp_connect_timeout 3
    smtp_server 127.0.0.1
    router_id LVS_DEVEL
}

vrrp_script chk_httpd {
    script "killall -0 httpd"
    interval 2
    # check every 2 seconds
    weight -2
    # if the check fails, decrease the priority by 2
    fall 2
    # require 2 consecutive failures before marking the check as failed
    rise 1
    # require 1 success before marking the check as ok
}

vrrp_script chk_schedown {
    script "[[ -f /etc/keepalived/down ]] && exit 1 || exit 0"
    interval 2
    weight -2
}
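How `weight -2` turns a failed check into a failover can be shown with a little arithmetic. The sketch below models a single tracked script only; `effective_priority` is a hypothetical helper, and it encodes the rule that a negative weight lowers the priority while the script is failing and a positive weight raises it while the script succeeds.

```shell
#!/bin/sh
# effective_priority BASE WEIGHT STATUS
# STATUS is "ok" or "fail" (the result of one tracked vrrp_script).
# Prints the priority the node would advertise.
effective_priority() (
    base=$1
    weight=$2
    status=$3
    if [ "$weight" -lt 0 ] && [ "$status" = "fail" ]; then
        echo $((base + weight))
    elif [ "$weight" -gt 0 ] && [ "$status" = "ok" ]; then
        echo $((base + weight))
    else
        echo "$base"
    fi
)

# node1 (priority 101) with a failing check and weight -2:
effective_priority 101 -2 fail
# node1 with the check passing again:
effective_priority 101 -2 ok
```

With the check failing, node1 drops to 99, which is below node2's 100, so node2 takes over the VIP. Once the check passes again node1 is back at 101 and reclaims it.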

vrrp_instance VI_1 {
    interface eth0
    # interface for inside_network, bound by vrrp
    state MASTER
    # Initial state, MASTER|BACKUP
    # As soon as the other machine(s) come up,
    # an election will be held and the machine
    # with the highest "priority" will become MASTER.
    # So the entry here doesn't matter a whole lot.
    priority 101
    # for electing MASTER, highest priority wins.
    # to be MASTER, make 50 more than other machines.
    virtual_router_id 51
    # arbitrary unique number 0..255
    # used to differentiate multiple instances of vrrpd
    # running on the same NIC (and hence same socket).
    garp_master_delay 1
    authentication {
        auth_type PASS
        auth_pass password
    }
    track_interface {
        eth0
    }
    # optional, monitor these as well.
    # go to FAULT state if any of these go down.
    virtual_ipaddress {
        172.16.51.70/16 dev eth0 label eth0:0
    }
    # addresses add|del on change to MASTER, to BACKUP.
    # With the same entries on other machines,
    # the opposite transition will be occurring.
    track_script {
        chk_httpd
        chk_schedown
    }
}

# virtual_ipaddress entry syntax: <IPADDR>/<MASK> brd <IPADDR> dev <STRING> scope <SCOPE> label <LABEL>

Copy the configuration file to the node2 host

# scp /etc/keepalived/keepalived.conf node2:/etc/keepalived/

After copying, change the following items on node2

state BACKUP

priority 100

Start the keepalived service

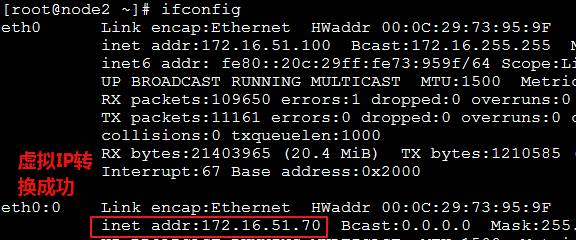

Check on the MASTER (node1) that the VIP (an alias IP on eth0) has been configured

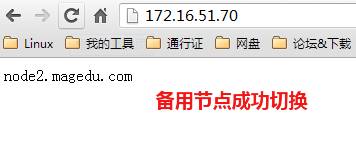

访问虚拟IP地址

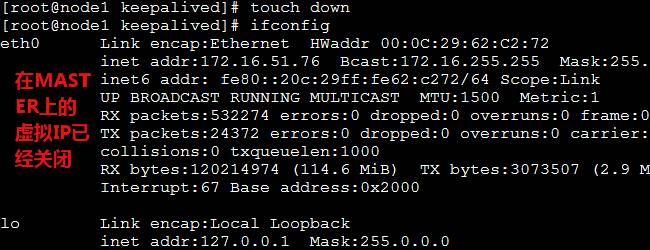

主备转换测试

# cd /etc/keepalived/

访问虚拟IP

现在把down文件删除,MASTER节点会夺回资源,因为在node1上的优先级(101)比nod2上的优先级(100)高

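Dual-master mode

Both hosts run the web service at the same time, each with a different VIP, so that they act as master and backup for each other.

Edit keepalived.conf (only the "vrrp_instance VI_2" block at the end of the file needs to change)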

vrrp_instance VI_2 {
    interface eth0
    state BACKUP    # BACKUP for slave routers
    priority 100    # 100 for BACKUP
    virtual_router_id 52
    garp_master_delay 1
    authentication {
        auth_type PASS
        auth_pass password
    }
    track_interface {
        eth0
    }
    virtual_ipaddress {
        172.16.51.80/16 dev eth0 label eth0:1
    }
    track_script {
        chk_httpd
        chk_schedown
    }
    notify_master "/etc/keepalived/notify.sh master eth0:1"
    notify_backup "/etc/keepalived/notify.sh backup eth0:1"
    notify_fault "/etc/keepalived/notify.sh fault eth0:1"
}
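The `notify.sh` script called by the three `notify_*` lines ships with the rpm and is not reproduced in this post. Purely as an illustration of what such a script receives, here is a hypothetical stand-in that takes the same two arguments (the new state and the interface label) and prints a log line. The function name, the message format and the idea of only logging are all assumptions; the real script may do something quite different, such as sending mail.

```shell
#!/bin/sh
# Hypothetical stand-in for /etc/keepalived/notify.sh (not the original).
# Usage: notify.sh {master|backup|fault} LABEL

# notify_message STATE LABEL: print one timestamped line per state change.
notify_message() {
    state=$1
    label=$2
    case "$state" in
        master|backup|fault)
            printf '%s %s: %s changed to %s state\n' \
                "$(date '+%Y-%m-%d %H:%M:%S')" "$(uname -n)" "$label" "$state"
            ;;
        *)
            echo "usage: notify.sh {master|backup|fault} LABEL" >&2
            return 1
            ;;
    esac
}

# Only act when called with arguments, as keepalived would call it.
[ "$#" -eq 0 ] || notify_message "$@"
```

Redirecting the output to a file inside the script would give a simple history of when each VIP moved between node1 and node2.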

After enabling the "vrrp_instance VI_2" block on node2, adjust the second VIP address and the script names (chk_httpd, chk_schedown)

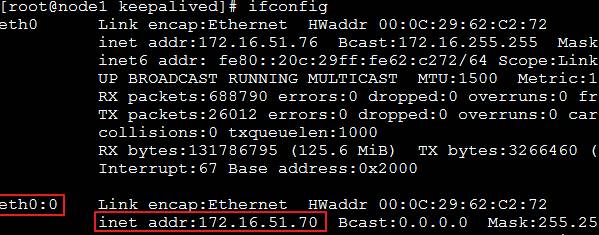

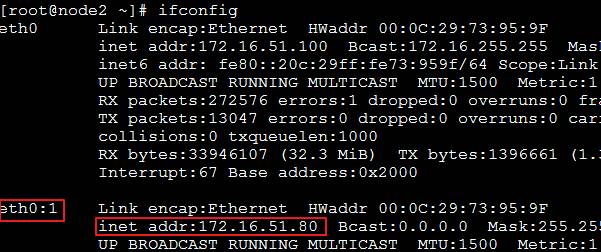

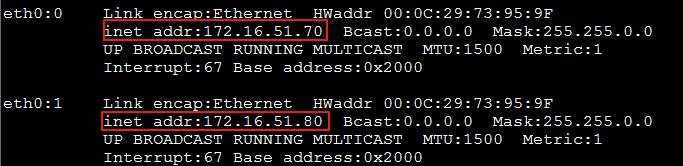

After the changes, start the keepalived service and check the VIP configuration on node1 and node2

node1 host

node2 host

Test

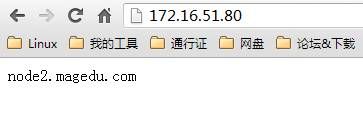

Simulate a failure on node1

# cd /etc/keepalived/

# touch down

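At this point, requests to both 172.16.51.70 and 172.16.51.80 are answered by the node2 host.

That completes LVS high availability and web service high availability with Keepalived. I hope it is of some help to you.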