Preface

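AVFoundation is one of the most important frameworks on iOS. It provides most of the APIs for working with audio and video, including control of the capture hardware. The next few articles introduce and explain this framework.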

To make this AVFoundation series easy to browse, here is a shortcut to its table of contents.

AVFoundation Framework Analysis: Table of Contents

Chapter Overview

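The previous chapter surveyed the AVFoundation framework as a whole. This chapter opens the door to AVFoundation through one small requirement: starting from media capture, record a video and save it. Along the way it shows some of what the framework can do.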

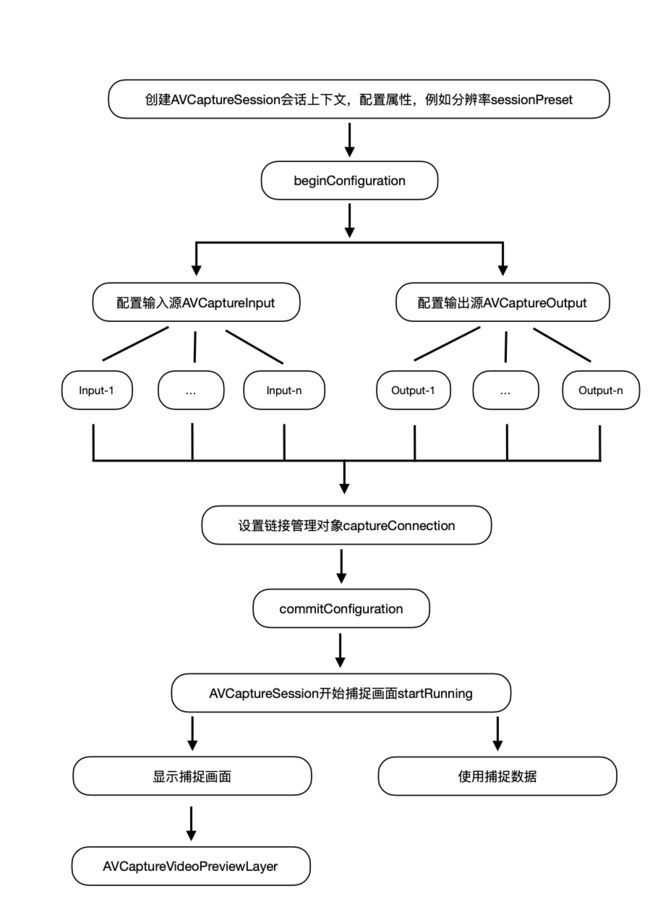

Media capture flow

Applying It to a Requirement

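Once you understand the overall AVCapture flow, adapt the details to your specific requirement, mainly by choosing the inputs and outputs that the task needs.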

A few simple example requirements:

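- Scanning: capture media, read the scanned content, and recognize QR codes or barcodes

- Photos: capture media and take still photos

- Video: capture media and record videos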
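The three requirements differ mainly in which output is attached to the session. A minimal sketch of that mapping follows; the `CaptureRequirement` enum and the `addOutput(for:to:)` helper are hypothetical names for illustration, and the sketch assumes an iOS target. A real scanner also needs `setMetadataObjectsDelegate(_:queue:)` to receive results, and photo capture goes through `AVCapturePhotoOutput.capturePhoto(with:delegate:)`; both are omitted here.

```swift
import AVFoundation

// Hypothetical enum naming the three example requirements.
enum CaptureRequirement {
    case scan   // QR code / barcode scanning
    case photo  // still photos
    case video  // movie recording
}

// Sketch: create the output that matches a requirement and attach it
// to the session. Returns nil if the session rejects the output.
func addOutput(for requirement: CaptureRequirement,
               to session: AVCaptureSession) -> AVCaptureOutput? {
    let output: AVCaptureOutput
    switch requirement {
    case .scan:  output = AVCaptureMetadataOutput()
    case .photo: output = AVCapturePhotoOutput()
    case .video: output = AVCaptureMovieFileOutput()
    }
    guard session.canAddOutput(output) else { return nil }
    session.addOutput(output)

    // metadataObjectTypes must be a subset of availableMetadataObjectTypes,
    // which is only populated once the output is in a session with a video
    // input, so filter instead of assigning the wanted types directly.
    if let metadata = output as? AVCaptureMetadataOutput {
        let wanted: [AVMetadataObject.ObjectType] = [.qr, .ean13, .code128]
        metadata.metadataObjectTypes = wanted.filter {
            metadata.availableMetadataObjectTypes.contains($0)
        }
    }
    return output
}
```

For the recording demo in this chapter, the call would be `addOutput(for: .video, to: captureSession)`.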

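The demo takes video recording as its requirement: once AVCapture captures the frames, it uses the data from the matching output (AVCaptureMovieFileOutput) to fulfill the requirement.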

Source Walkthrough

No pseudocode this time; straight to the source.

```swift
func setCameraConfiguration() {
    // Create the capture session
    let captureSession = AVCaptureSession()
    self.captureSession = captureSession

    // Batch all configuration changes between begin/commitConfiguration
    captureSession.beginConfiguration()
    captureSession.sessionPreset = .high

    // Configure inputs: back wide-angle camera and default microphone
    // (AVCaptureDevice.devices() has been deprecated since iOS 10)
    let videoDevice = AVCaptureDevice.default(.builtInWideAngleCamera, for: .video, position: .back)
    let audioDevice = AVCaptureDevice.default(for: .audio)
    for device in [videoDevice, audioDevice].compactMap({ $0 }) {
        do {
            let input = try AVCaptureDeviceInput(device: device)
            if captureSession.canAddInput(input) {
                captureSession.addInput(input)
            }
        } catch {
            print("Failed to create input for \(device.localizedName): \(error)")
        }
    }

    // Configure the output: record to a movie file
    let captureMovieFileOutput = AVCaptureMovieFileOutput()
    self.captureMovieFileOutput = captureMovieFileOutput
    if captureSession.canAddOutput(captureMovieFileOutput) {
        captureSession.addOutput(captureMovieFileOutput)
    }

    // Configure the video connection (it exists only once a video input and this output are in the session)
    if let captureConnection = captureMovieFileOutput.connection(with: .video) {
        // Orientation of the recorded video
        if captureConnection.isVideoOrientationSupported {
            captureConnection.videoOrientation = .portrait
        }
        // Video stabilization
        if captureConnection.isVideoStabilizationSupported {
            captureConnection.preferredVideoStabilizationMode = .auto
        }
    }

    captureSession.commitConfiguration()

    // startRunning() blocks until the session starts, so keep it off the main thread
    DispatchQueue.global(qos: .userInitiated).async {
        captureSession.startRunning()
    }
}
```
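`AVCaptureDevice.devices()` has been deprecated since iOS 10. A minimal sketch of the replacement lookup with `AVCaptureDevice.DiscoverySession`, which can also search several device types at once, plus an input helper that throws instead of silently discarding errors in an empty `catch`. The names `backCamera()`, `addInput(for:to:)` and `CaptureSetupError` are hypothetical.

```swift
import AVFoundation

// Sketch: find the back wide-angle camera via a discovery session.
func backCamera() -> AVCaptureDevice? {
    let discovery = AVCaptureDevice.DiscoverySession(
        deviceTypes: [.builtInWideAngleCamera],
        mediaType: .video,
        position: .back)
    return discovery.devices.first
}

// Hypothetical error type describing why an input could not be added.
enum CaptureSetupError: Error {
    case noDevice
    case cannotAddInput
}

// Sketch: wrap a device in an input and add it to the session,
// surfacing every failure to the caller.
func addInput(for device: AVCaptureDevice?,
              to session: AVCaptureSession) throws {
    guard let device = device else { throw CaptureSetupError.noDevice }
    let input = try AVCaptureDeviceInput(device: device)
    guard session.canAddInput(input) else { throw CaptureSetupError.cannotAddInput }
    session.addInput(input)
}
```

A caller would run `try addInput(for: backCamera(), to: session)` and `try addInput(for: AVCaptureDevice.default(for: .audio), to: session)` inside one `do`/`catch`, so a missing camera or microphone is reported instead of producing a silent, empty session.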

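There are four key pieces here, and you need to understand them thoroughly before you can apply them well. This chapter does not go into them in depth; if readers ask, later articles will cover them in detail.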

- AVCaptureSession

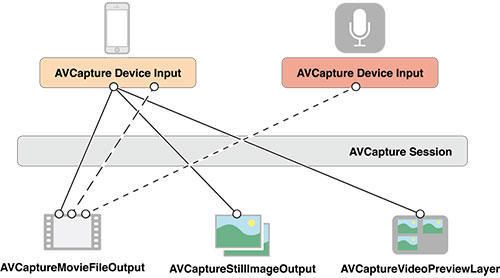

AVCaptureSession:媒体(音、视频)捕获会话,负责把捕获的音视频数据输出到输出设备中。一个AVCaptureSession可以有多个输入输出。

在视频捕获时,客户端可以实例化AVCaptureSession并添加适当的AVCaptureInputs、AVCaptureDeviceInput和输出,比如AVCaptureMovieFileOutput。通过[AVCaptureSession startRunning]开始数据流从输入到输出,和[AVCaptureSession stopRunning]停止输出输入的流动。客户端可以通过设置sessionPreset属性定制录制质量水平或输出的比特率。 - AVCaptureInput与AVCaptureDevice

设备输入数据管理对象,可以根据AVCaptureDevice创建对应AVCaptureDeviceInput对象,该对象将会被添加到AVCaptureSession中管理。 - AVCaptureOutput

设备输出数据管理对象 - AVCaptureVideoPreviewLayer

相机拍摄预览图层,是CALayer的子类,使用该对象可以实时查看拍照或视频录制效果,创建该对象需要指定对应的AVCaptureSession对象。
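The session lifecycle and the preview layer described above can be wrapped in one small object. A minimal sketch, assuming a hypothetical `CaptureSessionController` class: `startRunning()` is a blocking call, so start and stop run on a private serial queue rather than the main thread, and the preset is chosen with `canSetSessionPreset(_:)` so unsupported presets fall through to the next candidate.

```swift
import AVFoundation

// Hypothetical wrapper owning the session, its queue and its preview layer.
final class CaptureSessionController {
    let session = AVCaptureSession()
    // Serial queue so start/stop never block the main thread or interleave.
    private let sessionQueue = DispatchQueue(label: "capture.session.queue")

    // Preview layer bound to the session; add it to a view's layer and set its frame.
    lazy var previewLayer: AVCaptureVideoPreviewLayer = {
        let layer = AVCaptureVideoPreviewLayer(session: session)
        layer.videoGravity = .resizeAspectFill
        return layer
    }()

    // Apply the first preset in the list that the current configuration supports.
    func applyPreset(preferring presets: [AVCaptureSession.Preset]) {
        session.beginConfiguration()
        if let preset = presets.first(where: { session.canSetSessionPreset($0) }) {
            session.sessionPreset = preset
        }
        session.commitConfiguration()
    }

    func start() {
        let session = self.session
        sessionQueue.async {
            if !session.isRunning { session.startRunning() }
        }
    }

    func stop() {
        let session = self.session
        sessionQueue.async {
            if session.isRunning { session.stopRunning() }
        }
    }
}
```

A view controller would call `applyPreset(preferring: [.hd1920x1080, .high])` after adding inputs and outputs, insert `previewLayer` into its view hierarchy, and call `start()`.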

Demo

```swift
import UIKit
import AVFoundation
import LEKit // author's in-house UI library (LEY*-prefixed helpers)

class AVFCameraViewController: LEYViewController {

    // Elapsed-time display
    private var timeLabel: LEYLabel!
    private var timer: Timer?
    private let timer_interval: Int = 1
    private var time_length: Int = 0

    private var captureSession: AVCaptureSession!
    private var captureMovieFileOutput: AVCaptureMovieFileOutput!
    private var videoUrl: URL?

    override func viewDidLoad() {
        super.viewDidLoad()
        self.naviView.title = "AVFoundation Video Capture"

        // Build the UI only after both camera and microphone access are granted
        // (RRXCAuthorizeManager is the author's permission helper, not an Apple API)
        RRXCAuthorizeManager.authorization(.camera) { (state) in
            guard state == .authorized else { return }
            RRXCAuthorizeManager.authorization(.microphone, completion: { (substate) in
                guard substate == .authorized else { return }
                // The callback queue is not guaranteed; configure and draw on the main queue
                DispatchQueue.main.async {
                    self.setCameraConfiguration()
                    self.drawUIDisplay()
                }
            })
        }
    }

    func drawUIDisplay() {
        self.naviView.forwardBar = LEYNaviView.Bar.forword(image: nil, title: "Start Recording", target: self, action: #selector(forwardBarAction))

        // Live camera preview filling the content view
        let previewLayer = AVCaptureVideoPreviewLayer(session: captureSession)
        previewLayer.videoGravity = .resizeAspectFill // or .resizeAspect
        previewLayer.frame = contentView.bounds
        contentView.layer.addSublayer(previewLayer)

        // Elapsed-time label overlaid on the preview
        let timeLabel = LEYLabel(frame: CGRect(x: 20, y: 20, width: 80, height: 30), text: "00:00:00", font: LEYFont(14), color: .red, alignment: .center)
        self.contentView.addSubview(timeLabel)
        timeLabel.backgroundColor = LEYHexColor(0x000000, 0.2)
        timeLabel.layer.cornerRadius = 6
        timeLabel.layer.masksToBounds = true
        self.timeLabel = timeLabel
    }

    func setCameraConfiguration() {
        // Create the capture session
        let captureSession = AVCaptureSession()
        self.captureSession = captureSession

        // Batch all configuration changes between begin/commitConfiguration
        captureSession.beginConfiguration()
        captureSession.sessionPreset = .high

        // Configure inputs: back wide-angle camera and default microphone
        // (AVCaptureDevice.devices() has been deprecated since iOS 10)
        let videoDevice = AVCaptureDevice.default(.builtInWideAngleCamera, for: .video, position: .back)
        let audioDevice = AVCaptureDevice.default(for: .audio)
        for device in [videoDevice, audioDevice].compactMap({ $0 }) {
            do {
                let input = try AVCaptureDeviceInput(device: device)
                if captureSession.canAddInput(input) {
                    captureSession.addInput(input)
                }
            } catch {
                print("Failed to create input for \(device.localizedName): \(error)")
            }
        }

        // Configure the output: record to a movie file
        let captureMovieFileOutput = AVCaptureMovieFileOutput()
        self.captureMovieFileOutput = captureMovieFileOutput
        if captureSession.canAddOutput(captureMovieFileOutput) {
            captureSession.addOutput(captureMovieFileOutput)
        }

        // Configure the video connection (it exists only once a video input and this output are in the session)
        if let captureConnection = captureMovieFileOutput.connection(with: .video) {
            // Orientation of the recorded video
            if captureConnection.isVideoOrientationSupported {
                captureConnection.videoOrientation = .portrait
            }
            // Video stabilization
            if captureConnection.isVideoStabilizationSupported {
                captureConnection.preferredVideoStabilizationMode = .auto
            }
        }

        captureSession.commitConfiguration()

        // startRunning() blocks until the session starts, so keep it off the main thread
        DispatchQueue.global(qos: .userInitiated).async {
            captureSession.startRunning()
        }
    }

    @objc func forwardBarAction() {
        // The bar button title doubles as the recording state
        let title = self.naviView.forwardBar?.titleLabel?.text ?? ""
        switch title {
        case "Start Recording":
            self.naviView.forwardBar?.updateSubviews(nil, "Stop Recording")
            startRecord()
        case "Stop Recording":
            self.naviView.forwardBar?.updateSubviews(nil, "Save")
            stopRecord()
        case "Save":
            save()
        default:
            break
        }
    }

    func startRecord() {
        time_length = 0
        // Tick once per interval and show the elapsed time as HH:MM:SS
        timer = Timer.scheduledTimer(withTimeInterval: TimeInterval(timer_interval), repeats: true) { [weak self] _ in
            guard let self = self else { return }
            self.time_length += self.timer_interval
            let second = self.time_length % 60
            let minute = (self.time_length / 60) % 60
            let hour = self.time_length / 3600
            self.timeLabel.text = String(format: "%02ld:%02ld:%02ld", hour, minute, second)
        }

        // Start recording into the app's Documents directory
        do {
            let documentsDir = try FileManager.default.url(for: .documentDirectory, in: .userDomainMask, appropriateFor: nil, create: true)
            // AVCaptureMovieFileOutput writes QuickTime movies, hence the .mov extension
            let fileURL = documentsDir.appendingPathComponent("leyCamera.mov")
            // Recording fails if a file already exists at the URL, so delete the previous take
            try? FileManager.default.removeItem(at: fileURL)
            self.videoUrl = fileURL
            captureMovieFileOutput.startRecording(to: fileURL, recordingDelegate: self)
        } catch {
            print("Couldn't locate the Documents directory, error: \(error)")
        }
    }

    func stopRecord() {
        timer?.invalidate()
        timer = nil
        // Stopping is asynchronous: the file is finished, and the preview shown,
        // in fileOutput(_:didFinishRecordingTo:from:error:)
        captureMovieFileOutput.stopRecording()
    }

    func save() {
        guard let url = videoUrl else { return }
        LEPHAsset.Manager.saveVideo(fileURL: url) { (state, asset) in
            print("save video success")
            DispatchQueue.main.async {
                self.navigationController?.popViewController(animated: true)
            }
        }
    }

    // Play back the recorded video over the camera preview
    func previewVideoAfterShoot() {
        guard let url = videoUrl else { return }

        let videoPreviewContainerView = UIView(frame: self.contentView.bounds)
        videoPreviewContainerView.backgroundColor = .black
        self.contentView.addSubview(videoPreviewContainerView)

        let player = AVPlayer(playerItem: AVPlayerItem(asset: AVURLAsset(url: url)))
        let playerLayer = AVPlayerLayer(player: player) // the layer keeps the player alive
        playerLayer.frame = videoPreviewContainerView.bounds
        playerLayer.videoGravity = .resizeAspectFill // or .resizeAspect
        videoPreviewContainerView.layer.addSublayer(playerLayer)
        player.play()
    }
}

extension AVFCameraViewController: AVCaptureFileOutputRecordingDelegate {

    // Called once the movie file has been completely written (or recording failed)
    func fileOutput(_ output: AVCaptureFileOutput, didFinishRecordingTo outputFileURL: URL, from connections: [AVCaptureConnection], error: Error?) {
        if let error = error {
            print("Recording finished with error: \(error)")
        }
        DispatchQueue.main.async {
            // The camera is no longer needed; stop it off the main thread
            let session = self.captureSession
            DispatchQueue.global(qos: .userInitiated).async {
                session?.stopRunning()
            }
            self.previewVideoAfterShoot()
        }
    }
}
```
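The demo relies on `RRXCAuthorizeManager`, a helper that is not part of AVFoundation. With AVFoundation alone, the same camera-then-microphone check can be sketched as below; `requestCaptureAccess(completion:)` is a hypothetical name. It assumes the app's Info.plist contains `NSCameraUsageDescription` and `NSMicrophoneUsageDescription`, without which iOS terminates the app when access is requested.

```swift
import AVFoundation

// Sketch: ask for camera, then microphone access.
// `completion` runs on the main queue with true only if both are granted.
func requestCaptureAccess(completion: @escaping (Bool) -> Void) {
    // Resolve one media type: use the stored status, or prompt if undetermined.
    func request(_ type: AVMediaType, then next: @escaping (Bool) -> Void) {
        switch AVCaptureDevice.authorizationStatus(for: type) {
        case .authorized:
            next(true)
        case .notDetermined:
            // Shows the system prompt; the handler runs on an arbitrary queue.
            AVCaptureDevice.requestAccess(for: type, completionHandler: next)
        default:
            // .denied or .restricted: only the user can change this in Settings.
            next(false)
        }
    }

    request(.video) { videoGranted in
        guard videoGranted else {
            DispatchQueue.main.async { completion(false) }
            return
        }
        request(.audio) { audioGranted in
            DispatchQueue.main.async { completion(audioGranted) }
        }
    }
}
```

In `viewDidLoad`, the two nested `RRXCAuthorizeManager` calls could then become one `requestCaptureAccess { granted in ... }` block that calls `setCameraConfiguration()` and `drawUIDisplay()` when `granted` is true.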

If you found this useful, please give it a like. Reposting is welcome; please include a link to the original article.