I. Installing Hadoop

1. Extract the archive to /usr/local

[root@localhost src]# tar -zxvf hadoop-2.7.3.tar.gz -C /usr/local/

2. Configure the environment variables

[root@localhost src]# vim /etc/profile

Add the following settings:

export HADOOP_HOME=/usr/local/hadoop-2.7.3

export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH

To make the environment variables take effect, run:

[root@localhost src]# source /etc/profile

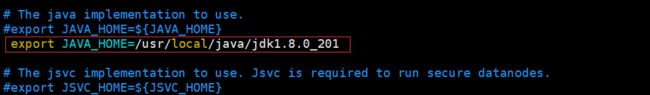
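Editing /etc/profile by hand works, but repeating the step can leave duplicate export lines. The following is a minimal sketch of an idempotent alternative; the helper name `append_once` is hypothetical, and the demo writes to a scratch file rather than to /etc/profile.

```shell
# Hypothetical helper: append a line to a file only if it is not already there.
append_once() {
  # $1 = line to add, $2 = target file
  grep -qxF -- "$1" "$2" 2>/dev/null || printf '%s\n' "$1" >> "$2"
}

# Demo on a scratch file; substitute /etc/profile (as root) for real use.
profile=$(mktemp)
for run in 1 2; do
  append_once 'export HADOOP_HOME=/usr/local/hadoop-2.7.3' "$profile"
  append_once 'export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH' "$profile"
done
cat "$profile"
```

Although the loop runs twice, each line should appear only once in the file. After `source /etc/profile`, running `hadoop version` is a quick way to confirm that the PATH change took effect.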

3. Edit hadoop-env.sh to set the JDK

[root@localhost hadoop]# vim /usr/local/hadoop-2.7.3/etc/hadoop/hadoop-env.sh

As shown in the figure:

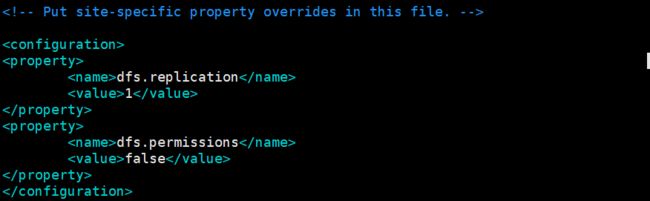
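The JDK setting exists only in the screenshot, so here is a rough sketch of the same change done from the shell. The helper name `set_java_home` is hypothetical, and the JDK path `/usr/java/jdk1.8.0` is a placeholder, not the path used in this article; replace it with the directory where your JDK is installed.

```shell
# Hypothetical helper: point the JAVA_HOME line of an env script at a JDK.
set_java_home() {
  # $1 = env script to edit, $2 = JDK installation directory
  if grep -q '^export JAVA_HOME=' "$1"; then
    sed "s|^export JAVA_HOME=.*|export JAVA_HOME=$2|" "$1" > "$1.new" && mv "$1.new" "$1"
  else
    printf 'export JAVA_HOME=%s\n' "$2" >> "$1"
  fi
}

# Demo on a scratch file; /usr/java/jdk1.8.0 is a placeholder path.
env_file=$(mktemp)
printf '%s\n' '# The java implementation to use.' 'export JAVA_HOME=${JAVA_HOME}' > "$env_file"
set_java_home "$env_file" /usr/java/jdk1.8.0
cat "$env_file"
```

For real use, the first argument would be /usr/local/hadoop-2.7.3/etc/hadoop/hadoop-env.sh; keeping a backup copy of the file first is a sensible precaution.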

4. Edit hdfs-site.xml to set the block replication factor and permission checking

[root@localhost hadoop]# vim /usr/local/hadoop-2.7.3/etc/hadoop/hdfs-site.xml

Add the following inside the <configuration> element:

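<property>
  <name>dfs.replication</name>
  <value>1</value>
</property>
<property>
  <name>dfs.permissions</name>
  <value>false</value>
</property>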

As shown in the figure:

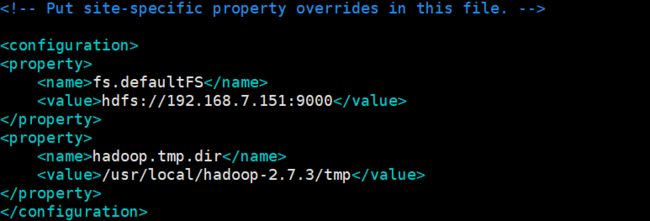

5. Edit core-site.xml to set the NameNode IP address and port, and the data storage directory

[root@localhost hadoop]# vim /usr/local/hadoop-2.7.3/etc/hadoop/core-site.xml

Add the following inside the <configuration> element:

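<property>
  <name>fs.defaultFS</name>
  <value>hdfs://192.168.7.151:9000</value>
</property>
<property>
  <name>hadoop.tmp.dir</name>
  <value>/usr/local/hadoop-2.7.3/tmp</value>
</property>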

As shown in the figure:

Create the data storage directory:

[root@localhost hadoop]# mkdir /usr/local/hadoop-2.7.3/tmp

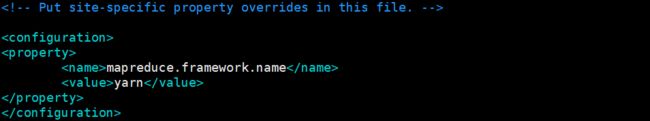

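6. Edit mapred-site.xml to run MapReduce on YARN

[root@localhost hadoop]# cp /usr/local/hadoop-2.7.3/etc/hadoop/mapred-site.xml.template /usr/local/hadoop-2.7.3/etc/hadoop/mapred-site.xml

[root@localhost hadoop]# vim /usr/local/hadoop-2.7.3/etc/hadoop/mapred-site.xml

Add the following inside the <configuration> element: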

<property>
  <name>mapreduce.framework.name</name>
  <value>yarn</value>
</property>

As shown in the figure:

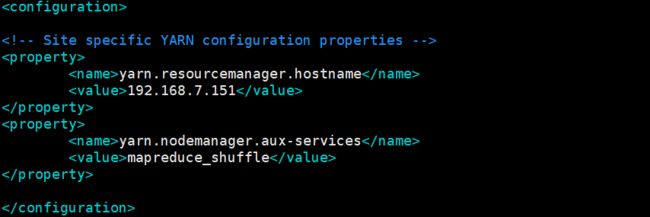

7. Edit yarn-site.xml to set the ResourceManager host and the NodeManager auxiliary service

[root@localhost hadoop]# vim /usr/local/hadoop-2.7.3/etc/hadoop/yarn-site.xml

Add the following inside the <configuration> element:

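<property>
  <name>yarn.resourcemanager.hostname</name>
  <value>192.168.7.151</value>
</property>
<property>
  <name>yarn.nodemanager.aux-services</name>
  <value>mapreduce_shuffle</value>
</property>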

As shown in the figure:

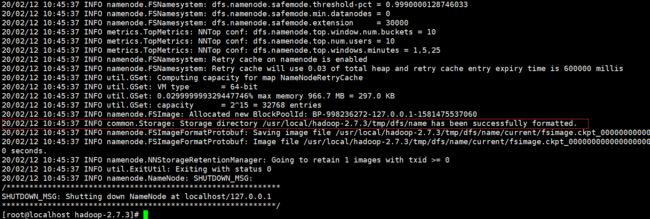
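Steps 4 to 7 all add name/value pairs to a site file in the same XML shape, so the four files could also be generated by a script. The following is a minimal sketch under stated assumptions: the helper name `write_site_xml` is hypothetical, it overwrites the target file instead of merging with existing content, and it does not escape XML special characters in values. The demo writes into a scratch directory.

```shell
# Hypothetical helper: write a Hadoop *-site.xml file from name/value pairs.
# Usage: write_site_xml FILE NAME VALUE [NAME VALUE ...]
write_site_xml() {
  out=$1
  shift
  {
    echo '<?xml version="1.0"?>'
    echo '<configuration>'
    while [ "$#" -ge 2 ]; do
      printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
      shift 2
    done
    echo '</configuration>'
  } > "$out"
}

# Demo in a scratch directory; use /usr/local/hadoop-2.7.3/etc/hadoop
# only after backing up the original files.
conf_dir=$(mktemp -d)
write_site_xml "$conf_dir/hdfs-site.xml" dfs.replication 1 dfs.permissions false
write_site_xml "$conf_dir/core-site.xml" fs.defaultFS hdfs://192.168.7.151:9000 hadoop.tmp.dir /usr/local/hadoop-2.7.3/tmp
write_site_xml "$conf_dir/mapred-site.xml" mapreduce.framework.name yarn
write_site_xml "$conf_dir/yarn-site.xml" yarn.resourcemanager.hostname 192.168.7.151 yarn.nodemanager.aux-services mapreduce_shuffle
cat "$conf_dir/core-site.xml"
```

The IP address 192.168.7.151 is the one used throughout this article; on another machine it would need to match that host's own address.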

8. Format the NameNode so that it generates the metadata it needs

[root@localhost hadoop-2.7.3]# hdfs namenode -format

On success, the directory dfs has been created under /usr/local/hadoop-2.7.3/tmp, as shown in the figure:

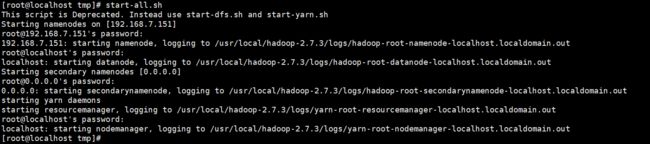
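Formatting is meant to be done once; formatting an existing NameNode again discards its metadata. A small guard can check for the result of a previous format first. This is a sketch that assumes the default name directory layout (dfs/name under hadoop.tmp.dir, since this article does not override it); the helper name `namenode_formatted` is hypothetical, and the demo uses a scratch directory.

```shell
# Hypothetical helper: succeed if a NameNode has already been formatted under
# the given hadoop.tmp.dir (relies on the default name directory dfs/name).
namenode_formatted() {
  [ -f "$1/dfs/name/current/VERSION" ]
}

# Demo on a scratch directory that mimics the layout left by a format.
tmp_dir=$(mktemp -d)
namenode_formatted "$tmp_dir" && echo "formatted" || echo "not formatted yet"
mkdir -p "$tmp_dir/dfs/name/current" && : > "$tmp_dir/dfs/name/current/VERSION"
namenode_formatted "$tmp_dir" && echo "formatted" || echo "not formatted yet"
```

On the real machine, the guard could be combined with the command from this step as `namenode_formatted /usr/local/hadoop-2.7.3/tmp || hdfs namenode -format`.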

9. Start Hadoop

$HADOOP_HOME/sbin is already on the PATH, so start-all.sh can be run directly:

[root@localhost hadoop-2.7.3]# start-all.sh

A successful start looks like the figure below:

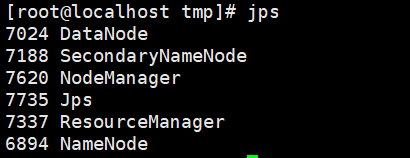

DataNode, NodeManager, ResourceManager, SecondaryNameNode, and NameNode are now running, as shown in the figure:
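The five daemons can also be checked from a script by reading the process list that the JDK's `jps` tool prints. The sketch below assumes the usual `jps` line format of a PID followed by the main class name; the helper name `missing_daemons` is hypothetical, and the sample input in the demo is made up for illustration.

```shell
# Hypothetical helper: read `jps` output on stdin and print every expected
# daemon that is missing; the exit status is 0 only when all five are present.
missing_daemons() {
  jps_output=$(cat)
  rc=0
  for daemon in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # Compare the whole second field so NameNode does not match SecondaryNameNode.
    if ! printf '%s\n' "$jps_output" | awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
      echo "missing: $daemon"
      rc=1
    fi
  done
  return "$rc"
}

# Demo with made-up sample output (the PIDs are illustrative only).
printf '%s\n' '2101 NameNode' '2230 DataNode' '2817 Jps' | missing_daemons || echo "cluster not fully started"
```

On the real machine, the check would be run as `jps | missing_daemons` after start-all.sh has finished.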