网址:http://www.beijing.gov.cn/hudong/hdjl/com.web.search.mailList.flow

Python爬虫代码:

import requests

import re

import xlwt

# #https://flightaware.com/live/flight/CCA101/history/80

url = 'http://www.beijing.gov.cn/hudong/hdjl/com.web.consult.consultDetail.flow?originalId=AH20021300174'

headers = {

"user-agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/77.0.3865.90 Safari/537.36"

}

def get_page(url):

try:

response = requests.get(url, headers=headers)

if response.status_code == 200:

print('获取网页成功')

return response.text

else:

print('获取网页失败')

except Exception as e:

print(e)

f = xlwt.Workbook(encoding='utf-8')

sheet01 = f.add_sheet(u'sheet1', cell_overwrite_ok=True)

sheet01.write(0, 0, '序号') # 第一行第一列

sheet01.write(0, 1, '问') # 第一行第二列

sheet01.write(0, 2, '来信人') # 第一行第三列

sheet01.write(0, 3, '时间') # 第一行第四列

sheet01.write(0, 4, '网友同问') # 第一行第五列

sheet01.write(0, 5, '问题内容') # 第一行第六列

sheet01.write(0, 6, '答') # 第一行第七列

sheet01.write(0, 7, '答复时间') # 第一行第八列

sheet01.write(0, 8, '答复内容') # 第一行第九列

fopen = open('C:\\Users\\hp\\Desktop\\list.txt', 'r')

lines = fopen.readlines()

temp=0

temp1=0

urls = ['http://www.beijing.gov.cn/hudong/hdjl/com.web.consult.consultDetail.flow?originalId={}'.format(line) for line in lines]

for url in urls:

print(url.replace("\n", ""))

page = get_page(url.replace("\n", ""))

items = re.findall('(.*?).*?(.*?).*?(.*?).*?(.*?)(.*?).*? (.*?).*?.*?(.*?).*?(.*?).*?(.*?)',page,re.S)

print(items)

print(len(items))

for i in range(len(items)):

sheet01.write(temp + i + 1, 0,temp+1)

sheet01.write(temp + i + 1, 1,items[i][0].replace( ' ','').replace(' ',''))

sheet01.write(temp + i + 1, 2, items[i][1].replace('来信人:\r\n\t \t\r\n \t\r\n\t \t','').replace( '\r\n \t\r\n ',''))

sheet01.write(temp + i + 1, 3, items[i][2].replace('时间:',''))

sheet01.write(temp + i + 1, 4, items[i][4].replace('\r\n\t\t \t\r\n\t \t','').replace('\r\n\t \r\n\t \r\n ',''))

sheet01.write(temp + i + 1, 5,items[i][5].replace('\r\n\t \t', '').replace('\r\n\t ', '').replace('','').replace(' ','').replace(' ','').replace(' ','').replace(' ',''))

sheet01.write(temp + i + 1, 6,items[i][6].replace('', ''))

sheet01.write(temp + i + 1, 7,items[i][7])

sheet01.write(temp + i + 1, 8, items[i][8].replace('\r\n\t\t\t\t\t\t\t\t','').replace(' ','').replace('\r\n\t\t\t\t\t\t\t','').replace('

','').replace(' ','').replace(' ','').replace(' ',''))

temp+=len(items)

temp1+=1

print("总爬取完毕数量:"+str(temp1))

print("打印完!!!")

f.save('letter.xls')

C:\\Users\\hp\\Desktop\\list.txt':这个文件是所有信件内容网址的后缀:

如果需要请加Q:893225523

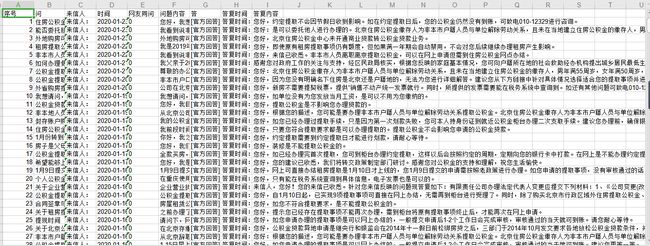

将爬虫结果主要分为:序号、问、时间、网友同问、问题内容、答、答复时间、答复内容

存储到Execl:

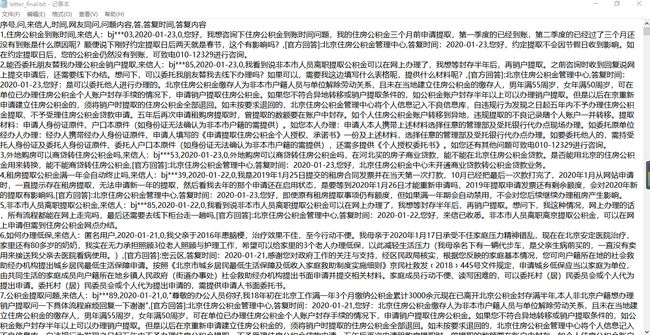

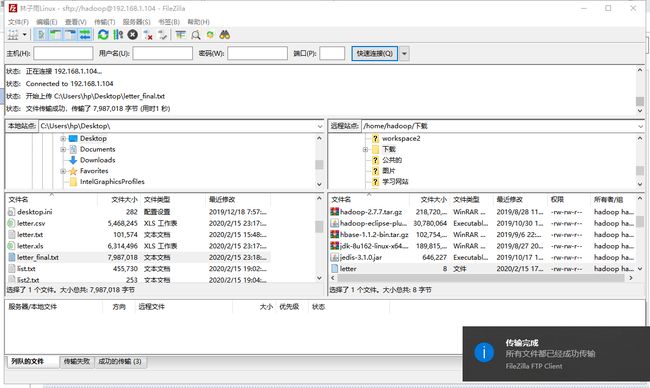

然后为了方便清洗数据,首先让execl表格转换为csv(自动以逗号间隔),然后用记事本打开,复制粘贴到txt文件中,通过FileZilla上传到虚拟机里。

特别注意,一定要在execl表里面选中所有单元格,将所有内容的英文字段改为中文字段,以防止阻碍数据清洗。

这是最终的txt文件:

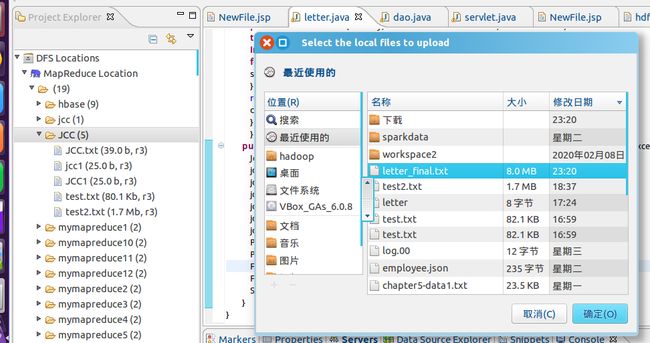

打开虚拟机:将文件上传到mapreduce,开始进行数据清洗。

package letter_count;

import java.io.IOException;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.Mapper.Context;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import test4.quchong;

import test4.quchong.doMapper;

import test4.quchong.doReducer;

public class letter {

public static class doMapper extends Mapper{

public static final IntWritable one = new IntWritable(1);

public static Text word = new Text();

@Override

protected void map(Object key, Text value, Context context)

throws IOException,InterruptedException {

//StringTokenizer tokenizer = new StringTokenizer(value.toString()," ");

String[] strNlist = value.toString().split(",");

// String str=strNlist[3].trim();

String str2=strNlist[3].substring(0,4);

System.out.println(str2);

// Integer temp= Integer.valueOf(str);

word.set(str2);

//IntWritable abc = new IntWritable(temp);

context.write(word,one);

}

}

public static class doReducer extends Reducer{

private IntWritable result = new IntWritable();

@Override

protected void reduce(Text key,Iterable values,Context context)

throws IOException,InterruptedException{

int sum = 0;

for (IntWritable value : values){

sum += value.get();

}

result.set(sum);

context.write(key,result);

}

}

public static void main(String[] args) throws IOException,ClassNotFoundException,InterruptedException {

Job job = Job.getInstance();

job.setJobName("letter");

job.setJarByClass(quchong.class);

job.setMapperClass(doMapper.class);

job.setReducerClass(doReducer.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);

Path in = new

Path("hdfs://localhost:9000/JCC/letter_final.txt");

Path out = new Path("hdfs://localhost:9000/JCC/out1");

FileInputFormat.addInputPath(job,in);

FileOutputFormat.setOutputPath(job,out);

System.exit(job.waitForCompletion(true) ? 0 : 1);

}

}

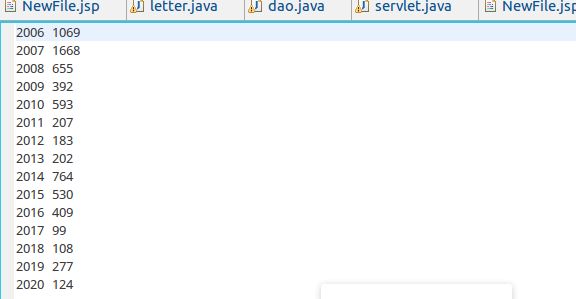

清洗完的数据:

然后打开虚拟机数据库:

输入建表语句:

create table letter

-> (

-> year varchar(255),

-> num int(11));

txt 导入mysql,首先将准备导入的文件放到mysql_files目录下,(/var/lib/mysql-files)然后确保自己的local_infile是“ON”状态。

SHOW VARIABLES LIKE '%local%';(set global local_infile='ON';)可以开启。最后导入LOAD DATA INFILE '/var/lib/mysql-files/part-r-00000' INTO TABLE stage1 fields terminated by ',';

(show variables like '%secure%';)来查看自己mysql-files的路径。

将letter_final文件复制到/var/lib/mysql-files目录下:

格式为:(以逗号间隔,方便存入mysql)

输入代码:

LOAD DATA INFILE '/var/lib/mysql-files/letter_final' INTO TABLE letter fields terminated by ',';

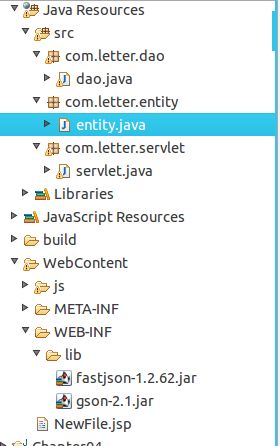

然后我们创建web,开始进行可视化。

图表的html代码:

<%@ page language="java" contentType="text/html; charset=UTF-8"

pageEncoding="UTF-8"%>

Insert title here

dao层:

package com.letter.dao;

import java.sql.Connection;

import java.sql.DriverManager;

import java.sql.ResultSet;

import java.sql.Statement;

import java.util.ArrayList;

import java.util.List;

import com.letter.entity.entity;;

public class dao {

public List list1()

{

int sum=0;

List list =new ArrayList();

try {

// 加载数据库驱动,注册到驱动管理器

Class.forName("com.mysql.jdbc.Driver");

// 数据库连接字符串

String url = "jdbc:mysql://localhost:3306/mysql?useUnicode=true&characterEncoding=utf-8";

// 数据库用户名

String username = "root";

// 数据库密码

String password = "";

// 创建Connection连接

Connection conn = DriverManager.getConnection(url, username,

password);

// 添加图书信息的SQL语句

String sql = "select * from letter";

// 获取Statement

Statement statement = conn.createStatement();

ResultSet resultSet = statement.executeQuery(sql);

while (resultSet.next()) {

entity book = new entity();

//sum=sum+resultSet.getInt("round");

book.setYear(resultSet.getString("year"));

book.setNum(resultSet.getInt("Num"));

list.add(book);

}

resultSet.close();

statement.close();

conn.close();

}catch (Exception e) {

e.printStackTrace();

}

return list;

}

}

servlet代码:

package com.letter.servlet;

import java.io.IOException;

import java.util.ArrayList;

import java.util.List;

import javax.servlet.ServletException;

import javax.servlet.annotation.WebServlet;

import javax.servlet.http.HttpServlet;

import javax.servlet.http.HttpServletRequest;

import javax.servlet.http.HttpServletResponse;

import javax.servlet.http.HttpSession;

import com.google.gson.Gson;

import com.letter.entity.entity;

import com.letter.dao.*;

/**

* Servlet implementation class servlet

*/

@WebServlet("/servlet")

public class servlet extends HttpServlet {

private static final long serialVersionUID = 1L;

/**

* @see HttpServlet#HttpServlet()

*/

public servlet() {

super();

// TODO Auto-generated constructor stub

}

dao dao1=new dao();

/**

* @see HttpServlet#doGet(HttpServletRequest request, HttpServletResponse response)

*/

protected void service(HttpServletRequest request, HttpServletResponse response) throws ServletException, IOException {

request.setCharacterEncoding("utf-8");

String method=request.getParameter("method");

if("view".equals(method))

{

view(request, response);

}else if("find2".equals(method))

{

find2(request, response);

}

}

private void view(HttpServletRequest request, HttpServletResponse response) throws IOException, ServletException {

request.setCharacterEncoding("utf-8");

String buy_nbr=request.getParameter("buy_nbr");

HttpSession session=request.getSession();

session.setAttribute("userInfo", buy_nbr);

request.getRequestDispatcher("left.jsp").forward(request, response);

}

private void find2(HttpServletRequest request, HttpServletResponse response) throws IOException, ServletException {

request.setCharacterEncoding("utf-8");

List list =new ArrayList();

HttpSession session=request.getSession();

String buy_nbr=(String) session.getAttribute("userInfo");

entity book = new entity();

List list2=dao1.list1();

System.out.println(list2.size());

// String buy_nbr=(String) session.getAttribute("userInfo");

// System.out.println(buy_nbr);

Gson gson2 = new Gson();

String json = gson2.toJson(list2);

// System.out.println(json);

// System.out.println(json.parse);

response.setContentType("textml; charset=utf-8");

response.getWriter().write(json);

}

}

架构:

运行结果:

那么就完整的展现出来了从网站分析爬取----》数据清洗----》数据可视化。