Cloudera Hue Installation Guide

Hue official website

GitHub repository

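Hue is an open-source UI system for Apache Hadoop. It evolved from Cloudera Desktop, was contributed to the open-source community by Cloudera, and is built on Django, the Python web framework. With Hue you can interact with a Hadoop cluster from a web console in the browser to analyze and process data, for example by working with data on HDFS, running MapReduce jobs, and working with Hive.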

1. Download

wget http://archive.cloudera.com/cdh5/cdh/5/hue-3.9.0-cdh5.7.0.tar.gz

2. Extract

tar -zxvf hue-3.9.0-cdh5.7.0.tar.gz -C ../app/
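The extraction command assumes that the target directory already exists, because tar -C does not create it. A minimal sketch of a helper that handles this follows; the name extract_release is hypothetical and not part of Hue, and the helper assumes the archive unpacks into a directory named after the tarball.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Hue): extract a .tar.gz release into a
# target directory, creating the directory first because tar -C requires it.
extract_release() {
  local tarball="$1" target="$2"
  mkdir -p "$target" || return 1
  tar -zxf "$tarball" -C "$target" || return 1
  # Assumption: the archive unpacks into a directory named after the tarball.
  printf '%s/%s\n' "${target%/}" "$(basename "$tarball" .tar.gz)"
}
```

With the file names used in this guide, it could be called as extract_release hue-3.9.0-cdh5.7.0.tar.gz ../app, which prints the path of the extracted directory on success.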

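3. Install Dependencies and Build

Install the dependencies (note that mvn is the Maven executable rather than a yum package name, so Maven may need to be installed separately):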

yum install ant asciidoc cyrus-sasl-devel cyrus-sasl-gssapi gcc gcc-c++ krb5-devel libtidy libxml2-devel libxslt-devel make mvn mysql mysql-devel openldap-devel python-devel sqlite-devel openssl-devel gmp-devel
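Before building, it can help to confirm that the build tools are actually on the PATH. The sketch below uses a hypothetical helper, missing_tools, that prints every command it cannot find; the tool names in the usage line are an assumption derived from the package list above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print each command name that is not found on PATH.
missing_tools() {
  local tool
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

# Usage (tool names assumed from the package list):
#   missing_tools ant gcc g++ make mvn
```

An empty result means every listed tool was found; any name that is printed still needs to be installed before running make apps.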

Build:

cd ../app/hue-3.9.0-cdh5.7.0/

make apps

A successful build ends with output like the following:

1190 static files copied to '/home/hadoop/soul/app/hue-3.9.0-cdh5.7.0/build/static', 1190 post-processed.

make[1]: Leaving directory `/home/hadoop/soul/app/hue-3.9.0-cdh5.7.0/apps'

4. Edit the Hue Configuration File

vim ../app/hue-3.9.0-cdh5.7.0/desktop/conf/hue.ini

[desktop]

# Set this to a random string, the longer the better.

# This is used for secure hashing in the session store.

# Change 1

secret_key=jFE93j;2maomaosi22943d['d;/.q[eIW^y#e=+Iei*@

# Execute this script to produce the Django secret key. This will be used when

# `secret_key` is not set.

## secret_key_script=

# Webserver listens on this address and port

# Change 2

http_host=hadoop000

http_port=9999

# Time zone name

# Change 3

time_zone=Asia/Shanghai

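Set secret_key to any random string of 30 to 60 characters; if it is left empty, Hue reports an error. The key is a security measure: it is used for secure hashing in the session store. Set time_zone to your own time zone, which is Asia/Shanghai in this example.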
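Rather than typing a key by hand, a random one can be generated. The following is a minimal sketch, assuming /dev/urandom is available; the function name generate_secret_key and the default length of 50 are illustrative choices, not something Hue prescribes.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a random alphanumeric string (default 50 chars)
# that can be pasted into hue.ini as the secret_key value.
generate_secret_key() {
  local length="${1:-50}"
  # Read plenty of random bytes, keep only letters and digits,
  # then cut the result down to the requested length.
  head -c 8192 /dev/urandom | LC_ALL=C tr -dc 'A-Za-z0-9' | head -c "$length"
}

# Usage:
#   echo "secret_key=$(generate_secret_key 50)"
```

Restricting the key to letters and digits avoids characters that could be misread when the value is pasted into an ini file.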

Add Hue to your environment variables, then run supervisor to start it:

[hadoop@hadoop000 conf]$ supervisor

[INFO] Not running as root, skipping privilege drop

starting server with options:

{'daemonize': False,

'host': 'hadoop000',

'pidfile': None,

'port': 9999,

'server_group': 'hue',

'server_name': 'localhost',

'server_user': 'hue',

'ssl_certificate': None,

'ssl_certificate_chain': None,

'ssl_cipher_list': 'ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES256-GCM-SHA384:DHE-RSA-AES128-GCM-SHA256:DHE-DSS-AES128-GCM-SHA256:kEDH+AESGCM:ECDHE-RSA-AES128-SHA256:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA:ECDHE-ECDSA-AES128-SHA:ECDHE-RSA-AES256-SHA384:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA:ECDHE-ECDSA-AES256-SHA:DHE-RSA-AES128-SHA256:DHE-RSA-AES128-SHA:DHE-DSS-AES128-SHA256:DHE-RSA-AES256-SHA256:DHE-DSS-AES256-SHA:DHE-RSA-AES256-SHA:AES128-GCM-SHA256:AES256-GCM-SHA384:AES128-SHA256:AES256-SHA256:AES128-SHA:AES256-SHA:AES:CAMELLIA:DES-CBC3-SHA:!aNULL:!eNULL:!EXPORT:!DES:!RC4:!MD5:!PSK:!aECDH:!EDH-DSS-DES-CBC3-SHA:!EDH-RSA-DES-CBC3-SHA:!KRB5-DES-CBC3-SHA',

'ssl_private_key': None,

'threads': 40,

'workdir': None}
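Adding Hue to the environment, as mentioned before the startup output above, could look like the following sketch. It assumes the build directory shown in the build output earlier in this guide and relies on the build placing the supervisor launcher under build/env/bin; adjust HUE_HOME to your own path.

```shell
# Assumption: Hue was built in this directory (taken from the build output).
export HUE_HOME=/home/hadoop/soul/app/hue-3.9.0-cdh5.7.0
# The build places the hue and supervisor launchers under build/env/bin.
export PATH="$HUE_HOME/build/env/bin:$PATH"
```

Placing these two lines in the shell profile of the user that runs Hue (for example ~/.bash_profile) makes the supervisor command available in new sessions.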

5. Configure HDFS Access

$HADOOP_HOME/etc/hadoop/

hdfs-site.xml

dfs.webhdfs.enabled = true

core-site.xml

hadoop.proxyuser.hue.hosts = *

hadoop.proxyuser.hue.groups = *

httpfs-site.xml

httpfs.proxyuser.hue.hosts = *

httpfs.proxyuser.hue.groups = *
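The property names and values listed above go into the Hadoop XML files as property elements inside the configuration root element. As a sketch, the hypothetical helper hadoop_property below prints one such element so it can be pasted into the matching file; it does not escape XML special characters, which is sufficient for the values used here.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a Hadoop <property> element for a name/value
# pair. Values are written as-is (no XML escaping).
hadoop_property() {
  printf '<property>\n  <name>%s</name>\n  <value>%s</value>\n</property>\n' "$1" "$2"
}

# Elements for core-site.xml; quote the asterisk so the shell does not expand it.
hadoop_property hadoop.proxyuser.hue.hosts '*'
hadoop_property hadoop.proxyuser.hue.groups '*'
```

After editing the files and restarting HDFS, a command such as hdfs getconf -confKey dfs.webhdfs.enabled can be used to confirm that a value was picked up.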

hue.ini settings ($HUE_HOME/desktop/conf/hue.ini):

[hadoop]

# Configuration for HDFS NameNode

# ------------------------------------------------------------------------

[[hdfs_clusters]]

# HA support by using HttpFs

[[[default]]]

# Enter the filesystem uri

fs_defaultfs=hdfs://hadoop000:8020

# NameNode logical name.

## logical_name=

# Use WebHdfs/HttpFs as the communication mechanism.

# Domain should be the NameNode or HttpFs host.

# Default port is 14000 for HttpFs.

### Remove the leading comment marker from the line below

webhdfs_url=http://localhost:50070/webhdfs/v1
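Hue talks to HDFS through the WebHDFS REST endpoint configured as webhdfs_url, so that endpoint can be checked directly before starting Hue. The sketch below builds a request URL using the standard WebHDFS op and user.name query parameters; the helper name webhdfs_request_url is hypothetical, and the user hadoop is assumed from the shell prompt used in this guide.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build a WebHDFS REST URL from the base URL configured
# in hue.ini, an HDFS path, an operation name, and a user name.
webhdfs_request_url() {
  local base="$1" path="$2" op="$3" user="$4"
  printf '%s%s?op=%s&user.name=%s\n' "${base%/}" "$path" "$op" "$user"
}

# Usage (requires a running NameNode with WebHDFS enabled):
#   curl -s "$(webhdfs_request_url http://localhost:50070/webhdfs/v1 / LISTSTATUS hadoop)"
```

A JSON listing of the HDFS root directory in the response indicates that WebHDFS is reachable at the configured address.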

6. Configure Hive Access

$HIVE_HOME/conf

hive-site.xml

hive.server2.thrift.port = 10001

hive.server2.thrift.bind.host = hadoop000

hive.server2.long.polling.timeout = 5000

hive.server2.authentication = NONE

hue.ini settings (in the [beeswax] section):

# Host where HiveServer2 is running.

# If Kerberos security is enabled, use fully-qualified domain name (FQDN).

hive_server_host=hadoop000

# Port where HiveServer2 Thrift server runs on.

hive_server_port=10001

# Hive configuration directory, where hive-site.xml is located

hive_conf_dir=$HIVE_HOME/conf

# Timeout in seconds for thrift calls to Hive service

## server_conn_timeout=120
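The hive_server_host and hive_server_port values in hue.ini have to match hive.server2.thrift.bind.host and hive.server2.thrift.port in hive-site.xml. A small sketch for reading the active value of a key from hue.ini follows; the helper name ini_value is hypothetical, and it assumes simple key=value lines as in the excerpt above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the value of the first uncommented "key=value"
# line for the given key in an ini-style file such as hue.ini.
ini_value() {
  local file="$1" key="$2"
  sed -n "s/^[[:space:]]*${key}[[:space:]]*=[[:space:]]*//p" "$file" | head -n 1
}

# Usage:
#   ini_value "$HUE_HOME/desktop/conf/hue.ini" hive_server_port
```

Lines that are still commented out with # are ignored, so an empty result usually means the setting has not been enabled yet.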

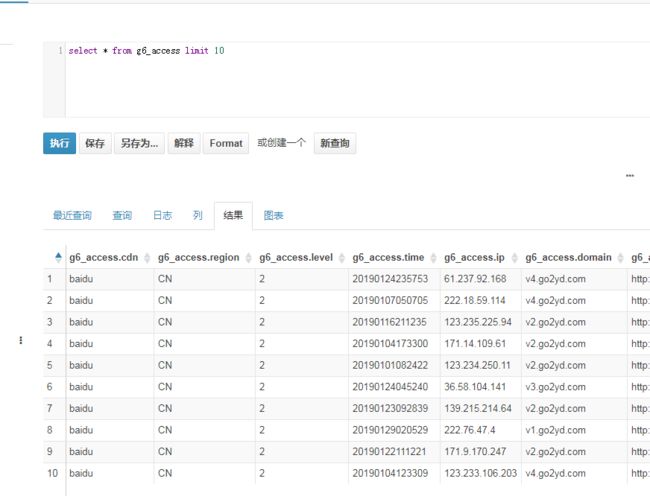

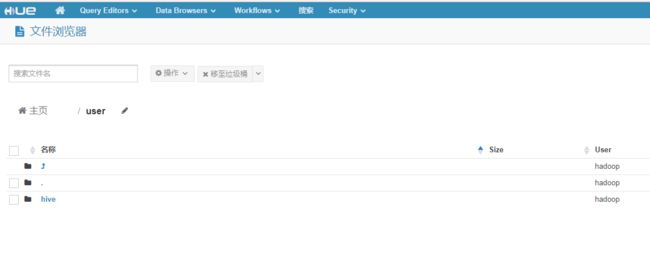

7. Test Access

(1) Start HDFS

(2) Start HiveServer2

nohup hiveserver2 &

Check that HiveServer2 is listening on the port set in hive.server2.thrift.port:

netstat -anp | grep 10001

Connect with beeline to confirm that the service accepts connections:

beeline -u jdbc:hive2://hadoop000:10001 -n hadoop
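HiveServer2 can take a while to open its port after it is started in the background, so the checks above may fail if they run too early. The following is a rough sketch of a wait loop, assuming bash with /dev/tcp support; the helper names port_open and wait_for_port are hypothetical, and the port in the usage line is the hive.server2.thrift.port value configured in this guide.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if a TCP connection to host:port can be
# opened. Uses bash's /dev/tcp pseudo-device inside a subshell.
port_open() {
  (exec 3<>"/dev/tcp/$1/$2") 2>/dev/null
}

# Hypothetical helper: retry once per second until the port opens or the
# number of attempts (default 30) is used up.
wait_for_port() {
  local host="$1" port="$2" tries="${3:-30}"
  while [ "$tries" -gt 0 ]; do
    port_open "$host" "$port" && return 0
    tries=$((tries - 1))
    sleep 1
  done
  return 1
}

# Usage:
#   wait_for_port hadoop000 10001 60 && echo "HiveServer2 is accepting connections"
```

The loop only confirms that something is listening on the port; the beeline connection remains the real functional check.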

(3) Start Hue

[hadoop@hadoop000 conf]$ supervisor