Interesting things

This post picks up where the previous one left off.

What did you do today

With RHEL 7 / CentOS 7, firewalld was introduced to manage iptables. IMHO, firewalld is more suited for workstations than for server environments.

It is possible to go back to a more classic iptables setup. First, stop and mask the firewalld service.

- In the previous post we found that there was no iptables file under /etc/sysconfig/. After running iptables -P INPUT ACCEPT, we tried to write the firewall policy with service iptables save, which failed with "The service command supports only basic LSB actions (start, stop, restart, try-restart, reload, force-reload, status). For other actions, please try to use systemctl." Checking the firewall state with service iptables status also reported "Unit iptables.service could not be found".

- It turns out that CentOS 7 uses firewalld by default to manage iptables. To go back to a plain iptables setup, first stop firewalld with systemctl stop firewalld, then mask it with systemctl mask firewalld. Masking creates a symbolic link from /etc/systemd/system/firewalld.service to /dev/null, which prevents the service from being started.

- Install iptables-services: yum install iptables-services

- Enable iptables at boot: systemctl enable iptables

-

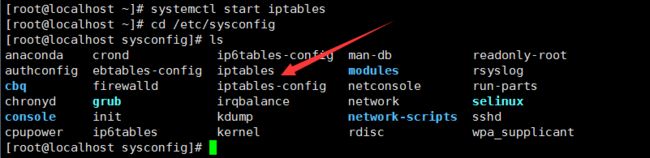

启动iptables, systemctl start iptables,然后我们进入/etc/sysconfig/,发现iptables文件出现了

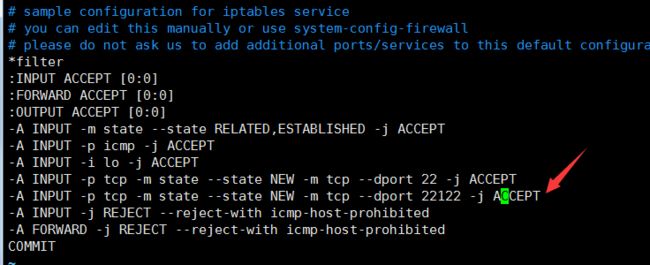

Open /etc/sysconfig/iptables and add a rule that allows access to port 22122. Copying the existing port rule shown above and changing the port number is enough.

-A INPUT -p tcp -m state --state NEW -m tcp --dport 22122 -j ACCEPT

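- Apply the policy. Because the rule was added by editing /etc/sysconfig/iptables directly, restarting the firewall is what loads it. Either form works:

systemctl restart iptables

service iptables restart

Note that service iptables save goes the other way: it writes the currently loaded rules back to /etc/sysconfig/iptables. Run it only after the restart, since running it first would overwrite the manual edit.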

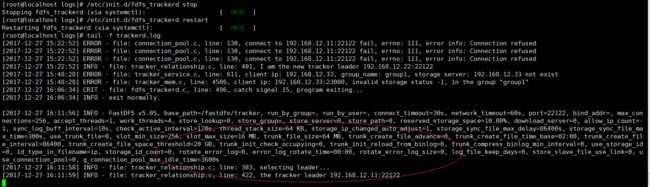
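The firewall steps above can be collected into one script. This is only a sketch under a few assumptions: `run` and `DRY_RUN` are illustrative helpers that are not part of any tool, the script defaults to printing the commands instead of executing them, and the port is opened with a live `iptables -I` followed by `service iptables save` rather than by editing /etc/sysconfig/iptables by hand as the post does.

```shell
#!/bin/sh
# Sketch of the firewalld -> iptables switch described above.
# DRY_RUN and run() are illustrative helpers, not part of any tool.
DRY_RUN=${DRY_RUN:-1}   # default: only print the commands

run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

switch_to_iptables() {
    run systemctl stop firewalld
    run systemctl mask firewalld
    run yum install -y iptables-services
    run systemctl enable iptables
    run systemctl start iptables
}

# open_tcp_port PORT: insert an ACCEPT rule at the top of INPUT, then persist it.
open_tcp_port() {
    run iptables -I INPUT -p tcp -m state --state NEW -m tcp --dport "$1" -j ACCEPT
    run service iptables save
}

switch_to_iptables
open_tcp_port 22122
```

Running it as root with DRY_RUN=0 would execute the commands for real. Inserting the rule with -I places it ahead of any existing REJECT rule, which is why the sketch does not append with -A on the live table.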

- Start the tracker with /etc/init.d/fdfs_trackerd start, then check that it is running with ps -ef|grep fdfs.

- Recall that when configuring tracker.conf we changed base_path to /fastdfs/tracker. That directory now contains two new folders, data and logs.

The logs folder contains trackerd.log:

[2017-12-27 10:38:41] INFO - FastDFS v5.05, base_path=/fastdfs/tracker, run_by_group=, run_by_user=, connect_timeout=30s, network_timeout=60s, port=22122, bind_addr=, max_connections=256, accept_threads=1, work_threads=4, store_lookup=0, store_group=, store_server=0, store_path=0, reserved_storage_space=10.00%, download_server=0, allow_ip_count=-1, sync_log_buff_interval=10s, check_active_interval=120s, thread_stack_size=64 KB, storage_ip_changed_auto_adjust=1, storage_sync_file_max_delay=86400s, storage_sync_file_max_time=300s, use_trunk_file=0, slot_min_size=256, slot_max_size=16 MB, trunk_file_size=64 MB, trunk_create_file_advance=0, trunk_create_file_time_base=02:00, trunk_create_file_interval=86400, trunk_create_file_space_threshold=20 GB, trunk_init_check_occupying=0, trunk_init_reload_from_binlog=0, trunk_compress_binlog_min_interval=0, use_storage_id=0, id_type_in_filename=ip, storage_id_count=0, rotate_error_log=0, error_log_rotate_time=00:00, rotate_error_log_size=0, log_file_keep_days=0, store_slave_file_use_link=0, use_connection_pool=0, g_connection_pool_max_idle_time=3600s

The data folder contains fdfs_trackerd.pid (which holds the process ID, 3185 here) and storage_changelog.dat (empty).
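Since the tracker writes its PID to fdfs_trackerd.pid, that file offers another way to check that the daemon is alive besides ps. A minimal sketch, assuming the base_path used in this post; `is_running` is a hypothetical helper name, not a FastDFS command.

```shell
#!/bin/sh
# is_running PIDFILE: succeed if the PID stored in PIDFILE belongs to a live process.
# Hypothetical helper for illustration; not part of FastDFS.
is_running() {
    [ -r "$1" ] || return 1
    pid=$(cat "$1")
    # kill -0 sends no signal; it only checks that the process exists
    # and that we are allowed to signal it.
    [ -n "$pid" ] && kill -0 "$pid" 2>/dev/null
}

if is_running /fastdfs/tracker/data/fdfs_trackerd.pid; then
    echo "tracker is running"
else
    echo "tracker is not running"
fi
```

This avoids the usual problem of grep matching its own process in the ps output.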

Next we configure the storage servers, using group1 (192.168.12.33, 192.168.12.44) and group2 (192.168.12.55, 192.168.12.66) as our storage nodes.

-

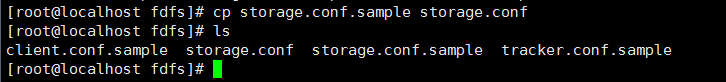

拷贝storage.conf.sample,并且重命名为storage.conf。

-

给192.168.12.44、192.168.12.55、192.168.12.66都拷贝一份storage.conf

-

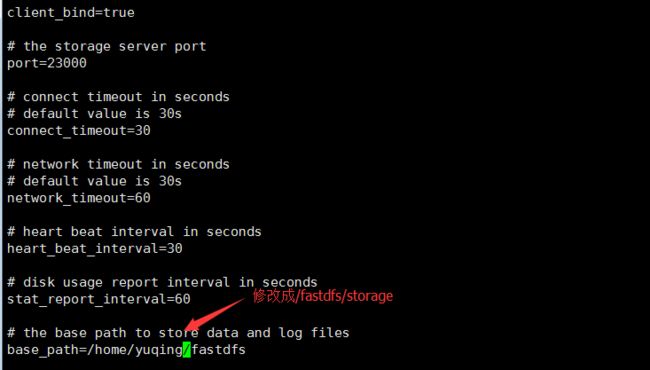

更改group1的storage.conf,把base_path改成/fastdfs/storage

-

我们可以storage server的端口号是23000,group_name修改成自己所在的组,192.168.12.33和192.168.12.44对应的group_name=group1, 192.168.12.55和192.168.12.66对应的group_name=group2。

-

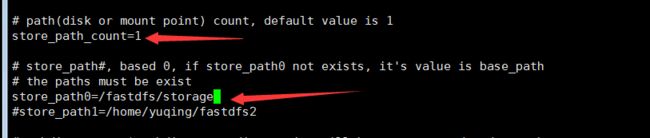

默认storage存储路径的个数是1,

我们把storage_path0修改成/fastdfs/storage

-

配置tracker_server=192.168.12.11:22122、tracker_server=192.168.12.22:2212

所配置的地址也就是tracker1和tracker2地址。

-

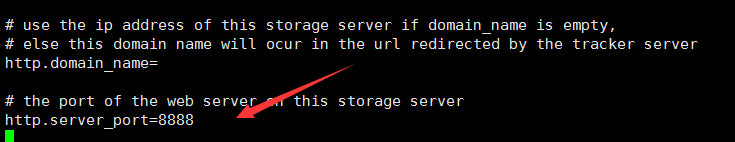

我们可以到storage的web端口是8888。

然后把配置完的stroage.conf拷贝给192.168.12.44、192.168.12.55、192.168.12.66。192.168.12.44不用做任何修改,192.168.12.55和192.168.12.66只用把group_name修改为group2即可。
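As a rough illustration of the edits above, the sketch below writes the changed storage.conf keys to a throwaway file and then switches the group name the way the group2 nodes need. `set_group` is a hypothetical helper, the demo file holds only the keys discussed in this post rather than a complete storage.conf, and the `sed -i` form assumes GNU sed as shipped with CentOS.

```shell
#!/bin/sh
# Sketch: derive the group2 storage.conf from the group1 copy.
# set_group is a hypothetical helper, not part of FastDFS.
set_group() {
    # $1 = path to storage.conf, $2 = new group name
    sed -i "s/^group_name=.*/group_name=$2/" "$1"
}

# Demo on a throwaway file so nothing under /etc/fdfs is touched.
# Only the keys changed in this post are listed here.
demo_conf=$(mktemp)
cat > "$demo_conf" <<'EOF'
group_name=group1
port=23000
base_path=/fastdfs/storage
store_path_count=1
store_path0=/fastdfs/storage
tracker_server=192.168.12.11:22122
tracker_server=192.168.12.22:22122
http.server_port=8888
EOF

set_group "$demo_conf" group2
grep '^group_name=' "$demo_conf"
```

On a real node the same call would be pointed at /etc/fdfs/storage.conf, the path used later with fdfs_monitor.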

-

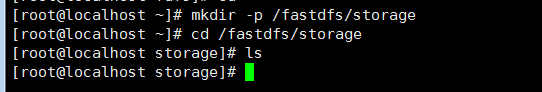

创建/fastdfs/storage。mkdir -p /fastdfs/storage

-

同样的往iptables里面添加端口23000。

-A INPUT -p tcp -m state --state NEW -m tcp --dport 23000 -j ACCEPT

-

我们在192.168.12.33启动storage。

-

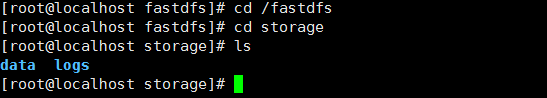

我们进入/fastdfs/storage,会发现也生成了logs和data文件夹。

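- First shut everything down: tracker1, tracker2, group1 and group2. Then start tracker2 (192.168.12.22) first. tracker2 tries to connect to tracker1, which is not running, so the connection fails and tracker2 becomes the tracker leader.

- Start tracker1. tracker2 is already the leader at this point, so the log prints "the tracker leader 192.168.12.22:22122".

- Start 192.168.12.33. It connects to both tracker1 and tracker2, with tracker2 as the tracker leader, and it also connects to 192.168.12.44 in the same group.

- Start 192.168.12.44. It connects to tracker1, tracker2 and 192.168.12.33.

- Start 192.168.12.55 and 192.168.12.66. They connect to each other and to tracker1 and tracker2.

- The tracker and storage cluster is now set up, so we can test the tracker's high availability. The tracker leader is initially tracker2 (192.168.12.22). If we shut tracker2 down, tracker1 (192.168.12.11) becomes the tracker leader.

- Checking any storage node from group1 and from group2 confirms that the tracker leader has switched to tracker1. Sweet.

- Starting tracker2 again does not unseat tracker1: tracker1 stays the leader.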
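One way to follow the leader changes during this test is to pull the most recent leader line out of the tracker log. The sketch below assumes the log lives at /fastdfs/tracker/logs/trackerd.log (the base_path set earlier) and that leader messages contain the phrase "tracker leader", as in the line quoted above. `last_leader` is a hypothetical helper name.

```shell
#!/bin/sh
# last_leader LOGFILE: print the most recent log line that mentions the tracker leader.
# Hypothetical helper for illustration; not part of FastDFS.
last_leader() {
    grep 'tracker leader' "$1" | tail -n 1
}

log=/fastdfs/tracker/logs/trackerd.log
if [ -f "$log" ]; then
    last_leader "$log"
else
    echo "no tracker log at $log"
fi
```

Running this on each tracker after stopping or starting a node shows which address that tracker last reported as leader.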

Once all tracker and storage nodes are up, the storage cluster information can be viewed from any storage node. Here I use 192.168.12.33. The command is: /usr/bin/fdfs_monitor /etc/fdfs/storage.conf

[root@localhost logs]# /usr/bin/fdfs_monitor /etc/fdfs/storage.conf

[2017-12-27 16:26:30] DEBUG - base_path=/fastdfs/storage, connect_timeout=30, network_timeout=60, tracker_server_count=2, anti_steal_token=0, anti_steal_secret_key length=0, use_connection_pool=0, g_connection_pool_max_idle_time=3600s, use_storage_id=0, storage server id count: 0

server_count=2, server_index=0

tracker server is 192.168.12.11:22122

group count: 2

Group 1:

group name = group1

disk total space = 18414 MB

disk free space = 17044 MB

trunk free space = 0 MB

storage server count = 2

active server count = 2

storage server port = 23000

storage HTTP port = 8888

store path count = 1

subdir count per path = 256

current write server index = 0

current trunk file id = 0

Storage 1:

id = 192.168.12.33

ip_addr = 192.168.12.33 (localhost.localdomain) ACTIVE

http domain =

version = 5.05

join time = 2017-12-27 11:52:50

up time = 2017-12-27 16:01:59

total storage = 18414 MB

free storage = 17151 MB

upload priority = 10

store_path_count = 1

subdir_count_per_path = 256

storage_port = 23000

storage_http_port = 8888

current_write_path = 0

source storage id =

if_trunk_server = 0

connection.alloc_count = 256

connection.current_count = 1

connection.max_count = 1

total_upload_count = 0

success_upload_count = 0

total_append_count = 0

success_append_count = 0

total_modify_count = 0

success_modify_count = 0

total_truncate_count = 0

success_truncate_count = 0

total_set_meta_count = 0

success_set_meta_count = 0

total_delete_count = 0

success_delete_count = 0

total_download_count = 0

success_download_count = 0

total_get_meta_count = 0

success_get_meta_count = 0

total_create_link_count = 0

success_create_link_count = 0

total_delete_link_count = 0

success_delete_link_count = 0

total_upload_bytes = 0

success_upload_bytes = 0

total_append_bytes = 0

success_append_bytes = 0

total_modify_bytes = 0

success_modify_bytes = 0

stotal_download_bytes = 0

success_download_bytes = 0

total_sync_in_bytes = 0

success_sync_in_bytes = 0

total_sync_out_bytes = 0

success_sync_out_bytes = 0

total_file_open_count = 0

success_file_open_count = 0

total_file_read_count = 0

success_file_read_count = 0

total_file_write_count = 0

success_file_write_count = 0

last_heart_beat_time = 2017-12-27 16:25:58

last_source_update = 1970-01-01 08:00:00

last_sync_update = 1970-01-01 08:00:00

last_synced_timestamp = 1970-01-01 08:00:00

Storage 2:

id = 192.168.12.44

ip_addr = 192.168.12.44 ACTIVE

http domain =

version = 5.05

join time = 2017-12-27 13:30:50

up time = 2017-12-27 15:53:28

total storage = 18414 MB

free storage = 17044 MB

upload priority = 10

store_path_count = 1

subdir_count_per_path = 256

storage_port = 23000

storage_http_port = 8888

current_write_path = 0

source storage id =

if_trunk_server = 0

connection.alloc_count = 256

connection.current_count = 1

connection.max_count = 1

total_upload_count = 0

success_upload_count = 0

total_append_count = 0

success_append_count = 0

total_modify_count = 0

success_modify_count = 0

total_truncate_count = 0

success_truncate_count = 0

total_set_meta_count = 0

success_set_meta_count = 0

total_delete_count = 0

success_delete_count = 0

total_download_count = 0

success_download_count = 0

total_get_meta_count = 0

success_get_meta_count = 0

total_create_link_count = 0

success_create_link_count = 0

total_delete_link_count = 0

success_delete_link_count = 0

total_upload_bytes = 0

success_upload_bytes = 0

total_append_bytes = 0

success_append_bytes = 0

total_modify_bytes = 0

success_modify_bytes = 0

stotal_download_bytes = 0

success_download_bytes = 0

total_sync_in_bytes = 0

success_sync_in_bytes = 0

total_sync_out_bytes = 0

success_sync_out_bytes = 0

total_file_open_count = 0

success_file_open_count = 0

total_file_read_count = 0

success_file_read_count = 0

total_file_write_count = 0

success_file_write_count = 0

last_heart_beat_time = 2017-12-27 16:26:27

last_source_update = 1970-01-01 08:00:00

last_sync_update = 1970-01-01 08:00:00

last_synced_timestamp = 1970-01-01 08:00:00

Group 2:

group name = group2

disk total space = 17394 MB

disk free space = 16128 MB

trunk free space = 0 MB

storage server count = 2

active server count = 2

storage server port = 23000

storage HTTP port = 8888

store path count = 1

subdir count per path = 256

current write server index = 0

current trunk file id = 0

Storage 1:

id = 192.168.12.55

ip_addr = 192.168.12.55 ACTIVE

http domain =

version = 5.05

join time = 2017-12-27 13:35:06

up time = 2017-12-27 15:56:07

total storage = 17394 MB

free storage = 16128 MB

upload priority = 10

store_path_count = 1

subdir_count_per_path = 256

storage_port = 23000

storage_http_port = 8888

current_write_path = 0

source storage id =

if_trunk_server = 0

connection.alloc_count = 256

connection.current_count = 1

connection.max_count = 1

total_upload_count = 0

success_upload_count = 0

total_append_count = 0

success_append_count = 0

total_modify_count = 0

success_modify_count = 0

total_truncate_count = 0

success_truncate_count = 0

total_set_meta_count = 0

success_set_meta_count = 0

total_delete_count = 0

success_delete_count = 0

total_download_count = 0

success_download_count = 0

total_get_meta_count = 0

success_get_meta_count = 0

total_create_link_count = 0

success_create_link_count = 0

total_delete_link_count = 0

success_delete_link_count = 0

total_upload_bytes = 0

success_upload_bytes = 0

total_append_bytes = 0

success_append_bytes = 0

total_modify_bytes = 0

success_modify_bytes = 0

stotal_download_bytes = 0

success_download_bytes = 0

total_sync_in_bytes = 0

success_sync_in_bytes = 0

total_sync_out_bytes = 0

success_sync_out_bytes = 0

total_file_open_count = 0

success_file_open_count = 0

total_file_read_count = 0

success_file_read_count = 0

total_file_write_count = 0

success_file_write_count = 0

last_heart_beat_time = 2017-12-27 16:26:07

last_source_update = 1970-01-01 08:00:00

last_sync_update = 1970-01-01 08:00:00

last_synced_timestamp = 1970-01-01 08:00:00

Storage 2:

id = 192.168.12.66

ip_addr = 192.168.12.66 ACTIVE

http domain =

version = 5.05

join time = 2017-12-27 13:36:48

up time = 2017-12-27 15:56:54

total storage = 18414 MB

free storage = 17044 MB

upload priority = 10

store_path_count = 1

subdir_count_per_path = 256

storage_port = 23000

storage_http_port = 8888

current_write_path = 0

source storage id = 192.168.12.55

if_trunk_server = 0

connection.alloc_count = 256

connection.current_count = 1

connection.max_count = 1

total_upload_count = 0

success_upload_count = 0

total_append_count = 0

success_append_count = 0

total_modify_count = 0

success_modify_count = 0

total_truncate_count = 0

success_truncate_count = 0

total_set_meta_count = 0

success_set_meta_count = 0

total_delete_count = 0

success_delete_count = 0

total_download_count = 0

success_download_count = 0

total_get_meta_count = 0

success_get_meta_count = 0

total_create_link_count = 0

success_create_link_count = 0

total_delete_link_count = 0

success_delete_link_count = 0

total_upload_bytes = 0

success_upload_bytes = 0

total_append_bytes = 0

success_append_bytes = 0

total_modify_bytes = 0

success_modify_bytes = 0

stotal_download_bytes = 0

success_download_bytes = 0

total_sync_in_bytes = 0

success_sync_in_bytes = 0

total_sync_out_bytes = 0

success_sync_out_bytes = 0

total_file_open_count = 0

success_file_open_count = 0

total_file_read_count = 0

success_file_read_count = 0

total_file_write_count = 0

success_file_write_count = 0

last_heart_beat_time = 2017-12-27 16:26:24

last_source_update = 1970-01-01 08:00:00

last_sync_update = 1970-01-01 08:00:00

last_synced_timestamp = 1970-01-01 08:00:00

[root@localhost logs]#

The output matches the storage cluster we planned: 2 tracker servers and 2 groups. group1 contains 192.168.12.33 and 192.168.12.44, and group2 contains 192.168.12.55 and 192.168.12.66.
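The full fdfs_monitor output is long, so a short filter makes this check easier to repeat. The sketch below keeps only the group name, IP and state of each storage server. `summarize` is a hypothetical helper, and it relies on the "group name =" and "ip_addr =" line shapes seen in the output above, which may differ in other FastDFS versions.

```shell
#!/bin/sh
# summarize: reduce fdfs_monitor output on stdin to "group ip state" lines.
# Hypothetical helper for illustration; not part of FastDFS.
summarize() {
    awk '
        /group name =/ { group = $NF }          # remember the current group
        /ip_addr =/    { print group, $3, $NF } # ip is field 3, state is the last field
    '
}

# Demo on a few lines copied from the output above.
summarize <<'EOF'
group name = group1
ip_addr = 192.168.12.33 (localhost.localdomain) ACTIVE
ip_addr = 192.168.12.44 ACTIVE
group name = group2
ip_addr = 192.168.12.55 ACTIVE
ip_addr = 192.168.12.66 ACTIVE
EOF
```

On a live node the same function would be fed from the real command: /usr/bin/fdfs_monitor /etc/fdfs/storage.conf | summarize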

- Use cd /usr/bin && ls |grep fdfs to list all the fdfs commands.

Warning

See the next post.