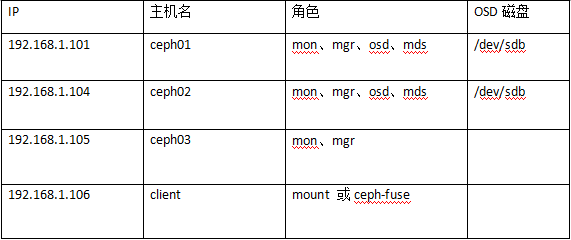

I. Lab environment

Operating system: CentOS 7.5 Minimal

Docker version: 18.06.0-ce

Ceph image tag: tag-build-master-luminous-centos-7

Each test machine: 4 CPU cores, 2 GB RAM

II. Set hostnames, set SELinux to permissive, and open the firewall ports

On ceph01, ceph02 and ceph03:

# hostnamectl set-hostname cephX

Note: replace X with 01, 02 and 03 respectively.

# setenforce 0

# sed -i 's/^SELINUX=.*/SELINUX=permissive/g' /etc/selinux/config

# firewall-cmd --zone=public --add-port=6789/tcp --permanent

# firewall-cmd --zone=public --add-port=6800-7300/tcp --permanent

# firewall-cmd --reload
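The three firewall commands above can be wrapped in a small function so they are identical on every node. A minimal sketch, assuming firewalld and the public zone as above; the helper names `open_ceph_ports` and `run` are hypothetical, and setting `DRY_RUN=1` only prints the commands.

```shell
# Print the command instead of executing it when DRY_RUN is set (hypothetical helper).
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Open the ports used in this guide:
#   6789/tcp      monitor (mon) port, as in ceph.conf
#   6800-7300/tcp default range for the other daemons (OSD, MGR, MDS)
open_ceph_ports() {
    local zone="${1:-public}" port
    for port in 6789/tcp 6800-7300/tcp; do
        run firewall-cmd --zone="$zone" --add-port="$port" --permanent || return 1
    done
    # Permanent rules only take effect after a reload.
    run firewall-cmd --reload
}
```

Run `open_ceph_ports` on each node; afterwards `firewall-cmd --zone=public --list-ports` should list both entries.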

III. Install Docker and pull the Ceph image

On ceph01, ceph02 and ceph03:

Get Docker CE for CentOS

https://docs.docker.com/install/linux/docker-ce/centos/#install-docker-ce-1

# yum -y install yum-utils device-mapper-persistent-data lvm2

# yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

# yum list docker-ce --showduplicates| sort -r

# yum -y install docker-ce-18.06.0.ce

# systemctl start docker

# systemctl status docker

# systemctl enable docker

# docker version

Configure a registry mirror to speed up image pulls:

# curl -sSL https://get.daocloud.io/daotools/set_mirror.sh | sh -s http://f1361db2.m.daocloud.io

# systemctl restart docker

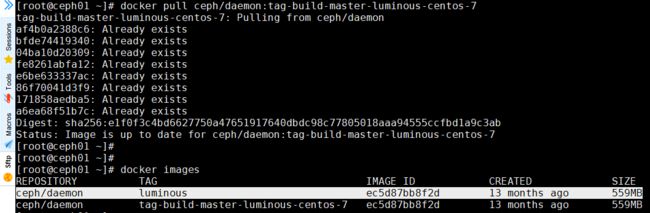
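Instead of piping a remote script into `sh`, the mirror can be written into Docker's daemon configuration (the `registry-mirrors` key in `/etc/docker/daemon.json`) directly. A minimal sketch, assuming the file does not already hold other settings, because the hypothetical `write_registry_mirror` function overwrites it.

```shell
# Write a daemon.json containing a single registry mirror.
# WARNING: overwrites the target file (default /etc/docker/daemon.json).
write_registry_mirror() {
    local mirror="$1" conf="${2:-/etc/docker/daemon.json}"
    if [ -z "$mirror" ]; then
        echo "usage: write_registry_mirror URL [FILE]" >&2
        return 1
    fi
    mkdir -p "$(dirname "$conf")" || return 1
    printf '{\n  "registry-mirrors": ["%s"]\n}\n' "$mirror" > "$conf"
}
```

Usage: `write_registry_mirror http://f1361db2.m.daocloud.io && systemctl restart docker`; `docker info` should then show the URL under "Registry Mirrors".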

# docker pull ceph/daemon:tag-build-master-luminous-centos-7

# docker tag ceph/daemon:tag-build-master-luminous-centos-7 ceph/daemon:luminous

# docker images

IV. Create the Ceph directories

On ceph01, ceph02 and ceph03:

# mkdir /etc/ceph

# mkdir /var/lib/ceph

五、启动各个角色的ceph容器

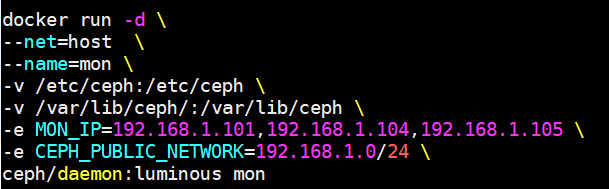

在 ceph01 服务器启动mon容器:

# docker run -d \
--net=host \
--name=mon \
-v /etc/ceph:/etc/ceph \
-v /var/lib/ceph/:/var/lib/ceph \
-e MON_IP=192.168.1.101,192.168.1.104,192.168.1.105 \
-e CEPH_PUBLIC_NETWORK=192.168.1.0/24 \
ceph/daemon:luminous mon
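The mon command is the same on all three hosts, so it can live in a function. A sketch assuming this guide's values as defaults; `start_mon` and `run` are hypothetical names, the `MON_IP` and `CEPH_PUBLIC_NETWORK` shell variables override the defaults if set, and `DRY_RUN=1` prints the command instead of running it.

```shell
# Print the command instead of executing it when DRY_RUN is set (hypothetical helper).
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Start the mon container with the options used in this guide.
start_mon() {
    local mon_ip="${MON_IP:-192.168.1.101,192.168.1.104,192.168.1.105}"
    local public_net="${CEPH_PUBLIC_NETWORK:-192.168.1.0/24}"
    run docker run -d \
        --net=host \
        --name=mon \
        -v /etc/ceph:/etc/ceph \
        -v /var/lib/ceph/:/var/lib/ceph \
        -e MON_IP="$mon_ip" \
        -e CEPH_PUBLIC_NETWORK="$public_net" \
        ceph/daemon:luminous mon
}
```

Running `DRY_RUN=1 start_mon` first shows the exact command that would be executed.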

# docker ps -a

# docker logs mon

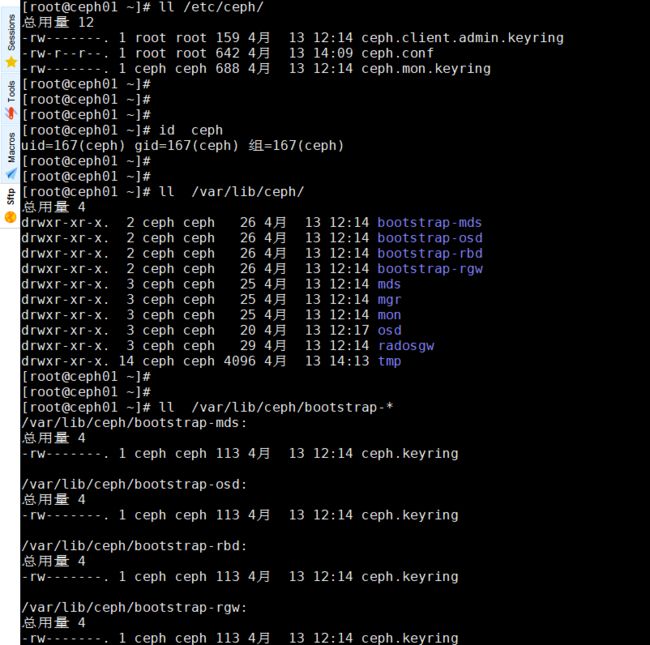

# ll /etc/ceph/

# id ceph

# ll /var/lib/ceph/

# ll /var/lib/ceph/bootstrap-*

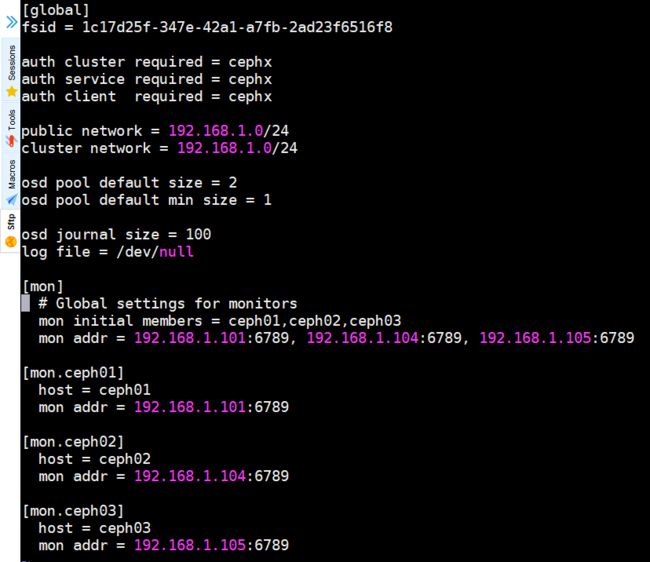
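Before editing ceph.conf and copying the bootstrap keyrings to the other nodes, the first mon must have finished generating them. A small retry helper makes that wait explicit. A sketch only: `wait_until` is a hypothetical name, and the keyring path in the usage line assumes the bootstrap layout listed by `ll /var/lib/ceph/bootstrap-*` above.

```shell
# Retry a command until it succeeds or the attempts run out.
# Usage: wait_until TRIES DELAY_SECONDS COMMAND [ARGS...]
wait_until() {
    local tries="$1" delay="$2" i=1
    shift 2
    while [ "$i" -le "$tries" ]; do
        if "$@"; then
            return 0
        fi
        # Do not sleep after the final failed attempt.
        if [ "$i" -lt "$tries" ]; then
            sleep "$delay"
        fi
        i=$((i + 1))
    done
    return 1
}
```

For example, `wait_until 30 2 test -f /var/lib/ceph/bootstrap-osd/ceph.keyring || echo "mon not ready"`, or `wait_until 30 2 docker exec mon ceph -s >/dev/null`.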

Edit the configuration file /etc/ceph/ceph.conf:

# vim /etc/ceph/ceph.conf

#######################################################

[global]

fsid = 1c17d25f-347e-42a1-a7fb-2ad23f6516f8

auth cluster required = cephx

auth service required = cephx

auth client required = cephx

public network = 192.168.1.0/24

cluster network = 192.168.1.0/24

osd pool default size = 2

osd pool default min size = 1

osd journal size = 100

log file = /dev/null

[mon]

# Global settings for monitors

mon initial members = ceph01,ceph02,ceph03

mon addr = 192.168.1.101:6789,192.168.1.104:6789,192.168.1.105:6789

[mon.ceph01]

host = ceph01

mon addr = 192.168.1.101:6789

[mon.ceph02]

host = ceph02

mon addr = 192.168.1.104:6789

[mon.ceph03]

host = ceph03

mon addr = 192.168.1.105:6789

############################################################
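The [mon] and [mon.cephXX] sections follow a fixed pattern, so they can be generated from host:IP pairs, and adding a monitor then means adding one argument. A sketch assuming port 6789 for every monitor, as above; `gen_mon_sections` is a hypothetical helper, and the [global] section is still written by hand.

```shell
# Print the [mon] section plus one [mon.<host>] section per "host:ip" argument.
gen_mon_sections() {
    local members="" addrs="" pair host ip
    for pair in "$@"; do
        host="${pair%%:*}"
        ip="${pair#*:}"
        # Build comma-separated lists without a leading comma.
        members="${members:+$members,}$host"
        addrs="${addrs:+$addrs,}$ip:6789"
    done
    printf '[mon]\n'
    printf 'mon initial members = %s\n' "$members"
    printf 'mon addr = %s\n' "$addrs"
    for pair in "$@"; do
        host="${pair%%:*}"
        ip="${pair#*:}"
        printf '\n[mon.%s]\n' "$host"
        printf 'host = %s\n' "$host"
        printf 'mon addr = %s:6789\n' "$ip"
    done
}
```

Usage: after writing the [global] section, `gen_mon_sections ceph01:192.168.1.101 ceph02:192.168.1.104 ceph03:192.168.1.105 >> /etc/ceph/ceph.conf`.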

From ceph01, copy the configuration and keyring files to ceph02 and ceph03:

# scp -r /etc/ceph/* 192.168.1.104:/etc/ceph/

# scp -r /etc/ceph/* 192.168.1.105:/etc/ceph/

# scp -r /var/lib/ceph/bootstrap* 192.168.1.104:/var/lib/ceph/

# scp -r /var/lib/ceph/bootstrap* 192.168.1.105:/var/lib/ceph/
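The four scp commands above repeat one pattern per target host. A sketch assuming root SSH access from ceph01 (scp uses the current login name when none is given; `root@host` also works as a target); `distribute_ceph_files` and `run` are hypothetical names.

```shell
# Print the command instead of executing it when DRY_RUN is set (hypothetical helper).
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Copy ceph.conf, the admin keyring and the bootstrap keyrings to each target.
distribute_ceph_files() {
    local target
    for target in "$@"; do
        run scp -r /etc/ceph/* "$target:/etc/ceph/" || return 1
        run scp -r /var/lib/ceph/bootstrap* "$target:/var/lib/ceph/" || return 1
    done
}
```

Usage on ceph01: `distribute_ceph_files 192.168.1.104 192.168.1.105`.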

On ceph02 and ceph03, start the mon container:

# docker run -d \
--net=host \
--name=mon \
-v /etc/ceph:/etc/ceph \
-v /var/lib/ceph/:/var/lib/ceph \
-e MON_IP=192.168.1.101,192.168.1.104,192.168.1.105 \
-e CEPH_PUBLIC_NETWORK=192.168.1.0/24 \
ceph/daemon:luminous mon

# docker ps -a

# docker logs mon

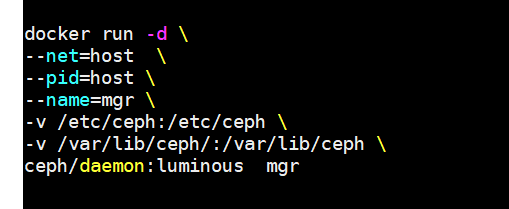

On ceph01, ceph02 and ceph03, start the mgr container:

# docker run -d \
--net=host \
--pid=host \
--name=mgr \
-v /etc/ceph:/etc/ceph \
-v /var/lib/ceph/:/var/lib/ceph \
ceph/daemon:luminous mgr

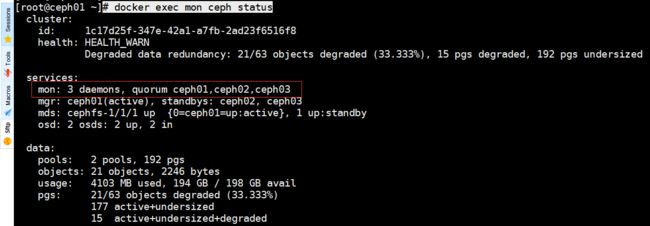

# docker exec mon ceph status

在 ceph01 ceph02 服务器清理磁盘和启动osd容器:

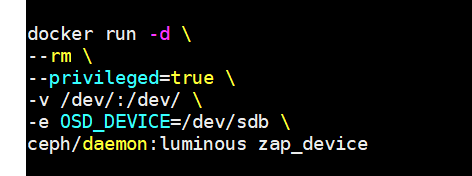

# docker run -d \

--rm \

--privileged=true \

-v /dev/:/dev/ \

-e OSD_DEVICE=/dev/sdb \

ceph/daemon:luminous zap_device

#############################################
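zap_device erases the disk named in OSD_DEVICE, so a quick guard before it is cheap insurance. A minimal sketch: the hypothetical `check_zap_target` only checks that the path is a block device and that nothing from it appears in /proc/mounts; it does not detect LVM or swap usage, so still confirm the disk with `lsblk` first.

```shell
# Refuse unless the target is a block device with nothing mounted from it.
check_zap_target() {
    local dev="$1"
    if [ ! -b "$dev" ]; then
        echo "refusing: $dev is not a block device" >&2
        return 1
    fi
    # A mounted partition of the disk (e.g. /dev/sdb1) also blocks the zap.
    if grep -q "^$dev" /proc/mounts; then
        echo "refusing: $dev (or a partition of it) is mounted" >&2
        return 1
    fi
    return 0
}
```

Usage: `check_zap_target /dev/sdb && docker run -d --rm --privileged=true -v /dev/:/dev/ -e OSD_DEVICE=/dev/sdb ceph/daemon:luminous zap_device`.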

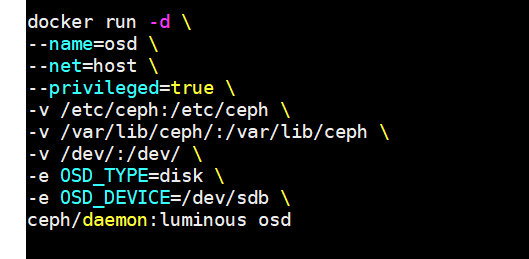

# docker run -d \
--name=osd \
--net=host \
--privileged=true \
-v /etc/ceph:/etc/ceph \
-v /var/lib/ceph/:/var/lib/ceph \
-v /dev/:/dev/ \
-e OSD_TYPE=disk \
-e OSD_DEVICE=/dev/sdb \
ceph/daemon:luminous osd
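If a host has more than one data disk, each OSD needs its own container, and Docker container names must be unique. A sketch reusing the options above; the `osd_<disk>` naming (e.g. `osd_sdb`), `start_osds` and `run` are hypothetical, and the containers would then be inspected with e.g. `docker logs osd_sdb`.

```shell
# Print the command instead of executing it when DRY_RUN is set (hypothetical helper).
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Start one OSD container per device, named osd_<basename of device>.
start_osds() {
    local dev name
    for dev in "$@"; do
        name="osd_$(basename "$dev")"
        run docker run -d \
            --name="$name" \
            --net=host \
            --privileged=true \
            -v /etc/ceph:/etc/ceph \
            -v /var/lib/ceph/:/var/lib/ceph \
            -v /dev/:/dev/ \
            -e OSD_TYPE=disk \
            -e OSD_DEVICE="$dev" \
            ceph/daemon:luminous osd || return 1
    done
}
```

Usage: `start_osds /dev/sdb /dev/sdc` after zapping both disks.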

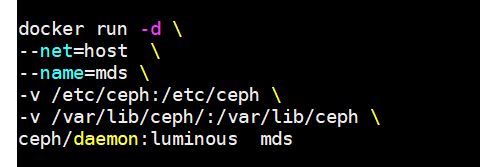

Start the mds container:

# docker run -d \
--net=host \
--name=mds \
-v /etc/ceph:/etc/ceph \
-v /var/lib/ceph/:/var/lib/ceph \
ceph/daemon:luminous mds

On ceph01, ceph02 and ceph03:

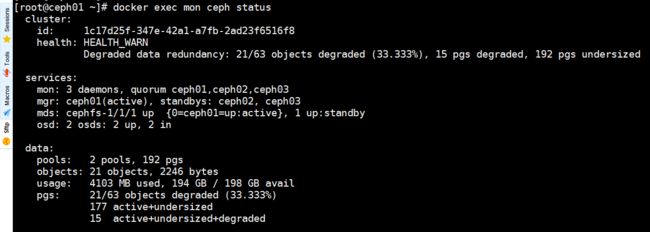

# docker exec mon ceph status

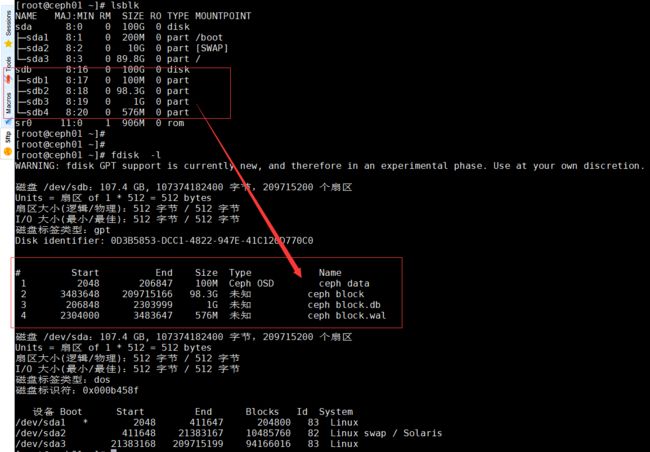
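For scripting, `ceph health` is easier to test than the full `ceph status` output, because its first word is the overall state (HEALTH_OK, HEALTH_WARN or HEALTH_ERR). A minimal sketch; `health_ok` is a hypothetical helper that reads that output on stdin.

```shell
# Succeed only if the first word of the input is HEALTH_OK.
health_ok() {
    local status rest
    # read fails at EOF without a newline, but may still have set status.
    read -r status rest || [ -n "$status" ] || return 1
    [ "$status" = "HEALTH_OK" ]
}
```

Usage: `docker exec mon ceph health | health_ok && echo "cluster healthy"`.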

On ceph01 and ceph02:

# lsblk

# fdisk -l

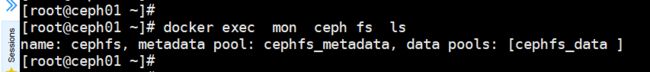

VI. Create the CephFS pools

On ceph01:

# docker exec mon ceph osd pool create cephfs_data 128

# docker exec mon ceph osd pool create cephfs_metadata 64

# docker exec mon ceph fs new cephfs cephfs_metadata cephfs_data

# docker exec mon ceph fs ls

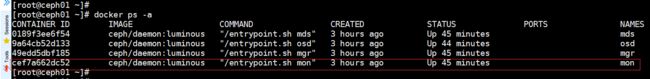
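The pg_num values 128 and 64 can be sanity-checked against the commonly cited rule of thumb: about 100 PGs per OSD, divided by the replica count, rounded up to a power of two. This is a sketch of that arithmetic only, assuming this guide's 2 OSDs and `osd pool default size = 2`; `suggest_pg_num` is hypothetical, and the rule describes a rough cluster-wide target rather than an exact per-pool formula.

```shell
# Rule of thumb: (OSDs * 100) / replicas, rounded up to the next power of two.
suggest_pg_num() {
    local osds="$1" size="$2" target pg=1
    target=$(( (osds * 100 + size - 1) / size ))   # ceiling division
    while [ "$pg" -lt "$target" ]; do
        pg=$((pg * 2))
    done
    echo "$pg"
}
```

`suggest_pg_num 2 2` prints 128, matching cephfs_data; the smaller metadata pool is typically given fewer PGs, as with 64 here.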

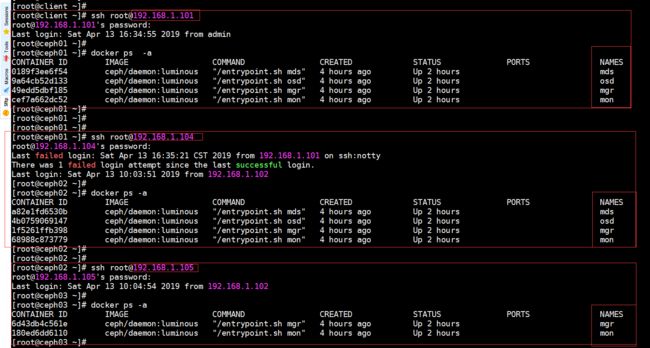

Now let's look at how the containers are distributed across the Ceph nodes in a single diagram:

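VII. Install and configure the Ceph client

A client can mount CephFS in two ways:

1. Use the Linux kernel client

2. Use ceph-fuse

Each has its trade-offs. The kernel client performs better because most of its communication with Ceph happens in kernel space; its drawback is that Luminous CephFS requires the client to support some advanced features. ceph-fuse is simpler and also supports quotas, but it is slower: measured on an all-SSD cluster, it reached roughly half the performance of the kernel client.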

Set SELinux to permissive on the client:

# setenforce 0

# sed -i 's/^SELINUX=.*/SELINUX=permissive/g' /etc/selinux/config

Option 1: the Linux kernel client

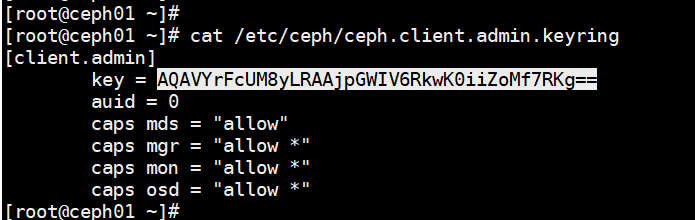

On ceph01, get the admin key:

# cat /etc/ceph/ceph.client.admin.keyring

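A cluster deployed with ceph-deploy has cephx authentication enabled by default, and this cluster enables it as well (the auth settings in ceph.conf above). The mount therefore has to supply the secret key, i.e. the "key" value in /etc/ceph/ceph.client.admin.keyring on a mon node. The secretfile option would avoid exposing the key, but it hits a bug and always fails with: libceph: bad option at 'secretfile=/etc/ceph/admin.secret'

Bug report: https://bugzilla.redhat.com/show_bug.cgi?id=1030402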

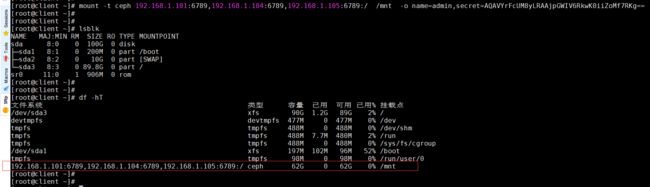

# mount -t ceph 192.168.1.101:6789,192.168.1.104:6789,192.168.1.105:6789:/ /mnt \
-o name=admin,secret=AQAVYrFcUM8yLRAAjpGWIV6RkwK0iiZoMf7RKg==

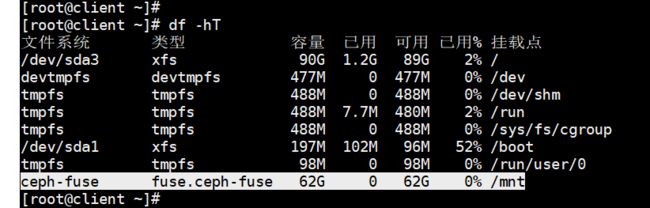

# df -hT
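Rather than copying the key by hand, the client can read it from a copy of the admin keyring. A sketch assuming the keyring has the usual `key = ...` line and has already been copied to the client; `keyring_key` and `mount_cephfs_kernel` are hypothetical. The key still appears in the mount command's arguments while it runs.

```shell
# Print the value of the first "key = ..." line in a keyring file.
keyring_key() {
    awk '$1 == "key" && $2 == "=" { print $3; exit }' "$1"
}

# Kernel-mount CephFS as client.admin using the key read from the keyring.
mount_cephfs_kernel() {
    local keyring="$1" mountpoint="${2:-/mnt}" key
    key="$(keyring_key "$keyring")"
    if [ -z "$key" ]; then
        echo "no key found in $keyring" >&2
        return 1
    fi
    mount -t ceph 192.168.1.101:6789,192.168.1.104:6789,192.168.1.105:6789:/ "$mountpoint" \
        -o name=admin,secret="$key"
}
```

Usage: `mount_cephfs_kernel /etc/ceph/ceph.client.admin.keyring /mnt`.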

Option 2: ceph-fuse

Add the Ceph yum repository:

# vim /etc/yum.repos.d/ceph.repo

####################################################

[Ceph]

name=Ceph packages for $basearch

baseurl=http://mirrors.aliyun.com/ceph/rpm-luminous/el7/$basearch

enabled=1

gpgcheck=0

[Ceph-noarch]

name=Ceph noarch packages

baseurl=http://mirrors.aliyun.com/ceph/rpm-luminous/el7/noarch

enabled=1

gpgcheck=0

[ceph-source]

name=Ceph source packages

baseurl=http://mirrors.aliyun.com/ceph/rpm-luminous/el7/SRPMS

enabled=1

gpgcheck=0

#####################################################
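The repository file can also be written non-interactively, which helps when several clients need it. A sketch assuming the same Aliyun mirror; `write_ceph_repo` is hypothetical, and the quoted `'EOF'` keeps the shell from expanding `$basearch`, which yum substitutes itself.

```shell
# Write the Ceph Luminous repo file (default /etc/yum.repos.d/ceph.repo).
write_ceph_repo() {
    local repo="${1:-/etc/yum.repos.d/ceph.repo}"
    cat > "$repo" <<'EOF'
[Ceph]
name=Ceph packages for $basearch
baseurl=http://mirrors.aliyun.com/ceph/rpm-luminous/el7/$basearch
enabled=1
gpgcheck=0

[Ceph-noarch]
name=Ceph noarch packages
baseurl=http://mirrors.aliyun.com/ceph/rpm-luminous/el7/noarch
enabled=1
gpgcheck=0

[ceph-source]
name=Ceph source packages
baseurl=http://mirrors.aliyun.com/ceph/rpm-luminous/el7/SRPMS
enabled=1
gpgcheck=0
EOF
}
```

Usage: `write_ceph_repo && yum clean all && yum repolist`.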

# yum -y install epel-release

# yum clean all

# yum repolist

Install ceph-fuse:

# yum -y install ceph-fuse

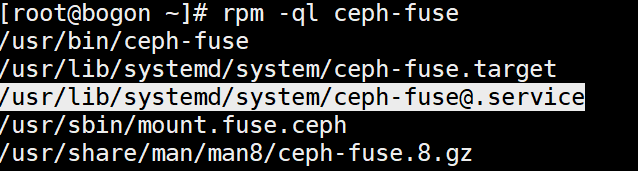

# rpm -ql ceph-fuse

Create the Ceph directory and copy the client files from ceph01:

# mkdir /etc/ceph

# scp 192.168.1.101:/etc/ceph/ceph.client.admin.keyring /etc/ceph

# scp 192.168.1.101:/etc/ceph/ceph.conf /etc/ceph

# chmod 600 /etc/ceph/ceph.client.admin.keyring

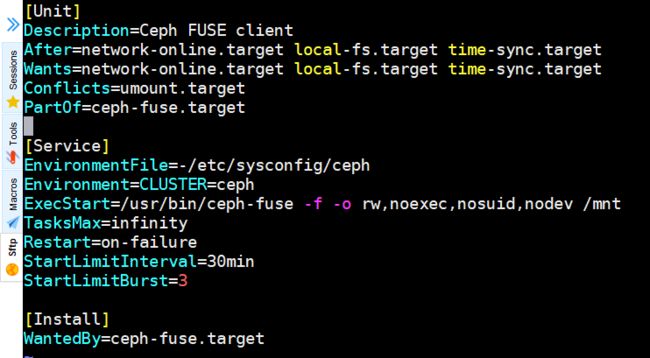

Create the ceph-fuse service file:

# cp /usr/lib/systemd/system/ceph-fuse@.service /etc/systemd/system/ceph-fuse.service

# vim /etc/systemd/system/ceph-fuse.service

##############################################

[Unit]

Description=Ceph FUSE client

After=network-online.target local-fs.target time-sync.target

Wants=network-online.target local-fs.target time-sync.target

Conflicts=umount.target

PartOf=ceph-fuse.target

[Service]

EnvironmentFile=-/etc/sysconfig/ceph

Environment=CLUSTER=ceph

ExecStart=/usr/bin/ceph-fuse -f -o rw,noexec,nosuid,nodev /mnt

TasksMax=infinity

Restart=on-failure

StartLimitInterval=30min

StartLimitBurst=3

[Install]

WantedBy=ceph-fuse.target

########################################################

This mounts CephFS on the client at /mnt.
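This unit and the subdirectory variant later differ only in the ExecStart line, so one generator can produce both. A sketch; `render_fuse_unit` is hypothetical, and its optional second argument becomes ceph-fuse's `-r` root directory.

```shell
# Print a ceph-fuse unit for MOUNTPOINT, optionally rooted at a CephFS SUBDIR.
render_fuse_unit() {
    local mountpoint="${1:-/mnt}" subdir="${2:-}" exec_start
    exec_start="/usr/bin/ceph-fuse -f -o rw,noexec,nosuid,nodev $mountpoint"
    if [ -n "$subdir" ]; then
        exec_start="$exec_start -r $subdir"
    fi
    cat <<EOF
[Unit]
Description=Ceph FUSE client
After=network-online.target local-fs.target time-sync.target
Wants=network-online.target local-fs.target time-sync.target
Conflicts=umount.target
PartOf=ceph-fuse.target

[Service]
EnvironmentFile=-/etc/sysconfig/ceph
Environment=CLUSTER=ceph
ExecStart=$exec_start
TasksMax=infinity
Restart=on-failure
StartLimitInterval=30min
StartLimitBurst=3

[Install]
WantedBy=ceph-fuse.target
EOF
}
```

Usage: `render_fuse_unit /mnt > /etc/systemd/system/ceph-fuse.service && systemctl daemon-reload`; `render_fuse_unit /mnt /test` yields the subdirectory variant.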

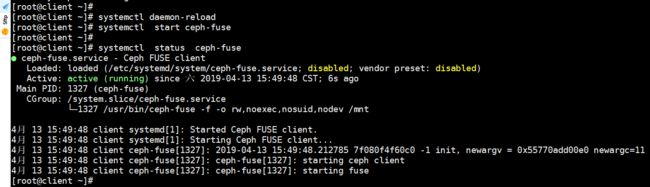

# systemctl daemon-reload

# systemctl start ceph-fuse

# systemctl status ceph-fuse

# df -hT

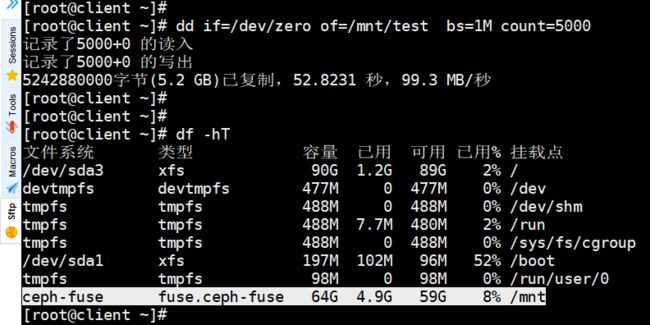

Test by writing a large file:

# dd if=/dev/zero of=/mnt/test bs=1M count=10000

# df -hT
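When comparing usage before and after: bs=1M count=10000 writes 10000 MiB, about 9.77 GiB, and with `osd pool default size = 2` each object is stored twice, so raw cluster usage should grow by roughly 19.5 GiB plus some overhead. A small helper for that arithmetic (`dd_usage` is hypothetical):

```shell
# Logical and replicated size of a dd write: BS_MIB * COUNT, times REPLICAS.
dd_usage() {
    awk -v bs="$1" -v count="$2" -v size="$3" 'BEGIN {
        logical = bs * count / 1024          # MiB -> GiB
        printf "logical=%.2fGiB raw=%.2fGiB\n", logical, logical * size
    }'
}
```

`dd_usage 1 10000 2` prints `logical=9.77GiB raw=19.53GiB`.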

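Mount a CephFS subdirectory

All the mounts above use / as the source directory. Dedicating a whole cluster to a single user would be wasteful; can the cluster be split into several directories, with each user mounting and reading/writing only their own?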

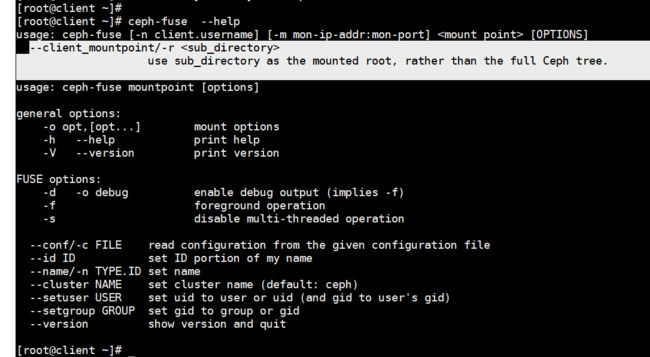

# ceph-fuse --help

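Once admin has mounted the CephFS root /, any directory created under / becomes a CephFS subtree, and other users, once configured, can mount these subtrees directly. The steps are:

1. Mount / as admin and create /test: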

# mkdir -p /opt/tmp

# ceph-fuse /opt/tmp

# mkdir /opt/tmp/test

# umount /opt/tmp

# rm -rf /opt/tmp

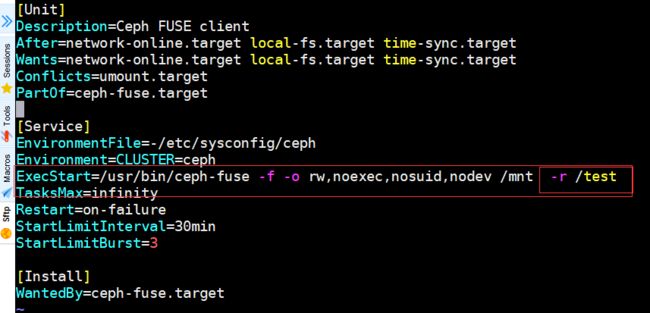

2. Point ceph-fuse.service at the subdirectory:

# vim /etc/systemd/system/ceph-fuse.service

################################################

[Unit]

Description=Ceph FUSE client

After=network-online.target local-fs.target time-sync.target

Wants=network-online.target local-fs.target time-sync.target

Conflicts=umount.target

PartOf=ceph-fuse.target

[Service]

EnvironmentFile=-/etc/sysconfig/ceph

Environment=CLUSTER=ceph

ExecStart=/usr/bin/ceph-fuse -f -o rw,noexec,nosuid,nodev /mnt -r /test

TasksMax=infinity

Restart=on-failure

StartLimitInterval=30min

StartLimitBurst=3

[Install]

WantedBy=ceph-fuse.target

###################################################################

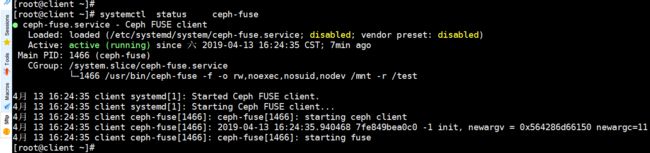

# systemctl daemon-reload

# systemctl restart ceph-fuse

# systemctl status ceph-fuse

# df -hT
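The steps above still mount the subtree as client.admin, which can access the whole file system. Luminous provides `ceph fs authorize` to create a client whose capabilities are limited to one path. A sketch, assuming the file system name `cephfs` created earlier and a hypothetical client name `test`; `authorize_subdir` and `run` are also hypothetical.

```shell
# Print the command instead of executing it when DRY_RUN is set (hypothetical helper).
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Create client.<name> with rw access limited to PATH in the "cephfs" file system.
# The command prints the new client's keyring on stdout.
authorize_subdir() {
    local client="$1" path="$2"
    run docker exec mon ceph fs authorize cephfs "client.$client" "$path" rw
}
```

Usage on ceph01: `authorize_subdir test /test > ceph.client.test.keyring`; copy that file to the client's /etc/ceph/, then mount with `ceph-fuse --id test -r /test /mnt`.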

This article has only covered CephFS, Ceph's file system. For the other two storage types, block storage and object storage, please refer to other resources.
