This article is based on Spark 2.1.0 and Hadoop 2.7.3.

Unless stated otherwise, "Spark Web UI" in this article refers to the Driver Web UI (by default at http://<IP of the host running the Driver>:4040).

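Spark provides several configurable properties that let users control the security of the Web UI:

- ACL mechanism: lets specified users view the Web UI and kill running Jobs and Stages

- Filter mechanism: lets users plug in custom filters to control access to the Web UI (the focus of this article)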

1. ACL mechanism:

Related properties:

| Property Name | Default | Meaning |
|---|---|---|
| spark.acls.enable | false | Whether Spark acls should be enabled. If enabled, this checks to see if the user has access permissions to view or modify the job. Note this requires the user to be known, so if the user comes across as null no checks are done. |
| spark.ui.view.acls | Empty | Comma separated list of users that have view access to the Spark web ui. By default only the user that started the Spark job has view access. Putting a "*" in the list means any user can have view access to this Spark job. |
| spark.modify.acls | Empty | Comma separated list of users that have modify access to the Spark job. By default only the user that started the Spark job has access to modify it (kill it for example). Putting a "*" in the list means any user can have access to modify it. |
| spark.admin.acls | Empty | Comma separated list of users/administrators that have view and modify access to all Spark jobs. This can be used if you run on a shared cluster and have a set of administrators or devs who help debug when things do not work. Putting a "*" in the list means any user can have the privilege of admin. |

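The mechanism is simple: once you understand what each property means, you can start using it right away. A few examples follow.

First, as the hadoop user, start a spark-shell application on YARN: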

[hadoop@wl1 ~]$ spark-shell --master yarn

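Note the two circled areas in the figure above:

- With YARN, the default logged-in user is dr.who (the login user can be changed with the hadoop.http.staticuser.user property in Hadoop's core-site.xml)

- The spark-shell application, however, was started by the hadoop user

Because the ACL mechanism is not enabled, clicking Tracking UI: ApplicationMaster opens the Driver Web UI of the Spark application (figure omitted here).

Resubmit the Spark application with the following command: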

[hadoop@wl1 ~]$ spark-shell --master yarn --conf spark.acls.enable=true

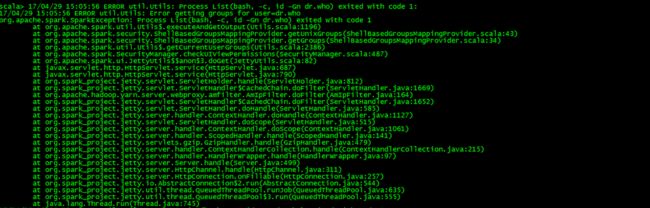

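Click Tracking UI: ApplicationMaster in the YARN Web UI: access fails.

This shows that setting spark.acls.enable to true turns on the ACL mechanism. When the user accessing the application's Web UI is not the user who started the application, access is denied (in this example the visitor is dr.who).

Resubmit the Spark application with the following command: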

[hadoop@wl1 ~]$ spark-shell --master yarn --conf spark.acls.enable=true --conf spark.ui.view.acls=dr.who

Click Tracking UI: ApplicationMaster in the YARN Web UI: the Driver Web UI of the Spark application is now accessible.

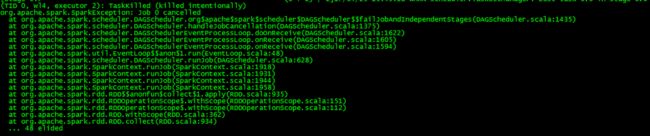

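However, clicking the Job kill link (circled in the figure) does not terminate the Job (the same holds for Stages), and an error message is printed in the terminal.

This shows that users added to the ACL through spark.ui.view.acls have view permission only, not modify permission.

Resubmit the Spark application with the following command: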

[hadoop@wl1 ~]$ spark-shell --master yarn --conf spark.acls.enable=true --conf spark.ui.view.acls=dr.who --conf spark.modify.acls=dr.who

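Click Tracking UI: ApplicationMaster in the YARN Web UI: the Driver Web UI of the Spark application is accessible.

Clicking Job kill now also terminates the Job (the same holds for Stages).

One thing to note: spark.modify.acls needs to be used together with spark.ui.view.acls, since a user has to be able to view the Web UI before they can click kill in it.

Resubmit the Spark application with the following command: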

[hadoop@wl1 ~]$ spark-shell --master yarn --conf spark.acls.enable=true --conf spark.admin.acls=dr.who

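(Figure omitted here.) Click Tracking UI: ApplicationMaster in the YARN Web UI: the Driver Web UI of the Spark application is accessible, and clicking Job kill (circled in the figure) terminates the Job (the same holds for Stages).

This shows that users added to the ACL through spark.admin.acls have admin permission: they can both view and modify the Spark application through the Spark Driver Web UI.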
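The four experiments above can be summarized in a small model. The sketch below is a simplified illustration of the rules stated in the property table, not Spark's actual implementation; the class name `AclModel` and its methods are hypothetical.

```java
import java.util.HashSet;
import java.util.Set;

/** Simplified model of the ACL rules described above; NOT Spark's real code. */
final class AclModel {
    private final boolean aclsEnabled;
    private final Set<String> viewAcls = new HashSet<>();
    private final Set<String> modifyAcls = new HashSet<>();

    /** view, modify and admin are comma separated user lists, as in the properties. */
    AclModel(boolean aclsEnabled, String owner, String view, String modify, String admin) {
        this.aclsEnabled = aclsEnabled;
        // The user who started the application always has both permissions.
        viewAcls.add(owner);
        modifyAcls.add(owner);
        // Admins get view and modify access.
        viewAcls.addAll(split(admin));
        modifyAcls.addAll(split(admin));
        // View and modify lists are kept separate: modify does not imply view.
        viewAcls.addAll(split(view));
        modifyAcls.addAll(split(modify));
    }

    private static Set<String> split(String csv) {
        Set<String> users = new HashSet<>();
        for (String user : csv.split(",")) {
            if (!user.trim().isEmpty()) {
                users.add(user.trim());
            }
        }
        return users;
    }

    private boolean allowed(String user, Set<String> acls) {
        // No checks are done when ACLs are disabled or the user is unknown (null).
        return !aclsEnabled || user == null || acls.contains("*") || acls.contains(user);
    }

    boolean canView(String user) {
        return allowed(user, viewAcls);
    }

    boolean canModify(String user) {
        return allowed(user, modifyAcls);
    }

    public static void main(String[] args) {
        // Mirrors: --conf spark.acls.enable=true --conf spark.ui.view.acls=dr.who
        AclModel viewOnly = new AclModel(true, "hadoop", "dr.who", "", "");
        System.out.println(viewOnly.canView("dr.who"));   // true
        System.out.println(viewOnly.canModify("dr.who")); // false
    }
}
```

In this model, `new AclModel(true, "hadoop", "", "", "dr.who")` grants dr.who both permissions, matching the spark.admin.acls experiment.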

2. Filter mechanism:

Related properties:

| Property Name | Default | Meaning |
|---|---|---|
| spark.ui.filters | None | Comma separated list of filter class names to apply to the Spark web UI. The filter should be a standard javax servlet Filter. Parameters to each filter can also be specified by setting a java system property of: `spark.<class name of filter>.params='param1=value1,param2=value2'` |

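Users can control the access rules of the Spark Driver Web UI with a custom Filter.

First, implement a class that conforms to the standard javax servlet Filter interface. The filter below validates a username and password, which is a common way to check permissions for HTTP access. The source code is as follows: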

    /**
     * Created by wangliang on 2017/4/29.
     */
    import org.apache.commons.codec.binary.Base64;
    import org.apache.commons.lang.StringUtils;
    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;

    import javax.servlet.*;
    import javax.servlet.http.HttpServletRequest;
    import javax.servlet.http.HttpServletResponse;
    import java.io.IOException;
    import java.nio.charset.StandardCharsets;
    import java.util.StringTokenizer;

    public class BasicAuthFilter implements Filter {

        /** Logger */
        private static final Logger LOG = LoggerFactory.getLogger(BasicAuthFilter.class);

        private String username = "";
        private String password = "";
        private String realm = "Protected";

        @Override
        public void init(FilterConfig filterConfig) throws ServletException {
            // Values come from the spark.BasicAuthFilter.params property.
            username = filterConfig.getInitParameter("username");
            password = filterConfig.getInitParameter("password");
            String paramRealm = filterConfig.getInitParameter("realm");
            if (StringUtils.isNotBlank(paramRealm)) {
                realm = paramRealm;
            }
        }

        @Override
        public void doFilter(ServletRequest servletRequest, ServletResponse servletResponse, FilterChain filterChain)
                throws IOException, ServletException {
            HttpServletRequest request = (HttpServletRequest) servletRequest;
            HttpServletResponse response = (HttpServletResponse) servletResponse;

            String authHeader = request.getHeader("Authorization");
            if (authHeader == null) {
                // No credentials yet: ask the browser to show the login dialog.
                unauthorized(response);
                return;
            }

            // Expect the form "Basic <base64(username:password)>".
            StringTokenizer st = new StringTokenizer(authHeader);
            if (st.countTokens() < 2 || !st.nextToken().equalsIgnoreCase("Basic")) {
                unauthorized(response, "Invalid authentication token");
                return;
            }

            String credentials = new String(Base64.decodeBase64(st.nextToken()), StandardCharsets.UTF_8);
            int p = credentials.indexOf(":");
            if (p == -1) {
                unauthorized(response, "Invalid authentication token");
                return;
            }

            String _username = credentials.substring(0, p).trim();
            String _password = credentials.substring(p + 1).trim();
            if (!_username.equals(username) || !_password.equals(password)) {
                // Stop here: a rejected request must not continue down the chain.
                // Log only the user name, never the password.
                LOG.debug("Bad credentials for user: " + _username);
                unauthorized(response, "Bad credentials");
                return;
            }

            filterChain.doFilter(servletRequest, servletResponse);
        }

        @Override
        public void destroy() {
        }

        private void unauthorized(HttpServletResponse response, String message) throws IOException {
            response.setHeader("WWW-Authenticate", "Basic realm=\"" + realm + "\"");
            response.sendError(401, message);
        }

        private void unauthorized(HttpServletResponse response) throws IOException {
            unauthorized(response, "Unauthorized");
        }
    }
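To make the `Authorization` header handled by the filter more concrete, here is a minimal, self-contained sketch of how a client encodes `username:password` and how the value can be decoded again. It uses the JDK's `java.util.Base64` (Java 8+) rather than the commons-codec class used by the filter, and the class name `BasicAuthHeader` is hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.util.Base64;

/** Illustrative helper for the HTTP Basic scheme; the name is made up for this sketch. */
final class BasicAuthHeader {

    /** Builds the value a client sends in the Authorization header. */
    static String encode(String username, String password) {
        String credentials = username + ":" + password;
        return "Basic " + Base64.getEncoder()
                .encodeToString(credentials.getBytes(StandardCharsets.UTF_8));
    }

    /** Returns {username, password}, or null if the header is malformed. */
    static String[] decode(String header) {
        if (header == null) {
            return null;
        }
        String[] parts = header.trim().split("\\s+", 2);
        if (parts.length != 2 || !parts[0].equalsIgnoreCase("Basic")) {
            return null;
        }
        String credentials;
        try {
            credentials = new String(Base64.getDecoder().decode(parts[1]), StandardCharsets.UTF_8);
        } catch (IllegalArgumentException e) {
            return null; // the token is not valid Base64
        }
        // Split on the first colon only: the password itself may contain colons.
        int p = credentials.indexOf(':');
        if (p == -1) {
            return null;
        }
        return new String[] { credentials.substring(0, p), credentials.substring(p + 1) };
    }

    public static void main(String[] args) {
        String header = encode("admin", "admin");
        System.out.println(header); // Basic YWRtaW46YWRtaW4=
        String[] decoded = decode(header);
        System.out.println(decoded[0] + " / " + decoded[1]); // admin / admin
    }
}
```

Base64 is an encoding, not encryption, so credentials sent this way over plain HTTP are readable by anyone who can observe the traffic.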

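Build the code above into spark_filter.jar, place it on the Spark cluster, and submit the Spark application with the following command (spark.ui.filters is set through the driver's Java system properties):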

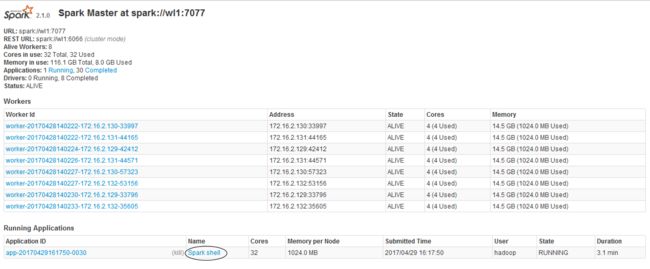

[hadoop@wl1 ~]$ spark-shell --master spark://wl1:7077 --driver-class-path /home/hadoop/testjar/spark_filter.jar --driver-java-options "-Dspark.ui.filters=BasicAuthFilter -Dspark.BasicAuthFilter.params='username=admin,password=admin,realm=20170429'"

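This time the application is submitted in Standalone client mode, so the Driver Web UI is reached through the Spark Master Web UI (circled in the figure below).

Now the magic happens: an authentication dialog pops up, produced by the custom BasicAuthFilter above. Enter the correct username and password (the ones specified with -Dspark.BasicAuthFilter.params when submitting the application) and the Spark Driver Web UI becomes accessible.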
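The `username=admin,password=admin,realm=20170429` string reaches the filter as individual init parameters, which `init` reads with `getInitParameter`. The sketch below shows one plausible way such a string is split into name/value pairs. It is an assumption about the parsing, written for illustration, and may differ in detail from what Spark does; the class name `FilterParams` is hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Illustrative parser for a "k1=v1,k2=v2" params string; not Spark's own code. */
final class FilterParams {

    static Map<String, String> parse(String params) {
        Map<String, String> result = new LinkedHashMap<>();
        for (String param : params.split(",")) {
            String[] parts = param.trim().split("=");
            // Keep only well-formed name=value pairs; anything else is skipped.
            if (parts.length == 2) {
                result.put(parts[0], parts[1]);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, String> initParams = parse("username=admin,password=admin,realm=20170429");
        System.out.println(initParams); // {username=admin, password=admin, realm=20170429}
    }
}
```

Under this scheme a value cannot itself contain `,` or `=`, which is worth keeping in mind when choosing a password for the filter.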

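Things to note:

If the application is submitted on YARN, Spark loads Hadoop's org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter by default. When a user opens the Spark application's Web UI from the YARN Web UI, the address used is the proxy address on port 8088 generated by that filter. As a result, if the BasicAuthFilter above is used at the same time, user authentication always fails, because the authentication has to interact with the web server on Spark's port 4040.

So, to control access to a Spark application's Web UI on YARN, use a Filter provided by Hadoop or HTTP Kerberos instead.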

Related links:

[Spark 2.1.0 configuration](http://spark.apache.org/docs/latest/configuration.html)

[javax servlet Filter](http://docs.oracle.com/javaee/6/api/javax/servlet/Filter.html)

[Spark 2.1.0 security](http://spark.apache.org/docs/latest/security.html)
