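Like the VGG paper covered in the previous post, this paper is a classic of deep learning. At CVPR 2015 it was a best paper candidate. Afterwards, essentially every semantic segmentation model evaluated on PASCAL VOC 2012 built on the FCN structure. Its backbone is also very close to VGG. The main architectural change is that VGG's final fully connected layers are replaced by convolutional layers in FCN, and FCN then adds in-network upsampling and skip connections on top. That makes FCN a natural paper to study right after VGG. In this post I translate the paper and read it closely. I hope you find it useful, and please point out any mistakes.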

Abstract

Convolutional networks are powerful visual models that yield hierarchies of features. We show that convolutional networks by themselves, trained end-to-end, pixels-to-pixels, exceed the state-of-the-art in semantic segmentation. Our key insight is to build “fully convolutional” networks that take input of arbitrary size and produce correspondingly-sized output with efficient inference and learning. We define and detail the space of fully convolutional networks, explain their application to spatially dense prediction tasks, and draw connections to prior models. We adapt contemporary classification networks (AlexNet [22], the VGG net [34], and GoogLeNet [35]) into fully convolutional networks and transfer their learned representations by fine-tuning [5] to the segmentation task. We then define a skip architecture that combines semantic information from a deep, coarse layer with appearance information from a shallow, fine layer to produce accurate and detailed segmentations. Our fully convolutional network achieves state-of-the-art segmentation of PASCAL VOC (20% relative improvement to 62.2% mean IU on 2012), NYUDv2, and SIFT Flow, while inference takes less than one fifth of a second for a typical image.

Abstract (translation)

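Convolutional networks are powerful visual models that yield hierarchies of features. We show that convolutional networks by themselves, trained end-to-end and pixels-to-pixels, exceed the state of the art in semantic segmentation. Our key insight is to build "fully convolutional" networks that take input of arbitrary size and produce output of corresponding size, with efficient inference and learning. We define and detail the space of fully convolutional networks, explain their application to spatially dense prediction tasks (predicting the class of every pixel), and draw connections to prior models. We adapt contemporary classification networks (AlexNet [22], the VGG net [34], and GoogLeNet [35]) into fully convolutional networks and transfer their learned representations to the segmentation task by fine-tuning [5]. We then define a skip architecture that combines semantic information from a deep, coarse layer with appearance information from a shallow, fine layer to produce accurate and detailed segmentations. Our fully convolutional network achieves state-of-the-art segmentation on PASCAL VOC (a 20% relative improvement, reaching 62.2% mean IU on VOC 2012), NYUDv2, and SIFT Flow, and inference on a typical image takes less than 0.2 seconds.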

Introduction

Convolutional networks are driving advances in recognition. Convnets are not only improving for whole-image classification [22, 34, 35], but also making progress on local tasks with structured output. These include advances in bounding box object detection [32, 12, 19], part and keypoint prediction [42, 26], and local correspondence [26, 10]. The natural next step in the progression from coarse to fine inference is to make a prediction at every pixel. Prior approaches have used convnets for semantic segmentation [30, 3, 9, 31, 17, 15, 11], in which each pixel is labeled with the class of its enclosing object or region, but with shortcomings that this work addresses.

We show that a fully convolutional network (FCN) trained end-to-end, pixels-to-pixels on semantic segmentation exceeds the state-of-the-art without further machinery. To our knowledge, this is the first work to train FCNs end-to-end (1) for pixelwise prediction and (2) from supervised pre-training. Fully convolutional versions of existing networks predict dense outputs from arbitrary-sized inputs. Both learning and inference are performed whole-image-at-a-time by dense feedforward computation and backpropagation. In-network upsampling layers enable pixelwise prediction and learning in nets with subsampled pooling.

This method is efficient, both asymptotically and absolutely, and precludes the need for the complications in other works. Patchwise training is common [30, 3, 9, 31, 11], but lacks the efficiency of fully convolutional training. Our approach does not make use of pre- and post-processing complications, including superpixels [9, 17], proposals [17, 15], or post-hoc refinement by random fields or local classifiers [9, 17]. Our model transfers recent success in classification [22, 34, 35] to dense prediction by reinterpreting classification nets as fully convolutional and fine-tuning from their learned representations. In contrast, previous works have applied small convnets without supervised pre-training [9, 31, 30].

Semantic segmentation faces an inherent tension between semantics and location: global information resolves what while local information resolves where. Deep feature hierarchies encode location and semantics in a nonlinear local-to-global pyramid. We define a skip architecture to take advantage of this feature spectrum that combines deep, coarse, semantic information and shallow, fine, appearance information in Section 4.2 (see Figure 3).

In the next section, we review related work on deep classification nets, FCNs, and recent approaches to semantic segmentation using convnets. The following sections explain FCN design and dense prediction tradeoffs, introduce our architecture with in-network upsampling and multilayer combinations, and describe our experimental framework. Finally, we demonstrate state-of-the-art results on PASCAL VOC 2011-2, NYUDv2, and SIFT Flow.

Introduction (translation)

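Convolutional networks are driving advances in recognition. Convnets are improving not only whole-image classification [22,34,35] but also local tasks with structured output, including bounding box object detection [32,12,19], part and keypoint prediction [42,26], and local correspondence [26,10]. The natural next step in the progression from coarse to fine inference is to make a prediction at every pixel. Earlier approaches have used convnets for semantic segmentation [30,3,9,31,17,15,11], labeling each pixel with the class of its enclosing object or region, but they have shortcomings that this work addresses.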

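We show that a fully convolutional network (FCN) trained end-to-end, pixels-to-pixels on semantic segmentation exceeds the state of the art without any further machinery. To our knowledge, this is the first work to train FCNs end-to-end (1) for pixel-level prediction and (2) from supervised pre-training. Fully convolutional versions of existing networks predict dense outputs from inputs of arbitrary size. Both learning and inference are performed on the whole image at once by dense feedforward computation and backpropagation. In-network upsampling layers enable pixel-level prediction and learning in nets that subsample with pooling.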

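This method is efficient both asymptotically and in absolute terms, and removes the need for the complications found in other works. Patchwise training is common [30, 3, 9, 31, 11], but lacks the efficiency of fully convolutional training. Our approach uses none of the pre- or post-processing complications such as superpixels [9,17], proposals [17,15], or post-hoc refinement by random fields or local classifiers [9,17]. Our model transfers recent success in classification [22,34,35] to dense prediction by reinterpreting classification nets as fully convolutional networks and fine-tuning from their learned representations. In contrast, previous works applied small convnets without supervised pre-training [9,31,30].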

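Semantic segmentation faces an inherent tension between semantics and location: global information resolves what something is, while local information resolves where it is. Deep feature hierarchies encode location and semantics in a nonlinear local-to-global pyramid.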

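In Section 4.2 (see Figure 3) we define a skip architecture that exploits this feature spectrum by combining deep, coarse, semantic information with shallow, fine, appearance information. In the next section we review related work on deep classification nets, FCNs, and recent approaches to semantic segmentation using convnets. The following sections explain FCN design and dense prediction tradeoffs, introduce our architecture with in-network upsampling and multilayer combinations, and describe our experimental framework. Finally, we demonstrate state-of-the-art results on PASCAL VOC 2011-2, NYUDv2, and SIFT Flow.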

Related work

Our approach draws on recent successes of deep nets for image classification [22, 34, 35] and transfer learning [5, 41]. Transfer was first demonstrated on various visual recognition tasks [5, 41], then on detection, and on both instance and semantic segmentation in hybrid proposal-classifier models [12, 17, 15]. We now re-architect and fine-tune classification nets to direct, dense prediction of semantic segmentation. We chart the space of FCNs and situate prior models, both historical and recent, in this framework.

Fully convolutional networks. To our knowledge, the idea of extending a convnet to arbitrary-sized inputs first appeared in Matan et al. [28], which extended the classic LeNet [23] to recognize strings of digits. Because their net was limited to one-dimensional input strings, Matan et al. used Viterbi decoding to obtain their outputs. Wolf and Platt [40] expand convnet outputs to 2-dimensional maps of detection scores for the four corners of postal address blocks. Both of these historical works do inference and learning fully convolutionally for detection. Ning et al. [30] define a convnet for coarse multiclass segmentation of C. elegans tissues with fully convolutional inference.

Fully convolutional computation has also been exploited in the present era of many-layered nets. Sliding window detection by Sermanet et al. [32], semantic segmentation by Pinheiro and Collobert [31], and image restoration by Eigen et al. [6] do fully convolutional inference. Fully convolutional training is rare, but used effectively by Tompson et al. [38] to learn an end-to-end part detector and spatial model for pose estimation, although they do not exposit on or analyze this method.

Alternatively, He et al. [19] discard the nonconvolutional portion of classification nets to make a feature extractor. They combine proposals and spatial pyramid pooling to yield a localized, fixed-length feature for classification. While fast and effective, this hybrid model cannot be learned end-to-end.

Dense prediction with convnets. Several recent works have applied convnets to dense prediction problems, including semantic segmentation by Ning et al. [30], Farabet et al. [9], and Pinheiro and Collobert [31]; boundary prediction for electron microscopy by Ciresan et al. [3] and for natural images by a hybrid convnet/nearest neighbor model by Ganin and Lempitsky [11]; and image restoration and depth estimation by Eigen et al. [6, 7]. Common elements of these approaches include

• small models restricting capacity and receptive fields;

• patchwise training [30, 3, 9, 31, 11];

• post-processing by superpixel projection, random field regularization, filtering, or local classification [9, 3, 11];

• input shifting and output interlacing for dense output [32, 31, 11];

• multi-scale pyramid processing [9, 31, 11];

• saturating tanh nonlinearities [9, 6, 31]; and

• ensembles [3, 11],

whereas our method does without this machinery. However, we do study patchwise training (Section 3.4) and “shift-and-stitch” dense output (Section 3.2) from the perspective of FCNs. We also discuss in-network upsampling (Section 3.3), of which the fully connected prediction by Eigen et al. [7] is a special case.

Unlike these existing methods, we adapt and extend deep classification architectures, using image classification as supervised pre-training, and fine-tune fully convolutionally to learn simply and efficiently from whole image inputs and whole image ground truths.

Hariharan et al. [17] and Gupta et al. [15] likewise adapt deep classification nets to semantic segmentation, but do so in hybrid proposal-classifier models. These approaches fine-tune an R-CNN system [12] by sampling bounding boxes and/or region proposals for detection, semantic segmentation, and instance segmentation. Neither method is learned end-to-end. They achieve state-of-the-art segmentation results on PASCAL VOC and NYUDv2 respectively, so we directly compare our standalone, end-to-end FCN to their semantic segmentation results in Section 5.

We fuse features across layers to define a nonlinear local-to-global representation that we tune end-to-end. In contemporary work Hariharan et al. [18] also use multiple layers in their hybrid model for semantic segmentation.

Related work (translation)

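Our approach draws on recent successes of deep nets in image classification [22,34,35] and transfer learning [5,41]. Transfer was first demonstrated on various visual recognition tasks [5,41], then on detection, and on both instance and semantic segmentation in hybrid proposal-classifier models [12,17,15]. We now re-architect and fine-tune classification nets for direct, dense prediction of semantic segmentation. We chart the space of FCNs and situate prior models, both historical and recent, within this framework.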

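Fully convolutional networks. To our knowledge, the idea of extending a convnet to arbitrary-sized inputs first appeared in Matan et al. [28], which extended the classic LeNet [23] to recognize strings of digits (mainly handwritten). Because their net was limited to one-dimensional input strings, Matan et al. used Viterbi decoding to obtain their outputs. Wolf and Platt [40] expanded convnet outputs to two-dimensional maps of detection scores for the four corners of postal address blocks. Both of these historical works perform inference and learning fully convolutionally for detection. Ning et al. [30] defined a convnet for coarse multiclass segmentation of C. elegans tissues with fully convolutional inference.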

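Fully convolutional computation has also been exploited in the present era of many-layered nets. Sliding window detection by Sermanet et al. [32], semantic segmentation by Pinheiro and Collobert [31], and image restoration by Eigen et al. [6] all use fully convolutional inference. Fully convolutional training is rare, but Tompson et al. [38] used it effectively to learn an end-to-end part detector and spatial model for pose estimation, although they do not expound on or analyze this method.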

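Alternatively, He et al. [19] discard the non-convolutional portion of classification nets to make a feature extractor. They combine proposals and spatial pyramid pooling to yield a localized, fixed-length feature for classification. While fast and effective, this hybrid model cannot be learned end-to-end.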

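Dense prediction with convnets. Several recent works have applied convnets to dense prediction problems, including semantic segmentation by Ning et al. [30], Farabet et al. [9], and Pinheiro and Collobert [31]; boundary prediction for electron microscopy by Ciresan et al. [3] and for natural images by a hybrid convnet/nearest neighbor model by Ganin and Lempitsky [11]; and image restoration and depth estimation by Eigen et al. [6,7]. Common elements of these approaches include: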

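• small models restricting capacity and receptive fields;

• patchwise training [30, 3, 9, 31, 11];

• post-processing by superpixel projection, random field regularization, filtering, or local classification [9, 3, 11];

• input shifting and output interlacing for dense output [32, 31, 11];

• multi-scale pyramid processing [9, 31, 11];

• saturating tanh nonlinearities [9, 6, 31]; and

• ensembles [3, 11],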

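whereas our method does without this machinery. However, we do study patchwise training (Section 3.4) and "shift-and-stitch" dense output (Section 3.2) from the perspective of FCNs. We also discuss in-network upsampling (Section 3.3), of which the fully connected prediction by Eigen et al. [7] is a special case.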

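Unlike these existing methods, we adapt and extend deep classification architectures, using image classification as supervised pre-training, and fine-tune fully convolutionally to learn simply and efficiently from whole image inputs and whole image ground truths.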

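Hariharan et al. [17] and Gupta et al. [15] likewise adapt deep classification nets to semantic segmentation, but do so in hybrid proposal-classifier models. These approaches fine-tune an R-CNN system [12] by sampling bounding boxes and/or region proposals for detection, semantic segmentation, and instance segmentation. Neither method is learned end-to-end. They achieve state-of-the-art segmentation results on PASCAL VOC and NYUDv2 respectively, so in Section 5 we directly compare our standalone, end-to-end FCN with their semantic segmentation results.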

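We fuse features across layers to define a nonlinear local-to-global representation that we tune end-to-end. In contemporary work, Hariharan et al. [18] also use multiple layers in their hybrid model for semantic segmentation.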

Fully convolutional networks

Each layer of data in a convnet is a three-dimensional array of size h × w × d, where h and w are spatial dimensions, and d is the feature or channel dimension. The first layer is the image, with pixel size h × w, and d color channels. Locations in higher layers correspond to the locations in the image they are path-connected to, which are called their receptive fields.

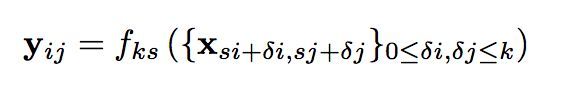

Convnets are built on translation invariance. Their basic components (convolution, pooling, and activation functions) operate on local input regions, and depend only on relative spatial coordinates. Writing $\mathbf{x}_{ij}$ for the data vector at location $(i, j)$ in a particular layer, and $\mathbf{y}_{ij}$ for the following layer, these functions compute outputs $\mathbf{y}_{ij}$ by

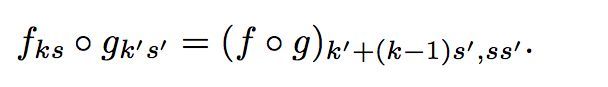

where $k$ is called the kernel size, $s$ is the stride or subsampling factor, and $f_{ks}$ determines the layer type: a matrix multiplication for convolution or average pooling, a spatial max for max pooling, or an elementwise nonlinearity for an activation function, and so on for other types of layers.
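To make the functional form concrete: the equation shown above is $\mathbf{y}_{ij} = f_{ks}\left(\{\mathbf{x}_{si+\delta i,\, sj+\delta j}\}_{0 \le \delta i, \delta j < k}\right)$. Below is a minimal NumPy sketch of a generic layer of this form. It is an illustration, not code from the paper. The helper `local_layer` and the example choices of $f_{ks}$ are hypothetical, and padding is ignored, so only "valid" windows are used.

```python
import numpy as np

def local_layer(x, k, s, f):
    """y[i, j] = f({x[s*i + di, s*j + dj] : 0 <= di, dj < k}) on 'valid' windows.

    x has shape (h, w, d); f maps one (k, k, d) window to an output vector.
    """
    h, w, _ = x.shape
    out_h, out_w = (h - k) // s + 1, (w - k) // s + 1
    return np.array([[f(x[s * i:s * i + k, s * j:s * j + k, :])
                      for j in range(out_w)]
                     for i in range(out_h)])

rng = np.random.default_rng(0)
W = rng.normal(size=(3, 3, 1, 4))                 # hypothetical 3x3 kernel, 1 -> 4 channels
conv = lambda win: np.tensordot(win, W, axes=3)   # matrix multiplication over the window
avg_pool = lambda win: win.mean(axis=(0, 1))      # average pooling (also linear)
max_pool = lambda win: win.max(axis=(0, 1))       # spatial max per channel
relu = lambda win: np.maximum(win[0, 0], 0.0)     # elementwise nonlinearity (k = 1)

x = np.arange(16, dtype=float).reshape(4, 4, 1)
print(local_layer(x, k=2, s=2, f=max_pool)[..., 0])   # 2x2 max pooling with stride 2
```

On the 4×4 ramp `x`, the max-pooling call should give `[[5, 7], [13, 15]]`. Every layer type differs only in the function `f` passed in, which is the point of the shared form.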

This functional form is maintained under composition, with kernel size and stride obeying the transformation rule

$$
f_{ks} \circ g_{k's'} = (f \circ g)_{k' + (k-1)s',\; ss'}.
$$
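The composition rule can be checked numerically. The minimal sketch below uses plain Python in 1-D for simplicity. `compose` and `input_span` are hypothetical helpers, not from the paper. It folds the rule over a stack of layers, with $g_{k's'}$ applied first and $f_{ks}$ on top. It then compares the result with a brute-force trace of which input positions one output unit can reach. The rule gives the extent of that window: it starts at $ss'i$ and spans $k' + (k-1)s'$ inputs.

```python
from functools import reduce

def compose(lower, upper):
    """(k', s') applied first, then (k, s): returns (k' + (k - 1) * s', s * s')."""
    k_low, s_low = lower
    k_up, s_up = upper
    return k_low + (k_up - 1) * s_low, s_low * s_up

def input_span(layers, out_index):
    """Brute force: input positions reachable from output `out_index` (1-D)."""
    positions = {out_index}
    for k, s in reversed(layers):  # walk from the top layer down to the input
        positions = {s * p + d for p in positions for d in range(k)}
    return positions

# Hypothetical stack: conv 3/stride 1 -> pool 2/stride 2 -> conv 3/stride 1
layers = [(3, 1), (2, 2), (3, 1)]
k, s = reduce(compose, layers)
span = input_span(layers, 3)
print(k, s, min(span), max(span))  # 8 2 6 13
```

Output unit 3 of the stack sees inputs 6 through 13, which is a window of size 8 starting at $2 \cdot 3$, matching the folded $(k, s) = (8, 2)$.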

While a general deep net computes a general nonlinear function, a net with only layers of this form computes a nonlinear filter, which we call a deep filter or fully convolutional network. An FCN naturally operates on an input of any size, and produces an output of corresponding (possibly resampled) spatial dimensions.

A real-valued loss function composed with an FCN defines a task. If the loss function is a sum over the spatial dimensions of the final layer, $\ell(\mathbf{x};\theta) = \sum_{ij} \ell'(\mathbf{x}_{ij};\theta)$, its gradient will be a sum over the gradients of each of its spatial components. Thus stochastic gradient descent on $\ell$ computed on whole images will be the same as stochastic gradient descent on $\ell'$, taking all of the final layer receptive fields as a minibatch.
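The decomposition of the gradient can be checked directly. The sketch below assumes a per-pixel softmax cross-entropy for $\ell'$, which is a common choice for segmentation. `pixel_loss_and_grad` is a hypothetical helper. The code verifies by finite differences that the gradient of the summed loss, taken at the scores of one location, equals that location's own gradient. This holds because no other term of the sum depends on those scores.

```python
import numpy as np

def pixel_loss_and_grad(scores, labels):
    """Per-pixel softmax cross-entropy l'(x_ij) and its gradient w.r.t. the scores.

    scores: (h, w, c) class scores; labels: (h, w) integer class ids.
    """
    z = scores - scores.max(axis=-1, keepdims=True)        # numerically stable softmax
    p = np.exp(z) / np.exp(z).sum(axis=-1, keepdims=True)
    h, w = labels.shape
    ii, jj = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    loss_map = -np.log(p[ii, jj, labels])                   # one loss term per location
    grad = p.copy()
    grad[ii, jj, labels] -= 1.0                             # d l'_ij / d scores[i, j]
    return loss_map, grad

rng = np.random.default_rng(0)
scores = rng.normal(size=(4, 5, 3))
labels = rng.integers(0, 3, size=(4, 5))
loss_map, grad = pixel_loss_and_grad(scores, labels)
total_loss = loss_map.sum()                                 # l(x) = sum_ij l'(x_ij)

# Finite-difference derivative of the whole-image loss w.r.t. one score entry
eps = 1e-6
bumped = scores.copy()
bumped[2, 3, 1] += eps
numeric = (pixel_loss_and_grad(bumped, labels)[0].sum() - total_loss) / eps
print(numeric, grad[2, 3, 1])                               # should agree closely
```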

When these receptive fields overlap significantly, both feedforward computation and backpropagation are much more efficient when computed layer-by-layer over an entire image instead of independently patch-by-patch.

We next explain how to convert classification nets into fully convolutional nets that produce coarse output maps. For pixelwise prediction, we need to connect these coarse outputs back to the pixels. Section 3.2 describes a trick, fast scanning [13], introduced for this purpose. We gain insight into this trick by reinterpreting it as an equivalent network modification. As an efficient, effective alternative, we introduce deconvolution layers for upsampling in Section 3.3. In Section 3.4 we consider training by patchwise sampling, and give evidence in Section 4.3 that our whole image training is faster and equally effective.

3.1. Adapting classifiers for dense prediction

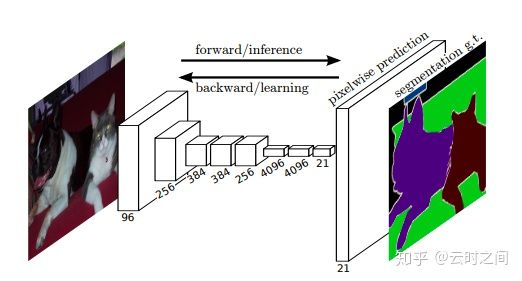

Typical recognition nets, including LeNet [23], AlexNet [22], and its deeper successors [34, 35], ostensibly take fixed-sized inputs and produce non-spatial outputs. The fully connected layers of these nets have fixed dimensions and throw away spatial coordinates. However, these fully connected layers can also be viewed as convolutions with kernels that cover their entire input regions. Doing so casts them into fully convolutional networks that take input of any size and output classification maps. This transformation is illustrated in Figure 2.
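Reinterpreting a fully connected layer as a convolution amounts to reshaping its weights. In the minimal NumPy sketch below, all sizes are hypothetical. For VGG16, the corresponding step turns fc6 into a 7×7 convolution over the pool5 feature map, and fc7/fc8 into 1×1 convolutions. The sketch reshapes an fc weight matrix into convolution kernels that span the whole input. On an input of the original size, the result is a 1×1 map equal to the fc output. On a larger input, the same parameters produce a spatial map of scores.

```python
import numpy as np

rng = np.random.default_rng(0)
C, H, W, N_OUT = 8, 7, 7, 10          # hypothetical: fc layer on a 7x7x8 feature map

fc_weight = rng.normal(size=(N_OUT, C * H * W))   # fully connected weights
fc_bias = rng.normal(size=N_OUT)

# The same parameters viewed as N_OUT convolution kernels of size C x H x W
conv_weight = fc_weight.reshape(N_OUT, C, H, W)

def conv2d_valid(x, weight, bias):
    """Plain 'valid' convolution (cross-correlation), stride 1. x: (C, h, w)."""
    n_out, _, kh, kw = weight.shape
    _, h, w = x.shape
    out = np.empty((n_out, h - kh + 1, w - kw + 1))
    for i in range(h - kh + 1):
        for j in range(w - kw + 1):
            window = x[:, i:i + kh, j:j + kw]
            out[:, i, j] = np.tensordot(weight, window, axes=3) + bias
    return out

# Input of exactly the original size: a 1x1 map identical to the fc output
x = rng.normal(size=(C, H, W))
fc_out = fc_weight @ x.reshape(-1) + fc_bias
conv_out = conv2d_valid(x, conv_weight, fc_bias)
print(conv_out.shape, np.allclose(conv_out[:, 0, 0], fc_out))   # (10, 1, 1) True

# Larger input: the same layer now yields a spatial map of class scores
big = rng.normal(size=(C, H + 4, W + 3))
print(conv2d_valid(big, conv_weight, fc_bias).shape)              # (10, 5, 4)
```

The reshape works because `x.reshape(-1)` and `fc_weight.reshape(N_OUT, C, H, W)` both use the same row-major (channel, row, column) ordering.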

Furthermore, while the resulting maps are equivalent to the evaluation of the original net on particular input patches, the computation is highly amortized over the overlapping regions of those patches. For example, while AlexNet takes 1.2 ms (on a typical GPU) to infer the classification scores of a 227×227 image, the fully convolutional net takes 22 ms to produce a 10×10 grid of outputs from a 500×500 image, which is more than 5 times faster than the naïve approach¹.

The spatial output maps of these convolutionalized models make them a natural choice for dense problems like semantic segmentation. With ground truth available at every output cell, both the forward and backward passes are straightforward, and both take advantage of the inherent computational efficiency (and aggressive optimization) of convolution. The corresponding backward times for the AlexNet example are 2.4 ms for a single image and 37 ms for a fully convolutional 10 × 10 output map, resulting in a speedup similar to that of the forward pass.
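As a rough check of the quoted speedups, assume the naïve approach runs the 227×227 network separately for each of the 100 cells of the 10×10 output grid, using the single-image times given above. This reading of "naïve" is an assumption.

$$
\begin{aligned}
\text{forward: } & 10 \times 10 = 100 \text{ patches}, \quad 100 \times 1.2\,\text{ms} = 120\,\text{ms}, \quad 120 / 22 \approx 5.5\times \\
\text{backward: } & 100 \times 2.4\,\text{ms} = 240\,\text{ms}, \quad 240 / 37 \approx 6.5\times
\end{aligned}
$$

Both ratios are consistent with the "more than 5 times" forward speedup and the "similar" backward speedup stated in the text.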

While our reinterpretation of classification nets as fully convolutional yields output maps for inputs of any size, the output dimensions are typically reduced by subsampling. The classification nets subsample to keep filters small and computational requirements reasonable. This coarsens the output of a fully convolutional version of these nets, reducing it from the size of the input by a factor equal to the pixel stride of the receptive fields of the output units.
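For the VGG16 backbone, the reduction factor can be computed by folding the composition rule over the convolutional trunk. The layer list below follows the standard VGG16 configuration: 3×3 convolutions with stride 1, and five 2×2 max-pooling layers with stride 2. Padding does not change kernel extent or stride, so it is omitted. This is a sketch, and `receptive_field_and_stride` is a hypothetical helper.

```python
# (kernel size, stride) for each layer of VGG16's convolutional trunk, conv1_1 .. pool5
VGG16_TRUNK = (
    [(3, 1)] * 2 + [(2, 2)] +
    [(3, 1)] * 2 + [(2, 2)] +
    [(3, 1)] * 3 + [(2, 2)] +
    [(3, 1)] * 3 + [(2, 2)] +
    [(3, 1)] * 3 + [(2, 2)]
)

def receptive_field_and_stride(layers):
    """Fold k' + (k - 1) s' and s s' over the layers, starting from the identity (1, 1)."""
    rf, stride = 1, 1
    for k, s in layers:
        rf += (k - 1) * stride
        stride *= s
    return rf, stride

rf, stride = receptive_field_and_stride(VGG16_TRUNK)
print(rf, stride)  # 212 32
```

With a total stride of 32, each pool5 unit of the fully convolutional VGG16 steps by 32 input pixels. The output map is therefore roughly 1/32 of the input size in each dimension, which is the coarseness the following sections address.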

3.2. Shift-and-stitch is filter rarefaction

Dense predictions can be obtained from coarse outputs by stitching together output from shifted versions of the input. If the output is downsampled by a factor of $f$, shift the input $x$ pixels to the right and $y$ pixels down, once for every $(x, y)$ such that $0 \le x, y < f$. Process each of these $f^2$ inputs, and interlace the outputs so that the predictions correspond to the pixels at the centers of their receptive fields.
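Here is a 1-D sketch of shift-and-stitch under simplifying assumptions. The "net" is a single convolution with stride (downsampling factor) $f$, and padding is ignored. Shifting by $t$ is implemented by dropping the first $t$ samples, which is one possible indexing convention. Interlacing the $f$ coarse outputs reproduces the dense stride-1 output exactly. The helper names are hypothetical.

```python
import numpy as np

def corr(x, kernel, stride=1):
    """'Valid' 1-D cross-correlation with a stride, as a conv layer computes it."""
    n_out = (len(x) - len(kernel)) // stride + 1
    return np.array([np.dot(kernel, x[stride * m:stride * m + len(kernel)])
                     for m in range(n_out)])

def shift_and_stitch(x, kernel, f):
    """Run the stride-f layer on f shifted inputs and interlace the coarse outputs."""
    dense = np.empty(len(x) - len(kernel) + 1)
    for t in range(f):                      # shift t: drop the first t samples
        dense[t::f] = corr(x[t:], kernel, stride=f)
    return dense

rng = np.random.default_rng(0)
x, kernel = rng.normal(size=32), rng.normal(size=5)
stitched = shift_and_stitch(x, kernel, f=4)
print(np.allclose(stitched, corr(x, kernel, stride=1)))   # True
```

The cost is visible in the loop: the strided layer runs $f$ times in 1-D, and $f^2$ times in 2-D.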

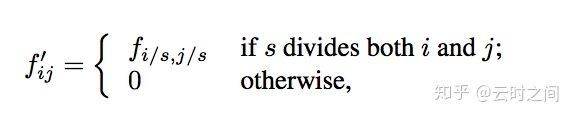

Although performing this transformation naïvely increases the cost by a factor of $f^2$, there is a well-known trick for efficiently producing identical results [13, 32] known to the wavelet community as the algorithme à trous [27]. Consider a layer (convolution or pooling) with input stride $s$, and a subsequent convolution layer with filter weights $f_{ij}$ (eliding the irrelevant feature dimensions). Setting the lower layer’s input stride to 1 upsamples its output by a factor of $s$. However, convolving the original filter with the upsampled output does not produce the same result as shift-and-stitch, because the original filter only sees a reduced portion of its (now upsampled) input. To reproduce the trick, rarefy the filter by enlarging it as

$$
f'_{ij} =
\begin{cases}
f_{i/s,\, j/s} & \text{if } s \text{ divides both } i \text{ and } j,\\
0 & \text{otherwise}
\end{cases}
$$

(with $i$ and $j$ zero-based). Reproducing the full net output of the trick involves repeating this filter enlargement layer-by-layer until all subsampling is removed. (In practice, this can be done efficiently by processing subsampled versions of the upsampled input.)
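The equivalence between shift-and-stitch and filter rarefaction can be checked on a two-layer 1-D example. This sketch uses hypothetical helpers and "valid" convolutions with no padding. The lower layer has filter `a` and input stride $s$, and the upper layer has filter `b` with stride 1. Shift-and-stitch over $s$ shifts matches a two-step alternative: run the lower layer at stride 1, then run the upper layer with the rarefied filter $b'$. The rarefied filter inserts $s-1$ zeros between taps, which is what is now commonly called a dilated convolution. Removing the stride but keeping the original filter does not match.

```python
import numpy as np

def corr(x, kernel, stride=1):
    n_out = (len(x) - len(kernel)) // stride + 1
    return np.array([np.dot(kernel, x[stride * m:stride * m + len(kernel)])
                     for m in range(n_out)])

def rarefy(f, s):
    """f'[i] = f[i / s] if s divides i, else 0 (zero-based): s - 1 zeros between taps."""
    out = np.zeros(s * (len(f) - 1) + 1)
    out[::s] = f
    return out

def two_layer_net(x, a, b, s):
    """Lower layer: filter a with input stride s; upper layer: filter b, stride 1."""
    return corr(corr(x, a, stride=s), b)

def shift_and_stitch(x, a, b, s):
    dense = np.empty(len(x) - len(a) + 1 - s * (len(b) - 1))
    for t in range(s):
        dense[t::s] = two_layer_net(x[t:], a, b, s)
    return dense

def a_trous(x, a, b, s):
    """Lower layer at stride 1, upper layer with the rarefied filter."""
    return corr(corr(x, a, stride=1), rarefy(b, s))

rng = np.random.default_rng(1)
x, a, b = rng.normal(size=40), rng.normal(size=3), rng.normal(size=4)
print(np.allclose(shift_and_stitch(x, a, b, s=2), a_trous(x, a, b, s=2)))   # True
naive = corr(corr(x, a, stride=1), b)   # stride removed but filter NOT rarefied
```

The à trous version does a single pass at the dense resolution instead of $s$ separate passes. It also generalizes layer by layer when several strided layers are stacked.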

Decreasing subsampling within a net is a tradeoff: the filters see finer information, but have smaller receptive fields and take longer to compute. The shift-and-stitch trick is another kind of tradeoff: the output is denser without decreasing the receptive field sizes of the filters, but the filters are prohibited from accessing information at a finer scale than their original design.

Although we have done preliminary experiments with this trick, we do not use it in our model. We find learning through upsampling, as described in the next section, to be more effective and efficient, especially when combined with the skip layer fusion described later on.

3.3. Upsampling is backwards strided convolution

Another way to connect coarse outputs to dense pixels is interpolation. For instance, simple bilinear interpolation computes each output yij from the nearest four inputs by a linear map that depends only on the relative positions of the input and output cells.

In a sense, upsampling with factor f is convolution with a fractional input stride of 1/f. So long as f is integral, a natural way to upsample is therefore backwards convolution (sometimes called deconvolution) with an output stride of f. Such an operation is trivial to implement, since it simply reverses the forward and backward passes of convolution. Thus upsampling is performed in-network for end-to-end learning by backpropagation from the pixelwise loss.
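The claim that backwards strided convolution "simply reverses the forward and backward passes of convolution" can be stated as an adjoint identity. Deep learning frameworks expose the same operation, for example PyTorch's `torch.nn.ConvTranspose2d`. The minimal 1-D NumPy sketch below uses hypothetical helper names. It implements upsampling by a factor $f$ as a scatter-add with output stride $f$. It then checks that $\langle \mathrm{conv}_f(x), y\rangle = \langle x, \mathrm{deconv}_f(y)\rangle$.

```python
import numpy as np

def strided_corr(x, w, stride):
    """Forward pass of a 1-D strided convolution layer ('valid', no padding)."""
    n_out = (len(x) - len(w)) // stride + 1
    return np.array([np.dot(w, x[stride * m:stride * m + len(w)]) for m in range(n_out)])

def conv_transpose(y, w, stride):
    """Backwards strided convolution: input y[m] adds y[m] * w into the output starting
    at position stride * m, so the output is about `stride` times longer than y."""
    out = np.zeros(stride * (len(y) - 1) + len(w))
    for m, v in enumerate(y):
        out[stride * m:stride * m + len(w)] += v * w
    return out

rng = np.random.default_rng(0)
f = 4                                        # upsampling factor (output stride)
w = rng.normal(size=2 * f)                   # filter, learnable in a real network
y = rng.normal(size=10)                      # coarse input
x = rng.normal(size=f * (len(y) - 1) + len(w))   # fine signal of matching length
up = conv_transpose(y, w, stride=f)
# The upsampling layer's forward pass is the strided convolution's backward pass
print(np.isclose(strided_corr(x, w, f) @ y, x @ up))   # True
```

Because the two operations are adjoint, the backward pass of the upsampling layer is a strided convolution. Gradients from a pixelwise loss can therefore flow through it with no special machinery.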

Note that the deconvolution filter in such a layer need not be fixed (e.g., to bilinear upsampling), but can be learned. A stack of deconvolution layers and activation functions can even learn a nonlinear upsampling.
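A common way to set up such a learnable deconvolution layer is to initialize its filter to bilinear interpolation and let training adjust it. The sketch below assumes the recipe widely used for FCN-style models, rather than anything specified in this section. That recipe uses a kernel of size $2f - (f \bmod 2)$ whose weights fall off linearly from the center. The code checks in 1-D that the resulting deconvolution linearly interpolates a ramp away from the borders. The 2-D filter is the outer product of the 1-D one. `bilinear_kernel` is a hypothetical helper.

```python
import numpy as np

def bilinear_kernel(f):
    """1-D and 2-D bilinear upsampling weights for integer factor f (assumed recipe)."""
    size = 2 * f - f % 2
    center = f - 1 if size % 2 == 1 else f - 0.5
    k1d = 1 - np.abs(np.arange(size) - center) / f
    return k1d, np.outer(k1d, k1d)

def conv_transpose(y, w, stride):
    """Scatter-add deconvolution with output stride `stride` (no cropping)."""
    out = np.zeros(stride * (len(y) - 1) + len(w))
    for m, v in enumerate(y):
        out[stride * m:stride * m + len(w)] += v * w
    return out

k1d, k2d = bilinear_kernel(2)
print(k1d)                                   # [0.25 0.75 0.75 0.25]
ramp = np.arange(6, dtype=float)             # a linear signal on the coarse grid
up = conv_transpose(ramp, k1d, stride=2)
# Away from the borders each output mixes its two nearest coarse neighbours linearly;
# output index p lands at coarse coordinate (p - 1.5) / 2 because nothing is cropped.
interior = np.arange(2, 2 * len(ramp))
print(np.allclose(up[interior], (interior - 1.5) / 2))   # True
```

Since the filter is just the initial value of the layer's weights, training is free to move away from bilinear interpolation toward whatever upsampling the loss favors.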

In our experiments, we find that in-network upsampling is fast and effective for learning dense prediction. Our best segmentation architecture uses these layers to learn to upsample for refined prediction in Section 4.2.

3.4. Patchwise training is loss sampling

In stochastic optimization, gradient computation is driven by the training distribution. Both patchwise training and fully convolutional training can be made to produce any distribution, although their relative computational efficiency depends on overlap and minibatch size. Whole image fully convolutional training is identical to patchwise training where each batch consists of all the receptive fields of the units below the loss for an image (or collection of images). While this is more efficient than uniform sampling of patches, it reduces the number of possible batches. However, random selection of patches within an image may be recovered simply. Restricting the loss to a randomly sampled subset of its spatial terms (or, equivalently, applying a DropConnect mask [39] between the output and the loss) excludes patches from the gradient computation.
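Restricting the loss to a random subset of its spatial terms can be sketched as a Bernoulli mask on the per-pixel loss map. This is an illustration under stated assumptions. `loss_map` stands in for the per-location terms $\ell'(\mathbf{x}_{ij};\theta)$, `keep_prob` is a hypothetical parameter, and no rescaling by `1 / keep_prob` is applied. Masked-out locations get zero gradient, so their patches drop out of the update as if they had never been sampled.

```python
import numpy as np

def sampled_loss(loss_map, keep_prob, rng):
    """Sum of a random subset of the spatial loss terms.

    A Bernoulli mask (the DropConnect-style mask between output and loss) keeps each
    term with probability keep_prob; the gradient w.r.t. a dropped term is zero.
    """
    mask = rng.random(loss_map.shape) < keep_prob
    return (loss_map * mask).sum(), mask

rng = np.random.default_rng(0)
loss_map = rng.random((8, 8))               # stand-in for per-location losses l'(x_ij)
total, mask = sampled_loss(loss_map, keep_prob=0.25, rng=rng)
grad_wrt_terms = mask.astype(float)         # d total / d loss_map[i, j]
print(f"kept {mask.sum()} of {mask.size} locations, sampled loss {total:.3f}")
```

The forward and backward passes still run over the whole image, so when kept locations overlap, the shared computation is reused. That is the speedup the next paragraph refers to.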

If the kept patches still have significant overlap, fully convolutional computation will still speed up training. If gradients are accumulated over multiple backward passes, batches can include patches from several images.²

Sampling in patchwise training can correct class imbalance [30, 9, 3] and mitigate the spatial correlation of dense patches [31, 17]. In fully convolutional training, class balance can also be achieved by weighting the loss, and loss sampling can be used to address spatial correlation.
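Class balancing by loss weighting can be sketched by scaling each per-pixel term by a weight for its ground-truth class. The inverse-frequency weights below are an assumption chosen for illustration, since the text does not specify a weighting scheme. With these weights, each class contributes the same total to the loss even though one class dominates the label map.

```python
import numpy as np

def weighted_pixel_loss(loss_map, labels, class_weights):
    """Sum of per-pixel losses, each scaled by the weight of its ground-truth class."""
    return (class_weights[labels] * loss_map).sum()

labels = np.array([[0, 0, 0, 1],
                   [0, 0, 0, 1],
                   [0, 0, 0, 2]])                     # class 0 dominates the image
loss_map = np.ones(labels.shape)                      # pretend each pixel has loss 1
counts = np.bincount(labels.ravel(), minlength=3)     # pixels per class: 9, 2, 1
weights = counts.sum() / (len(counts) * counts)       # inverse frequency (assumption)
per_class = np.bincount(labels.ravel(), weights=(weights[labels] * loss_map).ravel())
print(per_class, weighted_pixel_loss(loss_map, labels, weights))
```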

We explore training with sampling in Section 4.3, and do not find that it yields faster or better convergence for dense prediction. Whole image training is effective and efficient.

Fully convolutional networks (translation)

P.S. Off to bed now; I'll continue tomorrow. Translating one section a day, reading English papers will be no problem ^_^