Ceph Cluster Deployment and Routine Operations

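Contents

- I. Environment Preparation

- 1. Hostnames

- 2. Disable the firewall and SELinux

- 3. Configure /etc/hosts

- 4. Set up passwordless SSH

- 5. Configure the YUM repositories

- 6. Configure the NTP time service

- II. Building the Ceph Cluster

- III. Expanding the Ceph Cluster

- IV. OSD Data Recovery

- 1. Simulate a failure

- 2. Restore the OSD to the cluster

- V. Routine Ceph Maintenance Commands

- 1. Create the mgr service

- 2. Create pools

- 3. Delete a pool

- 4. Rename a pool

- 5. View ceph command help

- 6. Configure the Ceph internal communication network

- VI. Building an Offline centos_ceph Package Repository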

I. Environment Preparation

The IP allocation for each node is shown in the table below:

| OS | Hostname | VM network IP | NAT network IP | Disks | Memory | CPU |
|---|---|---|---|---|---|---|
| CentOS 7 | ceph01 | 192.168.100.101 | 192.168.11.134 | 20G+1024G | 4G | 2 cores, 2 threads |
| CentOS 7 | ceph02 | 192.168.100.102 | 192.168.11.135 | 20G+1024G | 4G | 2 cores, 2 threads |
| CentOS 7 | ceph03 | 192.168.100.103 | 192.168.11.136 | 20G+1024G | 4G | 2 cores, 2 threads |

1. Hostnames

# Set the hostname on each of the three nodes, one command per node

[root@localhost ~]# hostnamectl set-hostname ceph01

[root@localhost ~]# hostnamectl set-hostname ceph02

[root@localhost ~]# hostnamectl set-hostname ceph03

2. Disable the firewall and SELinux

# Run on all three nodes; ceph01 is shown as the example

[root@ceph01 ~]# systemctl stop firewalld

[root@ceph01 ~]# systemctl disable firewalld

[root@ceph01 ~]# setenforce 0

[root@ceph01 ~]# sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

3. Configure /etc/hosts

# Run on all three nodes; ceph01 is shown as the example

[root@ceph01 ~]# vim /etc/hosts

192.168.100.101 ceph01

192.168.100.102 ceph02

192.168.100.103 ceph03

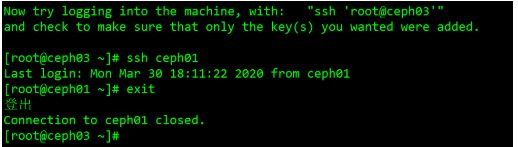
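
Editing /etc/hosts by hand on three nodes invites typos and duplicate lines. The following is a minimal sketch, not part of the original procedure, that appends an entry only when the hostname is not already listed. `add_host_entry` is a hypothetical helper name, and its optional third argument exists only so the function can be tried against a scratch file first.

```shell
# Hypothetical helper: append "IP hostname" to a hosts file unless the
# hostname is already listed there.
add_host_entry() {
    ip="$1"
    name="$2"
    file="${3:-/etc/hosts}"
    # -w matches whole words, so ceph01 does not match ceph010
    if ! grep -qw -- "$name" "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Usage on each node (as root):
#   add_host_entry 192.168.100.101 ceph01
#   add_host_entry 192.168.100.102 ceph02
#   add_host_entry 192.168.100.103 ceph03
```

Running the three calls a second time should leave the file unchanged, which makes the step safe to repeat.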

4. Set up passwordless SSH

# Run on all three nodes; ceph01 is shown as the example

[root@ceph01 ~]# ssh-keygen

[root@ceph01 ~]# ssh-copy-id root@ceph01

[root@ceph01 ~]# ssh-copy-id root@ceph02

[root@ceph01 ~]# ssh-copy-id root@ceph03
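
Since the same `ssh-copy-id` call is repeated for every node, it can be wrapped in a loop. This is a sketch under assumptions: the `run` wrapper and the `DRY_RUN` switch are hypothetical additions that print each command instead of executing it, so the loop can be checked before any key is copied.

```shell
# Node list taken from the table at the top of this article.
NODES="ceph01 ceph02 ceph03"

# Hypothetical wrapper: print the command instead of running it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Copy the local public key to every node, including the local one.
distribute_key() {
    for node in $NODES; do
        run ssh-copy-id "root@$node" || return 1
    done
}

# Usage: create a key once if none exists, then distribute it.
#   [ -f ~/.ssh/id_rsa ] || ssh-keygen -t rsa -N '' -f ~/.ssh/id_rsa
#   distribute_key
```

Each node still prompts for the root password once; after that, `ssh root@ceph02` from this node should log in without a prompt.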

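5. Configure the YUM repositories

# Run on all three nodes; ceph01 is shown as the example

[root@ceph01 ~]# vim /etc/yum.conf

keepcache=1 //enable the package cache; change this on all three nodes

[root@ceph01 ~]# yum -y install wget curl net-tools bash-completion //install wget, curl, net-tools and bash-completion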

[root@ceph01 ~]# cd /etc/yum.repos.d/

[root@ceph01 yum.repos.d]# mkdir backup

[root@ceph01 yum.repos.d]# mv C* backup

[root@ceph01 yum.repos.d]# wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

[root@ceph01 yum.repos.d]# wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

[root@ceph01 yum.repos.d]# cat << 'EOF' > /etc/yum.repos.d/ceph.repo

[ceph]

name=Ceph packages for

baseurl=https://mirrors.aliyun.com/ceph/rpm-mimic/el7/$basearch

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc

priority=1

[ceph-noarch]

name=Ceph noarch packages

baseurl=https://mirrors.aliyun.com/ceph/rpm-mimic/el7/noarch/

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc

priority=1

[ceph-source]

name=Ceph source packages

baseurl=https://mirrors.aliyun.com/ceph/rpm-mimic/el7/SRPMS/

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc

priority=1

EOF

[root@ceph03 yum.repos.d]# cat ceph.repo

[root@ceph03 yum.repos.d]# yum update -y //update the installed packages from the new repositories

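6. Configure the NTP time service

① Configure ceph01 as the time server

[root@ceph01 ~]# yum -y install ntpdate ntp

[root@ceph01 ~]# ntpdate ntp1.aliyun.com //sync with the Aliyun NTP server

[root@ceph01 ~]# clock -w //write the current system time to the hardware clock (CMOS)

[root@ceph01 ~]# vim /etc/ntp.conf //clear the file and add the following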

driftfile /var/lib/ntp/drift

restrict default nomodify

restrict 127.0.0.1

restrict ::1

restrict 192.168.100.0 mask 255.255.255.0 nomodify notrap

fudge 127.127.1.0 stratum 10

server 127.127.1.0

includefile /etc/ntp/crypto/pw

keys /etc/ntp/keys

disable monitor

[root@ceph01 ~]# systemctl start ntpd

[root@ceph01 ~]# systemctl enable ntpd

② Sync ceph02 as a client of ceph01

[root@ceph02 ~]# yum -y install ntpdate

[root@ceph02 ~]# ntpdate ceph01

[root@ceph02 ~]# crontab -e

*/2 * * * * /usr/bin/ntpdate ceph01 >> /var/log/ntpdate.log

[root@ceph02 ~]# systemctl restart crond

[root@ceph02 ~]# crontab -l

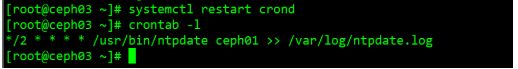

③ Sync ceph03 as a client of ceph01

[root@ceph03 ~]# yum -y install ntpdate

[root@ceph03 ~]# ntpdate ceph01

[root@ceph03 ~]# crontab -e

*/2 * * * * /usr/bin/ntpdate ceph01 >> /var/log/ntpdate.log

[root@ceph03 ~]# systemctl restart crond

[root@ceph03 ~]# crontab -l

II. Building the Ceph Cluster

Build the cluster from the online repositories

# Log in to ceph01

## mkdir /etc/ceph

## yum -y install http://download.ceph.com/rpm-mimic/el7/noarch/ceph-deploy-2.0.1-0.noarch.rpm

## yum -y install python-setuptools

## cd /etc/ceph

## ceph-deploy install ceph01 ceph02 ceph03

## scp ceph.repo epel.repo root@ceph02:/etc/yum.repos.d

## scp ceph.repo epel.repo root@ceph03:/etc/yum.repos.d

## yum -y install ceph ceph-radosgw (on all three hosts)

1. On all three nodes, create the ceph directory and install ceph

[root@ceph01 ~]# mkdir /etc/ceph

[root@ceph01 ~]# yum -y install ceph

2. On ceph01, install the ceph-deploy batch deployment tool

[root@ceph01 ~]# yum -y install python-setuptools

[root@ceph01 ~]# yum -y install ceph-deploy

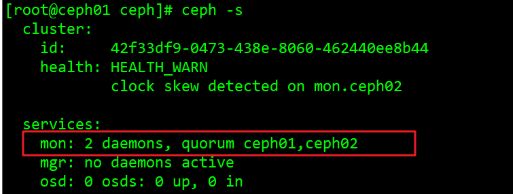

3. Create the mons from ceph01

[root@ceph01 ~]# cd /etc/ceph

[root@ceph01 ~]# ceph-deploy new ceph01 ceph02

4. On ceph01, initialize the mons and gather the keys

[root@ceph01 ~]# cd /etc/ceph

[root@ceph01 ~]# ceph-deploy mon create-initial

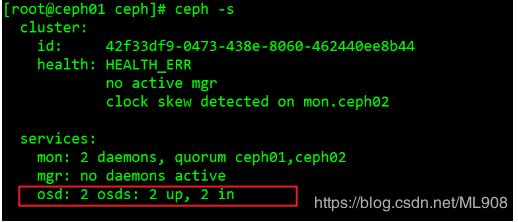

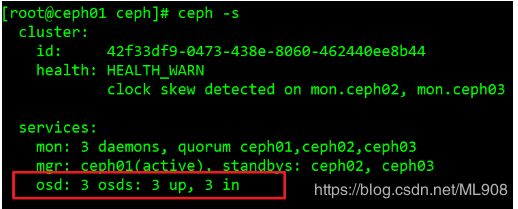

[root@ceph01 ~]# ceph -s //check the cluster status

5. Create the OSDs from ceph01

[root@ceph01 ~]# ceph-deploy osd create --data /dev/sdb ceph01

[root@ceph01 ~]# ceph-deploy osd create --data /dev/sdb ceph02

[root@ceph01 ~]# ceph -s //the status should now show 2 OSDs

[root@ceph01 ~]# ceph osd tree

[root@ceph01 ~]# ceph osd stat

6. From ceph01, push the configuration file and the admin keyring to ceph01 and ceph02

[root@ceph01 ~]# ceph-deploy admin ceph01 ceph02

7. On both ceph01 and ceph02, add read permission to the keyring

[root@ceph01 ~]# chmod +r /etc/ceph/ceph.client.admin.keyring

[root@ceph02 ~]# chmod +r /etc/ceph/ceph.client.admin.keyring

[root@ceph01 ~]# ceph -s

III. Expanding the Ceph Cluster

1. Log in to ceph01 and add the ceph03 OSD to the cluster

[root@ceph01 ~]# ceph-deploy osd create --data /dev/sdb ceph03

[root@ceph01 ~]# ceph -s

[root@ceph01 ~]# ceph-deploy mon add ceph03

[root@ceph01 ~]# ceph -s

[root@ceph01 ~]# vim /etc/ceph/ceph.conf

mon_initial_members = ceph01,ceph02,ceph03 //add ceph03

mon_host = 192.168.100.101,192.168.100.102,192.168.100.103 //add 192.168.100.103

# Push the configuration file to ceph01, ceph02 and ceph03

[root@ceph01 ~]# cd /etc/ceph/

[root@ceph01 ceph]# ceph-deploy --overwrite-conf config push ceph01 ceph02 ceph03

# Restart the mon service on all three nodes

[root@ceph01 ~]# systemctl restart ceph-mon.target

# If you do not know the name of the mon service, list it with the following command

systemctl list-unit-files | grep mon
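
The restart has to happen on every node, while the transcript shows it only on ceph01. A minimal sketch of doing it from ceph01 over the passwordless SSH configured earlier follows; `restart_mons` is a hypothetical helper name, and restarting the monitors one after another without checking quorum in between is an assumption that suits a lab cluster rather than production.

```shell
# Node list taken from the table at the top of this article.
NODES="ceph01 ceph02 ceph03"

# Restart the monitor target on each node in turn; stop at the first failure.
restart_mons() {
    for node in $NODES; do
        echo "restarting ceph-mon.target on $node"
        ssh "root@$node" systemctl restart ceph-mon.target || return 1
    done
}

# Usage from ceph01:
#   restart_mons && ceph -s
```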

IV. OSD Data Recovery

1. Simulate a failure

# Log in to ceph03 and copy down the OSD information first

[root@ceph01 ~]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 2.99696 root default

-3 0.99899 host ceph01

0 hdd 0.99899 osd.0 up 1.00000 1.00000

-5 0.99899 host ceph02

1 hdd 0.99899 osd.1 up 1.00000 1.00000

-7 0.99898 host ceph03

2 hdd 0.99898 osd.2 up 1.00000 1.00000

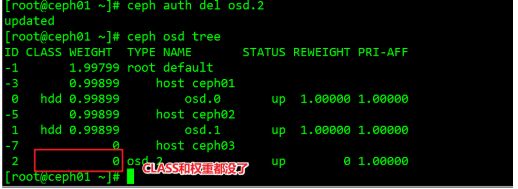

[root@ceph01 ceph]# ceph osd out osd.2

[root@ceph01 ceph]# ceph osd tree

[root@ceph01 ceph]# ceph osd crush remove osd.2

# Delete the authentication key for osd.2

[root@ceph01 ceph]# ceph auth del osd.2

[root@ceph01 ceph]# ceph osd tree

[root@ceph01 ceph]# ceph osd rm osd.2

# Restart the OSD service on ceph03

[root@ceph03 ~]# systemctl restart ceph-osd.target

[root@ceph03 ~]# ceph osd tree
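
The four removal commands above always run in the same order, so they can be grouped into one function. This is a sketch rather than the author's tooling: `remove_osd` is a hypothetical name, and it only chains the commands already shown, stopping at the first one that fails.

```shell
# Remove an OSD from the cluster maps in the order used above:
# mark it out, drop it from CRUSH, delete its key, remove the OSD id.
remove_osd() {
    id="$1"
    ceph osd out "osd.$id" &&
    ceph osd crush remove "osd.$id" &&
    ceph auth del "osd.$id" &&
    ceph osd rm "osd.$id"
}

# Usage on a node with the admin keyring:
#   remove_osd 2
#   ceph osd tree
```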

2. Restore the OSD to the cluster

[root@ceph03 ~]# df -hT //find the mount point of the ceph OSD

Filesystem Type Size Used Avail Use% Mounted on

devtmpfs devtmpfs 1.9G 0 1.9G 0% /dev

tmpfs tmpfs 1.9G 0 1.9G 0% /dev/shm

tmpfs tmpfs 1.9G 22M 1.9G 2% /run

tmpfs tmpfs 1.9G 0 1.9G 0% /sys/fs/cgroup

/dev/sda3 xfs 17G 4.9G 13G 29% /

/dev/sda1 xfs 1014M 217M 798M 22% /boot

tmpfs tmpfs 378M 4.0K 378M 1% /run/user/988

/dev/sr0 iso9660 4.4G 4.4G 0 100% /run/media/ml/CentOS 7 x86_64

tmpfs tmpfs 378M 4.0K 378M 1% /run/user/42

tmpfs tmpfs 378M 60K 378M 1% /run/user/0

tmpfs tmpfs 1.9G 52K 1.9G 1% /var/lib/ceph/osd/ceph-2

[root@ceph03 ~]# cd /var/lib/ceph/osd/ceph-2

[root@ceph03 ceph-2]# more fsid //view the fsid

daf9b00a-7562-475e-8e6d-96455c808402

[root@ceph03 ceph-2]# ceph osd create daf9b00a-7562-475e-8e6d-96455c808402 //syntax: ceph osd create <uuid>

[root@ceph03 ceph-2]# ceph auth add osd.2 osd 'allow *' mon 'allow rwx' -i /var/lib/ceph/osd/ceph-2/keyring //re-register the key with its capabilities

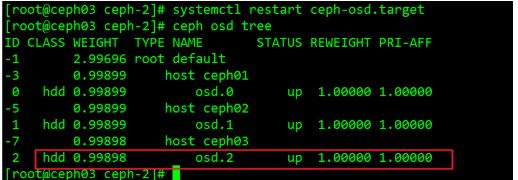

[root@ceph03 ceph-2]# ceph osd crush add 2 0.99899 host=ceph03 //0.99899 is the CRUSH weight; host= takes the hostname

[root@ceph03 ceph-2]# ceph osd in osd.2

[root@ceph03 ceph-2]# systemctl restart ceph-osd.target

[root@ceph03 ceph-2]# ceph osd tree
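
The recovery steps can be replayed as one function that reads the fsid from the OSD's data directory instead of copying it by hand. This is only a sketch: `readd_osd` and the `OSD_ROOT` variable are hypothetical names, and it assumes that `ceph osd create` hands back the same id the OSD had before, which holds here because id 2 is the lowest free id.

```shell
# OSD_ROOT is overridable only so the function can be tried outside a real node.
OSD_ROOT="${OSD_ROOT:-/var/lib/ceph/osd}"

# Arguments: OSD id, CRUSH weight, hostname that carries the OSD.
readd_osd() {
    id="$1"
    weight="$2"
    host="$3"
    dir="$OSD_ROOT/ceph-$id"
    # The fsid file holds the UUID the OSD was originally created with
    uuid=$(cat "$dir/fsid") || return 1
    ceph osd create "$uuid" &&
    ceph auth add "osd.$id" osd 'allow *' mon 'allow rwx' -i "$dir/keyring" &&
    ceph osd crush add "$id" "$weight" "host=$host" &&
    ceph osd in "osd.$id" &&
    systemctl restart ceph-osd.target
}

# Usage on ceph03, with the values from the transcript above:
#   readd_osd 2 0.99899 ceph03
#   ceph osd tree
```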

V. Routine Ceph Maintenance Commands

1. Create the mgr service

[root@ceph01 ceph]# ceph-deploy mgr create ceph01 ceph02 ceph03
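
Once the mgr daemons are running, the cluster may take a little while to settle. The sketch below polls `ceph health` until it reports HEALTH_OK; `wait_for_health` and its default of 30 attempts at 10-second intervals are assumptions, and a cluster can stay in HEALTH_WARN for unrelated reasons such as clock skew, in which case the function simply gives up and returns non-zero.

```shell
# Poll `ceph health` until it reports HEALTH_OK or the attempts run out.
# Arguments: number of attempts (default 30), seconds between attempts (default 10).
wait_for_health() {
    attempts="${1:-30}"
    delay="${2:-10}"
    i=0
    while [ "$i" -lt "$attempts" ]; do
        status=$(ceph health 2>/dev/null)
        case "$status" in
            HEALTH_OK*)
                echo "cluster healthy"
                return 0
                ;;
        esac
        i=$((i + 1))
        echo "attempt $i: ${status:-no response}"
        sleep "$delay"
    done
    return 1
}

# Usage:
#   wait_for_health || ceph health detail
```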

2. Create pools

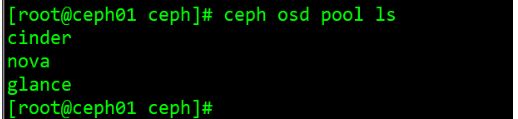

[root@ceph01 ceph]# ceph osd pool create cinder 64

[root@ceph01 ceph]# ceph osd pool create nova 64

[root@ceph01 ceph]# ceph osd pool create glance 64

[root@ceph01 ceph]# ceph osd pool ls

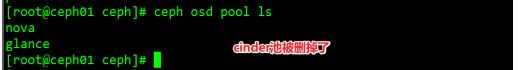
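
The number after each pool name is its placement group (PG) count; 64 is the value chosen in the commands above. A commonly cited rule of thumb for the total across all pools is (number of OSDs × 100) / replica count, rounded up to the next power of two. The sketch below computes that figure; `suggest_pg_count` is a hypothetical helper, and the guideline is a starting point rather than a fixed rule.

```shell
# Suggest a total PG count: (OSDs * 100) / replicas, rounded up to a power of two.
suggest_pg_count() {
    osds="$1"
    replicas="$2"
    target=$(( osds * 100 / replicas ))
    pg=1
    while [ "$pg" -lt "$target" ]; do
        pg=$(( pg * 2 ))
    done
    echo "$pg"
}

# Three OSDs with a replica count of 3, as in this cluster:
suggest_pg_count 3 3    # prints 128
```

With three pools sharing the cluster, 128 in total is in the same range as the article's three pools of 64 PGs each.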

3. Delete a pool

[root@ceph01 ceph]# ceph osd pool rm cinder cinder --yes-i-really-really-mean-it //fails and asks you to enable pool deletion first

[root@ceph01 ceph]# vim /etc/ceph/ceph.conf

mon_allow_pool_delete = true //allow pools to be deleted

[root@ceph01 ceph]# ceph-deploy --overwrite-conf admin ceph02 ceph03 //push the file to ceph02 and ceph03

[root@ceph01 ceph]# systemctl restart ceph-mon.target //restart on all three nodes

[root@ceph01 ceph]# ceph osd pool rm cinder cinder --yes-i-really-really-mean-it

pool 'cinder' removed //confirms the deletion

[root@ceph01 ceph]# ceph osd pool ls

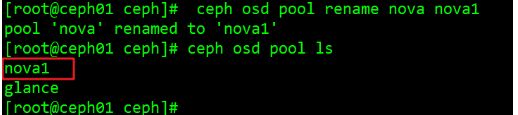

4. Rename a pool

[root@ceph01 ceph]# ceph osd pool rename nova nova1 //rename nova to nova1

[root@ceph01 ceph]# ceph osd pool ls

5. View ceph command help

[root@ceph01 ceph]# ceph --help

[root@ceph01 ceph]# ceph osd --help

6. Configure the Ceph internal communication network

[root@ceph01 ceph]# vim /etc/ceph/ceph.conf

public network = 192.168.100.0/24 //add the network segment

[root@ceph01 ceph]# ceph-deploy --overwrite-conf admin ceph01 ceph02 ceph03 //push the file to ceph01, ceph02 and ceph03

[root@ceph01 ceph]# systemctl restart ceph-mon.target //restart the mon service on every node

[root@ceph01 ceph]# systemctl restart ceph-osd.target //restart the osd service on every node

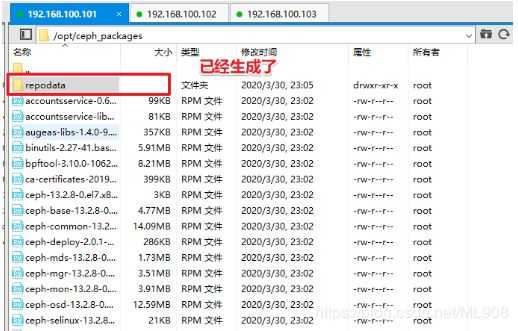

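VI. Building an Offline centos_ceph Package Repository

# Collect all the package files under /var/cache/yum from the three nodes into one place

# Upload them to /opt/ceph_packages/

# Install the repository tool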

[root@ceph01 opt]# yum -y install createrepo

[root@ceph01 opt]# cd ceph_packages

[root@ceph01 ceph_packages]# createrepo ./ //generate the repository metadata, including dependency information, in the current directory
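
To install from the offline directory, a node needs a repo file that points at it. The following is a sketch under assumptions: the repo id `ceph-local`, the file name `ceph-local.repo`, and `gpgcheck=0` are illustrative choices for an isolated lab, and `write_local_repo` is a hypothetical helper.

```shell
# Write a yum repo definition that points at a local createrepo directory.
# Arguments: package directory, repo file to write (both have defaults).
write_local_repo() {
    pkg_dir="${1:-/opt/ceph_packages}"
    repo_file="${2:-/etc/yum.repos.d/ceph-local.repo}"
    cat > "$repo_file" << EOF
[ceph-local]
name=Local Ceph packages
baseurl=file://$pkg_dir
enabled=1
gpgcheck=0
EOF
}

# Usage on an offline node after copying /opt/ceph_packages to it:
#   write_local_repo /opt/ceph_packages
#   yum clean all && yum makecache
```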