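Coursera - Andrew Ng - Deep Learning - Improving Deep Neural Networks: Hyperparameter Tuning, Regularization and Optimization - Week 1 - Programming Assignment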

Contents of this article:

Andrew Ng's Deep Learning course on Coursera,

Course 2: Improving Deep Neural Networks: Hyperparameter tuning, Regularization and Optimization

Week 1: Practical aspects of Deep Learning

It contains three parts: Initialization, L2 regularization, and gradient checking.

Programming assignment: a log of my mistakes.

Initialization

This is the first assignment of "Improving Deep Neural Networks".

By completing this assignment you will:

- Understand the different regularization methods that could help your model.

- Implement dropout and see it work on data.

- Recognize that a model without regularization gives you a better accuracy on the training set but not necessarily on the test set.

- Understand that you could use both dropout and regularization on your model.

2 - Zero initialization

Training your neural network requires specifying an initial value of the weights. A well-chosen initialization method will help learning. (The thing to guard against is the symmetric-weights problem: a bad initialization fails to "break symmetry".)

Mine:

for l in range(1, L):

### START CODE HERE ### (≈ 2 lines of code)

parameters['W' + str(l)] = np.random.rand(np.shape(layers_dims[L], layers_dims[L-1]))

parameters['b' + str(l)] = np.random.rand(np.shape(layers_dims[L], 1))

### END CODE HERE ###

return parameters

Correct:

parameters['W' + str(l)] = np.zeros((layers_dims[l], layers_dims[l-1]))

parameters['b' + str(l)] = np.zeros((layers_dims[l], 1))

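Reflection:

1. Inside the loop, index with the loop variable (lowercase l), not the outer uppercase L.

2. Usage of np.zeros: pass the dimensions directly as one shape tuple, e.g. np.zeros((rows, cols)). np.shape() reads the shape of an existing array; it does not build one.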
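To see both reflections in one place, here is a minimal sketch of the corrected function together with a quick look at why all-zero weights fail to break symmetry. The layer sizes, the random input X, and the ReLU hidden activation are assumptions made for illustration; they are not taken from the assignment.

```python
import numpy as np

def initialize_parameters_zeros(layers_dims):
    """Return W1..W(L-1) and b1..b(L-1), all filled with zeros."""
    parameters = {}
    L = len(layers_dims)  # number of layers, counting the input layer
    for l in range(1, L):
        # Index with the loop variable l, and pass the shape as one tuple.
        parameters['W' + str(l)] = np.zeros((layers_dims[l], layers_dims[l - 1]))
        parameters['b' + str(l)] = np.zeros((layers_dims[l], 1))
    return parameters

params = initialize_parameters_zeros([3, 4, 1])        # hypothetical layer sizes
X = np.random.default_rng(0).standard_normal((3, 5))   # 5 made-up examples

# With all-zero weights every hidden unit computes the same activation,
# so every unit also receives the same gradient and they never diverge.
A1 = np.maximum(0, params['W1'] @ X + params['b1'])    # ReLU hidden layer (assumed)
rows_identical = bool(np.all(A1 == A1[0]))
print(params['W1'].shape, params['b1'].shape, rows_identical)
```

Every row of A1 is identical, which is the "fails to break symmetry" behaviour in miniature.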

3 - Random initialization

To break symmetry, let's initialize the weights randomly.

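Mine:

parameters['W' + str(l)] = np.random.randn(layers_dims[l], layers_dims[l-1]) * 10

parameters['b' + str(l)] = np.zeros(layers_dims[l], 1)

Correct:

parameters['b' + str(l)] = np.zeros((layers_dims[l], 1))

Reflection:

np.zeros takes the shape as a single tuple: np.zeros((rows, cols)). In np.zeros(rows, 1) the second positional argument is read as the dtype, which raises a TypeError.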

If you see "inf" as the cost after iteration 0, this is because of numerical roundoff.

Round-off error is the difference between the approximate value produced by a computation and the exact value.

- The cost starts very high. This is because with large random-valued weights, the last activation (sigmoid) outputs results that are very close to 0 or 1 for some examples, and when it gets that example wrong it incurs a very high loss for that example. Indeed, when log(a[3]) = log(0), the loss goes to infinity.

- Poor initialization can lead to vanishing/exploding gradients, which also slows down the optimization algorithm.

- If you train this network longer you will see better results, but initializing with overly large random numbers slows down the optimization.

In summary:

- Initializing weights to very large random values does not work well.

- Hopefully initializing with small random values does better. The important question is: how small should these random values be? Let's find out in the next part!
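The saturation and the "inf" cost described above can be reproduced in a few lines. This is only a sketch under assumptions: a single sigmoid output unit with a made-up fan-in of 10, standard-normal inputs, and an arbitrary seed, none of which come from the assignment's dataset.

```python
import numpy as np

def sigmoid(z):
    """Logistic function, applied elementwise."""
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.default_rng(3)                 # arbitrary seed
n_prev = 10                                    # hypothetical fan-in of the output unit
a_prev = rng.standard_normal((n_prev, 1000))   # made-up previous-layer activations

def saturated_fraction(scale):
    """Share of sigmoid outputs within 0.01 of 0 or 1 for weights ~ N(0, scale^2)."""
    w = rng.standard_normal((1, n_prev)) * scale
    a = sigmoid(w @ a_prev)
    return float(np.mean((a < 0.01) | (a > 0.99)))

frac_large = saturated_fraction(10)            # the "* 10" initialization
frac_small = saturated_fraction(0.01)          # small random weights

# Round-off: sigmoid(40) is so close to 1 that float64 stores exactly 1.0,
# so a wrong prediction (true label 0) costs -log(1 - 1.0) = -log(0) = inf.
a = sigmoid(np.float64(40.0))
with np.errstate(divide='ignore'):             # silence the log(0) warning
    loss_when_wrong = -np.log(1.0 - a)

print(frac_large, frac_small, loss_when_wrong)
```

With the large scale most outputs sit at the extremes of the sigmoid, while with the small scale none do.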

4 - He initialization

Mine:

parameters['W' + str(l)] = np.random.randn(layers_dims[l], layers_dims[l-1]) * np.square(2/layers_dims[l-1])

Correct:

parameters['W' + str(l)] = np.random.randn(layers_dims[l], layers_dims[l-1]) * np.sqrt(2 / layers_dims[l-1])

Reflection:

np.sqrt() is the square root; np.square() squares its argument, which makes the scaling factor far too small here.
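As a sanity check on the scaling factor, the sketch below builds the whole He initializer and compares the empirical variance of W1 with 2 / fan_in. The layer sizes and the seeded np.random.default_rng generator are my own choices for reproducibility; the assignment itself calls np.random.randn.

```python
import numpy as np

def initialize_parameters_he(layers_dims, seed=3):
    """He initialization: W ~ N(0, 2 / fan_in), b = 0."""
    rng = np.random.default_rng(seed)          # seed is an arbitrary choice
    parameters = {}
    L = len(layers_dims)
    for l in range(1, L):
        fan_in = layers_dims[l - 1]
        parameters['W' + str(l)] = (
            rng.standard_normal((layers_dims[l], fan_in)) * np.sqrt(2.0 / fan_in)
        )
        parameters['b' + str(l)] = np.zeros((layers_dims[l], 1))
    return parameters

params = initialize_parameters_he([500, 400, 1])   # hypothetical layer sizes
w1_var = params['W1'].var()
target_var = 2.0 / 500                              # what np.sqrt(2 / fan_in) aims for
wrong_factor = np.square(2.0 / 500)                 # the np.square slip, for comparison
print(w1_var, target_var, wrong_factor)
```

The empirical variance lands close to 2 / fan_in, whereas the np.square version would multiply the weights by a factor orders of magnitude smaller than intended.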

What you should remember from this notebook:

- Different initializations lead to different results

- Random initialization is used to break symmetry and make sure different hidden units can learn different things

- Don't initialize to values that are too large

- He initialization works well for networks with ReLU activations.

Regularization

By completing this assignment you will:

- Understand the different regularization methods that could help your model.

- Implement dropout and see it work on data.

- Recognize that a model without regularization gives you a better accuracy on the training set but not necessarily on the test set.

- Understand that you could use both dropout and regularization on your model.

2 - L2 Regularization

Mine:

L2_regularization_cost = (1/m)*(lambd/2)*(np.sum(np.square(Wl)) + np.sum(np.square(W2)) + np.sum(np.square(W3)))

NameError: name 'Wl' is not defined

Correct:

L2_regularization_cost = 1./m * lambd/2 * (np.sum(np.square(W1)) + np.sum(np.square(W2)) + np.sum(np.square(W3)))

Reflection:

A typing (input-method) mistake: I typed Wl, with a lowercase letter l, instead of W1, with the digit 1.

Of course, because you changed the cost, you have to change backward propagation as well! All the gradients have to be computed with respect to this new cost.
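The extra gradient term follows directly from differentiating the penalty: each weight matrix W gains (lambd / m) * W. The sketch below checks this against a central finite difference. The helper names l2_cost and l2_grad, the matrix shapes, and the values of lambd and m are illustrative assumptions, not the assignment's code.

```python
import numpy as np

def l2_cost(weights, lambd, m):
    """L2 penalty: (lambd / (2 m)) * sum of squared entries of all weight matrices."""
    return (lambd / (2.0 * m)) * sum(np.sum(np.square(W)) for W in weights)

def l2_grad(W, lambd, m):
    """Derivative of the penalty with respect to W; it is added to the unregularized dW."""
    return (lambd / m) * W

rng = np.random.default_rng(1)                 # arbitrary seed
W1 = rng.standard_normal((2, 3))               # made-up shapes
W2 = rng.standard_normal((3, 2))
W3 = rng.standard_normal((1, 3))
lambd, m = 0.7, 5

# Same value as the expression used in the corrected line above.
reference = 1. / m * lambd / 2 * (np.sum(np.square(W1)) + np.sum(np.square(W2)) + np.sum(np.square(W3)))
cost = l2_cost([W1, W2, W3], lambd, m)

# Central finite difference on a single entry of W1.
eps = 1e-6
W1_plus, W1_minus = W1.copy(), W1.copy()
W1_plus[0, 0] += eps
W1_minus[0, 0] -= eps
numeric = (l2_cost([W1_plus, W2, W3], lambd, m) - l2_cost([W1_minus, W2, W3], lambd, m)) / (2 * eps)
analytic = l2_grad(W1, lambd, m)[0, 0]
print(cost, numeric, analytic)
```

The numeric and analytic values agree to within floating-point noise, which is the same idea gradient checking applies to the full network.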

Observations:

- The value of λ is a hyperparameter that you can tune using a dev set.

- L2 regularization makes your decision boundary smoother. If λ is too large, it is also possible to "oversmooth", resulting in a model with high bias.

What you should remember -- the implications of L2-regularization on:

- The cost computation:

  - A regularization term is added to the cost.

- The backpropagation function:

  - There are extra terms in the gradients with respect to weight matrices.

- Weights end up smaller ("weight decay"):

  - Weights are pushed to smaller values.
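The "weight decay" name becomes concrete when the extra gradient term is plugged into a plain gradient-descent step: W shrinks by the factor (1 - learning_rate * lambd / m) before the data gradient is applied. The function name update_with_l2 and all numbers below are hypothetical, and the data gradient is set to zero only to isolate the decay effect.

```python
import numpy as np

def update_with_l2(W, dW_data, learning_rate, lambd, m):
    """One gradient-descent step where dW = data gradient + (lambd / m) * W."""
    dW = dW_data + (lambd / m) * W
    return W - learning_rate * dW

W = np.array([[1.0, -2.0],
              [0.5, 4.0]])
dW_data = np.zeros_like(W)                     # zero data gradient, to isolate the decay
learning_rate, lambd, m = 0.1, 0.7, 5          # made-up hyperparameters

W_new = update_with_l2(W, dW_data, learning_rate, lambd, m)
decay = 1 - learning_rate * lambd / m          # multiplicative shrink factor
print(decay, np.linalg.norm(W), np.linalg.norm(W_new))
```

Each step multiplies the weights by a factor slightly below 1, so larger lambd pushes them toward zero faster.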

3 - Dropout

The dropped neurons don't contribute to the training in both the forward and backward propagations of the iteration.

Question:

Are the same neurons dropped in forward and backward propagation?

Answer: Yes. Within one iteration, the same random matrix D is used for both passes.

When you shut some neurons down, you actually modify your model. The idea behind drop-out is that at each iteration, you train a different model that uses only a subset of your neurons. With dropout, your neurons thus become less sensitive to the activation of one other specific neuron, because that other neuron might be shut down at any time.

3.1 - Forward propagation with dropout

Instructions: You would like to shut down some neurons in the first and second layers. To do that, you are going to carry out 4 Steps:

keep_prob - probability of keeping a neuron active during drop-out, scalar.

Mine:

D1 = np.random.rand(np.shape(A1)) # Step 1: initialize matrix D1 = np.random.rand(..., ...)

D1 = None # Step 2: convert entries of D1 to 0 or 1 (using keep_prob as the threshold)

A1 = A1 * D1 # Step 3: shut down some neurons of A1

A1 = A1/ keep_prob # Step 4: scale the value of neurons that haven't been shut down

Correct:

D1 = np.random.rand(A1.shape[0], A1.shape[1]) # Step 1: initialize matrix D1 = np.random.rand(..., ...)

D1 = (D1 < keep_prob) # Step 2: convert entries of D1 to 0 or 1 (using keep_prob as the threshold)

A1 = A1 * D1 # Step 3: shut down some neurons of A1

A1 = A1 / keep_prob # Step 4: scale the value of neurons that haven't been shut down

Reflection:

I did not know how to write D1 = (D1 < keep_prob). Also, np.random.rand() takes the dimensions as separate integer arguments (here two: rows and columns), not as a shape tuple.
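The four steps can be wrapped into one helper, sketched below. The name dropout_forward, the all-ones activation matrix, and the use of a seeded np.random.default_rng generator (whose random method does accept a shape tuple) are assumptions made for this example; the assignment's own code uses np.random.rand with separate dimensions.

```python
import numpy as np

def dropout_forward(A, keep_prob, rng):
    """Inverted dropout on activations A; returns the dropped activations and the mask."""
    D = rng.random(A.shape) < keep_prob    # Steps 1-2: uniform draws, thresholded to a boolean mask
    A = A * D                              # Step 3: shut down the masked-out neurons
    A = A / keep_prob                      # Step 4: rescale the survivors
    return A, D

rng = np.random.default_rng(1)             # arbitrary seed
keep_prob = 0.8
A1 = np.ones((4, 1000))                    # made-up activations, all ones for readability
A1_dropped, D1 = dropout_forward(A1, keep_prob, rng)

kept_share = D1.mean()                     # should be near keep_prob
print(kept_share, np.unique(A1_dropped))
```

Returning D alongside the activations matters because backward propagation has to reuse exactly the same mask.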

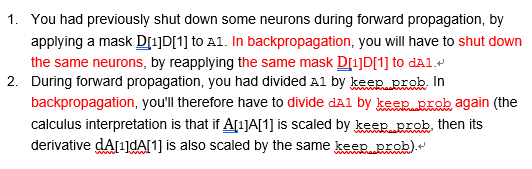

3.2 - Backward propagation with dropout

Instruction: Backpropagation with dropout is actually quite easy. You will have to carry out 2 Steps:

Code for backward propagation:

dA2 = dA2 * D2 # Step 1: Apply mask D2 to shut down the same neurons as during the forward propagation

dA2 = dA2/ keep_prob # Step 2: Scale the value of neurons that haven't been shut down
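A self-contained sketch of these two steps is shown below. The helper name dropout_backward and the random arrays are illustrative; the point is that the mask D2 is the one cached during forward propagation, not a freshly drawn one.

```python
import numpy as np

def dropout_backward(dA, D, keep_prob):
    """Apply the forward-pass mask D to the gradient dA, then rescale."""
    dA = dA * D              # Step 1: zero the gradient of neurons that were shut down
    dA = dA / keep_prob      # Step 2: same scaling as in the forward pass
    return dA

rng = np.random.default_rng(1)                 # arbitrary seed
keep_prob = 0.8
A2 = rng.random((3, 6))                        # made-up activations
D2 = rng.random(A2.shape) < keep_prob          # mask drawn once, during the forward pass
dA2 = rng.standard_normal(A2.shape)            # made-up upstream gradient

dA2_dropped = dropout_backward(dA2, D2, keep_prob)
print(D2.astype(int))
print(dA2_dropped)
```

Entries of the gradient are zero exactly where the mask is zero, so dropped neurons receive no update in that iteration.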

Note:

- A common mistake when using dropout is to use it both in training and testing. You should use dropout (randomly eliminate nodes) only in training.

- Deep learning frameworks like TensorFlow, PaddlePaddle, Keras or Caffe come with a dropout layer implementation. Don't stress - you will soon learn some of these frameworks.

What you should remember about dropout:

- Dropout is a regularization technique.

- You only use dropout during training. Don't use dropout (randomly eliminate nodes) during test time.

- Apply dropout both during forward and backward propagation.

- During training time, divide each dropout layer by keep_prob to keep the same expected value for the activations. For example, if keep_prob is 0.5, then we will on average shut down half the nodes, so the output will be scaled by 0.5 since only the remaining half are contributing to the solution. Dividing by 0.5 is equivalent to multiplying by 2. Hence, the output now has the same expected value. You can check that this works even when keep_prob takes values other than 0.5.
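The last point can be checked numerically. The sketch below compares the mean activation before and after inverted dropout for several keep_prob values; the uniform random activations and the seed are assumptions chosen only to make the check quick.

```python
import numpy as np

rng = np.random.default_rng(0)                 # arbitrary seed
A = rng.random((50, 2000))                     # 100,000 made-up activations in [0, 1)

ratios = {}
for keep_prob in (0.5, 0.8, 0.9):
    D = rng.random(A.shape) < keep_prob        # dropout mask
    A_dropped = A * D / keep_prob              # inverted dropout
    # Ratio of mean activation after dropout to mean activation before it.
    ratios[keep_prob] = float(A_dropped.mean() / A.mean())

print(ratios)
```

Each ratio stays close to 1, which is why no extra scaling is needed at test time when dropout is switched off.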