Integrating Kerberos with Cloudera Manager and CDH

Integrating Kerberos with Cloudera Manager 5.11.1

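Kerberos Installation and Configuration

Cloudera provides a very simple way to integrate Kerberos, and deployment is almost fully automated.

OS: CentOS 7.3

Operating user: admin

Role layout:

| Role | Node |
|---|---|
| KDC, AS, TGS | 192.168.0.200 |
| Kerberos Agent | 192.168.0.[201-206] |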

Assume the slaves file contains:

192.168.0.201

192.168.0.202

192.168.0.203

192.168.0.204

192.168.0.205

192.168.0.206

Kerberos Installation

Install the server packages on 192.168.0.200:

yum -y install krb5-server openldap-clients

Install the client packages on 192.168.0.[201-206]:

pssh -h slaves -P -l root -A "yum -y install krb5-devel krb5-workstation"

After these packages are installed, the KDC host has the configuration files /etc/krb5.conf and /var/kerberos/krb5kdc/kdc.conf, which together define the realm name and the domain-to-realm mappings.

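Modify the Configuration

Configure krb5.conf

/etc/krb5.conf holds the Kerberos configuration, such as the location of the KDC and of the admin server for each realm. It must be identical on every machine that uses Kerberos. Only the basic settings needed here are listed.

Replace EXAMPLE.COM with your own realm: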

[logging]

default = FILE:/var/log/krb5libs.log

kdc = FILE:/var/log/krb5kdc.log

admin_server = FILE:/var/log/kadmind.log

[libdefaults]

default_realm = EXAMPLE.COM

dns_lookup_realm = false

dns_lookup_kdc = false

ticket_lifetime = 24h

renew_lifetime = 7d

forwardable = true

rdns = false

default_ccache_name = KEYRING:persistent:%{uid}

[realms]

EXAMPLE.COM = {

kdc = master

admin_server = master

}

[domain_realm]

.example.com = EXAMPLE.COM

example.com = EXAMPLE.COM

Notes:

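- [logging]: where the server-side logs are written.

- [libdefaults]: default settings for every connection. Pay attention to these key settings:

  - default_realm = EXAMPLE.COM: the default realm; it must match the name of the realm being configured.

  - udp_preference_limit = 1: disables UDP, which avoids a Hadoop bug (not set in the example above; add it under [libdefaults] if needed).

  - ticket_lifetime: how long a ticket is valid, typically 24 hours.

  - renew_lifetime: the maximum period over which a ticket can be renewed, typically one week. Once a ticket expires, further access to Kerberos-secured services fails.

- [realms]: lists the realms in use.

  - kdc: the location of the KDC, in the form host:port.

  - admin_server: the location of the admin server, in the form host:port.

  - default_domain: the default domain name.

- [appdefaults]: application-specific settings that override the defaults.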
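Because krb5.conf must be identical on every host, it can help to generate it from the realm name instead of editing it by hand. The sketch below reproduces the example configuration above for an arbitrary realm and also adds udp_preference_limit = 1 from the notes. It assumes the KDC and the admin server run on the same host and that the DNS domain is the lowercase form of the realm; `render_krb5_conf` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a minimal krb5.conf for one realm whose KDC and
# admin server live on the same host (assumption). Mirrors the example above.
render_krb5_conf() {
  local realm=$1 kdc_host=$2
  # Assumption: the DNS domain is the lowercase form of the realm name.
  local domain=${realm,,}
  cat <<EOF
[logging]
default = FILE:/var/log/krb5libs.log
kdc = FILE:/var/log/krb5kdc.log
admin_server = FILE:/var/log/kadmind.log

[libdefaults]
default_realm = ${realm}
dns_lookup_realm = false
dns_lookup_kdc = false
ticket_lifetime = 24h
renew_lifetime = 7d
forwardable = true
rdns = false
udp_preference_limit = 1
default_ccache_name = KEYRING:persistent:%{uid}

[realms]
${realm} = {
  kdc = ${kdc_host}
  admin_server = ${kdc_host}
}

[domain_realm]
.${domain} = ${realm}
${domain} = ${realm}
EOF
}
```

For example, `render_krb5_conf MYCORP.COM master > krb5.conf` produces a file that can then be distributed with pscp/pssh as shown below.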

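// This step can be skipped: once Cloudera Manager is set to manage krb5.conf, it deploys the file to the clients automatically.

Distribute krb5.conf to all clients:

pscp -h slaves krb5.conf /tmp

pssh -h slaves -l root "cp /tmp/krb5.conf /etc"

Configure kdc.conf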

By default it is located at /var/kerberos/krb5kdc/kdc.conf:

[kdcdefaults]

kdc_ports = 88

kdc_tcp_ports = 88

[realms]

EXAMPLE.COM = {

#master_key_type = aes256-cts

acl_file = /var/kerberos/krb5kdc/kadm5.acl

dict_file = /usr/share/dict/words

admin_keytab = /var/kerberos/krb5kdc/kadm5.keytab

supported_enctypes = aes128-cts:normal des3-hmac-sha1:normal arcfour-hmac:normal camellia256-cts:normal camellia128-cts:normal des-hmac-sha1:normal des-cbc-md5:normal des-cbc-crc:normal

max_life = 25h

max_renewable_life = 8d

}

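Notes:

- EXAMPLE.COM: the realm being defined. The name is arbitrary. Kerberos supports multiple realms, but that adds complexity and is not covered here. Realm names are case-sensitive and are usually written in uppercase for readability. The realm is largely independent of the machines' hostnames.

- max_renewable_life = 8d: must be set for tickets to be renewable.

- master_key_type and supported_enctypes: both default to aes256-cts. Because Java needs additional JAR files (the JCE Unlimited Strength policy files) to use aes256-cts, it is recommended not to use it.

- acl_file: defines the admin users' permissions. Each line has the format

  Kerberos_principal permissions [target_principal] [restrictions]

  and wildcards are supported.

- admin_keytab: the keytab the KDC uses for verification. How to create it is covered later.

- supported_enctypes: the supported encryption types. Make sure aes256-cts is removed.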
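Removing aes256-cts can be done mechanically rather than by eye. A minimal sketch, assuming the value is a space-separated list of `enctype:salt` entries as in the kdc.conf above; `strip_aes256` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: drop every aes256-cts* entry from a supported_enctypes
# value whose entries look like "name:salt".
strip_aes256() {
  local entry
  local -a kept=()
  for entry in $1; do
    # Compare only the enctype name, i.e. the part before the first colon.
    case ${entry%%:*} in
      aes256-cts*) ;;   # skip: per the notes, Java needs extra JCE jars for it
      *) kept+=("$entry") ;;
    esac
  done
  echo "${kept[*]}"
}
```

Usage: `strip_aes256 "aes256-cts:normal aes128-cts:normal des3-hmac-sha1:normal"` prints the list without the aes256-cts entry, ready to paste back into supported_enctypes.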

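Configure kadm5.acl

On the server 192.168.0.200, edit /var/kerberos/krb5kdc/kadm5.acl so that admin principals matching the pattern are allowed to administer the KDC remotely:

*/admin@EXAMPLE.COM *

Notes:

This file defines the admin users' permissions and must be set up by the user. Each line has the format

Kerberos_principal permissions [target_principal] [restrictions]

and wildcards are supported. The simplest form is

*/admin@EXAMPLE.COM *

which means every principal matching */admin@EXAMPLE.COM is treated as an admin, and the permission * grants all privileges.

Create the Kerberos Database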

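Initialize the database on the KDC host 192.168.0.200. The default database path is /var/kerberos/krb5kdc; to rebuild the database, delete the principal-related files in that directory. Be sure to remember the database master password.

kdb5_util create -r EXAMPLE.COM -s

Notes:

- -s generates a stash file that stores the master server key (used by krb5kdc).

- -r specifies the realm name; it is needed when krb5.conf defines more than one realm.

- Once the Kerberos database is created, the principal-related files appear under /var/kerberos/krb5kdc.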

Start the Kerberos Services

On 192.168.0.200, run:

# Start the services

systemctl start krb5kdc

systemctl start kadmin

# Enable the services at boot

systemctl enable krb5kdc

systemctl enable kadmin

Check the processes:

[root@master ~]# netstat -anpl|grep kadmin

tcp 0 0 0.0.0.0:749 0.0.0.0:* LISTEN 67465/kadmind

tcp 0 0 0.0.0.0:464 0.0.0.0:* LISTEN 67465/kadmind

tcp6 0 0 :::749 :::* LISTEN 67465/kadmind

tcp6 0 0 :::464 :::* LISTEN 67465/kadmind

udp 0 0 0.0.0.0:464 0.0.0.0:* 67465/kadmind

udp6 0 0 :::464 :::* 67465/kadmind

[root@master ~]# netstat -anpl|grep kdc

tcp 0 0 0.0.0.0:88 0.0.0.0:* LISTEN 67402/krb5kdc

tcp6 0 0 :::88 :::* LISTEN 67402/krb5kdc

udp 0 0 0.0.0.0:88 0.0.0.0:* 67402/krb5kdc

udp6 0 0 :::88 :::* 67402/krb5kdc

Create the Kerberos Administrator Principal

# You will be prompted to enter the password twice

kadmin.local -q "addprinc root/admin"

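// Use cloudera-scm/admin as the superuser that CM uses to create the other principals

kadmin.local -q "addprinc cloudera-scm/admin"

Because the second component of this principal's name is admin, the ACL configured earlier gives it administrative privileges. CDH uses this account to generate the principals for other users and services.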
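To make the ACL rule concrete, the sketch below models how a pattern such as `*/admin@EXAMPLE.COM` selects principals: each `*` stands for one whole name component, so `cloudera-scm/admin@EXAMPLE.COM` matches but `hdfs@EXAMPLE.COM` and `hdfs/master@EXAMPLE.COM` do not. It is a simplified model covering only single `*` wildcards, not the full kadm5.acl syntax (back-references, target principals, restrictions); `acl_matches` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Hypothetical, simplified model of a kadm5.acl principal pattern:
# "*" matches exactly one whole name component (or the whole realm),
# and the principal must have the same number of components as the pattern.
acl_matches() {
  local principal=$1 pattern=$2
  local p_name p_realm a_name a_realm i
  local -a pc ac
  p_name=${principal%@*}; p_realm=${principal##*@}
  a_name=${pattern%@*};   a_realm=${pattern##*@}

  [[ $a_realm == "*" || $a_realm == "$p_realm" ]] || return 1

  # Split "primary/instance" into components on "/".
  IFS=/ read -r -a pc <<< "$p_name"
  IFS=/ read -r -a ac <<< "$a_name"
  (( ${#pc[@]} == ${#ac[@]} )) || return 1

  for i in "${!ac[@]}"; do
    [[ ${ac[i]} == "*" || ${ac[i]} == "${pc[i]}" ]] || return 1
  done
  return 0
}
```

Usage: `acl_matches cloudera-scm/admin@EXAMPLE.COM '*/admin@EXAMPLE.COM' && echo admin` prints `admin`; the same call with `hdfs@EXAMPLE.COM` prints nothing.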

Note: run kinit first so that a ticket for the principal is in the credential cache.

[root@master ~]# kinit cloudera-scm/admin

Password for cloudera-scm/admin@BOCLOUD.COM:

[root@master ~]# klist

Ticket cache: KEYRING:persistent:0:0

Default principal: cloudera-scm/admin@BOCLOUD.COM

Valid starting Expires Service principal

09/01/2017 11:51:17 09/02/2017 11:51:16 krbtgt/BOCLOUD.COM@BOCLOUD.COM

renew until 09/08/2017 11:51:16

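Kerberos clients can authenticate in two ways: principal + password, or principal + keytab. The former suits interactive use by people, such as hadoop fs -ls; the latter suits services, such as YARN's ResourceManager and NodeManager.

Logging in with principal + keytab is similar to passwordless SSH login: no password is needed.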
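The two login modes can be wrapped in one small helper. This is a sketch: `krb_login` and the `KINIT` override (handy for a dry run with `KINIT=echo`) are hypothetical, while `kinit -kt <keytab> <principal>` is the standard MIT kinit keytab login.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper around the two client login modes described above.
# Set KINIT=echo to print the arguments instead of running kinit.
krb_login() {
  local principal=$1 keytab=${2:-}
  local kinit=${KINIT:-kinit}
  if [[ -n $keytab ]]; then
    # principal + keytab: non-interactive, suited to services and scheduled jobs
    "$kinit" -kt "$keytab" "$principal"
  else
    # principal + password: kinit prompts for the password interactively
    "$kinit" "$principal"
  fi
}
```

For example, `krb_login hdfs` prompts for the hdfs password, while `krb_login hdfs/master@EXAMPLE.COM /path/to/hdfs.keytab` (path illustrative) logs in without a prompt.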

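Enabling Kerberos in Cloudera Manager

Before starting, make sure the following prerequisites are complete:

- The KDC is installed and running.

- The KDC is configured to allow renewable tickets with a non-zero lifetime. When editing kdc.conf earlier, we added the max_life and max_renewable_life properties: the former is the maximum ticket lifetime the server allows, the latter the maximum period over which the server allows a ticket to be renewed. They must be greater than or equal to the client-side ticket_lifetime and renew_lifetime, respectively. Suppose they were not, e.g. ticket_lifetime = 8d, max_life = 7d, renew_lifetime = 25h, max_renewable_life = 24h. Then if a service holds a ticket and does not renew it within 24 hours, a renewal attempt at hour 24.5 is rejected with "Ticket expired while renewing credentials", and the ticket can never be renewed normally. With Cloudera, renewal is transparent (a Cloudera renewer process renews tickets periodically), and even running `modprinc -maxrenewlife 1week krbtgt/EXAMPLE.COM@EXAMPLE.COM` by hand does not help.

- openldap-clients is installed on the Cloudera Manager Server host.

- A superuser principal has been created for Cloudera Manager so that it can create other principals in the KDC, such as the cloudera-scm/admin principal created above.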
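The lifetime prerequisite above (max_life >= ticket_lifetime and max_renewable_life >= renew_lifetime) can be checked before running the wizard. A minimal sketch, assuming each value is a single number with an s/m/h/d suffix as used in this article; `to_seconds` and `check_lifetimes` are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Convert a simple duration such as 24h, 25h, 7d, 8d or 30m to seconds.
# Only a single number with an s/m/h/d suffix is handled in this sketch.
to_seconds() {
  local value=${1%[smhd]} unit=${1: -1}
  case $unit in
    s) echo "$value" ;;
    m) echo $(( value * 60 )) ;;
    h) echo $(( value * 3600 )) ;;
    d) echo $(( value * 86400 )) ;;
    *) echo "unsupported duration: $1" >&2; return 1 ;;
  esac
}

# Usage: check_lifetimes max_life max_renewable_life ticket_lifetime renew_lifetime
# The first two come from kdc.conf, the last two from krb5.conf.
check_lifetimes() {
  local max_life max_renew ticket renew status=0
  max_life=$(to_seconds "$1") || return 1
  max_renew=$(to_seconds "$2") || return 1
  ticket=$(to_seconds "$3") || return 1
  renew=$(to_seconds "$4") || return 1
  if (( max_life < ticket )); then
    echo "max_life ($1) is shorter than ticket_lifetime ($3)"; status=1
  fi
  if (( max_renew < renew )); then
    echo "max_renewable_life ($2) is shorter than renew_lifetime ($4)"; status=1
  fi
  return "$status"
}
```

With the values used in this article, `check_lifetimes 25h 8d 24h 7d` prints nothing and succeeds. The counter-example from the prerequisite (`check_lifetimes 7d 24h 8d 25h`) prints two warnings and returns non-zero.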

After double-checking all of the above, proceed with the following steps:

1.启用Kerberos

2.确认完成启用Kerberos前的准备

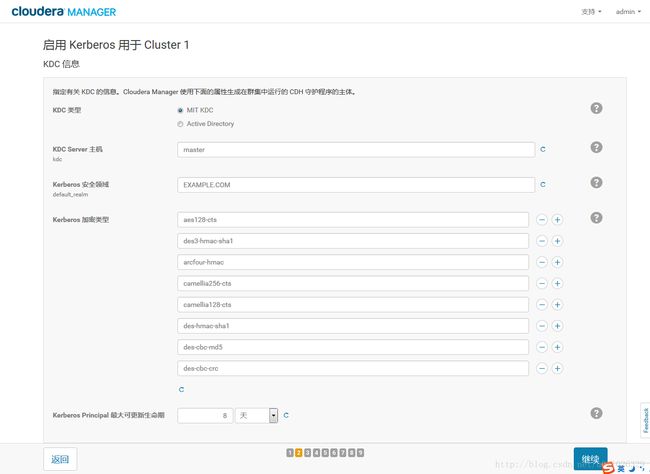

3.KDC信息

要注意的是:这里的 Kerberos Encryption Types 必须跟KDC实际支持的加密类型匹配(即kdc.conf中的值)

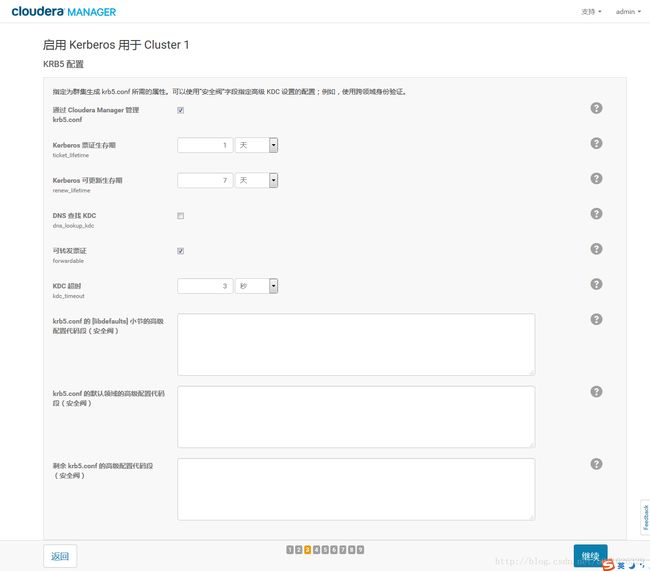

4.KDB5信息

5.KDC Account Manager

6.导入KDC Account Manager 凭据

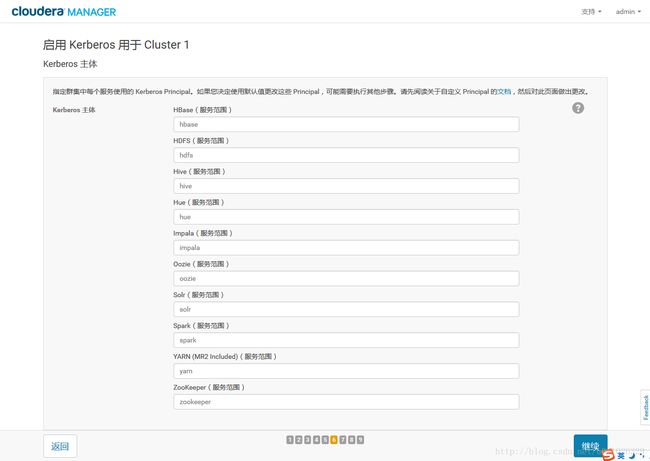

7.Kerberos Principals

8.Set HDFS Port

9.重启服务

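Cloudera Manager then restarts the cluster services automatically and reports that Kerberos is enabled once they are up. While enabling Kerberos, Cloudera Manager automatically:

- creates, for each account, one principal per node in the cluster;

- creates a keytab for each of these principals;

- deploys the keytab files to the corresponding nodes;

- adds the Kerberos-related settings to each service's configuration files.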
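To see what "one principal per account per node" means in practice, the sketch below lists the service principals one would expect, assuming the usual `service/host@REALM` naming. Cloudera Manager uses each host's fully qualified name; the short names `node1`/`node2` here are placeholders, and `expected_principals` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical illustration: list the per-host service principals one would
# expect for a set of service accounts, using the service/host@REALM form.
expected_principals() {
  local realm=$1 services=$2 hosts=$3 svc host
  for svc in $services; do
    for host in $hosts; do
      echo "${svc}/${host}@${realm}"
    done
  done
}
```

Usage: `expected_principals EXAMPLE.COM "hdfs yarn" "node1 node2"` prints four principals, which can be compared with the output of `kadmin.local -q "list_principals"` after the wizard finishes.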

启用之后访问集群的所有资源都需要使用相应的账号来访问,否则会无法通过 Kerberos 的 authenticatin。

除此之外,对于特定的服务需要做额外配置,本文只介绍HDFS、YARN、HBase,其余服务请参照官方文档自行进行配置。

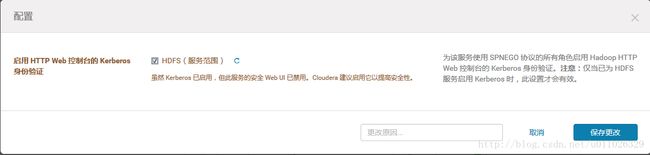

对于HDFS,Yarn,需要开启Web Concole 的认证,以防未认证用户访问资源界面:

Authentication for access to the HDFS, MapReduce, and YARN roles’ web consoles can be enabled via a configuration option for the appropriate service. To enable this authentication:

- From the Clusters tab, select the service (HDFS, MapReduce, or YARN) for which you want to enable authentication.

- Click the Configuration tab.

- Expand Service-Wide > Security, check the Enable Authentication for HTTP Web-Consoles property, and save your changes. A command is triggered to generate the new required credentials.

- Once the command finishes, restart all roles of that service.

Enabling HTTP web console authentication for HDFS:

Enabling HTTP web console authentication for YARN:

Enabling REST authentication for HBase:

Step 5: Create the HDFS Superuser


To be able to create home directories for users, you will need access to the HDFS superuser account. (CDH automatically created the HDFS superuser account on each cluster host during CDH installation.) When you enabled Kerberos for the HDFS service, you lost access to the default HDFS superuser account using sudo -u hdfs commands. Cloudera recommends you use a different user account as the superuser, not the default hdfs account.

Designating a Non-Default Superuser Group

To designate a different group of superusers instead of using the default hdfs account, follow these steps:

- Go to the Cloudera Manager Admin Console and navigate to the HDFS service.

- Click the Configuration tab.

- Select Scope > HDFS (Service-Wide).

- Select Category > Security.

- Locate the Superuser Group property and change the value to the appropriate group name for your environment. For example,

- Click Save Changes to commit the changes.

- Restart the HDFS service.

To enable your access to the superuser account now that Kerberos is enabled, you must now create a Kerberos principal or an Active Directory user whose first component is

[root@master ~]# sudo -u hdfs hdfs dfs -ls

17/08/29 18:15:02 WARN security.UserGroupInformation: PriviledgedActionException as:hdfs (auth:KERBEROS) cause:javax.security.sasl.SaslException: GSS initiate failed [Caused by GSSException: No valid credentials provided (Mechanism level: Failed to find any Kerberos tgt)]

17/08/29 18:15:02 WARN ipc.Client: Exception encountered while connecting to the server : javax.security.sasl.SaslException: GSS initiate failed [Caused by GSSException: No valid credentials provided (Mechanism level: Failed to find any Kerberos tgt)]

17/08/29 18:15:02 WARN security.UserGroupInformation: PriviledgedActionException as:hdfs (auth:KERBEROS) cause:java.io.IOException: javax.security.sasl.SaslException: GSS initiate failed [Caused by GSSException: No valid credentials provided (Mechanism level: Failed to find any Kerberos tgt)]

ls: Failed on local exception: java.io.IOException: javax.security.sasl.SaslException: GSS initiate failed [Caused by GSSException: No valid credentials provided (Mechanism level: Failed to find any Kerberos tgt)]; Host Details : local host is: "master/192.168.46.10"; destination host is: "master":8020;

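At this point, accessing HDFS with the principals generated by CM fails: the auto-generated principals have random passwords that the user does not know, while command-line access to HDFS requires first running kinit to log in and obtain a ticket.

So kinit hdfs/master@EXAMPLE.COM cannot proceed once it prompts for a password.

Instead, create an hdfs@EXAMPLE.COM principal, remember its password, and use it to access HDFS from the command line: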

[root@master ~]# kadmin.local -q "addprinc hdfs"

[root@master ~]# kinit hdfs

[root@master ~]# hdfs dfs -ls

Found 4 items

drwxr-xr-x - hdfs supergroup 0 2017-08-29 12:00 .Trash

drwx------ - hdfs supergroup 0 2017-08-29 10:49 .staging

drwxr-xr-x - hdfs supergroup 0 2017-08-29 10:35 QuasiMonteCarlo_1503974105326_258590930

drwxr-xr-x - hdfs supergroup 0 2017-08-29 10:35 QuasiMonteCarlo_1503974128088_828012046

[root@master ~]# kadmin.local -q "addprinc test"

// The test principal cannot access HDFS yet: its home directory /user/test does not exist

[root@master ~]# kinit test

[root@master ~]# hdfs dfs -ls

ls: `.': No such file or directory

[root@master ~]# kinit hdfs

[root@master ~]# hdfs dfs -mkdir /user/test

[root@master ~]# hdfs dfs -chown test:test /user/test

// Now the test principal can access HDFS

[root@master ~]# kinit test

[root@master ~]# hdfs dfs -ls

Step 6: Get or Create a Kerberos Principal for Each User Account

# kadmin.local -q "list_principals"

# kadmin.local -q "addprinc hive"

# kadmin.local -q "addprinc hbase"

# kadmin.local -q "addprinc impala"

# kadmin.local -q "addprinc spark"

# kadmin.local -q "addprinc oozie"

# kadmin.local -q "addprinc hue"

# kadmin.local -q "addprinc yarn"

# kadmin.local -q "addprinc zookeeper"

......

Step 7: Prepare the Cluster for Each User
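Part of preparing the cluster for each user is giving them an HDFS home directory, as was done by hand for the test user above. A sketch, assuming you have already run `kinit hdfs` and that each user's group has the same name as the user (as with test:test above); `create_home_dirs` and the `HDFS_CMD` dry-run override are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: create /user/<name> in HDFS for each user and hand it
# over to that user. Run after `kinit hdfs`; set HDFS_CMD=echo for a dry run.
create_home_dirs() {
  local hdfs=${HDFS_CMD:-hdfs} user
  for user in "$@"; do
    "$hdfs" dfs -mkdir -p "/user/${user}" || return 1
    # Assumption: the user's group has the same name as the user.
    "$hdfs" dfs -chown "${user}:${user}" "/user/${user}" || return 1
  done
}
```

Usage: `HDFS_CMD=echo create_home_dirs alice bob` prints the four hdfs commands it would run; drop `HDFS_CMD=echo` to execute them.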

Step 8: Verify that Kerberos Security is Working


Step 9: (Optional) Enable Authentication for HTTP Web Consoles for Hadoop Roles


Problems Encountered

MapReduce jobs submitted by the hdfs user fail to run

# kinit hdfs

# hadoop jar /opt/cloudera/parcels/CDH/lib/hadoop-mapreduce/hadoop-mapreduce-examples.jar pi 10 100

Error 1:

Diagnostics: Application application_1504017397148_0002 initialization failed (exitCode=255) with output: main : command provided 0

main : run as user is hdfs

main : requested yarn user is hdfs

Requested user hdfs is not whitelisted and has id 986,which is below the minimum allowed 1000

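Cause:

By default, a Linux user's UID must be at least 1000; otherwise the job cannot be submitted.

For example, submitting a job as hdfs (UID 986) produces the error above.

Solutions:

1. Change the user's UID with usermod -u.

2. Change the corresponding Cloudera setting: YARN > Configuration > min.user.id, and set it to a suitable value (such as 0); the default is 1000.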
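Before submitting jobs as a system account, you can check whether it would hit this rule. A sketch that only models the UID threshold (the whitelist mentioned in the error message is not handled); `can_run_containers` is a hypothetical helper and 1000 is the default min.user.id stated above.

```bash
#!/usr/bin/env bash
# Hypothetical pre-check: would YARN reject this user because its UID is
# below min.user.id? Returns 0 if allowed, 1 if too low, 2 if unknown user.
# Usage: can_run_containers <user> [min_uid]
can_run_containers() {
  local user=$1 min_uid=${2:-1000} uid
  uid=$(id -u "$user") || return 2
  if (( uid < min_uid )); then
    echo "$user has id $uid, below the minimum allowed $min_uid"
    return 1
  fi
}
```

Usage: `can_run_containers hdfs` reports the problem on a host where hdfs has UID 986, and `can_run_containers hdfs 0` passes, matching a min.user.id of 0.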

Error 2: the hdfs user is banned from running YARN containers

Diagnostics: Application application_1504019347914_0001 initialization failed (exitCode=255) with output: main : command provided 0

main : run as user is hdfs

main : requested yarn user is hdfs

Requested user hdfs is banned

Cause:

The hdfs user is banned in the YARN configuration.

Solution:

Change the corresponding Cloudera setting: YARN > Configuration > banned.users, and remove hdfs from the list.
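banned.users is a comma-separated list, so checking and editing it is simple string handling. A sketch; `is_banned` and `remove_banned` are hypothetical helpers, and the list in the usage line is only an illustrative value.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for a comma-separated banned.users value.

# Succeeds if $1 appears in the list $2.
is_banned() {
  local user=$1 banned=$2 entry
  local IFS=,
  for entry in $banned; do
    entry=${entry// /}              # tolerate "hdfs, yarn" style spacing
    [[ $entry == "$user" ]] && return 0
  done
  return 1
}

# Prints the list $2 with user $1 removed.
remove_banned() {
  local user=$1 banned=$2 entry
  local -a kept=()
  local IFS=,
  for entry in $banned; do
    entry=${entry// /}
    if [[ -n $entry && $entry != "$user" ]]; then
      kept+=("$entry")
    fi
  done
  echo "${kept[*]}"                 # joined with the first IFS character: ","
}
```

Usage: `remove_banned hdfs "hdfs,yarn,mapred,bin"` prints `yarn,mapred,bin`, the value to enter in the banned.users field.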

Beeline/JDBC test:

# beeline --verbose=true

beeline > !connect jdbc:hive2://master:10000/default;principal=hive/master@EXAMPLE.COM;

Error: Could not open client transport with JDBC Uri: jdbc:hive2://master:10000/default;principal=hive/master@EXAMPLE.COM;: Peer indicated failure: GSS initiate failed (state=08S01,code=0)

java.sql.SQLException: Could not open client transport with JDBC Uri: jdbc:hive2://master:10000/default;principal=hive/master@EXAMPLE.COM;: Peer indicated failure: GSS initiate failed

Caused by: org.apache.thrift.transport.TTransportException: Peer indicated failure: GSS initiate failed

When using JDBC with Kerberos, pay attention to the following:

1. The connection string format; the user and password arguments have no effect:

jdbc:hive2://

For example, with Connection con = DriverManager.getConnection("jdbc:hive2://host:10000/cdnlog;principal=hdfs/host@KERBEROS_HADOOP", "user1", "");

the user passed to HiveServer2 is not user1.

2. When a TGT cache exists, the user passed is the user of the current TGT (check it with klist).

3. The host name in the principal must match the HiveServer2 host name.

4. For the Beeline connection string, it should be "!connect jdbc:hive2://". Make sure the principal in it is Hive's principal, not the user's. And when you run kinit, use the user's keytab, not Hive's keytab.
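Points 3 and 4 can be baked into a small URL builder: the principal is always HiveServer2's `hive/<host>@REALM`, with the same host you connect to, while the user identity comes from your own TGT. A sketch; `hive_jdbc_url` is a hypothetical helper, and the defaults (port 10000, database `default`) follow the examples above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build a HiveServer2 JDBC URL for a Kerberized cluster.
# The principal is HiveServer2's service principal on the same host, not the
# user's; the user identity comes from the current TGT (see klist).
# Usage: hive_jdbc_url <host> <realm> [port] [database]
hive_jdbc_url() {
  local host=$1 realm=$2 port=${3:-10000} db=${4:-default}
  printf 'jdbc:hive2://%s:%s/%s;principal=hive/%s@%s\n' \
    "$host" "$port" "$db" "$host" "$realm"
}
```

Usage: after `kinit` as your own user, `beeline -u "$(hive_jdbc_url master EXAMPLE.COM)"` connects with the same URL as the `!connect` command in the successful session that follows.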

# kadmin.local -q "addprinc hive"

# kinit hive

# beeline --verbose=true

beeline > !connect jdbc:hive2://master:10000/default;principal=hive/master@EXAMPLE.COM;

scan complete in 4ms

Connecting to jdbc:hive2://master:10000/default;principal=hive/master@EXAMPLE.COM;

Connected to: Apache Hive (version 1.1.0-cdh5.11.1)

Driver: Hive JDBC (version 1.1.0-cdh5.11.1)

Transaction isolation: TRANSACTION_REPEATABLE_READ

0: jdbc:hive2://master:10000/default>

Common Kerberos Operations

Export a principal's keys to a keytab file:

# kadmin.local -q "ktadd -k /etc/openldap/ldap.keytab ldap/cdh1@JAVACHEN.COM"