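This article uses SIFT to extract feature points from the video frames and from the image to be detected. It then uses the correspondences between those feature points to locate the target object in the video, draws a green border around it, and displays the result.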
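The author's source code has not survived, so the exact matching strategy used to build those correspondences is unknown. A common approach is brute-force nearest-neighbour matching filtered by Lowe's ratio test (in OpenCV, typically `cv::BFMatcher` with `knnMatch` and k = 2). The dependency-free sketch below shows that idea on plain float vectors. `Descriptor`, `ratioTestMatch` and the 0.75 threshold are illustrative assumptions, not taken from the original program.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <utility>
#include <vector>

// A descriptor is a fixed-length float vector (SIFT descriptors have 128 entries).
using Descriptor = std::vector<float>;

// Squared Euclidean distance between two descriptors of equal length.
float squaredDistance(const Descriptor& a, const Descriptor& b) {
    assert(a.size() == b.size());
    float sum = 0.0f;
    for (std::size_t i = 0; i < a.size(); ++i) {
        const float d = a[i] - b[i];
        sum += d * d;
    }
    return sum;
}

// For every descriptor of the object image (query), find the two nearest
// descriptors in the video frame (train) by brute force. Keep the match only
// if the nearest is clearly closer than the second nearest (Lowe's ratio test).
// Returns (queryIndex, trainIndex) pairs.
std::vector<std::pair<int, int>> ratioTestMatch(const std::vector<Descriptor>& query,
                                                const std::vector<Descriptor>& train,
                                                float ratio = 0.75f) {
    std::vector<std::pair<int, int>> matches;
    if (train.size() < 2) return matches;  // the ratio test needs two neighbours
    for (std::size_t q = 0; q < query.size(); ++q) {
        float best = std::numeric_limits<float>::max();
        float second = std::numeric_limits<float>::max();
        int bestIdx = -1;
        for (std::size_t t = 0; t < train.size(); ++t) {
            const float d = squaredDistance(query[q], train[t]);
            if (d < best) {
                second = best;
                best = d;
                bestIdx = static_cast<int>(t);
            } else if (d < second) {
                second = d;
            }
        }
        // Distances are squared, so the ratio is squared as well.
        if (best < ratio * ratio * second) {
            matches.emplace_back(static_cast<int>(q), bestIdx);
        }
    }
    return matches;
}
```

Ambiguous features, such as one that matches two nearly identical frame features equally well, are discarded, which reduces the number of outliers passed on to the localisation step.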

Detection video

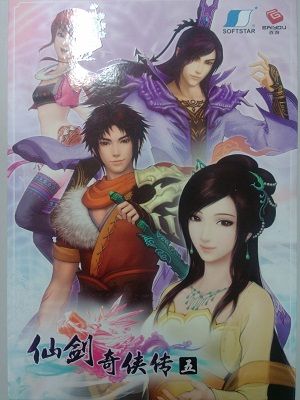

Video link

Image: the image to be detected (the box of the game 仙剑奇侠传5, Chinese Paladin 5)

Program source code

```cpp
#include
```
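The original listing was lost (only the `#include` line remains), so what follows is not the author's code. Once matches exist, the object's position is typically recovered by estimating a homography from the matched points (in OpenCV, `cv::findHomography` with `cv::RANSAC`) and mapping the four corners of the object image into the frame (`cv::perspectiveTransform`); the green border is the resulting quadrilateral. As a dependency-free sketch of the underlying math only, the code below solves for a homography from exactly four correspondences with the direct linear transform (no RANSAC, no outlier handling) and projects points through it. `Point2`, `Homography`, `solve8`, `homographyFrom4` and `project` are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <utility>

struct Point2 { double x, y; };
using Homography = std::array<double, 9>;  // row-major 3x3, last entry fixed to 1

// Solve the augmented 8x9 system [A | b] by Gaussian elimination with partial
// pivoting. Returns false if it is (near-)singular, e.g. three collinear points.
bool solve8(std::array<std::array<double, 9>, 8> m, std::array<double, 8>& h) {
    for (int col = 0; col < 8; ++col) {
        int pivot = col;
        for (int r = col + 1; r < 8; ++r)
            if (std::fabs(m[r][col]) > std::fabs(m[pivot][col])) pivot = r;
        if (std::fabs(m[pivot][col]) < 1e-12) return false;
        std::swap(m[col], m[pivot]);
        for (int r = col + 1; r < 8; ++r) {
            const double f = m[r][col] / m[col][col];
            for (int c = col; c < 9; ++c) m[r][c] -= f * m[col][c];
        }
    }
    for (int r = 7; r >= 0; --r) {  // back substitution
        double s = m[r][8];
        for (int c = r + 1; c < 8; ++c) s -= m[r][c] * h[c];
        h[r] = s / m[r][r];
    }
    return true;
}

// Estimate H (with h33 = 1) from four correspondences src[i] -> dst[i].
// Each pair gives two linear equations in the eight unknowns h11..h32.
bool homographyFrom4(const std::array<Point2, 4>& src,
                     const std::array<Point2, 4>& dst, Homography& H) {
    std::array<std::array<double, 9>, 8> m{};
    for (int i = 0; i < 4; ++i) {
        const double x = src[i].x, y = src[i].y, u = dst[i].x, v = dst[i].y;
        m[2 * i]     = {x, y, 1.0, 0.0, 0.0, 0.0, -x * u, -y * u, u};
        m[2 * i + 1] = {0.0, 0.0, 0.0, x, y, 1.0, -x * v, -y * v, v};
    }
    std::array<double, 8> h{};
    if (!solve8(m, h)) return false;
    for (int k = 0; k < 8; ++k) H[k] = h[k];
    H[8] = 1.0;
    return true;
}

// Map a point through H with the homogeneous divide.
Point2 project(const Homography& H, Point2 p) {
    const double w = H[6] * p.x + H[7] * p.y + H[8];
    assert(std::fabs(w) > 1e-12);
    return {(H[0] * p.x + H[1] * p.y + H[2]) / w,
            (H[3] * p.x + H[4] * p.y + H[5]) / w};
}
```

To draw the border, one would project the corners (0,0), (w,0), (w,h), (0,h) of the object image and connect the results with `cv::line` using `cv::Scalar(0, 255, 0)`, which is green in OpenCV's default BGR channel order. With real, noisy matches, a robust estimator over many correspondences is needed rather than four hand-picked points.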

To achieve real-time processing, SURF can be used instead of SIFT, although its detection results are not as good as SIFT's.
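Part of SURF's speed advantage comes from approximating Gaussian second-derivative filters with box filters, each of which can be evaluated in constant time from an integral image. The sketch below shows only that integral-image trick in plain C++; it is not a SURF implementation, and the `IntegralImage` class is a hypothetical illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Integral image padded with a leading row and column of zeros:
// I(r, c) = sum of img[y][x] over all y < r and x < c.
class IntegralImage {
public:
    // Assumes a rectangular image (every row has the same length).
    explicit IntegralImage(const std::vector<std::vector<int>>& img)
        : rows_(img.size()), cols_(img.empty() ? 0 : img[0].size()),
          sum_((rows_ + 1) * (cols_ + 1), 0LL) {
        for (std::size_t r = 0; r < rows_; ++r) {
            assert(img[r].size() == cols_);
            long long rowSum = 0;  // sum of img[r][0..c]
            for (std::size_t c = 0; c < cols_; ++c) {
                rowSum += img[r][c];
                at(r + 1, c + 1) = at(r, c + 1) + rowSum;
            }
        }
    }

    // Sum over rows [r0, r1) and columns [c0, c1) with four lookups,
    // so the cost does not depend on the size of the box.
    long long boxSum(std::size_t r0, std::size_t c0,
                     std::size_t r1, std::size_t c1) const {
        assert(r0 <= r1 && r1 <= rows_ && c0 <= c1 && c1 <= cols_);
        return get(r1, c1) - get(r0, c1) - get(r1, c0) + get(r0, c0);
    }

private:
    long long& at(std::size_t r, std::size_t c) { return sum_[r * (cols_ + 1) + c]; }
    long long get(std::size_t r, std::size_t c) const { return sum_[r * (cols_ + 1) + c]; }

    std::size_t rows_, cols_;
    std::vector<long long> sum_;
};
```

Because a box of any size costs the same four lookups, filters at larger scales are no more expensive than small ones, which is one reason SURF can process frames faster than SIFT's Gaussian pyramid.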

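The program in this article uses OpenCV 2.4.8. From version 2.4.5 onward, OpenCV can be used with VS2013 and the Image Watch plug-in, which displays images live while debugging. This makes results easy to inspect, with no need to scatter imshow() calls through the code just to view them.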

Image: Image Watch directly displays image data held in memory

For an explanation of how SIFT works, see the blog post SIFT算法详解 (SIFT Algorithm Explained in Detail).