Chapter 6 HBase API Operations (II): Data Encapsulation and Data Migration

Previous post: Chapter 6 HBase API Operations (II)

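1. Data encapsulation

Wrap the HBase connection in a ThreadLocal so that each thread works with its own connection.

First, create a utility class: HbaseUtil

Implementation: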

package studey.bigdate.util;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.Connection;
import org.apache.hadoop.hbase.client.ConnectionFactory;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.client.Table;
import org.apache.hadoop.hbase.util.Bytes;

import java.io.IOException;

/**
 * HBase utility class.
 */
public class HbaseUtil {

    // Holds one connection per thread.
    private static final ThreadLocal<Connection> connHolder = new ThreadLocal<Connection>();

    private HbaseUtil() {
    }

    /**
     * Creates the HBase connection for the current thread if it does not exist yet.
     */
    public static void makeHBaseConnection() throws IOException {
        // Check the current thread's slot rather than a shared static field.
        Connection conn = connHolder.get();
        if (conn == null) {
            Configuration conf = HBaseConfiguration.create();
            conf.set("hbase.zookeeper.quorum", "hadoop105");
            conn = ConnectionFactory.createConnection(conf);
            connHolder.set(conn);
        }
    }

    /**
     * Inserts one cell.
     *
     * @param tablename table name, including the namespace
     * @param rowkey    row key
     * @param family    column family
     * @param column    column qualifier
     * @param value     cell value
     * @throws Exception if the write fails
     */
    public static void insertData(String tablename, String rowkey, String family, String column, String value) throws Exception {
        Connection conn = connHolder.get();
        // try-with-resources closes the table even if put() throws.
        try (Table table = conn.getTable(TableName.valueOf(tablename))) {
            Put put = new Put(Bytes.toBytes(rowkey));
            put.addColumn(Bytes.toBytes(family), Bytes.toBytes(column), Bytes.toBytes(value));
            table.put(put);
        }
    }

    /**
     * Closes the current thread's connection and clears its slot.
     */
    public static void close() throws IOException {
        Connection conn = connHolder.get();
        if (conn != null) {
            conn.close();
            connHolder.remove();
        }
    }
}
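The per-thread holder idea behind HbaseUtil can be tried without a cluster. The following is only a minimal sketch: `FakeConnection` and `ThreadLocalHolderDemo` are hypothetical names invented for this illustration and are not part of HBase. It shows that repeated calls on one thread reuse the same object, while a different thread gets its own.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical stand-in for an HBase Connection, so the sketch runs without a cluster.
class FakeConnection implements AutoCloseable {
    private static final AtomicInteger COUNTER = new AtomicInteger();
    final int id = COUNTER.incrementAndGet();
    boolean closed = false;

    @Override
    public void close() {
        closed = true;
    }
}

// Same shape as HbaseUtil: one lazily created connection per thread.
class ThreadLocalHolderDemo {
    private static final ThreadLocal<FakeConnection> HOLDER = new ThreadLocal<>();

    static FakeConnection get() {
        FakeConnection conn = HOLDER.get();
        if (conn == null) {              // look at this thread's slot only
            conn = new FakeConnection();
            HOLDER.set(conn);
        }
        return conn;
    }

    static void close() {
        FakeConnection conn = HOLDER.get();
        if (conn != null) {
            conn.close();
            HOLDER.remove();             // the next get() on this thread creates a fresh one
        }
    }

    // Returns the id of the connection that a newly started thread receives.
    static int idSeenByNewThread() {
        final int[] seen = new int[1];
        Thread t = new Thread(() -> {
            seen[0] = get().id;
            close();
        });
        t.start();
        try {
            t.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return seen[0];
    }

    public static void main(String[] args) {
        FakeConnection first = get();
        System.out.println("same thread reuses it: " + (first == get()));
        System.out.println("other thread gets its own: " + (first.id != idSeenByNewThread()));
        close();
    }
}
```

Note that an HBase Connection is itself thread-safe and expensive to create, so sharing a single Connection across threads is also a common choice; the ThreadLocal variant mainly keeps each thread's open and close calls independent of the others.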

Next, call these methods from the main program:

TestHbaseAPI_4.java

package study.bigdate.hbase;

import studey.bigdate.util.HbaseUtil;

/**
 * Tests the HBase API.
 */
public class TestHbaseAPI_4 {

    public static void main(String[] args) throws Exception {
        // Create the connection
        HbaseUtil.makeHBaseConnection();
        try {
            // Insert data
            HbaseUtil.insertData("atguigu:student", "1002", "info", "name", "lisi");
        } finally {
            // Close the connection, even if the insert fails
            HbaseUtil.close();
        }
    }
}

Run the program and check the console output.

Then, on Linux, scan the table in the HBase shell to check whether the row was added:

hbase(main):006:0> scan 'atguigu:student'

ROW COLUMN+CELL

1002 column=info:name, timestamp=1579800170162, value=lisi

1 row(s) in 0.0680 seconds

hbase(main):007:0>

The scan output shows that the row was added successfully.
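The `timestamp` in the scan output is the cell version. Because insertData does not set a timestamp on the Put, the server assigns its current time in milliseconds since the Unix epoch. As a small sketch (the class name `CellTimestampDemo` is made up for this example), the value can be turned into a readable UTC time with the JDK alone:

```java
import java.time.Instant;

class CellTimestampDemo {
    // Converts a cell timestamp (milliseconds since the Unix epoch) to an ISO-8601 UTC string.
    static String toUtc(long cellTimestampMillis) {
        return Instant.ofEpochMilli(cellTimestampMillis).toString();
    }

    public static void main(String[] args) {
        // Timestamp taken from the scan output above.
        System.out.println(toUtc(1579800170162L)); // prints 2020-01-23T17:22:50.162Z
    }
}
```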

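2. MR: data migration

MapReduce

With the HBase Java API, MapReduce jobs can read from and write to HBase. For example, a MapReduce job can import data from the local file system into an HBase table, or read raw data from HBase and analyze it.

2.1 The official HBase-MapReduce integration

(1) Check the classpath that HBase MapReduce jobs need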

[root@hadoop105 hbase-1.3.1]# bin/hbase mapredcp

.......

.......

# the command prints a classpath made up of many jars

/usr/local/hadoop/module/hbase-1.3.1/lib/hbase-hadoop-compat-1.3.1.jar:/usr/local/hadoop/module/hbase-1.3.1/lib/netty-all-4.0.23.Final.jar:/usr/local/hadoop/module/hbase-1.3.1/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/module/hbase-1.3.1/lib/zookeeper-3.4.6.jar:/usr/local/hadoop/module/hbase-1.3.1/lib/guava-12.0.1.jar:/usr/local/hadoop/module/hbase-1.3.1/lib/hbase-server-1.3.1.jar:/usr/local/hadoop/module/hbase-1.3.1/lib/hbase-prefix-tree-1.3.1.jar:/usr/local/hadoop/module/hbase-1.3.1/lib/hbase-protocol-1.3.1.jar:/usr/local/hadoop/module/hbase-1.3.1/lib/hbase-common-1.3.1.jar:/usr/local/hadoop/module/hbase-1.3.1/lib/hbase-client-1.3.1.jar:/usr/local/hadoop/module/hbase-1.3.1/lib/htrace-core-3.1.0-incubating.jar:/usr/local/hadoop/module/hbase-1.3.1/lib/metrics-core-2.2.0.jar

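(2) Import the environment variables

Temporary (effective only in the current shell; run the following on the command line and adjust the paths to your own installation):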

$ export HBASE_HOME=/opt/module/hbase-1.3.1

$ export HADOOP_HOME=/opt/module/hadoop-2.7.2

$ export HADOOP_CLASSPATH=`${HBASE_HOME}/bin/hbase mapredcp`

Permanent: add the following to /etc/profile

export HBASE_HOME=/opt/module/hbase-1.3.1

export HADOOP_HOME=/opt/module/hadoop-2.7.2

Then add the following to hadoop-env.sh (note: place it after the for loop):

export HADOOP_CLASSPATH=$HADOOP_CLASSPATH:/usr/local/hadoop/module/hbase-1.3.1/lib/*
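The value that `hbase mapredcp` prints, and that ends up in HADOOP_CLASSPATH, is a single string of jar paths joined by `:`. When checking whether a particular jar made it onto the classpath, it can help to look at the entries one at a time. The following is just an illustrative sketch (the class `ClasspathDemo` is a made-up helper, not an HBase or Hadoop tool) that splits such a string and keeps the file name of each entry:

```java
import java.util.ArrayList;
import java.util.List;

class ClasspathDemo {
    // Splits a ':'-separated classpath and returns the file name of each non-empty entry.
    static List<String> jarNames(String classpath) {
        List<String> names = new ArrayList<>();
        for (String entry : classpath.split(":")) {
            if (!entry.isEmpty()) {
                names.add(entry.substring(entry.lastIndexOf('/') + 1));
            }
        }
        return names;
    }

    public static void main(String[] args) {
        // Use the real variable if it is set, otherwise two entries copied from the output above.
        String cp = System.getenv("HADOOP_CLASSPATH");
        if (cp == null) {
            cp = "/usr/local/hadoop/module/hbase-1.3.1/lib/hbase-client-1.3.1.jar"
               + ":/usr/local/hadoop/module/hbase-1.3.1/lib/zookeeper-3.4.6.jar";
        }
        for (String name : jarNames(cp)) {
            System.out.println(name);
        }
    }
}
```

With the hadoop-env.sh form, the entry ends in the wildcard `lib/*`, so the sketch would show `*` for that entry rather than individual jar names.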

Steps:

(1) First, in the HBase shell on Linux, create the table atguigu:user

hbase(main):001:0> create 'atguigu:user','info'

0 row(s) in 2.8620 seconds

=> Hbase::Table - atguigu:user

hbase(main):002:0>

Next, write the code in IDEA:

(2) Under the java source directory, create a package (com.study.bigdata.hbase)

Create the class TableApplication2 in that package

Code:

ScanDataMapper.java

package com.study.bigdata.hbase.mapper;

import org.apache.hadoop.hbase.Cell;
import org.apache.hadoop.hbase.CellUtil;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.client.Result;
import org.apache.hadoop.hbase.io.ImmutableBytesWritable;
import org.apache.hadoop.hbase.mapreduce.TableMapper;

import java.io.IOException;

public class ScanDataMapper extends TableMapper<ImmutableBytesWritable, Put> {

    @Override
    protected void map(ImmutableBytesWritable key, Result result, Context context)
            throws IOException, InterruptedException {
        // Called once per scanned row: copy every cell of the row into a Put with the same row key.
        Put put = new Put(key.get());
        for (Cell cell : result.rawCells()) {
            put.addColumn(
                    CellUtil.cloneFamily(cell),
                    CellUtil.cloneQualifier(cell),
                    CellUtil.cloneValue(cell)
            );
        }
        context.write(key, put);
    }
}

InsertDataReducer.java

package com.study.bigdata.hbase.reducer;

import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.io.ImmutableBytesWritable;
import org.apache.hadoop.hbase.mapreduce.TableReducer;
import org.apache.hadoop.io.NullWritable;

import java.io.IOException;

public class InsertDataReducer extends TableReducer<ImmutableBytesWritable, Put, NullWritable> {

    @Override
    protected void reduce(ImmutableBytesWritable key, Iterable<Put> values, Context context)
            throws IOException, InterruptedException {
        // Write every Put received for this row key to the output table.
        for (Put put : values) {
            context.write(NullWritable.get(), put);
        }
    }
}

HbaseMapperReduceTool.java

package com.study.bigdata.hbase.tool;

import com.study.bigdata.hbase.mapper.ScanDataMapper;
import com.study.bigdata.hbase.reducer.InsertDataReducer;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.client.Scan;
import org.apache.hadoop.hbase.io.ImmutableBytesWritable;
import org.apache.hadoop.hbase.mapreduce.TableMapReduceUtil;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.JobStatus;
import org.apache.hadoop.util.Tool;

public class HbaseMapperReduceTool implements Tool {

    // Configuration handed over by ToolRunner through setConf().
    private Configuration conf;

    public int run(String[] args) throws Exception {
        // Create the job from the injected configuration.
        Job job = Job.getInstance(getConf());
        job.setJarByClass(HbaseMapperReduceTool.class);

        // Mapper: scan the source table atguigu:student.
        TableMapReduceUtil.initTableMapperJob(
                "atguigu:student",
                new Scan(),
                ScanDataMapper.class,
                ImmutableBytesWritable.class,
                Put.class,
                job
        );

        // Reducer: write into the target table atguigu:user.
        TableMapReduceUtil.initTableReducerJob(
                "atguigu:user",
                InsertDataReducer.class,
                job
        );

        boolean flg = job.waitForCompletion(true);
        return flg ? JobStatus.State.SUCCEEDED.getValue() : JobStatus.State.FAILED.getValue();
    }

    public void setConf(Configuration conf) {
        this.conf = conf;
    }

    public Configuration getConf() {
        return conf;
    }
}

TableApplication2.java

package com.study.bigdata.hbase;

import com.study.bigdata.hbase.tool.HbaseMapperReduceTool;
import org.apache.hadoop.util.ToolRunner;

public class TableApplication2 {

    public static void main(String[] args) throws Exception {
        // ToolRunner sets up the configuration and runs the MapReduce tool.
        ToolRunner.run(new HbaseMapperReduceTool(), args);
    }
}
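The data flow of this job (the mapper emits one Put per source row, the shuffle groups the Puts by row key, the reducer writes them to the target table) can be pictured with plain Java collections. This is only a toy model under stated assumptions: a table is represented as a map from row key to a map of "family:qualifier" to value, and `MigrationFlowDemo` is a made-up class that does not use the HBase or Hadoop APIs.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class MigrationFlowDemo {
    // Toy model of the copy job: table = rowkey -> ("family:qualifier" -> value).
    static Map<String, Map<String, String>> migrate(Map<String, Map<String, String>> source) {
        // Map phase: for each source row, emit (rowkey, put) where the put copies all cells.
        // Shuffle: emitted puts are grouped by row key; TreeMap keeps the keys sorted.
        Map<String, List<Map<String, String>>> shuffled = new TreeMap<>();
        for (Map.Entry<String, Map<String, String>> row : source.entrySet()) {
            Map<String, String> put = new LinkedHashMap<>(row.getValue());
            shuffled.computeIfAbsent(row.getKey(), k -> new ArrayList<>()).add(put);
        }

        // Reduce phase: write every put of each key into the target table unchanged.
        Map<String, Map<String, String>> target = new TreeMap<>();
        for (Map.Entry<String, List<Map<String, String>>> group : shuffled.entrySet()) {
            for (Map<String, String> put : group.getValue()) {
                target.computeIfAbsent(group.getKey(), k -> new LinkedHashMap<>()).putAll(put);
            }
        }
        return target;
    }

    public static void main(String[] args) {
        // Stand-in for atguigu:student with the row inserted earlier.
        Map<String, Map<String, String>> student = new TreeMap<>();
        Map<String, String> cells = new LinkedHashMap<>();
        cells.put("info:name", "lisi");
        student.put("1002", cells);

        // Stand-in for atguigu:user after the job has run.
        System.out.println(migrate(student)); // prints {1002={info:name=lisi}}
    }
}
```

Since the reducer only forwards what it receives, the job is effectively a table copy; the reduce step is where any per-row transformation would go if one were needed.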

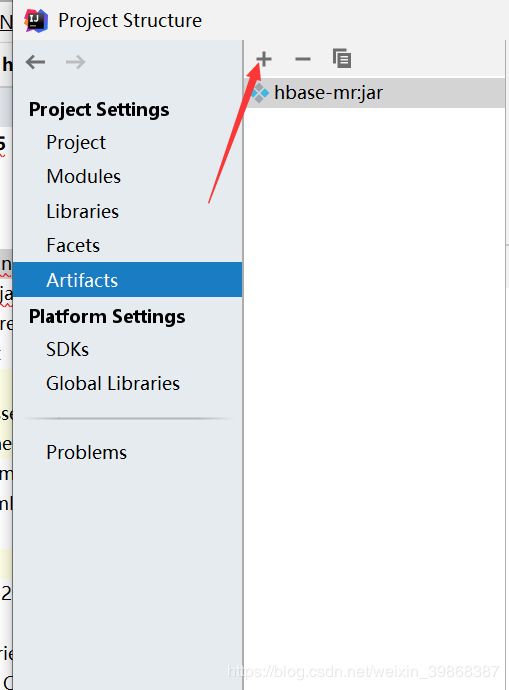

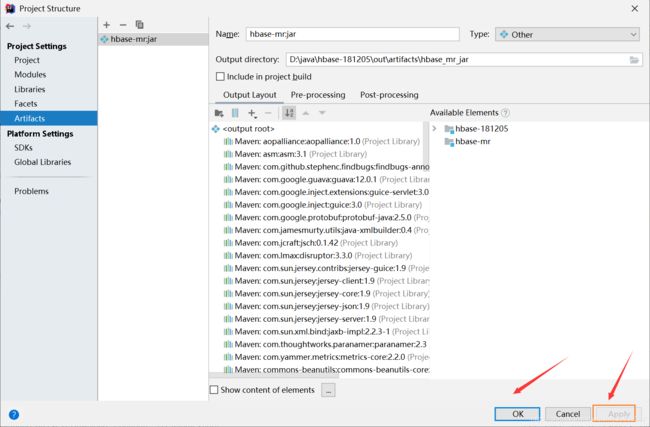

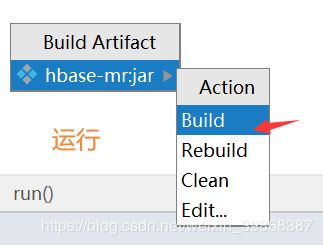

Configure the environment:

Successful run:

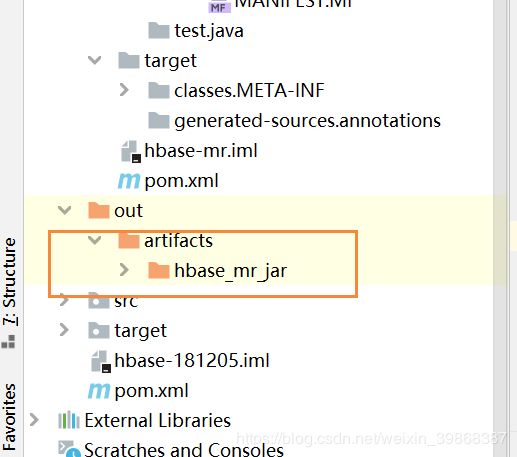

Once the jar has been built, copy it to the Linux environment and run it from the command line.

[root@hadoop107 ~]# cd /usr/module/

[root@hadoop107 module]# ll

total 8

drwxr-xr-x. 2 root root 4096 Jan 23 23:40 hbase_mr_jar

[root@hadoop107 module]#

Run the command:

[root@hadoop107 hbase-1.3.1]# yarn jar /usr/module/hbase_mr_jar/hbase-mr.jar