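Baidu Apollo Autonomous-Vehicle Lane Line Detection Challenge (Part 2)

Baidu Apollo Lane Line Segmentation Competition, Part 2 (Model Construction)

Baidu Apollo Lane Line Segmentation Competition, Part 1 (Data Processing)

Baidu Apollo Lane Line Segmentation Competition, Part 3 (Model Training)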

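This implementation currently covers two common segmentation models, Unet and deeplabv3plus, and fuses the two models at the end (the Baidu competition is scored mainly on segmentation mIoU and puts little weight on real-time performance). Unet uses resnet101 as its backbone and deeplabv3plus uses Xception65. The backbone can be swapped freely, and pretrained weights for common backbones are available in PyTorch and can be loaded to speed up training.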
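Since the ranking depends on mIoU, it helps to have the metric written down. The sketch below uses the usual definition (per-class intersection over union, averaged over classes) on flat lists of class ids. The function names, the `num_classes` argument, and the rule of skipping classes that appear in neither prediction nor label are assumptions for illustration; the competition's official scoring script may differ in such details.

```python
# Sketch of mean IoU over flat lists of class ids (pure Python, no NumPy).
# Function names and the "skip absent classes" rule are illustrative assumptions.

def confusion_matrix(pred, label, num_classes):
    """Count how often each (label, prediction) class pair occurs."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for p, t in zip(pred, label):
        matrix[t][p] += 1
    return matrix


def mean_iou(pred, label, num_classes):
    """Average IoU over the classes that occur in the prediction or the label."""
    matrix = confusion_matrix(pred, label, num_classes)
    ious = []
    for c in range(num_classes):
        tp = matrix[c][c]
        fn = sum(matrix[c]) - tp                                 # label c, predicted as another class
        fp = sum(matrix[r][c] for r in range(num_classes)) - tp  # predicted c, label is another class
        union = tp + fp + fn
        if union > 0:                                            # skip classes absent from both
            ious.append(tp / union)
    return sum(ious) / len(ious) if ious else 0.0


label = [0, 0, 1, 1, 2, 2]
pred = [0, 1, 1, 1, 2, 0]
print(mean_iou(pred, label, num_classes=3))
```

In this toy case the per-class IoUs are 1/3, 2/3 and 1/2. For real images the same counts would be accumulated over every pixel of the validation set before dividing.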

I. Unet

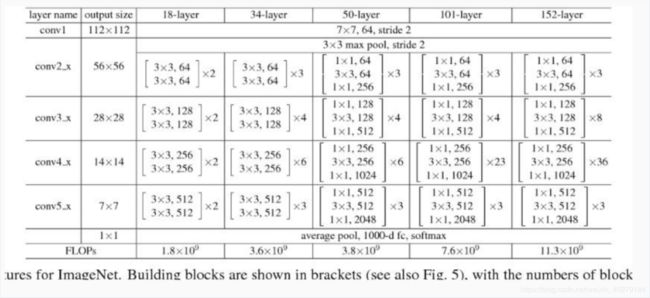

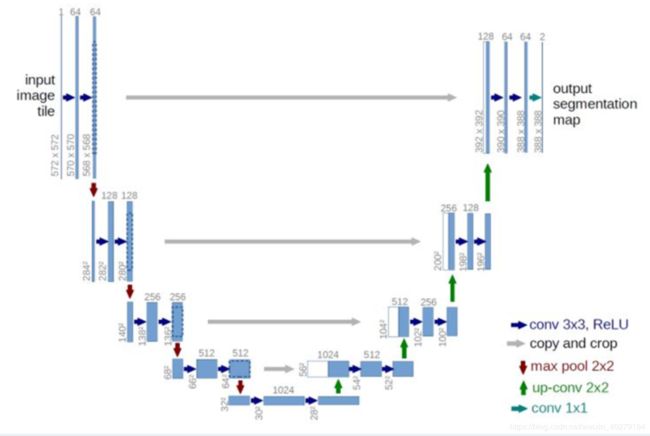

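The parameters of the ResNet family are shown in the figure below. The Unet model is split into encoder blocks and decoder blocks; ResNet with its final fully connected layer removed serves as the Unet backbone. The decoder blocks upsample with transposed convolutions, stopping at 1/4 of the original image size. When the loss is computed, the final output is first bilinearly interpolated to the original image size and then compared with the label.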
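The 1/4 figure can be checked with the output-size formulas documented for `nn.Conv2d` and `nn.ConvTranspose2d`. The sketch below traces one spatial axis through the layer settings used in this article's code (3x3 stride-2 conv, 2x2 max pool, three stride-2 stages, then three 2x transposed convolutions). The helper names are made up for illustration.

```python
# Trace one spatial axis through the encoder/decoder using PyTorch's
# documented output-size formulas. Helper names are hypothetical.

def conv_out(size, kernel, stride=1, padding=0, dilation=1):
    """Output length of nn.Conv2d / nn.MaxPool2d along one spatial axis."""
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


def conv_transpose_out(size, kernel, stride=1, padding=0, output_padding=0, dilation=1):
    """Output length of nn.ConvTranspose2d along one spatial axis."""
    return (size - 1) * stride - 2 * padding + dilation * (kernel - 1) + output_padding + 1


def unet_sizes(size):
    """Return (encoder feature sizes, decoder feature sizes) for one axis."""
    s = conv_out(size, kernel=3, stride=1, padding=1)   # conv0, full resolution
    s = conv_out(s, kernel=3, stride=2, padding=1)      # conv1, 1/2
    s = conv_out(s, kernel=2, stride=2)                 # max pool, 1/4
    encoder = [s]
    for _ in range(3):                                  # three stride-2 ResNet stages
        s = conv_out(s, kernel=1, stride=2)
        encoder.append(s)                               # 1/8, 1/16, 1/32
    decoder = []
    for _ in range(3):                                  # three 2x transposed convolutions
        s = conv_transpose_out(s, kernel=2, stride=2)
        decoder.append(s)
    return encoder, decoder


encoder, decoder = unet_sizes(64)
print(encoder, decoder)
```

For a 64-pixel axis the decoder ends at 16 pixels, i.e. 1/4 of the input, which is why the final bilinear interpolation to the label size is still needed.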

ResNet family:

Unet structure:

The corresponding file is models.Unet_ResNet101.py; the code is as follows:

```python
# coding: utf-8
# @author: Jiangnan He
# @date: 2019.12.10 18:21
'''
resnet101-unet

1. Upsampling still uses transposed convolution.
2. High- and low-level features are fused with torch.cat() instead of the "+" used in FCN.
3. resnet101 downsamples to 1/32, i.e. 5 downsampling steps. This implementation performs
   3 high/low-level feature fusions, so the final heatmap is 1/4 of the input image.
4. For the loss against the label, the output heatmap is upsampled directly to the image size.

layer0  input
  ↓
layer1  conv3x3            ch=64   (1/2)
  ↓
layer2  reslayer(maxpool)  ch=256  (1/4)  ------ 256+256   --- conv3 ch=256
  ↓                                                ↑ upconv2
layer3  reslayer           ch=512  (1/8)  ------ 512+512   --- conv3 ch=512
  ↓                                                ↑ upconv2
layer4  reslayer           ch=1024 (1/16) ------ 1024+1024 --- conv3 ch=1024
  ↓                                                ↑ upconv2
layer5  reslayer           ch=2048 (1/32) ----------------------
'''
import torch
import torch.nn as nn
from torch.nn import functional as F
from torchsummary import summary


class Block(nn.Module):
    """conv -> BN -> ReLU"""

    def __init__(self, in_ch, out_ch, ksize=3, stride=1, padding=1):
        super(Block, self).__init__()
        self.conv1 = nn.Conv2d(in_ch, out_ch, kernel_size=ksize, stride=stride, padding=padding)
        self.bn = nn.BatchNorm2d(out_ch)
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x):
        return self.relu(self.bn(self.conv1(x)))


def make_layers(in_channels, layer_list):
    layers = []
    for v in layer_list:
        layers += [Block(in_channels, v)]
        in_channels = v
    return nn.Sequential(*layers)


# Layer: a stack of Blocks
class Layer(nn.Module):
    def __init__(self, in_channels, layer_list):
        super(Layer, self).__init__()
        self.layer = make_layers(in_channels, layer_list)

    def forward(self, x):
        return self.layer(x)


# Residual block
class ResBlock(nn.Module):
    def __init__(self, ch_list, downsample, Res):  # ch_list = [in_ch, ch, out_ch]
        super(ResBlock, self).__init__()
        self.res = Res  # True: the skip path has a 1x1 conv; False: identity skip
        # Only the block with a conv on the skip path may downsample
        stride = 2 if (Res and downsample) else 1
        self.firconv1x1 = Block(ch_list[0], ch_list[1], 1, stride, 0)  # first 1x1 conv
        self.conv3x3 = Block(ch_list[1], ch_list[1], 3, 1, 1)          # 3x3 conv
        self.secconv1x1 = Block(ch_list[1], ch_list[2], 1, 1, 0)       # second 1x1 conv
        # 1x1 conv on the skip path, with the same stride as the main path
        self.resconv1x1 = Block(ch_list[0], ch_list[2], 1, stride, 0) if Res else None

    def forward(self, x):
        residual = self.resconv1x1(x) if self.res else x
        f3 = self.secconv1x1(self.conv3x3(self.firconv1x1(x)))
        # Out-of-place add: "f3 += residual" would overwrite the output of the
        # in-place ReLU, which autograd still needs in the backward pass
        return f3 + residual


# Res layer: one block with a conv skip followed by numBotBlock identity-skip blocks
class ResLayer(nn.Module):
    def __init__(self, ch_list1, ch_list2, downsample, numBotBlock):
        super(ResLayer, self).__init__()
        self.resb = ResBlock(ch_list1, downsample, True)
        # Build a new block per repetition; reusing one instance would share its weights
        self.BoB = nn.Sequential(*[ResBlock(ch_list2, False, False) for _ in range(numBotBlock)])

    def forward(self, x):
        return self.BoB(self.resb(x))


# resnet101
class ResNet101(nn.Module):
    def __init__(self):
        super(ResNet101, self).__init__()
        self.conv0 = nn.Conv2d(3, 64, kernel_size=3, stride=1, padding=1)
        self.conv1 = nn.Conv2d(64, 64, kernel_size=3, stride=2, padding=1)  # 1/2
        self.pool = nn.MaxPool2d(kernel_size=2, stride=2)                   # 1/4
        # Note: the standard ResNet-101 has 3/4/23/3 blocks per stage;
        # the repeat counts below are the original author's.
        self.layer1 = ResLayer([64, 64, 256], [256, 64, 256], False, 4)
        self.layer2 = ResLayer([256, 128, 512], [512, 128, 512], True, 3)       # 1/8  ch=512
        self.layer3 = ResLayer([512, 256, 1024], [1024, 256, 1024], True, 23)   # 1/16 ch=1024
        self.layer4 = ResLayer([1024, 512, 2048], [2048, 512, 2048], True, 6)   # 1/32 ch=2048
        # Weight initialization for conv and BN layers
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                # mode="fan_in" keeps the variance stable in the forward pass,
                # mode="fan_out" keeps it stable in the backward pass
                nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')
            elif isinstance(m, nn.BatchNorm2d):
                nn.init.constant_(m.weight, 1)
                nn.init.constant_(m.bias, 0)

    def forward(self, x):
        layer0 = self.conv0(x)                    # 1
        layer1 = self.conv1(layer0)               # 1/2
        layer2 = self.layer1(self.pool(layer1))   # 1/4
        layer3 = self.layer2(layer2)              # 1/8
        layer4 = self.layer3(layer3)              # 1/16
        layer5 = self.layer4(layer4)              # 1/32
        return [layer2, layer3, layer4, layer5]


# Upsampling module: upsample the deeper feature and fuse it with the shallower one
class Upsample(nn.Module):
    def __init__(self, in_ch1, in_ch2, upsampleratio=2):  # e.g. layer5 2048, layer4 1024
        super(Upsample, self).__init__()
        self.conv1 = Block(in_ch1, in_ch2)       # deeper feature: 2048 --> 1024
        self.conv2 = Block(in_ch2, in_ch2)       # shallower feature: 1024 --> 1024
        self.conv3 = Block(in_ch2 * 2, in_ch2)   # after cat: 2048 --> 1024
        self.trans_conv = nn.ConvTranspose2d(in_ch2, in_ch2, upsampleratio, stride=upsampleratio)

    def forward(self, feature1, feature2):  # deeper feature, shallower feature
        upsampled = self.trans_conv(self.conv1(feature1))
        return self.conv3(torch.cat([upsampled, self.conv2(feature2)], dim=1))


class UnetDecode(nn.Module):
    # in_ch: channel count after the backbone; out_ch: channel count after upsampling
    def __init__(self, n, in_ch, out_ch, upsratio, trans_conv=False):
        super(UnetDecode, self).__init__()
        self.conv1 = Layer(in_ch, [out_ch] * n)  # n Blocks
        # upsratio: upsampling factor
        self.trans_conv = nn.ConvTranspose2d(out_ch, out_ch, upsratio, stride=upsratio)

    def forward(self, x):
        return self.trans_conv(self.conv1(x))


class Unet_resnet101(nn.Module):
    def __init__(self, n, in_ch, out_ch, upsratio):
        super(Unet_resnet101, self).__init__()
        self.encode = ResNet101()
        self.catup1 = Upsample(2048, 1024)
        self.catup2 = Upsample(1024, 512)
        self.catup3 = Upsample(512, 256)
        self.decode = UnetDecode(n, in_ch, out_ch, upsratio)  # kept from the original; not used in forward
        self.classfier = nn.Conv2d(256, 10, 3, padding=1)     # 10 output channels

    def forward(self, x):
        size = x.shape[-2:]
        layer2, layer3, layer4, layer5 = self.encode(x)
        # For a 64x64 input: layer2 256x16x16, layer3 512x8x8, layer4 1024x4x4, layer5 2048x2x2
        layer4 = self.catup1(layer5, layer4)
        layer3 = self.catup2(layer4, layer3)
        layer2 = self.catup3(layer3, layer2)
        # Bilinear interpolation from 1/4 resolution back to the input size
        return F.interpolate(self.classfier(layer2), size=size, mode='bilinear', align_corners=False)


if __name__ == "__main__":
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    model = Unet_resnet101(4, 2048, 64, 4).to(device)
    x = torch.randn(1, 3, 64, 64, device=device)
    model.eval()
    with torch.no_grad():
        y = model(x)
    print("y.size", y.size())
    # torchsummary defaults to device="cuda", so pass the device explicitly
    summary(model, (3, 64, 64), device=device.type)
```
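One detail worth keeping in mind when stacking repeated blocks such as the identity-skip blocks inside `ResLayer`: every repetition needs its own module instance. Appending a single instance to a list several times yields one object referenced several times, and in PyTorch a module that appears repeatedly in an `nn.Sequential` has only one set of weights. The plain-Python sketch below shows the identity issue with a hypothetical stand-in class `FakeBlock` (not part of the model code).

```python
# Plain-Python illustration of shared vs. independent block instances.
# FakeBlock is a hypothetical stand-in for a layer that owns one weight.

class FakeBlock:
    def __init__(self):
        self.weight = 0.0


def stack_shared(num_blocks):
    """Reuse one instance: every list entry is the same object."""
    block = FakeBlock()
    return [block for _ in range(num_blocks)]


def stack_independent(num_blocks):
    """Create a fresh instance per repetition."""
    return [FakeBlock() for _ in range(num_blocks)]


shared = stack_shared(3)
independent = stack_independent(3)

# "Update" only the first block of each stack
shared[0].weight = 1.0
independent[0].weight = 1.0

print([b.weight for b in shared])        # all entries change together
print([b.weight for b in independent])   # only the first entry changes
```

With shared instances a layer that looks 23 blocks deep has the parameters of a single block, so its capacity differs from the architecture it is meant to reproduce.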

II. Deeplabv3plus

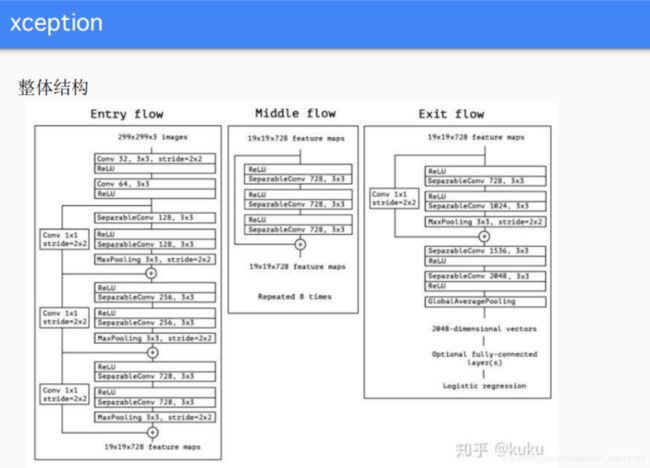

The Xception family includes Xception41, Xception65 and Xception71. The structure of Xception41 is shown in the figure; this article uses Xception65 as the backbone.

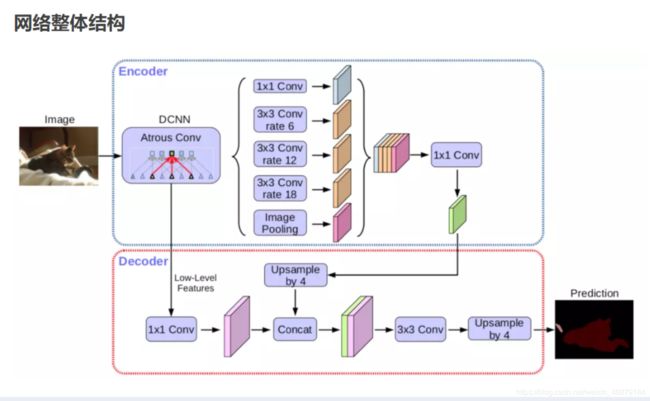

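The Deeplabv3plus structure is as follows. Unlike the Unet above, its convolutions are depthwise separable convolutions, and upsampling is done with bilinear interpolation.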
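To see why depthwise separable convolutions are attractive, the parameter counts can be compared directly. The sketch below counts weights for a standard k x k convolution and for a depthwise k x k convolution (`groups=in_ch`) followed by a 1x1 pointwise convolution. The function names are made up, and 728 channels is used only as a representative Xception width.

```python
# Parameter counts: standard conv vs. depthwise separable conv.
# Function names are hypothetical; the formulas follow from the weight shapes.

def conv_params(in_ch, out_ch, kernel, bias=True):
    """Weights of a standard k x k convolution."""
    return kernel * kernel * in_ch * out_ch + (out_ch if bias else 0)


def separable_conv_params(in_ch, out_ch, kernel, bias=True):
    """Depthwise k x k conv (one filter per input channel) plus 1x1 pointwise conv."""
    depthwise = kernel * kernel * in_ch + (in_ch if bias else 0)
    pointwise = in_ch * out_ch + (out_ch if bias else 0)
    return depthwise + pointwise


standard = conv_params(728, 728, 3, bias=False)
separable = separable_conv_params(728, 728, 3, bias=False)
print(standard, separable, round(standard / separable, 2))
```

For a 3x3 kernel at this width the separable version needs roughly one ninth of the weights, since the cost is dominated by the 1x1 pointwise step.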

The corresponding file is models.deeplabv3p_Xception65.py; the code is as follows:

```python
# coding: utf-8
# @author: Jiangnan He
# @date: 2019.12.17 15:03
'''
This implementation is deeplabv3+ with Xception_65 as the backbone.
'''
import torch
import torch.nn as nn
from torch.nn import functional as F
from torchsummary import summary


# Depthwise separable convolution: split the convolution into in_ch groups,
# then fuse the channels with a 1x1 convolution
class depthwiseconv(nn.Module):
    def __init__(self, in_ch, out_ch, ksize=3, stride=1, padding=1):
        super(depthwiseconv, self).__init__()
        self.depth_conv = nn.Conv2d(
            in_channels=in_ch,
            out_channels=in_ch,
            kernel_size=ksize,
            stride=stride,
            padding=padding,
            groups=in_ch
        )
        self.point_conv = nn.Conv2d(
            in_channels=in_ch,
            out_channels=out_ch,
            kernel_size=1,
            stride=1,
            padding=0,
            groups=1
        )

    def forward(self, x):
        return self.point_conv(self.depth_conv(x))


# Block: (separable) conv -> BN -> ReLU
class Block(nn.Module):
    def __init__(self, in_ch, out_ch, ksize=3, stride=1, padding=1, sep=True):
        super(Block, self).__init__()
        # sep=True: depthwise separable conv; sep=False: ordinary conv
        if sep:
            self.conv = depthwiseconv(in_ch, out_ch, ksize, stride, padding)
        else:
            self.conv = nn.Conv2d(in_ch, out_ch, kernel_size=ksize, stride=stride,
                                  padding=padding, bias=False)
        self.Bn = nn.BatchNorm2d(out_ch)
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x):
        return self.relu(self.Bn(self.conv(x)))


# Layer: three Blocks plus a 1x1 conv skip path, concatenated along channels,
# so the output has 2 * out_ch channels
class Layer(nn.Module):
    def __init__(self, in_ch, out_ch, ksize, stride, padding=1, downsample=True):  # e.g. 64 128 3 1 1
        super(Layer, self).__init__()
        # When downsampling, the last block and the skip conv both use stride 2
        self.layer = nn.Sequential(
            Block(in_ch, out_ch, ksize, stride, padding),
            Block(out_ch, out_ch, ksize, stride, padding),
            Block(out_ch, out_ch, ksize, 2 if downsample else stride, padding),
        )
        self.skipconv = nn.Conv2d(in_ch, out_ch, kernel_size=1,
                                  stride=2 if downsample else 1, padding=0)

    def forward(self, x):
        return torch.cat([self.layer(x), self.skipconv(x)], dim=1)


# Xception as the backbone
class DCNN(nn.Module):
    def __init__(self):
        super(DCNN, self).__init__()
        # entry flow
        self.conv1 = Block(3, 32, 3, 2, 1, False)        # conv, 1/2
        self.conv2 = Block(32, 64, 3, 1, 1, False)       # conv
        self.layer1 = Layer(64, 128, 3, 1, 1, True)      # out ch=256,  1/4
        self.layer2 = Layer(256, 256, 3, 1, 1, True)     # out ch=512,  1/8
        self.layer3 = Layer(512, 728, 3, 1, 1, True)     # out ch=1456, 1/16
        # middle flow: repeated 16 times
        self.layer4 = self.make_layer(16)
        # exit flow
        self.layer5 = Layer(1456, 1024, 3, 1, 1, True)   # out ch=2048, 1/32
        self.conv3 = Block(2048, 1536, 3, 1, 1, True)    # sepconv
        self.conv4 = Block(1536, 1536, 3, 1, 1, True)    # sepconv
        self.conv5 = Block(1536, 2048, 3, 1, 1, True)    # sepconv

    def make_layer(self, numlayer):
        # Build a new Layer per repetition; reusing one instance would share its weights
        return nn.Sequential(*[Layer(1456, 728, 3, 1, 1, False) for _ in range(numlayer)])

    def forward(self, x):
        # entry flow
        conv1 = self.conv1(x)
        conv2 = self.conv2(conv1)
        layer1 = self.layer1(conv2)
        layer2 = self.layer2(layer1)
        layer3 = self.layer3(layer2)
        # middle flow
        layer4 = self.layer4(layer3)
        # exit flow
        layer5 = self.layer5(layer4)
        conv3 = self.conv3(layer5)
        conv4 = self.conv4(conv3)
        conv5 = self.conv5(conv4)
        # low-level feature (1/8, 512 ch) and high-level feature (1/32, 2048 ch)
        return layer2, conv5


class ASPPConv(nn.Sequential):
    def __init__(self, in_channels, out_channels, dilation):
        modules = [
            nn.Conv2d(in_channels, out_channels, 3, padding=dilation, dilation=dilation, bias=False),
            nn.BatchNorm2d(out_channels),
            nn.ReLU()
        ]
        super(ASPPConv, self).__init__(*modules)


class ASPPPooling(nn.Sequential):
    def __init__(self, in_channels, out_channels):
        super(ASPPPooling, self).__init__(
            nn.AdaptiveAvgPool2d(1),
            nn.Conv2d(in_channels, out_channels, 1, bias=False),
            nn.BatchNorm2d(out_channels),
            nn.ReLU())

    def forward(self, x):
        size = x.shape[-2:]
        for mod in self:
            x = mod(x)
        return F.interpolate(x, size=size, mode='bilinear', align_corners=False)


class ASPP(nn.Module):
    def __init__(self, in_channels):
        super(ASPP, self).__init__()
        out_channels = 256
        modules = []
        modules.append(nn.Sequential(
            nn.Conv2d(in_channels, out_channels, 1, bias=False),
            nn.BatchNorm2d(out_channels),
            nn.ReLU()))
        rate1, rate2, rate3 = (6, 12, 18)
        modules.append(ASPPConv(in_channels, out_channels, rate1))
        modules.append(ASPPConv(in_channels, out_channels, rate2))
        modules.append(ASPPConv(in_channels, out_channels, rate3))
        modules.append(ASPPPooling(in_channels, out_channels))
        self.convs = nn.ModuleList(modules)
        self.project = nn.Sequential(
            nn.Conv2d(5 * out_channels, out_channels, 1, bias=False),
            nn.BatchNorm2d(out_channels),
            nn.ReLU(),
            nn.Dropout(0.5))

    def forward(self, x):
        res = [conv(x) for conv in self.convs]
        res = torch.cat(res, dim=1)
        return self.project(res)


class deeplabv3p(nn.Module):
    def __init__(self):
        super(deeplabv3p, self).__init__()
        self.DCNN = DCNN()
        self.ASPP = ASPP(2048)
        self.conv1 = Block(512, 256, ksize=1, padding=0, sep=False)
        # 3x3 conv producing the 8 class channels. Note that Block also applies
        # BN + ReLU, so these outputs are non-negative; a plain nn.Conv2d is the
        # more common choice for a final classifier.
        self.conv2 = Block(2 * 256, 8, ksize=3, padding=1, sep=False)
        self.conv3 = Block(256, 256, ksize=1, padding=0, sep=False)
        # Parameter initialization
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                # mode="fan_in" keeps the variance stable in the forward pass,
                # mode="fan_out" keeps it stable in the backward pass
                nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')
            elif isinstance(m, nn.BatchNorm2d):
                nn.init.constant_(m.weight, 1)
                nn.init.constant_(m.bias, 0)

    def forward(self, x):
        lowfeature, out = self.DCNN(x)
        lowfeature = self.conv1(lowfeature)           # 1/8, 256 ch
        f1 = self.conv3(self.ASPP(out))               # 1/32, 256 ch
        # 1/32 -> 1/8 so that it matches the low-level feature
        highfeature = F.interpolate(f1, scale_factor=4, mode='bilinear', align_corners=False)
        out1 = torch.cat([lowfeature, highfeature], dim=1)
        # 1/8 -> full resolution
        return F.interpolate(self.conv2(out1), scale_factor=8, mode='bilinear', align_corners=False)


if __name__ == "__main__":
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    x = torch.randn(1, 3, 128, 384, device=device)
    model = deeplabv3p().to(device)
    model.eval()
    with torch.no_grad():
        y = model(x)
    print(y.size(), "y.size")
    # summary(model, (3, 128, 384), device=device.type)
```
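Because the decoder upsamples by fixed factors (x4, then x8) instead of to an explicit target size, the input height and width have to survive five stride-2 stages cleanly. The sketch below traces one spatial axis through the layout used in the code above (low-level feature at 1/8, ASPP input at 1/32) and also checks that the ASPP branches with `padding=dilation` keep the spatial size. The helper names are hypothetical.

```python
# Trace one spatial axis through the DeepLabv3+ layout used in this article.
# Helper names are hypothetical; conv_out is PyTorch's documented Conv2d formula.

def conv_out(size, kernel, stride=1, padding=0, dilation=1):
    """Output length of nn.Conv2d along one spatial axis."""
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


def deeplab_sizes(size):
    """Return (low_level, aspp_input, upsampled_high, output) for one axis."""
    s = size
    stages = []
    for _ in range(5):                       # five stride-2 stages: 1/2 ... 1/32
        s = conv_out(s, kernel=3, stride=2, padding=1)
        stages.append(s)
    low, high = stages[2], stages[4]         # 1/8 and 1/32 features
    for rate in (6, 12, 18):                 # ASPP 3x3 convs keep the size
        assert conv_out(high, kernel=3, padding=rate, dilation=rate) == high
    upsampled_high = high * 4                # bilinear, scale_factor=4
    output = low * 8                         # bilinear, scale_factor=8
    return low, high, upsampled_high, output


def input_is_compatible(height, width):
    """True if the concatenation works and the output matches the input size."""
    for size in (height, width):
        low, _, upsampled_high, output = deeplab_sizes(size)
        if low != upsampled_high or output != size:
            return False
    return True


print(deeplab_sizes(128), deeplab_sizes(384))
print(input_is_compatible(128, 384), input_is_compatible(100, 384))
```

A height of 100, for example, gives a 13-pixel low-level feature but a 16-pixel upsampled high-level feature, so `torch.cat` would fail. Cropping or resizing inputs to multiples of 32, as with the 128x384 test input, avoids this; passing `size=` to `F.interpolate` instead of `scale_factor=` is another option.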