Statistical Signal Processing (UESTC)


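Instructor: 熊文汇 (Xiong Wenhui)

Textbook:

Since the course is taught in English, the terminology and descriptions in these notes are also in English.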

In this course, we study two problems: detection and estimation.

Detection: we have several hypotheses and must decide which one is true. In the simplest (binary) case, we decide whether something is present or not, i.e. a yes/no decision.

Estimation: we ask what the value of an unknown quantity is. The quantity may be a deterministic parameter or a random one.

Vectorization

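Sometimes a signal can be decomposed into a weighted sum of basis functions:

$$s(t) = \sum_{i=1}^{N} x_i \phi_i(t)$$

where x_i is a weight factor and φ_i(t) is a basis function. The signal is then represented by the weight vector x = [x_1, x_2, ..., x_N]^T.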

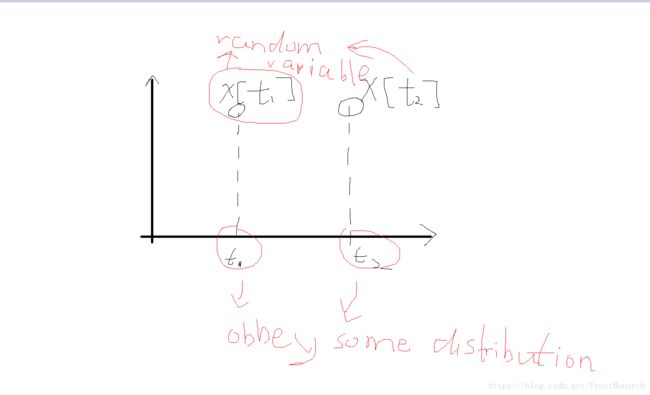
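A minimal discrete-time sketch of this idea, assuming an orthonormal basis (the two length-4 vectors below are an illustrative choice, not from the lecture). For an orthonormal basis, each weight is the inner product x_i = Σ_n s[n]φ_i[n], and summing x_i φ_i rebuilds the signal.

```python
# Two orthonormal basis vectors of length 4 (illustrative choice only)
phi = [
    [0.5, 0.5, 0.5, 0.5],
    [0.5, -0.5, 0.5, -0.5],
]

def inner(u, v):
    """Inner product <u, v> = sum_n u[n] v[n]."""
    return sum(a * b for a, b in zip(u, v))

# Build a signal s = 3*phi_0 - 1*phi_1
s = [3 * a - 1 * b for a, b in zip(phi[0], phi[1])]

# Weights x_i = <s, phi_i> (valid because the basis is orthonormal)
x = [inner(s, p) for p in phi]

# Reconstruction s_hat[n] = sum_i x_i * phi_i[n]
s_hat = [sum(xi * p[n] for xi, p in zip(x, phi)) for n in range(len(s))]

print(x)  # the weight vector that represents s
```

The vector x carries the same information as s, which is what lets later sections work with vectors instead of waveforms.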

Random signal

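For any given time n, we can calculate its mean (expectation):

$$\mu_x[n] = E\{x[n]\}$$

We can also compute its autocorrelation function (ACF). For a wide-sense stationary process:

$$r_{xx}[k] = E\{x[n]\,x[n+k]\}$$

Power spectral density (PSD):

$$P_{xx}(f) = \sum_{k=-\infty}^{\infty} r_{xx}[k]\, e^{-j2\pi f k}$$

For additive white Gaussian noise (AWGN), the PSD is constant over all frequencies: $P_{ww}(f) = \sigma^2$.

Since the inverse Fourier transform of the PSD is the ACF, the ACF of AWGN is an impulse: $r_{ww}[k] = \sigma^2 \delta[k]$, i.e. $r_{ww}[k] = 0$ for $k \neq 0$.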
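A quick numerical check, sketched under the assumption that the ACF is estimated with the usual biased estimator r̂[k] = (1/N) Σ x[n]x[n+k]. The seed, σ² = 2 and N are arbitrary illustrative values. The estimate at lag 0 should be close to σ², and the other lags close to 0.

```python
import random

def sample_acf(x, k):
    """Biased ACF estimate r_hat[k] = (1/N) sum_{n=0}^{N-1-k} x[n] x[n+k]."""
    N = len(x)
    return sum(x[n] * x[n + k] for n in range(N - k)) / N

random.seed(0)
sigma2 = 2.0                 # noise variance (illustrative)
N = 20000
w = [random.gauss(0.0, sigma2 ** 0.5) for _ in range(N)]

r = [sample_acf(w, k) for k in range(4)]
print(r)  # r[0] near sigma2; r[1], r[2], r[3] near 0
```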

Detection

First, some notation.

P(x) denotes a probability density function (PDF), not a probability.

Prior knowledge:


In this setting no prior probabilities are assigned to H_0 and H_1: one of them is simply true. We can then use the Neyman-Pearson (NP) theorem.

Decide H_1 if

$$L(x) = \frac{P(x;H_1)}{P(x;H_0)} > \gamma.$$

P(x;H_1) and P(x;H_0) are the likelihoods, i.e. the PDFs of the observations under each hypothesis, and they are known. For independent samples, each one is the product of the PDFs of the individual samples.

The question is how to choose the threshold γ.

We fix P_FA at a desired value α, and γ then follows from

$$P_{FA} = \int_{\{x:\,L(x) > \gamma\}} P(x;H_0)\,dx = \alpha.$$

The detector's job is to draw a boundary in the observation space: H_1 is decided in the region $R_1 = \{x : L(x) > \gamma\}$.

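We also have the probability of detection:

$$P_D = \int_{R_1} P(x;H_1)\,dx$$

We cannot minimize P_FA and maximize P_D simultaneously: lowering γ increases P_D, but it also increases P_FA.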

For example, detecting a DC level in white Gaussian noise: under H_0, x[n] = w[n] (just white noise); under H_1, x[n] = A + w[n] (white noise plus a DC level).
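A minimal sketch of the NP detector for this DC example, assuming A > 0, N independent samples and noise variance σ². Under these assumptions the LRT reduces to comparing the sample mean with a threshold γ' = sqrt(σ²/N) Q⁻¹(P_FA), which gives P_D = Q(Q⁻¹(P_FA) - sqrt(NA²/σ²)). The numbers passed in are hypothetical.

```python
import math
from statistics import NormalDist

def Q(t):
    """Right-tail probability of N(0,1): Q(t) = Pr{Y > t}."""
    return 0.5 * math.erfc(t / math.sqrt(2.0))

def Q_inv(p):
    """Inverse of Q: the t such that Q(t) = p."""
    return NormalDist().inv_cdf(1.0 - p)

def np_dc_detector(A, sigma2, N, pfa):
    """NP detector for a DC level A > 0 in WGN.

    Test statistic: sample mean T(x) = (1/N) sum x[n]; decide H_1 if T(x) > gamma'.
    Returns (gamma', P_D).
    """
    gamma_p = math.sqrt(sigma2 / N) * Q_inv(pfa)
    pd = Q(Q_inv(pfa) - math.sqrt(N * A ** 2 / sigma2))
    return gamma_p, pd

# Hypothetical values: A = 0.5, sigma^2 = 1, N = 10, P_FA = 0.01
gamma_p, pd = np_dc_detector(A=0.5, sigma2=1.0, N=10, pfa=0.01)
print(gamma_p, pd)
```

With P_FA fixed, the only way to raise P_D is to raise N A²/σ², e.g. by collecting more samples.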

The NP theorem above treats H_0 and H_1 as non-random: one of them is true, with no prior probabilities. Now suppose H_0 and H_1 occur with known prior probabilities P(H_0) and P(H_1).

Bayesian approach

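The probability of error is

$$P_e = P(H_0)\,P(H_1|H_0) + P(H_1)\,P(H_0|H_1)$$

where P(H_i|H_j) is the probability of deciding H_i when H_j is true. We need to minimize P_e.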

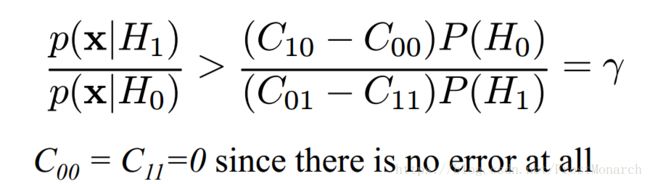

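Above, we minimized P_e (the probability of error). More generally, we can minimize the Bayes risk

$$\mathcal{R} = \sum_{i=0}^{1}\sum_{j=0}^{1} C_{ij}\,P(H_i|H_j)\,P(H_j)$$

where C_ij is the cost of deciding H_i when H_j is true.

After the calculation, we decide H_1 if

$$\frac{P(x|H_1)}{P(x|H_0)} > \frac{(C_{10} - C_{00})\,P(H_0)}{(C_{01} - C_{11})\,P(H_1)} = \gamma.$$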
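The Bayes-risk threshold on L(x) can be sketched as a small function, assuming C_10 > C_00 and C_01 > C_11 (wrong decisions cost more than right ones). With 0/1 costs the Bayes risk equals P_e, so the threshold falls back to P(H_0)/P(H_1). The priors and costs below are hypothetical.

```python
def bayes_threshold(p0, p1, c00=0.0, c01=1.0, c10=1.0, c11=0.0):
    """Threshold gamma on L(x) = P(x|H1)/P(x|H0) that minimizes the Bayes risk.

    c_ij is the cost of deciding H_i when H_j is true; assumes c10 > c00 and c01 > c11.
    """
    return (c10 - c00) * p0 / ((c01 - c11) * p1)

# 0/1 costs: Bayes risk = P_e, threshold = P(H0)/P(H1)
print(bayes_threshold(0.75, 0.25))            # 3.0
# A miss (deciding H0 when H1 is true) 10x as costly lowers the threshold
print(bayes_threshold(0.75, 0.25, c01=10.0))  # 0.3
```

Raising the cost of a miss pushes the detector toward deciding H_1 more often, which is what a smaller γ means.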

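Now assume we have multiple hypotheses H_0, H_1, ..., H_{M-1}. To minimize P_e (the MAP rule), we decide the H_k that maximizes $P(x|H_i)P(H_i)$ over i.

Or, according to maximum likelihood (ML), which gives the same decision when all priors are equal, we decide the H_k that maximizes $P(x|H_i)$.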
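A minimal sketch of M-ary ML detection, assuming each hypothesis is a different DC level A_i in WGN with the same variance (the three levels below are hypothetical). Under that assumption, maximizing P(x|H_i) is the same as minimizing the squared distance Σ(x[n] - A_i)².

```python
def ml_decide(x, levels):
    """ML decision among M hypotheses H_i: x[n] = levels[i] + w[n], w[n] WGN.

    With equal noise variance, maximizing P(x|H_i) is equivalent to
    minimizing sum_n (x[n] - A_i)^2.
    """
    def sq_dist(A):
        return sum((xn - A) ** 2 for xn in x)
    return min(range(len(levels)), key=lambda i: sq_dist(levels[i]))

levels = [-1.0, 0.0, 1.0]   # hypothetical DC levels for H_0, H_1, H_2
x = [0.9, 1.2, 0.7, 1.1]    # hypothetical observations
print(ml_decide(x, levels))  # 2
```

A MAP version would add ln P(H_i) to each log-likelihood before taking the maximum.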

Matched filter

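Again, some notation first:

$$H_0: x[n] = w[n], \quad n = 0, 1, \dots, N-1$$

$$H_1: x[n] = s[n] + w[n], \quad n = 0, 1, \dots, N-1$$

where s[n] is a known deterministic signal and w[n] is WGN with variance σ².

Based on the NP theorem above, we decide H_1 if $T(x) = \sum_{n=0}^{N-1} x[n]s[n] > \gamma'$, i.e. we correlate the data with a replica of the known signal.

(The derivation will be added next week.)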

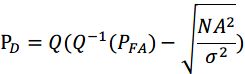

We use P_FA and the SNR to characterize its performance (the resulting P_D).
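A sketch of the standard matched-filter performance formula, assuming the setup above (known s[n], WGN of variance σ²): P_D = Q(Q⁻¹(P_FA) - sqrt(ε/σ²)), where ε = Σ s²[n] is the signal energy and ε/σ² plays the role of the SNR. The sinusoid and SNR values are hypothetical.

```python
import math
from statistics import NormalDist

def Q(t):
    """Q(t) = Pr{Y > t} for Y ~ N(0,1)."""
    return 0.5 * math.erfc(t / math.sqrt(2.0))

def Q_inv(p):
    """The t such that Q(t) = p."""
    return NormalDist().inv_cdf(1.0 - p)

def matched_filter_pd(s, sigma2, pfa):
    """P_D of the matched filter for a known s[n] in WGN of variance sigma2.

    Only the energy of s[n] matters, not its shape.
    """
    energy = sum(v * v for v in s)
    return Q(Q_inv(pfa) - math.sqrt(energy / sigma2))

s = [math.cos(2 * math.pi * 0.1 * n) for n in range(20)]  # hypothetical known signal
energy = sum(v * v for v in s)
for snr_db in (0, 5, 10):
    sigma2 = energy / 10 ** (snr_db / 10)   # chosen so that energy/sigma2 matches snr_db
    print(snr_db, matched_filter_pd(s, sigma2, pfa=1e-3))
```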

Homework

Before designing a detector, it is important to answer one question: is s[n] deterministic or random? If s[n] is deterministic, each n gives a fixed value, such as 1, 22, or 30. If s[n] is random, each n gives a random variable.

These two cases lead to different likelihood functions. If s[n] is deterministic, it only changes the mean of the observations. If s[n] is random, it also has a covariance matrix, which changes the covariance of the observations. Assume that w ~ N(μ_0, σ_0² I), where I is the identity matrix. If s ~ N(μ_1, C_s) and s is independent of w, then x = w + s ~ N(μ_0 + μ_1, σ_0² I + C_s).

As mentioned above, when our signal is deterministic we can build a matched filter.

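When s[n] is a stochastic process (zero-mean Gaussian with covariance C_s, and w[n] WGN with variance σ²), the NP detector is the estimator-correlator: decide H_1 if $T(x) = x^T C_s (C_s + \sigma^2 I)^{-1} x > \gamma'$.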

Likelihood ratio test (designing an NP detector)

There are two different situations: a one-point observation or multiple-point observations. If the problem does not say which, we can work it out for either one.

One-point observation:

The likelihood is just the PDF of x[0], and typically P_FA = Pr{x[0] > γ; H_0} and P_D = Pr{x[0] > γ; H_1}.

Multiple-point observations:

The likelihood is the product of the PDFs of the (independent) observations, and typically P_FA = Pr{T(x) > γ; H_0} and P_D = Pr{T(x) > γ; H_1}.

REMEMBER: when integrating to compute P_FA or P_D, treat the x[n] as random variables distributed under H_0 (when computing P_FA) or under H_1 (when computing P_D).
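A one-point sketch of that rule, assuming x[0] ~ N(0, σ²) under H_0 and x[0] ~ N(A, σ²) under H_1 (A, σ and γ below are hypothetical). P_FA integrates the H_0 PDF and P_D integrates the H_1 PDF over x[0] > γ; a Monte Carlo run that draws x[0] under each hypothesis should agree.

```python
import math
import random

def Q(t):
    """Q(t) = Pr{Y > t} for Y ~ N(0,1)."""
    return 0.5 * math.erfc(t / math.sqrt(2.0))

A, sigma, gamma = 1.0, 1.0, 0.5   # hypothetical values

# Analytic: x[0] ~ N(0, sigma^2) for P_FA, x[0] ~ N(A, sigma^2) for P_D
pfa = Q(gamma / sigma)
pd = Q((gamma - A) / sigma)

# Monte Carlo: draw x[0] under each hypothesis and count x[0] > gamma
random.seed(1)
trials = 100_000
pfa_mc = sum(random.gauss(0.0, sigma) > gamma for _ in range(trials)) / trials
pd_mc = sum(random.gauss(A, sigma) > gamma for _ in range(trials)) / trials
print(pfa, pfa_mc, pd, pd_mc)
```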

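When detecting a DC level (or, more generally, a known signal) in AWGN, we have an important result.

Assume we have two hypotheses:

$$H_0: x[n] = w[n], \quad n = 0, 1, \dots, N-1$$

$$H_1: x[n] = s[n] + w[n], \quad n = 0, 1, \dots, N-1$$

where w[n] is WGN with variance σ² and s[n] is a known signal.

We decide H_1 if $L(x) = P(x;H_1)/P(x;H_0) > \gamma$, which simplifies to $T(x) > \gamma'$: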

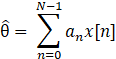

where $T(x) = \sum_{n=0}^{N-1} x[n]s[n]$ and $\gamma' = \sigma^2 \ln\gamma + \frac{1}{2}\sum_{n=0}^{N-1} s^2[n]$. Pay attention to T(x): it is the important part.

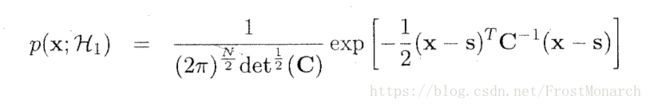

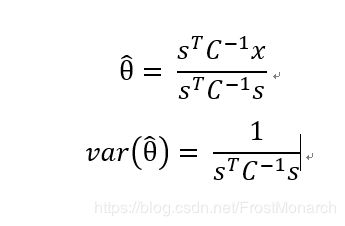
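A numerical check of that simplification, sketched with a hypothetical known signal, σ² and γ. Taking logs, ln L(x) = (1/σ²)Σx[n]s[n] - (1/(2σ²))Σs²[n], so ln L(x) > ln γ holds exactly when T(x) > γ'. The loop simulates data under both hypotheses and counts how often the two tests disagree.

```python
import math
import random

def log_lr(x, s, sigma2):
    """ln L(x) = ln P(x;H_1) - ln P(x;H_0) for x[n] = s[n] + w[n] versus x[n] = w[n]."""
    return sum(xn ** 2 - (xn - sn) ** 2 for xn, sn in zip(x, s)) / (2 * sigma2)

def matched_filter(x, s):
    """Test statistic T(x) = sum_n x[n] s[n]."""
    return sum(xn * sn for xn, sn in zip(x, s))

def threshold(s, sigma2, gamma):
    """gamma' = sigma^2 ln(gamma) + (1/2) sum_n s^2[n]."""
    return sigma2 * math.log(gamma) + 0.5 * sum(sn * sn for sn in s)

rng = random.Random(2)
s = [1.0, 0.5, -0.5, -1.0]   # hypothetical known signal
sigma2, gamma = 0.8, 2.0     # hypothetical noise variance and LRT threshold

mismatches = 0
for _ in range(1000):
    present = rng.random() < 0.5                 # simulate H_1 or H_0
    x = [(sn if present else 0.0) + rng.gauss(0.0, math.sqrt(sigma2)) for sn in s]
    lrt_decision = log_lr(x, s, sigma2) > math.log(gamma)
    mf_decision = matched_filter(x, s) > threshold(s, sigma2, gamma)
    mismatches += lrt_decision != mf_decision
print(mismatches)
```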

When the observations are Gaussian, x ~ N(μ, C), the likelihood becomes

$$P(x) = \frac{1}{(2\pi)^{N/2}\det^{1/2}(C)} \exp\left[-\frac{1}{2}(x - \mu)^T C^{-1} (x - \mu)\right]$$

N is the number of observations, which is also the dimension of μ. In the detection problem, μ = s under H_1.

C is a symmetric positive definite matrix. Since $x^T C^{-1} s$ is a scalar, $x^T C^{-1} s = (x^T C^{-1} s)^T = s^T C^{-1} x$.

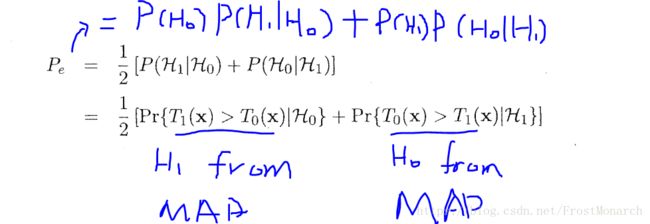
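A small 2x2 sketch of why that symmetry matters, assuming zero mean under H_0 and mean s under H_1 with the same covariance C. Expanding the log-likelihood ratio gives ln L(x) = x^T C⁻¹ s - ½ s^T C⁻¹ s, so the test statistic is T(x) = x^T C⁻¹ s (a generalized matched filter). The matrix and vectors are hypothetical.

```python
def inv2(C):
    """Inverse of a 2x2 matrix [[a, b], [c, d]]."""
    (a, b), (c, d) = C
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]

def quad(u, M, v):
    """Scalar u^T M v for 2-vectors u, v and a 2x2 matrix M."""
    return sum(u[i] * M[i][j] * v[j] for i in range(2) for j in range(2))

C = [[2.0, 0.5], [0.5, 1.0]]   # hypothetical symmetric positive definite covariance
s = [1.0, -1.0]                # hypothetical known signal (mean under H_1)
x = [0.3, 0.7]                 # one observation vector

Ci = inv2(C)
T = quad(x, Ci, s)             # generalized matched filter T(x) = x^T C^{-1} s
print(T, quad(s, Ci, x))       # equal, because C^{-1} is symmetric
```

With C = σ²I the statistic reduces to Σx[n]s[n]/σ², i.e. the ordinary matched filter scaled by 1/σ².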

Design a MAP detector

When a problem asks for the detector that minimizes the probability of error (or is "optimal" in that sense), we should design a MAP detector, whose goal is to minimize P_e. An NP detector instead maximizes P_D for a fixed P_FA.

Example: computing P_e with equal prior probabilities.

The procedure is almost the same as for the NP detector, except that we decide H_1 if the likelihood ratio $L(x) = P(x|H_1)/P(x|H_0) > P(H_0)/P(H_1) = \gamma$.

P(H_0) and P(H_1) are the known prior probabilities.

If the Gaussian's σ is large, its PDF is lower and wider, so the PDFs under H_0 and H_1 overlap more.
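A sketch of the equal-prior case, assuming H_0: x[n] = w[n] and H_1: x[n] = A + w[n] with A > 0, N samples, and WGN variance σ² (values hypothetical). With γ = 1 the MAP rule decides H_1 when the sample mean exceeds A/2, which gives P_e = Q(sqrt(NA²/(4σ²))). The Monte Carlo function checks this, and increasing σ² shows the larger overlap raising P_e.

```python
import math
import random

def Q(t):
    """Q(t) = Pr{Y > t} for Y ~ N(0,1)."""
    return 0.5 * math.erfc(t / math.sqrt(2.0))

def pe_equal_priors(A, sigma2, N):
    """Minimum P_e for DC level A > 0 in WGN with P(H0) = P(H1) = 1/2."""
    return Q(math.sqrt(N * A ** 2 / (4 * sigma2)))

def pe_monte_carlo(A, sigma2, N, trials=50_000, seed=3):
    """Simulate the MAP rule 'decide H1 if sample mean > A/2' and count errors."""
    rng = random.Random(seed)
    sigma = math.sqrt(sigma2)
    errors = 0
    for _ in range(trials):
        h1 = rng.random() < 0.5                      # true hypothesis, equal priors
        mean = sum((A if h1 else 0.0) + rng.gauss(0.0, sigma) for _ in range(N)) / N
        errors += (mean > A / 2) != h1               # decision differs from the truth
    return errors / trials

for sigma2 in (1.0, 4.0):   # hypothetical noise variances; A = 1, N = 4
    print(sigma2, pe_equal_priors(1.0, sigma2, 4), pe_monte_carlo(1.0, sigma2, 4))
```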

Prewhitener

$$C^{-1} = D^T D$$

where C is the noise covariance matrix and D is the prewhitener: an upper-triangular matrix whose diagonal elements are all positive. The prewhitened noise Dw has covariance $DCD^T = I$, i.e. it is white.
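A 2x2 sketch of one way to build such a D, assuming we take the Cholesky factorization C⁻¹ = LL^T (L lower triangular with positive diagonal) and set D = L^T. The covariance below is hypothetical. The check D C D^T ≈ I confirms that D whitens the noise.

```python
import math

def inv2(C):
    """Inverse of a 2x2 matrix."""
    (a, b), (c, d) = C
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]

def matmul(A, B):
    """Plain matrix product A B for lists of lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def transpose(A):
    return [list(row) for row in zip(*A)]

def prewhitener_2x2(C):
    """Upper-triangular D with positive diagonal such that D^T D = C^{-1}.

    Cholesky: C^{-1} = L L^T with L lower triangular, then D = L^T.
    """
    P = inv2(C)
    l11 = math.sqrt(P[0][0])
    l21 = P[1][0] / l11
    l22 = math.sqrt(P[1][1] - l21 ** 2)
    return transpose([[l11, 0.0], [l21, l22]])

C = [[2.0, 0.5], [0.5, 1.0]]   # hypothetical noise covariance
D = prewhitener_2x2(C)
print(D)
print(matmul(matmul(D, C), transpose(D)))   # approximately the identity matrix
```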

Boundary

To draw a decision boundary, first derive the detector (the rule for deciding each H_i), then draw the boundary between the decision regions in a plot whose axes are the observations (e.g. x[0] and x[1]).

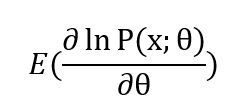

CRLB estimator

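Before using the CRLB, first compute $E\left[\frac{\partial \ln p(x;\theta)}{\partial \theta}\right]$ and check whether it is zero for all θ (the regularity condition). If it is zero, we can use the CRLB.

The CRLB is a lower bound on the variance of any unbiased estimator:

$$\mathrm{var}(\hat\theta) \ge \frac{1}{I(\theta)}, \qquad I(\theta) = -E\left[\frac{\partial^2 \ln p(x;\theta)}{\partial \theta^2}\right]$$

An unbiased estimator that attains the bound exists if and only if

$$\frac{\partial \ln p(x;\theta)}{\partial \theta} = I(\theta)\,\big(g(x) - \theta\big)$$

for some functions g and I. Then g(x) is the MVU estimator and its variance is 1/I(θ). In general, an MVU estimator need not attain the CRLB; its variance can be higher.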
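A Monte Carlo sketch for a DC level A in WGN, assuming x[n] = A + w[n], n = 0, ..., N-1 (values hypothetical). Here ∂ln p/∂A = (N/σ²)(x̄ - A), which has the form I(A)(g(x) - A) with I(A) = N/σ² and g(x) = x̄. So the sample mean should be efficient with variance σ²/N, and the score should average to zero.

```python
import math
import random
from statistics import fmean, pvariance

A, sigma2, N = 1.0, 2.0, 10      # hypothetical true DC level, noise variance, samples
crlb = sigma2 / N                # 1 / I(A) with I(A) = N / sigma^2

rng = random.Random(4)
estimates, scores = [], []
for _ in range(20_000):
    x = [A + rng.gauss(0.0, math.sqrt(sigma2)) for _ in range(N)]
    xbar = fmean(x)
    estimates.append(xbar)                   # g(x) = sample mean
    scores.append(N / sigma2 * (xbar - A))   # d ln p / dA = I(A) (g(x) - A)

print(fmean(scores))                 # regularity condition: close to 0
print(pvariance(estimates), crlb)    # the sample mean attains the CRLB
```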

Linear model

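$$x = H\theta + w, \qquad w \sim N(0, \sigma^2 I)$$

where H is a known N×p observation matrix and θ is the p×1 parameter vector. We can get the estimator

$$\hat\theta = (H^T H)^{-1} H^T x,$$

which is the MVU estimator, with covariance $C_{\hat\theta} = \sigma^2 (H^T H)^{-1}$.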
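A stdlib sketch of the linear-model estimator for a straight-line fit, assuming x[n] = A + Bn + w[n], so each row of H is [1, n] and θ = [A, B]^T (the true values and noise level are hypothetical). The 2x2 matrix H^T H is inverted in closed form.

```python
import random

def linear_model_estimate(x):
    """theta_hat = (H^T H)^{-1} H^T x for x[n] = A + B n + w[n], rows of H = [1, n]."""
    N = len(x)
    n = range(N)
    # H^T H = [[N, sum n], [sum n, sum n^2]],  H^T x = [sum x, sum n x]
    s1, s2 = sum(n), sum(k * k for k in n)
    b0, b1 = sum(x), sum(k * xk for k, xk in zip(n, x))
    det = N * s2 - s1 * s1
    A_hat = (s2 * b0 - s1 * b1) / det
    B_hat = (N * b1 - s1 * b0) / det
    return A_hat, B_hat

rng = random.Random(6)
x = [2.0 + 0.5 * k + rng.gauss(0.0, 0.1) for k in range(50)]  # hypothetical A = 2, B = 0.5
print(linear_model_estimate(x))
```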

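Sufficient statistic

When applying the RBLS (Rao-Blackwell-Lehmann-Scheffé) theorem, we first need a sufficient statistic T(x). A change of variables in the PDF often makes it clear which term can serve as the sufficient statistic, i.e. it makes the factorization $p(x;\theta) = g(T(x),\theta)\,h(x)$ visible.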

BLUE

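BLUE stands for best linear unbiased estimator, where "best" means the lowest variance among all linear unbiased estimators.

If the data model is $x = H\theta + w$, where w has zero mean and covariance C, then

$$\hat\theta = (H^T C^{-1} H)^{-1} H^T C^{-1} x, \qquad C_{\hat\theta} = (H^T C^{-1} H)^{-1}.$$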
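A sketch of the BLUE for a DC level in uncorrelated noise with unequal variances, assuming x[n] = A + w[n], H = [1, ..., 1]^T and C = diag(σ_n²) (all numbers hypothetical). The general formula then reduces to a weighted average with weights 1/σ_n², so noisy samples count less.

```python
def blue_dc(x, variances):
    """BLUE of a DC level A from x[n] = A + w[n], with uncorrelated w[n] of variance variances[n].

    With H = [1, ..., 1]^T and C = diag(variances),
    (H^T C^{-1} H)^{-1} H^T C^{-1} x is a weighted average with weights 1/variance.
    """
    weights = [1.0 / v for v in variances]
    A_hat = sum(w * xn for w, xn in zip(weights, x)) / sum(weights)
    var_A = 1.0 / sum(weights)      # (H^T C^{-1} H)^{-1}
    return A_hat, var_A

# The third sample is very noisy, so it barely affects the estimate
print(blue_dc([1.2, 0.8, 3.0], [0.1, 0.1, 10.0]))
```

When all variances are equal, the BLUE is simply the sample mean.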

MLE

It chooses the θ that maximizes the likelihood function:

$$\hat\theta = \arg\max_{\theta}\, p(x;\theta)$$
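A sketch for a DC level in WGN, assuming x[n] = A + w[n] with known σ² (observations hypothetical). Setting the derivative of ln p(x;A) to zero gives the sample mean; the brute-force grid search below is only for illustration and should land on the same value.

```python
import math

def log_likelihood(A, x, sigma2):
    """ln p(x; A) for x[n] = A + w[n], w[n] ~ N(0, sigma2) i.i.d."""
    N = len(x)
    return -N / 2 * math.log(2 * math.pi * sigma2) - sum((xn - A) ** 2 for xn in x) / (2 * sigma2)

x = [0.9, 1.4, 1.1, 0.6, 1.0]                  # hypothetical observations
grid = [i / 1000 for i in range(0, 2001)]      # candidate A values in [0, 2]
A_mle = max(grid, key=lambda A: log_likelihood(A, x, sigma2=1.0))
print(A_mle, sum(x) / len(x))                  # grid maximizer vs. sample mean
```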

LSE

$$s = H\theta$$

H is an N×p matrix: we have N samples and p unknown parameters. The LSE minimizes

$$J(\theta) = (x - H\theta)^T (x - H\theta),$$

which gives $\hat\theta = (H^T H)^{-1} H^T x$.

Integration knowledge

If $X \sim N(\mu, \sigma^2)$ and a, b are real numbers, then

$$aX + b \sim N(a\mu + b,\ a^2\sigma^2).$$

To calculate Pr{X > γ}, first standardize: $Y = (X - \mu)/\sigma \sim N(0,1)$. This is a transform of the form Y = aX + b with a = 1/σ and b = -μ/σ; applying the same transform to the threshold gives

$$\Pr\{X > \gamma\} = Q\left(\frac{\gamma - \mu}{\sigma}\right),$$

where Q(t) = Pr{Y > t} is the right-tail probability of N(0,1).
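A sketch of the standardization step, assuming Q is computed from the complementary error function, Q(t) = ½ erfc(t/√2). The result is cross-checked against the Gaussian CDF from Python's `statistics.NormalDist`. The values of μ, σ² and γ are hypothetical.

```python
import math
from statistics import NormalDist

def Q(t):
    """Q(t) = Pr{Y > t} for Y ~ N(0, 1)."""
    return 0.5 * math.erfc(t / math.sqrt(2.0))

def prob_greater(gamma, mu, sigma2):
    """Pr{X > gamma} for X ~ N(mu, sigma2), by standardizing Y = (X - mu) / sigma."""
    return Q((gamma - mu) / math.sqrt(sigma2))

mu, sigma2, gamma = 3.0, 4.0, 5.0                          # hypothetical values
print(prob_greater(gamma, mu, sigma2))                     # Q(1), about 0.1587
print(1 - NormalDist(mu, math.sqrt(sigma2)).cdf(gamma))    # same value from the CDF
```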

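Sometimes we need to integrate a chi-squared random variable $y = \sum_{i=1}^{N} X_i^2$ (where the $X_i \sim N(0,1)$ are independent). For N = 1 there is a simple formula:

$$\Pr\{y > \gamma'\} = 2Q\left(\sqrt{\gamma'}\right)$$

where

$$Q(t) = \int_t^{\infty} \frac{1}{\sqrt{2\pi}}\, e^{-u^2/2}\, du.$$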
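A quick check of the N = 1 formula: X² > γ' is the same event as |X| > sqrt(γ'), and by symmetry each tail has probability Q(sqrt(γ')). The sketch compares this with a Monte Carlo estimate; γ' = 2 and the seed are arbitrary.

```python
import math
import random

def Q(t):
    """Q(t) = Pr{Y > t} for Y ~ N(0,1)."""
    return 0.5 * math.erfc(t / math.sqrt(2.0))

def chi2_1_tail(g):
    """Pr{X^2 > g} for X ~ N(0, 1): the event |X| > sqrt(g), so 2 Q(sqrt(g))."""
    return 2.0 * Q(math.sqrt(g))

rng = random.Random(5)
g = 2.0            # arbitrary threshold gamma'
trials = 200_000
mc = sum(rng.gauss(0.0, 1.0) ** 2 > g for _ in range(trials)) / trials
print(chi2_1_tail(g), mc)
```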

If Z = X + Y and X, Y are independent, the PDF of Z is the convolution of their PDFs:

$$p_Z(z) = \int_{-\infty}^{\infty} p_X(x)\, p_Y(z - x)\, dx$$
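A numerical sketch of the convolution, assuming X and Y are both uniform on [0, 1] (an illustrative choice). The integral is approximated by a midpoint Riemann sum over [-1, 2], which contains the support. The sum of two independent U(0, 1) variables has a triangular PDF: z on [0, 1] and 2 - z on [1, 2].

```python
def uniform_pdf(t):
    """PDF of U(0, 1)."""
    return 1.0 if 0.0 <= t <= 1.0 else 0.0

def pdf_of_sum(pX, pY, z, lo=-1.0, hi=2.0, steps=30_000):
    """p_Z(z) = integral of pX(x) pY(z - x) dx, via a midpoint Riemann sum on [lo, hi]."""
    dx = (hi - lo) / steps
    total = 0.0
    for k in range(steps):
        x = lo + (k + 0.5) * dx
        total += pX(x) * pY(z - x)
    return total * dx

for z in (0.5, 1.0, 1.5):
    print(z, pdf_of_sum(uniform_pdf, uniform_pdf, z))  # triangular PDF values
```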