Image flipping and affine transformations

```python
import math

import cv2
import numpy as np
from matplotlib import pyplot as plt


def img_plt(img):
    """Convert a BGR image (as read by cv2) to RGB (as displayed by plt)."""
    b, g, r = cv2.split(img)
    return cv2.merge([r, g, b])


def img_translation(img, tx, ty):
    """Shift the image down by tx rows and right by ty columns; uncovered pixels stay black."""
    dst_img = np.zeros(img.shape, dtype='uint8')
    h, w = img.shape[:2]
    for i in range(h):
        for j in range(w):
            # Check both bounds so that negative shifts do not wrap around.
            if 0 <= i + tx < h and 0 <= j + ty < w:
                dst_img[i + tx, j + ty] = img[i, j]
    return dst_img


def img_resize(img, sx, sy):
    """Nearest-neighbour scaling by sx along rows and sy along columns."""
    dst_shape = (round(img.shape[0] * sx), round(img.shape[1] * sy)) + img.shape[2:]
    dst_img = np.zeros(dst_shape, dtype='uint8')
    for i in range(dst_img.shape[0]):
        for j in range(dst_img.shape[1]):
            # Inverse mapping: look up the source pixel for each destination pixel.
            if round(i / sx) < img.shape[0] and round(j / sy) < img.shape[1]:
                dst_img[i, j] = img[round(i / sx), round(j / sy)]
    return dst_img


def img_rotation(img, th):
    """Rotate by th radians about the image centre (inverse mapping, nearest pixel)."""
    dst_img = np.zeros(img.shape, dtype='uint8')
    row, col = img.shape[:2]
    cos_t, sin_t = math.cos(th), math.sin(th)
    for i in range(row):
        for j in range(col):
            # (m, n) is the source position that lands on destination pixel (i, j).
            m = i * cos_t - j * sin_t + row / 2 * (1 - cos_t) + col / 2 * sin_t
            n = i * sin_t + j * cos_t + col / 2 * (1 - cos_t) - row / 2 * sin_t
            if 0 <= m < row and 0 <= n < col:
                dst_img[i, j] = img[math.floor(m), math.floor(n)]
    return dst_img


def NN_interpolation(img, dst_h, dst_w):
    """Nearest-neighbour resize to dst_h x dst_w."""
    src_h, src_w = img.shape[:2]
    dst_img = np.zeros((dst_h, dst_w) + img.shape[2:], dtype=np.uint8)
    for i in range(dst_h):
        for j in range(dst_w):
            # Clamp so rounding up at the last row/column does not leave it black.
            src_x = min(round(i * (src_h / dst_h)), src_h - 1)
            src_y = min(round(j * (src_w / dst_w)), src_w - 1)
            dst_img[i, j] = img[src_x, src_y]
    return dst_img


def bilinear_interpolation(img, dst_h, dst_w):
    """Bilinear resize to dst_h x dst_w, aligning pixel centres."""
    src_h, src_w = img.shape[:2]
    if src_h == dst_h and src_w == dst_w:
        return img.copy()
    dst_img = np.zeros((dst_h, dst_w) + img.shape[2:], dtype=np.uint8)
    scale_x, scale_y = float(src_h) / dst_h, float(src_w) / dst_w
    for dstx in range(dst_h):
        for dsty in range(dst_w):
            # Map the destination pixel centre into the source and clamp it, so
            # border pixels are interpolated instead of wrapping or staying black.
            srcx = min(max((dstx + 0.5) * scale_x - 0.5, 0), src_h - 1)
            srcy = min(max((dsty + 0.5) * scale_y - 0.5, 0), src_w - 1)
            src_x0 = int(math.floor(srcx))
            src_x1 = min(src_x0 + 1, src_h - 1)
            src_y0 = int(math.floor(srcy))
            src_y1 = min(src_y0 + 1, src_w - 1)
            dx, dy = srcx - src_x0, srcy - src_y0
            # Interpolate along x in columns y0 and y1, then along y.
            ge = (1 - dx) * img[src_x0, src_y0] + dx * img[src_x1, src_y0]
            gf = (1 - dx) * img[src_x0, src_y1] + dx * img[src_x1, src_y1]
            dst_img[dstx, dsty] = np.round((1 - dy) * ge + dy * gf)
    return dst_img
```
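The double loop in `bilinear_interpolation` runs once per output pixel in Python, which is slow for real images. The sketch below is a vectorised NumPy version of the same idea, assuming the same pixel-centre mapping with source coordinates clamped to the image; the name `bilinear_resize_np` is hypothetical. In practice `cv2.resize(img, (dst_w, dst_h), interpolation=cv2.INTER_LINEAR)` is the usual choice (note the `(width, height)` order).

```python
import numpy as np


def bilinear_resize_np(img, dst_h, dst_w):
    """Vectorised bilinear resize (hypothetical helper, pixel-centre aligned).

    Returns a float64 array; convert back with np.clip(np.rint(out), 0, 255).astype(np.uint8).
    """
    src_h, src_w = img.shape[:2]
    img = img.astype(np.float64)
    # Source coordinates of every destination row / column, clamped to the image.
    xs = np.clip((np.arange(dst_h) + 0.5) * src_h / dst_h - 0.5, 0, src_h - 1)
    ys = np.clip((np.arange(dst_w) + 0.5) * src_w / dst_w - 0.5, 0, src_w - 1)
    x0 = np.floor(xs).astype(int)
    y0 = np.floor(ys).astype(int)
    x1 = np.minimum(x0 + 1, src_h - 1)
    y1 = np.minimum(y0 + 1, src_w - 1)
    dx = (xs - x0)[:, None]   # shape (dst_h, 1): broadcasts over columns
    dy = (ys - y0)[None, :]   # shape (1, dst_w): broadcasts over rows
    if img.ndim == 3:         # add a channel axis so the weights broadcast
        dx, dy = dx[..., None], dy[..., None]
    # Gather the four neighbours of every output pixel at once.
    p00, p10 = img[x0][:, y0], img[x1][:, y0]
    p01, p11 = img[x0][:, y1], img[x1][:, y1]
    ge = (1 - dx) * p00 + dx * p10   # interpolate along x in column y0
    gf = (1 - dx) * p01 + dx * p11   # interpolate along x in column y1
    return (1 - dy) * ge + dy * gf   # then along y
```

Bilinear interpolation reproduces linear ramps exactly away from the clamped border, which makes it easy to sanity-check the index arithmetic.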

```python
if __name__ == '__main__':
    # Inpainting demo: fill the masked (non-zero) regions of the degraded image.
    img1 = cv2.imread('lena-color-need-process.png')
    mask_img = cv2.imread('lena-color-mask.png')
    # cv2.inpaint expects an 8-bit single-channel mask.
    mask_img = cv2.cvtColor(mask_img, cv2.COLOR_BGR2GRAY)
    dst_TELEA = cv2.inpaint(img1, mask_img, 3, cv2.INPAINT_TELEA)
    dst_NS = cv2.inpaint(img1, mask_img, 3, cv2.INPAINT_NS)

    img1 = img_plt(img1)
    dst_TELEA = img_plt(dst_TELEA)
    dst_NS = img_plt(dst_NS)

    plt.figure(1)
    plt.suptitle("Image inpainting")
    plt.subplot(221), plt.xlabel("degraded image"), plt.imshow(img1)
    plt.subplot(222), plt.xlabel("mask image"), plt.imshow(mask_img, cmap='gray')
    plt.subplot(223), plt.xlabel("TELEA"), plt.imshow(dst_TELEA)
    plt.subplot(224), plt.xlabel("NS"), plt.imshow(dst_NS)

    img = cv2.imread('lena-color.jpg')
    img = img_plt(img)
    plt.figure(2)
    plt.imshow(img)
    plt.show()
```
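The heading mentions flipping, but none of the functions above flips an image. Flips, translations and rotations are all affine maps, so a single inverse-mapping routine (the strategy `img_rotation` already uses) can cover them. The sketch below assumes nearest-neighbour sampling and (row, column) coordinates; `warp_affine_nn` and the matrix helpers are hypothetical names. OpenCV offers `cv2.flip(img, flipCode)` (1 = left-right, 0 = up-down, -1 = both) and `cv2.warpAffine` for the same jobs.

```python
import math

import numpy as np


def warp_affine_nn(img, A, b):
    """Inverse-mapping affine warp with nearest-neighbour sampling.

    Each output pixel (r, c) is read from source position A @ (r, c) + b;
    pixels whose source falls outside the image are left at 0.
    """
    h, w = img.shape[:2]
    rows, cols = np.indices((h, w))
    src_r = np.rint(A[0][0] * rows + A[0][1] * cols + b[0]).astype(int)
    src_c = np.rint(A[1][0] * rows + A[1][1] * cols + b[1]).astype(int)
    valid = (src_r >= 0) & (src_r < h) & (src_c >= 0) & (src_c < w)
    out = np.zeros_like(img)
    out[valid] = img[src_r[valid], src_c[valid]]
    return out


def flip_lr(h, w):
    """Left-right (horizontal) flip: source column = w - 1 - c."""
    return [[1, 0], [0, -1]], [0, w - 1]


def flip_ud(h, w):
    """Up-down (vertical) flip: source row = h - 1 - r."""
    return [[-1, 0], [0, 1]], [h - 1, 0]


def translate(tx, ty):
    """Move content down by tx rows and right by ty columns."""
    return [[1, 0], [0, 1]], [-tx, -ty]


def rotate(h, w, th):
    """Rotate by th radians about the pixel-grid centre ((h-1)/2, (w-1)/2)."""
    cr, cc = (h - 1) / 2, (w - 1) / 2
    cos_t, sin_t = math.cos(th), math.sin(th)
    A = [[cos_t, -sin_t], [sin_t, cos_t]]
    # src = A @ (dst - centre) + centre
    b = [cr - cos_t * cr + sin_t * cc, cc - sin_t * cr - cos_t * cc]
    return A, b
```

For example, `warp_affine_nn(img, *flip_lr(h, w))` equals `img[:, ::-1]`, and on a square image `warp_affine_nn(img, *rotate(n, n, math.pi / 2))` reproduces `np.rot90(img, -1)`, a clockwise quarter turn on screen.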

Image dehazing

```python
import cv2
import numpy as np
from matplotlib import pyplot as plt


def zmMinFilterGray(src, r=7):
    """Minimum filter over a (2r+1) x (2r+1) window (grey-level erosion)."""
    return cv2.erode(src, np.ones((2 * r + 1, 2 * r + 1)))


def guidedfilter(m, I, p, r, eps):
    """Guided filter of p with guide I; also estimates the atmospheric light A from image m."""
    m_I = cv2.boxFilter(I, -1, (r, r))
    m_p = cv2.boxFilter(p, -1, (r, r))
    m_Ip = cv2.boxFilter(I * p, -1, (r, r))
    cov_Ip = m_Ip - m_I * m_p
    m_II = cv2.boxFilter(I * I, -1, (r, r))
    var_I = m_II - m_I * m_I
    a = cov_Ip / (var_I + eps)
    b = m_p - a * m_I
    m_a = cv2.boxFilter(a, -1, (r, r))
    m_b = cv2.boxFilter(b, -1, (r, r))
    V1 = m_a * I + m_b

    # Atmospheric light A: the largest channel mean among the pixels in the
    # top 0.1% of the refined dark channel.
    bins = 2000
    ht = np.histogram(V1, bins)
    d = np.cumsum(ht[0]) / float(V1.size)
    for lmax in range(bins - 1, 0, -1):
        if d[lmax] <= 0.999:
            break
    A = np.mean(m, 2)[V1 >= ht[1][lmax]].max()
    return V1, A


def Defog(m, r, eps, w, maxV1):
    """Return the atmospheric veil V1 = A(1 - t) and the atmospheric light A."""
    V1 = np.min(m, 2)                                  # per-pixel minimum over channels
    Dark_Channel = zmMinFilterGray(V1, 7)              # dark channel
    V1, A = guidedfilter(m, V1, Dark_Channel, r, eps)  # edge-preserving refinement
    V1 = np.minimum(V1 * w, maxV1)                     # keep some haze (w), cap the veil
    return V1, A


def deHaze(m, r=81, eps=0.001, w=0.95, maxV1=0.80, bGamma=False):
    """Dehaze a float image m with values in [0, 1] and shape (H, W, 3)."""
    Y = np.zeros(m.shape)
    Mask_img, A = Defog(m, r, eps, w, maxV1)
    for k in range(3):
        # Invert I = J*t + A*(1 - t) using t = 1 - V1/A.
        Y[:, :, k] = (m[:, :, k] - Mask_img) / (1 - Mask_img / A)
    Y = np.clip(Y, 0, 1)
    if bGamma:
        # Optional gamma correction that moves the mean brightness toward 0.5.
        Y = Y ** (np.log(0.5) / np.log(Y.mean()))
    return Y


def img_plt(img):
    """Convert BGR (cv2) to RGB (matplotlib)."""
    b, g, r = cv2.split(img)
    return cv2.merge([r, g, b])


if __name__ == '__main__':
    img = cv2.imread('fog0.jpg')
    m = np.uint8(deHaze(img / 255.0) * 255)
    # cv2 loads BGR, so convert both images before showing them with matplotlib.
    plt.subplot(121), plt.imshow(img_plt(img))
    plt.title('Input Image'), plt.xticks([]), plt.yticks([])
    plt.subplot(122), plt.imshow(img_plt(m))
    plt.title('Defog result'), plt.xticks([]), plt.yticks([])
    plt.show()
```

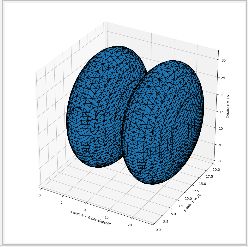

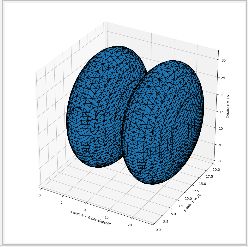
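`deHaze` inverts the atmospheric scattering model `I = J*t + A*(1 - t)`, where `J` is the haze-free image, `t` the transmission and `A` the atmospheric light. Treating `Mask_img` (the output of `Defog`) as the veil `V = A*(1 - t)`, we get `I - V = J*t` and `1 - V/A = t`, so `(I - V) / (1 - V/A)` gives back `J`. A small NumPy check on synthetic data confirms the algebra; the helpers `add_haze` and `remove_haze` are hypothetical.

```python
import numpy as np


def add_haze(J, t, A):
    """Synthesise haze: I = J * t + A * (1 - t), with t broadcast over channels."""
    t = t[..., None]
    return J * t + A * (1.0 - t)


def remove_haze(I, V, A):
    """Invert the model from the veil V = A * (1 - t), as deHaze does per channel."""
    V = V[..., None]
    return (I - V) / (1.0 - V / A)


rng = np.random.default_rng(0)
J = rng.random((4, 5, 3))            # haze-free image in [0, 1)
t = rng.uniform(0.2, 1.0, (4, 5))    # transmission, kept away from 0
A = 0.9                              # atmospheric light
I = add_haze(J, t, A)
J_rec = remove_haze(I, A * (1.0 - t), A)
print(np.abs(J_rec - J).max())       # only floating-point round-off remains
```

The same algebra shows why `deHaze` caps the veil with `maxV1`: as `V` approaches `A`, `t = 1 - V/A` approaches 0 and the division amplifies noise.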

3D isosurface extraction (marching cubes)

```python
import numpy as np
import matplotlib.pyplot as plt
from mpl_toolkits.mplot3d.art3d import Poly3DCollection
from skimage import measure
from skimage.draw import ellipsoid

if __name__ == '__main__':
    # Level set of an ellipsoid with semi-axes 6, 10, 16; the surface is where it equals 0.
    ellip_base = ellipsoid(6, 10, 16, levelset=True)
    # Two identical ellipsoids stacked along the first axis.
    ellip_double = np.concatenate((ellip_base[:-1, ...],
                                   ellip_base[2:, ...]), axis=0)

    # Newer scikit-image releases replace marching_cubes_lewiner with
    # marching_cubes, which returns the same four arrays.
    verts, faces, normals, values = measure.marching_cubes(ellip_double, 0)

    fig = plt.figure(figsize=(10, 10))
    ax = fig.add_subplot(111, projection='3d')
    mesh = Poly3DCollection(verts[faces])
    mesh.set_edgecolor('k')
    ax.add_collection3d(mesh)

    ax.set_xlabel("x-axis: a = 6 per ellipsoid")
    ax.set_ylabel("y-axis: b = 10")
    ax.set_zlabel("z-axis: c = 16")
    ax.set_xlim(0, 24)
    ax.set_ylim(0, 20)
    ax.set_zlim(0, 32)

    plt.tight_layout()
    plt.show()
```
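`measure.marching_cubes` returns the surface as a triangle mesh: `verts` holds vertex coordinates and each row of `faces` indexes three of them, which is exactly what `verts[faces]` feeds to `Poly3DCollection`. Quantities such as surface area follow directly from that layout. scikit-image provides `measure.mesh_surface_area(verts, faces)` for this; the NumPy sketch below (hypothetical helper `triangle_mesh_area`) shows the underlying formula, half the norm of each triangle's edge cross product, summed over all faces.

```python
import numpy as np


def triangle_mesh_area(verts, faces):
    """Total area of a triangle mesh given (N, 3) vertices and (M, 3) face indices."""
    tri = verts[faces]                  # (M, 3, 3): three corners per triangle
    edge1 = tri[:, 1] - tri[:, 0]
    edge2 = tri[:, 2] - tri[:, 0]
    # Each triangle's area is half the length of the cross product of two edges.
    return 0.5 * np.linalg.norm(np.cross(edge1, edge2), axis=1).sum()


# Corner tetrahedron: three right triangles (area 0.5 each) plus one
# equilateral face with side sqrt(2) (area sqrt(3) / 2).
verts = np.array([[0, 0, 0], [1, 0, 0], [0, 1, 0], [0, 0, 1]], dtype=float)
faces = np.array([[0, 1, 2], [0, 1, 3], [0, 2, 3], [1, 2, 3]])
print(triangle_mesh_area(verts, faces))  # 1.5 + sqrt(3) / 2, about 2.366
```

Applied to the `verts` and `faces` from the ellipsoid listing, this should agree with `measure.mesh_surface_area(verts, faces)`.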

Adding watermarks

Adding a text watermark

```python
from PIL import Image, ImageDraw, ImageFont


def add_text_to_image(image, text):
    """Tile a semi-transparent text watermark diagonally across the image."""
    # Raw string so the backslashes are not read as escape sequences. This is a
    # Windows font path; elsewhere use any TrueType font that covers the text.
    font = ImageFont.truetype(r'C:\Windows\Fonts\STXINGKA.TTF', 36)
    # Work on a canvas three times the size, with the photo in the centre cell,
    # so the rotated text still covers the region that is cropped out at the end.
    new_img = Image.new('RGBA', (image.size[0] * 3, image.size[1] * 3), (0, 0, 0, 0))
    new_img.paste(image, image.size)
    font_len = len(text)
    rgba_image = new_img.convert('RGBA')
    text_overlay = Image.new('RGBA', rgba_image.size, (255, 255, 255, 0))
    image_draw = ImageDraw.Draw(text_overlay)
    for i in range(0, rgba_image.size[0], font_len * 40 + 100):
        for j in range(0, rgba_image.size[1], 200):
            # Black text with alpha 50/255, i.e. mostly transparent.
            image_draw.text((i, j), text, font=font, fill=(0, 0, 0, 50))
    text_overlay = text_overlay.rotate(-45)
    image_with_text = Image.alpha_composite(rgba_image, text_overlay)
    # Crop the original image area back out of the centre of the canvas.
    image_with_text = image_with_text.crop((image.size[0], image.size[1], image.size[0] * 2, image.size[1] * 2))
    return image_with_text


if __name__ == '__main__':
    img = Image.open("lena-color.jpg")
    im_after = add_text_to_image(img, '测试使用')  # "for testing only"
    im_after.show()
```
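The text is drawn with `fill=(0, 0, 0, 50)`, black at alpha 50/255, and `Image.alpha_composite` lays the overlay over the picture with the "over" operator. Over an opaque background that reduces to `out = fg * a + bg * (1 - a)` with `a = alpha / 255`. The NumPy sketch below (hypothetical helper `over_opaque`, modelling only the opaque-background case) shows how faint the watermark ends up.

```python
import numpy as np


def over_opaque(bg_rgb, fg_rgb, fg_alpha):
    """'Over' compositing of a foreground colour with alpha in [0, 255]
    onto an opaque background: out = fg * a + bg * (1 - a)."""
    a = fg_alpha / 255.0
    return np.asarray(fg_rgb, float) * a + np.asarray(bg_rgb, float) * (1.0 - a)


# Black watermark text (alpha 50) over a white and a mid-grey pixel.
print(over_opaque([255, 255, 255], [0, 0, 0], 50))  # each channel about 205 (= 255 - 50)
print(over_opaque([128, 128, 128], [0, 0, 0], 50))
```

Raising the alpha in `fill` makes the text darker; alpha 0 leaves the photo unchanged and alpha 255 paints the text colour outright.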

Adding an image watermark

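```python
from PIL import Image

# Method 1: Image.composite. It is documented to require images of the same
# mode and size, so convert the RGB JPEG to RGBA first.
im = Image.open("lena-color.jpg").convert("RGBA")
mark = Image.open("mask.jpg")
layer = Image.new('RGBA', im.size, (0, 0, 0, 0))
# Place the watermark at one third of the width and height; the rest of the layer stays transparent.
layer.paste(mark, (int(im.size[0] / 3), int(im.size[1] / 3)))
# The layer's alpha channel is the mask: take the layer where it is opaque, keep im elsewhere.
out = Image.composite(layer, im, layer)
out.show()

# Method 2: alpha_composite and blend, both on RGBA images.
im1 = Image.open("lena-color.jpg")
im2 = Image.open("mask.jpg")
layer = Image.new('RGBA', im1.size, (0, 0, 0, 0))
layer.paste(im2, (int(im1.size[0] / 3), int(im1.size[1] / 3)))
im1 = im1.convert("RGBA")
newim1 = Image.alpha_composite(im1, layer)
newim1.show()
# blend mixes the whole images 50/50: out = im1 * (1 - 0.5) + layer * 0.5.
newim2 = Image.blend(im1, layer, 0.5)
newim2.show()
```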
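`Image.blend(im1, im2, alpha)` is documented as `out = im1 * (1.0 - alpha) + im2 * alpha`, a constant mix applied to every band, whereas `alpha_composite` uses the layer's alpha channel pixel by pixel. A minimal NumPy sketch of the blend rule on a single RGBA pixel (hypothetical helper `blend`) shows what happens where the watermark layer is fully transparent:

```python
import numpy as np


def blend(px1, px2, alpha):
    """Image.blend's documented rule, applied to every band (RGBA here)."""
    return np.asarray(px1, float) * (1.0 - alpha) + np.asarray(px2, float) * alpha


photo = [200, 150, 100, 255]     # opaque photo pixel
empty_layer = [0, 0, 0, 0]       # transparent part of the watermark layer
print(blend(photo, empty_layer, 0.5))  # 100, 75, 50, 127.5: colour and alpha both halved
```

So `newim2` is mixed with the layer everywhere, including its transparent black area, while `alpha_composite` leaves pixels under the transparent part of the layer untouched.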

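Extracting frames from a video

```python
import os

import cv2

vidcap = cv2.VideoCapture('test.mov')
fps = vidcap.get(cv2.CAP_PROP_FPS)
size = (int(vidcap.get(cv2.CAP_PROP_FRAME_WIDTH)),
        int(vidcap.get(cv2.CAP_PROP_FRAME_HEIGHT)))
print("fps:", fps, "frame size (w, h):", size)

# cv2.imwrite does not create directories; it just returns False, so create it first.
os.makedirs("./frame", exist_ok=True)

count = 0
success, image = vidcap.read()
while success:
    cv2.imwrite("./frame/frame%d.jpg" % count, image)
    count += 1
    success, image = vidcap.read()
vidcap.release()
```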
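The loop above saves every frame. If only a few frames per second are needed, the `fps` value it reads gives both a sampling step and a timestamp for each saved frame. A minimal sketch of that bookkeeping, assuming you want roughly `target_fps` frames per second (the helper `frames_to_keep` is hypothetical):

```python
def frames_to_keep(fps, n_frames, target_fps):
    """Indices and timestamps (seconds) of the frames to save when sampling
    about target_fps frames per second from a video recorded at fps."""
    step = max(1, round(fps / target_fps))   # keep every step-th frame
    return [(i, i / fps) for i in range(0, n_frames, step)]


# A 30 fps clip of 10 frames sampled at about 10 frames per second.
print(frames_to_keep(30, 10, 10))  # [(0, 0.0), (3, 0.1), (6, 0.2), (9, 0.3)]
```

In the capture loop the equivalent is to call `cv2.imwrite` only when `count % step == 0`. `n_frames` could come from `vidcap.get(cv2.CAP_PROP_FRAME_COUNT)`, though some formats may report it only approximately.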

Image pyramids (Gaussian and Laplacian)

```python
import cv2


def pyramid_image(image, level=3):
    """Build and show a Gaussian pyramid: each level is blurred and downsampled by 2."""
    temp = image.copy()
    pyramid_images = []
    for i in range(level):
        dst = cv2.pyrDown(temp)
        pyramid_images.append(dst)
        cv2.imshow("gaussian" + str(i), dst)
        temp = dst.copy()
    return pyramid_images


def laplacian_image(image):
    """Show the Laplacian pyramid: each Gaussian level minus the upsampled next level."""
    pyramid_images = pyramid_image(image)
    level = len(pyramid_images)
    for i in range(level - 1, -1, -1):
        # dstsize is (width, height), i.e. shape[1::-1], not shape[:2].
        if i == 0:
            expand = cv2.pyrUp(pyramid_images[i], dstsize=image.shape[1::-1])
            lpls = cv2.subtract(image, expand)
        else:
            expand = cv2.pyrUp(pyramid_images[i], dstsize=pyramid_images[i - 1].shape[1::-1])
            lpls = cv2.subtract(pyramid_images[i - 1], expand)
        # For uint8 images cv2.subtract saturates at 0, so negative detail is clipped.
        cv2.imshow("lap" + str(i), lpls)


src = cv2.imread("lena-color.jpg")
cv2.imshow("input image", src)
laplacian_image(src)  # also shows the Gaussian levels
cv2.waitKey(0)
cv2.destroyAllWindows()
```
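According to the OpenCV documentation, `cv2.pyrDown` outputs `((cols + 1) / 2, (rows + 1) / 2)` by default (integer division) and `cv2.pyrUp` outputs `(cols * 2, rows * 2)`. For odd sizes the upsampled level is therefore one pixel larger than the level above it, which is why the Laplacian step passes `dstsize`; OpenCV expects it as (width, height), the reverse of NumPy's `shape`. A small sketch (hypothetical helper `pyramid_shapes`) predicts the (rows, cols) of each Gaussian level:

```python
def pyramid_shapes(rows, cols, levels=3):
    """(rows, cols) of each cv2.pyrDown level, using the default
    output size ((cols + 1) // 2, (rows + 1) // 2)."""
    shapes = []
    for _ in range(levels):
        rows, cols = (rows + 1) // 2, (cols + 1) // 2
        shapes.append((rows, cols))
    return shapes


print(pyramid_shapes(512, 512))  # [(256, 256), (128, 128), (64, 64)]
print(pyramid_shapes(5, 7))      # [(3, 4), (2, 2), (1, 1)]
```

For a 5 x 7 image, upsampling the (3, 4) level by default gives 6 x 8, so `dstsize=(7, 5)` (width first) is needed before subtracting it from the original.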