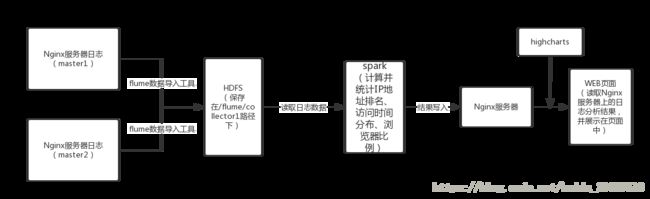

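Big Data Visualization: Nginx Server Log Analysis and Visualization (Nginx + Flume + HDFS + Spark + Highcharts)

Project overview:

This project is a web application that uses big-data tools to present Nginx access logs graphically. Opening the application shows charts of statistics computed from the Nginx logs, such as the site's page views for each hour of the day. An Nginx log line is about 250 bytes on average. At 100 million requests per day, that comes to roughly 2.5 × 10^10 bytes, or about 23 GB of logs per day. The application is meant for analyzing and aggregating log data at this scale and producing the statistics quickly.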
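The size estimate above is simple arithmetic. This small sketch reproduces it so the figure can be rechecked for other traffic levels. The function name is illustrative, and GiB here means 2^30 bytes.

```python
def daily_log_volume_gib(lines_per_day: int, avg_line_bytes: int) -> float:
    """Estimate daily access-log volume in GiB (2**30 bytes)."""
    return lines_per_day * avg_line_bytes / 2**30


# 100 million lines/day at ~250 bytes each (the figures used above)
volume = daily_log_volume_gib(100_000_000, 250)
print(f"{volume:.1f} GiB/day")  # prints "23.3 GiB/day"
```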

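Runtime environment:

- Three virtual machines simulate a Hadoop high-availability (HA) cluster as it would run in production. Hostnames and IP addresses: master1 (192.168.49.128), master2 (192.168.49.129) and slave1 (192.168.49.130).

- Flume, Nginx and Spark 2 are installed on both master1 and master2.

Project architecture diagram:

Implementation: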

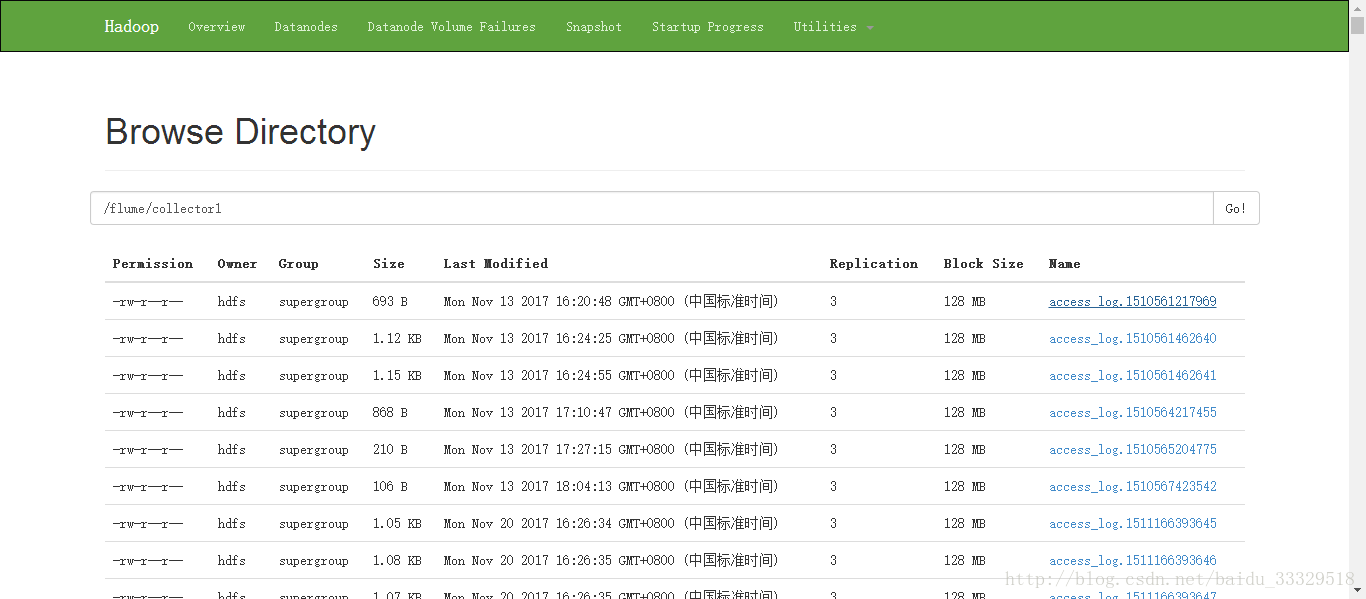

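Step 1: Set up a distributed Nginx environment. Configure Nginx and Flume on each of the two virtual machines, and import the log files of both Nginx servers into the same HDFS directory (in this project, /flume/collector1).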

Flume configuration file on master1:

```properties
# This file defines two agents. Each runs as its own process, selected by name,
# e.g. flume-ng agent -n collector1 -c conf -f <this file>

# Agent tail1: follow the local Nginx access log and forward events over Avro
tail1.sources=src1
tail1.channels=ch1
tail1.sinks=sink1
tail1.sources.src1.type=exec
tail1.sources.src1.command=tail -F /var/log/nginx/access.log
tail1.sources.src1.channels=ch1
tail1.channels.ch1.type=memory
tail1.channels.ch1.capacity=500
tail1.sinks.sink1.type=avro
tail1.sinks.sink1.hostname=192.168.49.128
tail1.sinks.sink1.port=6000
tail1.sinks.sink1.batch-size=1
tail1.sinks.sink1.channel=ch1

# Agent collector1: receive Avro events on port 6000 and write them to HDFS
collector1.sources=src1
collector1.channels=ch1
collector1.sinks=sink1
collector1.sources.src1.type=avro
collector1.sources.src1.bind=192.168.49.128
collector1.sources.src1.port=6000
collector1.sources.src1.channels=ch1
collector1.channels.ch1.type=memory
collector1.channels.ch1.capacity=500
collector1.sinks.sink1.type=hdfs
collector1.sinks.sink1.hdfs.path=/flume/collector1
collector1.sinks.sink1.hdfs.filePrefix=access_log
collector1.sinks.sink1.hdfs.writeFormat=Text
collector1.sinks.sink1.channel=ch1
collector1.sinks.sink1.hdfs.fileType=DataStream
```

Flume configuration file on master2:

```properties
# Agent tail1 on master2: follow the local access log and write straight to HDFS
tail1.sources=src1
tail1.channels=ch1
tail1.sinks=sink1
tail1.sources.src1.type=exec
tail1.sources.src1.command=tail -F /var/log/nginx/access.log
tail1.sources.src1.channels=ch1
tail1.channels.ch1.type=memory
tail1.channels.ch1.capacity=500
tail1.sinks.sink1.type=hdfs
tail1.sinks.sink1.hdfs.path=/flume/collector1
tail1.sinks.sink1.hdfs.filePrefix=access_log
tail1.sinks.sink1.hdfs.writeFormat=Text
tail1.sinks.sink1.channel=ch1
tail1.sinks.sink1.hdfs.fileType=DataStream
```
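In both files, the wiring follows one rule. A source lists its channels under the plural key `channels`, while a sink names exactly one channel under the singular key `channel`, and both must refer to a channel declared in `<agent>.channels`. A typo in these keys is easy to miss.

The helper below is hypothetical and not part of Flume. It is a minimal sketch that reads a properties file like the ones above and reports wiring mistakes. It checks only these keys, not component types or other settings.

```python
from typing import Dict, List


def check_flume_wiring(text: str) -> List[str]:
    """Return wiring problems found in a Flume agent properties file (hypothetical helper)."""
    props: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blanks, comments and malformed lines
        key, value = line.split("=", 1)
        props[key.strip()] = value.strip()

    problems: List[str] = []
    agents = {key.split(".")[0] for key in props}
    for agent in sorted(agents):
        # component lists such as "tail1.channels" are space-separated names
        channels = set(props.get(f"{agent}.channels", "").split())
        for src in props.get(f"{agent}.sources", "").split():
            # sources use the plural key "channels" (may list several)
            bound = props.get(f"{agent}.sources.{src}.channels", "").split()
            if not bound:
                problems.append(f"{agent}: source {src} has no channels")
            for ch in bound:
                if ch not in channels:
                    problems.append(f"{agent}: source {src} uses undeclared channel {ch}")
        for sink in props.get(f"{agent}.sinks", "").split():
            # sinks use the singular key "channel" (exactly one)
            ch = props.get(f"{agent}.sinks.{sink}.channel")
            if ch is None:
                problems.append(f"{agent}: sink {sink} has no channel")
            elif ch not in channels:
                problems.append(f"{agent}: sink {sink} uses undeclared channel {ch}")
    return problems
```

For example, `print(check_flume_wiring(open("flume.conf").read()))` prints an empty list for both configurations above. Here `flume.conf` stands for wherever the file is actually stored.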

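The imported log files are shown in the figure below:

Step 2: Use Spark to extract the relevant fields from the logs and analyze them. (This article uses hourly page views on the Nginx servers as the example.)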
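The hour of each request sits in the `$time_local` field, for example `[25/Nov/2017:17:05:11 +0800]` in the first sample line below. The job in this step takes the hour as the second colon-separated field of the whole line. That works only while nothing before the timestamp contains a colon; an IPv6 client address such as `::1` would break it.

Below is a minimal sketch of a more defensive alternative. It assumes the log format shown below and matches the bracketed timestamp with a regular expression instead of splitting the line. The function names are illustrative.

```python
import re
from collections import Counter
from typing import Iterable, List, Optional, Tuple

# Matches "$time_local", e.g. "[25/Nov/2017:17:05:11 +0800]", capturing the hour
TIME_LOCAL = re.compile(r"\[\d{2}/[A-Za-z]{3}/\d{4}:(\d{2}):\d{2}:\d{2} [+-]\d{4}\]")


def hour_of(line: str) -> Optional[int]:
    """Return the request hour (0-23), or None if the line has no timestamp."""
    m = TIME_LOCAL.search(line)
    return int(m.group(1)) if m else None


def hourly_counts(lines: Iterable[str]) -> List[Tuple[int, int]]:
    """Count requests per hour, sorted by hour (a local stand-in for the Spark job)."""
    counts = Counter(h for h in map(hour_of, lines) if h is not None)
    return sorted(counts.items())
```

In PySpark, the same function can replace the split in the pipeline, for example `log.map(hour_of).filter(lambda h: h is not None).map(lambda h: (h, 1))`.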

Sample Nginx log lines:

```text
192.168.49.123 - - [25/Nov/2017:17:05:11 +0800] "GET /ip/part-00000 HTTP/1.1" 200 364 "http://192.168.49.128/project/index.html" "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/49.0.2623.221 Safari/537.36 SE 2.X MetaSr 1.0" "-"
192.168.49.1 - - [22/Nov/2017:09:34:03 +0800] "GET /nginx-logo.png HTTP/1.1" 200 368 "http://192.168.49.129/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:57.0) Gecko/20100101 Firefox/57.0" "-"
192.168.49.1 - - [22/Nov/2017:09:35:56 +0800] "GET /poweredby.png HTTP/1.1" 200 2811 "http://192.168.49.129/" "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/59.0.3071.86 Safari/537.36" "-"
192.168.49.1 - - [22/Nov/2017:09:45:56 +0800] "GET /nginx-logo.png HTTP/1.1" 200 368 "http://192.168.49.129/" "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/534.57.2 (KHTML, like Gecko) Version/5.1.7 Safari/534.57.2" "-"
```

Spark splits each log line on colons and takes the second field, which is the hour of the request (for example, 17 in [25/Nov/2017:17:05:11 +0800]). It then counts the requests per hour and writes the result as a text file to the time directory under the Nginx web root. The key code is:

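```python
from pyspark import SparkContext

sc = SparkContext(appName="NginxHourlyPV")  # in the pyspark shell, sc already exists
# Input: the HDFS directory Flume writes to (resolved against fs.defaultFS)
log = sc.textFile("/flume/collector1")

(log.map(lambda line: line.split(':')[1])     # "...[25/Nov/2017:17:05:11 +0800]..." -> "17"
    .map(lambda hour: (int(hour), 1))         # key by numeric hour
    .reduceByKey(lambda a, b: a + b)          # requests per hour
    .sortByKey(numPartitions=1)               # one sorted partition -> a single part-00000
    .saveAsTextFile("file:///usr/share/nginx/html/time"))  # local disk of the node running the task
```

The generated text file, named part-00000, contains: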

```text
(9, 419)
(10, 237)
(11, 1614)
(12, 438)
(14, 15)
(15, 114)
(16, 318)
```

Step 3: Read the statistics from the generated text file and draw the chart in Highcharts, loading the data with Ajax.
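Each line of part-00000 is the Python repr of an (hour, count) tuple. As a result, the page has to pull the numbers out of strings like (9, 419) itself, and it downloads the file twice: once in count2() for the categories and once in count() for the values.

As an alternative not used in the original project, a small post-processing step could convert the result to JSON once on the server. The sketch below assumes a hypothetical output file, `time.json`, in the Nginx web root. The keys `categories` and `data` are chosen to map directly onto `xAxis.categories` and `series[0].data`.

```python
import ast
import json
from pathlib import Path


def part_file_to_json(part_file: Path, json_file: Path) -> dict:
    """Convert Spark's "(hour, count)" lines into one JSON object for Highcharts."""
    pairs = []
    for line in part_file.read_text().splitlines():
        line = line.strip()
        if not line:
            continue
        # literal_eval parses the tuple literal without executing arbitrary code
        hour, count = ast.literal_eval(line)
        pairs.append((int(hour), int(count)))
    pairs.sort()
    payload = {
        "categories": [hour for hour, _ in pairs],  # -> xAxis.categories
        "data": [count for _, count in pairs],      # -> series[0].data
    }
    json_file.write_text(json.dumps(payload))
    return payload
```

For example, call `part_file_to_json(Path("/usr/share/nginx/html/time/part-00000"), Path("/usr/share/nginx/html/time.json"))` after the Spark job. The page could then fetch both arrays with a single `$.getJSON("/time.json", ...)` call before building the chart. The JSON file is kept outside the time directory so the cleanup in step 4 does not delete it.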

The JavaScript code is as follows:

```html
<script>
$(document).ready(function() {
    var title = {
        style: {
            fontSize: '37px'
        },
        text: 'Nginx server visits by hour of day'
    };
    var subtitle = {
        text: 'Source: Nginx access logs'
    };
    var xAxis = {
        title: {
            text: 'Time of access (hour)'
        },
        categories: count2()   // hours, read from /time/part-00000
    };
    var yAxis = {
        title: {
            text: 'Visits'
        },
        plotLines: [{
            value: 0,
            width: 1,
            color: '#808080'
        }]
    };
    var tooltip = {
        valueSuffix: ' visits'
    };
    var legend = {
        layout: 'vertical',
        align: 'right',
        verticalAlign: 'middle',
        borderWidth: 0
    };
    var series = [{
        name: 'Nginx',
        data: count()          // visit counts, read from the same file
    }];

    var json = {};
    json.title = title;
    json.subtitle = subtitle;
    json.xAxis = xAxis;
    json.yAxis = yAxis;
    json.tooltip = tooltip;
    json.legend = legend;
    json.series = series;

    $('#container2').highcharts(json);
});
</script>
```

The x-axis (xAxis) categories come from the count2 function, which reads them from the file:

```javascript
function count2() {
    var rst = [];
    $.ajax({
        type: "get",
        async: false,   // synchronous, so rst is filled before it is returned
        url: "/time/part-00000",
        success: function(data) {
            // Each line looks like "(9, 419)"; keep the hour (first number)
            data.split('\n').forEach(function(line) {
                var m = line.match(/\((\d+),\s*(\d+)\)/);
                if (m) {
                    rst.push(parseInt(m[1], 10));
                }
            });
        }
    });
    return rst;
}
```

The y-axis values (the series data) come from the count function, which reads the same file:

```javascript
function count() {
    var rst = [];
    $.ajax({
        type: "get",
        async: false,   // synchronous, so rst is filled before it is returned
        url: "/time/part-00000",
        success: function(data) {
            // Each line looks like "(9, 419)"; keep the visit count (second number)
            data.split('\n').forEach(function(line) {
                var m = line.match(/\((\d+),\s*(\d+)\)/);
                if (m) {
                    rst.push(parseInt(m[2], 10));
                }
            });
        }
    });
    return rst;
}
```

Step 4: Add code to the Spark script that deletes the previous result directory before each run, because saveAsTextFile fails when the output directory already exists. Reference code:

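```python
import os
import shutil

# Remove the previous output; saveAsTextFile will not write into an existing directory
path = "/usr/share/nginx/html/time"
if os.path.exists(path):
    shutil.rmtree(path)
```

Step 5: Create a Linux scheduled task with crontab -e that reruns the Spark program at a fixed interval. Each run refreshes the statistics, so the chart stays close to real time.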
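Steps 4 and 5 can be combined into one script for cron to call. The sketch below is hypothetical: the job path `/opt/nginx-stats/hourly_pv.py`, the module name `refresh`, and the 10-minute schedule are all assumptions. Also, `spark-submit` must be on cron's minimal PATH or be given as a full path.

```python
"""Hypothetical cron wrapper: delete the old result, then rerun the Spark job.

Example crontab line (every 10 minutes; all paths are assumptions):
*/10 * * * * cd /opt/nginx-stats && python3 -c 'from refresh import refresh; raise SystemExit(refresh())'
"""
import os
import shutil
import subprocess

OUTPUT_DIR = "/usr/share/nginx/html/time"     # output directory used in this article
JOB_SCRIPT = "/opt/nginx-stats/hourly_pv.py"  # hypothetical path to the PySpark job


def refresh(output_dir: str = OUTPUT_DIR, job: str = JOB_SCRIPT, run=subprocess.run) -> int:
    """Remove the previous result and run the job; return spark-submit's exit code."""
    # saveAsTextFile refuses to write into an existing directory
    if os.path.exists(output_dir):
        shutil.rmtree(output_dir)
    return run(["spark-submit", job]).returncode
```

The `run` parameter exists only so the function can be exercised without Spark installed. Between the deletion and the end of the job, the chart has nothing to read. Having the job write to a staging directory that is then renamed into place would shorten that window.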

The final result is shown in the figure below:

Reference article:

http://blog.csdn.net/lifuxiangcaohui/article/details/49949865