Computer Vision: Camera Calibration with Zhang Zhengyou's Method

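Contents

- I. Camera Calibration
  - 1. Calibration Principle
  - 2. Calibration Steps
  - 3. Other Notes on the Dataset
- II. Calibration Experiment
  - 1. Dataset
  - 2. Code Implementation
  - 3. Experimental Results
  - 4. Code Walkthrough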


I. Camera Calibration:

1. Principle:

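In general, calibration covers two transformations:

From the world coordinate system to the camera coordinate system. This is a 3D-to-3D transformation described by the rotation $R$ and the translation $t$ (the camera's extrinsic parameters).

From the camera coordinate system to the image coordinate system. This is a 3D-to-2D projection described by $K$ (the camera's intrinsic parameters), together with the lens distortion coefficients.

In short, calibration recovers the mapping from world coordinates to image coordinates, i.e. it solves for the final projection matrix $P = K[R \mid t]$.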
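To make the two stages concrete, here is a minimal NumPy sketch of the ideal pinhole projection with lens distortion ignored. The values of K, R and t are made-up examples, not results from the experiment below, and `project_point` is a hypothetical helper rather than an OpenCV function.

```python
import numpy as np

def project_point(K, R, t, X_world):
    """Ideal pinhole projection: s * [u, v, 1]^T = K (R @ X_world + t), no distortion."""
    X_cam = R @ X_world + t   # world -> camera coordinates (extrinsics R, t)
    x = K @ X_cam             # camera -> homogeneous image coordinates (intrinsics K)
    return x[:2] / x[2]       # divide by the depth s to get pixel coordinates (u, v)

# Example values (assumed for illustration only)
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)                      # camera axes aligned with the world axes
t = np.array([0.0, 0.0, 2.0])      # board plane 2 units in front of the camera
X = np.array([0.1, -0.05, 0.0])    # a point on the board plane (Z = 0)

uv = project_point(K, R, t, X)

# The same mapping expressed as one 3x4 projection matrix P = K [R | t]
P = K @ np.hstack([R, t.reshape(3, 1)])
x_h = P @ np.append(X, 1.0)
uv_from_P = x_h[:2] / x_h[2]
```

With these numbers both routes give (u, v) = (360, 220), which shows that composing the extrinsic and intrinsic steps is the same as applying P directly.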

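2. Steps of Camera Calibration

1). Prepare a checkerboard for Zhang's method with a known square size and photograph it with the camera from different angles to obtain a set of images. Usually 15 to 25 calibration images are used; with too few images the estimated parameters tend to be inaccurate;

2). Detect feature points such as the board's corners in each image to get their pixel coordinates; from the known square size and the chosen world origin, compute the physical (world) coordinates of the same corners;

3). Solve for the intrinsic and extrinsic matrices. From the relation between the physical and pixel coordinates, compute the homography H of each image, use it to build the v vectors, solve for the matrix B, recover the intrinsic matrix A (the same matrix as K above) from B, and finally compute the extrinsic parameters of each image;

4). Solve for the distortion parameters. Using the ideal pixel coordinates $(u, v)$ and the observed, distorted coordinates $(\hat u, \hat v)$, build a linear system and solve it for the radial distortion coefficients;

5). Refine all of the above parameters with the Levenberg-Marquardt (L-M) algorithm.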
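Step 3 starts from one homography per image. The sketch below estimates H from the planar board coordinates (Z = 0) and the detected pixel corners with the normalized direct linear transform (DLT). This is an illustrative assumption rather than the author's code: Zhang's paper additionally refines the linear estimate by minimizing the geometric error, and OpenCV performs all of this internally inside `cv2.calibrateCamera`. The function names are hypothetical.

```python
import numpy as np

def _normalize(pts):
    """Hartley normalization: move the centroid to the origin, mean distance -> sqrt(2)."""
    centroid = pts.mean(axis=0)
    mean_dist = np.sqrt(((pts - centroid) ** 2).sum(axis=1)).mean()
    s = np.sqrt(2) / mean_dist
    T = np.array([[s, 0.0, -s * centroid[0]],
                  [0.0, s, -s * centroid[1]],
                  [0.0, 0.0, 1.0]])
    pts_h = np.column_stack([pts, np.ones(len(pts))])
    return (T @ pts_h.T).T, T

def estimate_homography(obj_xy, img_uv):
    """Estimate H with [u, v, 1]^T ~ H @ [X, Y, 1]^T from >= 4 planar correspondences."""
    src, T_src = _normalize(np.asarray(obj_xy, dtype=float))
    dst, T_dst = _normalize(np.asarray(img_uv, dtype=float))
    rows = []
    for (X, Y, _), (u, v, _) in zip(src, dst):
        # Each correspondence gives two linear equations in the 9 entries of H
        rows.append([-X, -Y, -1, 0, 0, 0, u * X, u * Y, u])
        rows.append([0, 0, 0, -X, -Y, -1, v * X, v * Y, v])
    _, _, Vt = np.linalg.svd(np.array(rows))
    Hn = Vt[-1].reshape(3, 3)                 # least-squares null vector of the system
    H = np.linalg.inv(T_dst) @ Hn @ T_src     # undo both normalizations
    return H / H[2, 2]
```

Normalizing both point sets before the SVD keeps the linear system well conditioned, since raw pixel coordinates are hundreds of times larger than the board coordinates.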

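Algorithm steps of Zhang's method:

Input calibration-board images -> extract corner coordinates -> construct equations -> solve for parameters -> least-squares parameter estimation -> maximum-likelihood parameter refinement -> compute distortion parameters -> correct distortion -> output corrected images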
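The "construct equations -> solve for parameters" stage can be sketched as follows, assuming one homography per image is already available (for example from a DLT step). Each H = λA[r1 r2 t] yields two linear constraints on b = (B11, B12, B22, B13, B23, B33) through Zhang's v_ij vectors; stacking them for at least three images and taking the SVD null vector gives B = A^-T A^-1, from which A follows in closed form. The conditioning scale `f0` is an added assumption for numerical stability, and the function names are hypothetical.

```python
import numpy as np

def _v(H, i, j):
    """Zhang's v_ij built from columns i and j of H, so that h_i^T B h_j = v_ij . b."""
    hi, hj = H[:, i], H[:, j]
    return np.array([
        hi[0] * hj[0],
        hi[0] * hj[1] + hi[1] * hj[0],
        hi[1] * hj[1],
        hi[2] * hj[0] + hi[0] * hj[2],
        hi[2] * hj[1] + hi[1] * hj[2],
        hi[2] * hj[2],
    ])

def intrinsics_from_homographies(Hs):
    """Closed-form intrinsic matrix A from >= 3 board-to-image homographies."""
    # Conditioning only: rescale pixel units so the entries of B have similar magnitude.
    f0 = np.mean([np.linalg.norm(H[:2]) / np.linalg.norm(H[2]) for H in Hs])
    N = np.diag([1.0 / f0, 1.0 / f0, 1.0])
    V = []
    for H in Hs:
        Hn = N @ H
        Hn = Hn / np.linalg.norm(Hn)
        V.append(_v(Hn, 0, 1))                  # h1^T B h2 = 0
        V.append(_v(Hn, 0, 0) - _v(Hn, 1, 1))   # h1^T B h1 = h2^T B h2
    _, _, Vt = np.linalg.svd(np.array(V))
    B11, B12, B22, B13, B23, B33 = Vt[-1]       # b, known only up to scale and sign
    v0 = (B12 * B13 - B11 * B23) / (B11 * B22 - B12 ** 2)
    lam = B33 - (B13 ** 2 + v0 * (B12 * B13 - B11 * B23)) / B11
    alpha = np.sqrt(lam / B11)
    beta = np.sqrt(lam * B11 / (B11 * B22 - B12 ** 2))
    gamma = -B12 * alpha ** 2 * beta / lam
    u0 = gamma * v0 / beta - B13 * alpha ** 2 / lam
    A_n = np.array([[alpha, gamma, u0], [0.0, beta, v0], [0.0, 0.0, 1.0]])
    return np.linalg.inv(N) @ A_n               # undo the conditioning scale
```

The closed-form expressions are invariant to the sign of the SVD null vector, so no sign fix is needed; in practice this estimate is only the starting point for the L-M refinement.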

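3. Other Notes:

1). When preparing the dataset, the calibration images should cover the whole measurement volume and the whole field of view. Divide the camera image into four quadrants and make sure the board appears evenly across all four; within each quadrant, tilting the board in two different directions is recommended;

2). Circle or ring features (on circle-grid boards) should preferably span more than 20 pixels, and the board should occupy roughly 1/4 of the frame;

3). Illuminate the board with an auxiliary light source so that it is bright enough and evenly lit;

4). The board must not be overexposed: overexposure shifts the extracted feature contours and makes the circle-center estimates inaccurate;

5). The board features must not be noticeably out of focus. If they are, adjust the distance to the board, the aperture size, and the image distance (for a fixed-focal-length lens, "focusing" usually means adjusting the image distance);

6). The aperture and focal length must not change during calibration; if either changes, the camera has to be recalibrated.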
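Notes 1) and 2) can be checked roughly on the detected corners before calibrating. The helpers below are hypothetical: they assign each view to a quadrant by the centroid of its corners, and approximate the board's share of the frame by the bounding box of the inner corners, which slightly underestimates the full board. Corner arrays in OpenCV's (N, 1, 2) shape are accepted because they are reshaped to (N, 2).

```python
import numpy as np

def quadrant_coverage(corner_sets, image_size):
    """Count how many views have their corner centroid in each image quadrant.
    corner_sets: list of (N, 2) or (N, 1, 2) pixel arrays; image_size: (width, height)."""
    w, h = image_size
    counts = {"top-left": 0, "top-right": 0, "bottom-left": 0, "bottom-right": 0}
    for corners in corner_sets:
        cx, cy = np.asarray(corners, dtype=float).reshape(-1, 2).mean(axis=0)
        vert = "top" if cy < h / 2 else "bottom"
        horiz = "left" if cx < w / 2 else "right"
        counts[f"{vert}-{horiz}"] += 1
    return counts

def board_area_fraction(corners, image_size):
    """Bounding-box area of the inner corners divided by the image area."""
    pts = np.asarray(corners, dtype=float).reshape(-1, 2)
    bw, bh = pts.max(axis=0) - pts.min(axis=0)
    return (bw * bh) / (image_size[0] * image_size[1])
```

A quadrant with a count of zero, or area fractions far below 1/4, suggests more views are needed in that region.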

II. Calibration Experiment

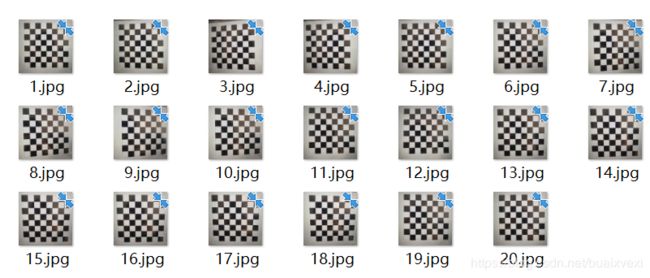

1. Dataset:

The board has 7 × 7 inner corners, so the pattern size passed to OpenCV is (7,7).
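A (7,7) pattern counts inner corners only, so it corresponds to a board of 8 × 8 squares. The sketch below mirrors the cp_int / cp_world construction in calib_RGB.py to show which world coordinates the corners receive: Z = 0 on the board plane, ordered row by row and scaled by the square size, whose unit then becomes the unit of the translation vectors. `board_object_points` is a hypothetical name.

```python
import numpy as np

def board_object_points(inner_corners, square_size):
    """World coordinates (Z = 0) of a w x h inner-corner grid, ordered row by row."""
    w, h = inner_corners
    pts = np.zeros((w * h, 3), np.float32)
    # mgrid gives (x, y) index pairs; .T.reshape makes x vary fastest
    pts[:, :2] = np.mgrid[0:w, 0:h].T.reshape(-1, 2)
    return pts * square_size

pts = board_object_points((7, 7), 1.5)
```

Here `pts[1]` is (1.5, 0, 0), `pts[7]` starts the second row at (0, 1.5, 0), and the last corner is (9, 9, 0).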

2. Code Implementation:

calib_RGB.py

```python
# -*- coding: utf-8 -*-
r"""
Homework: Calibrate the Camera with ZhangZhengyou Method.
Picture File Folder: ".\pic\RGB_camera_calib_img", Without Distort.
By YouZhiyuan 2019.11.18
"""
import os
import glob

import numpy as np
import cv2


def calib(inter_corner_shape, size_per_grid, img_dir, img_type):
    # criteria: only for subpix calibration, which is not used here.
    # criteria = (cv2.TERM_CRITERIA_EPS + cv2.TERM_CRITERIA_MAX_ITER, 30, 0.001)
    w, h = inter_corner_shape
    # cp_int: corner points in integer grid units in world space,
    # like (0,0,0), (1,0,0), (2,0,0), ..., (w-1,h-1,0).
    cp_int = np.zeros((w * h, 3), np.float32)
    cp_int[:, :2] = np.mgrid[0:w, 0:h].T.reshape(-1, 2)
    # cp_world: corner points in world space, scaled by the square size.
    cp_world = cp_int * size_per_grid

    obj_points = []  # the points in world space
    img_points = []  # the points in image space (relevant to obj_points)
    img_size = None
    images = glob.glob(os.path.join(img_dir, '*.' + img_type))
    for fname in images:
        img = cv2.imread(fname)
        if img is None:  # skip unreadable files
            continue
        gray_img = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
        img_size = gray_img.shape[::-1]  # (width, height)
        # find the corners, cp_img: corner points in pixel space.
        ret, cp_img = cv2.findChessboardCorners(gray_img, (w, h), None)
        # if ret is True, save.
        if ret:
            # cv2.cornerSubPix(gray_img, cp_img, (11, 11), (-1, -1), criteria)
            obj_points.append(cp_world)
            img_points.append(cp_img)
            # view the corners
            cv2.drawChessboardCorners(img, (w, h), cp_img, ret)
            cv2.imshow('FoundCorners', img)
            cv2.waitKey(1)
    cv2.destroyAllWindows()
    if not obj_points:
        raise RuntimeError('No chessboard corners were found in %s' % img_dir)

    # calibrate the camera
    ret, mat_inter, coff_dis, v_rot, v_trans = cv2.calibrateCamera(
        obj_points, img_points, img_size, None, None)
    print("ret:", ret)  # overall RMS reprojection error
    print("internal matrix:\n", mat_inter)
    # in the form of (k_1, k_2, p_1, p_2, k_3)
    print("distortion coefficients:\n", coff_dis)
    print("rotation vectors:\n", v_rot)
    print("translation vectors:\n", v_trans)

    # calculate the reprojection error
    total_error = 0
    for i in range(len(obj_points)):
        img_points_repro, _ = cv2.projectPoints(obj_points[i], v_rot[i], v_trans[i], mat_inter, coff_dis)
        error = cv2.norm(img_points[i], img_points_repro, cv2.NORM_L2) / len(img_points_repro)
        total_error += error
    print("Average Error of Reproject:", total_error / len(obj_points))
    return mat_inter, coff_dis


if __name__ == '__main__':
    inter_corner_shape = (7, 7)
    size_per_grid = 1.5
    img_dir = "C://Users//pc//Desktop//pic//RGB_img"
    img_type = "jpg"
    calib(inter_corner_shape, size_per_grid, img_dir, img_type)
```

calib_IR.py

```python
# -*- coding: utf-8 -*-
r"""
Homework: Calibrate the Camera with ZhangZhengyou Method.
Picture File Folder: ".\pic\IR_camera_calib_img", With Distort.
By YouZhiyuan 2019.11.18
"""
import os
import glob

import numpy as np
import cv2


def calib(inter_corner_shape, size_per_grid, img_dir, img_type):
    # criteria: only for subpix calibration, which is not used here.
    # criteria = (cv2.TERM_CRITERIA_EPS + cv2.TERM_CRITERIA_MAX_ITER, 30, 0.001)
    w, h = inter_corner_shape
    # cp_int: corner points in integer grid units in world space,
    # like (0,0,0), (1,0,0), (2,0,0), ..., (w-1,h-1,0).
    cp_int = np.zeros((w * h, 3), np.float32)
    cp_int[:, :2] = np.mgrid[0:w, 0:h].T.reshape(-1, 2)
    # cp_world: corner points in world space, scaled by the square size.
    cp_world = cp_int * size_per_grid

    obj_points = []  # the points in world space
    img_points = []  # the points in image space (relevant to obj_points)
    img_size = None
    images = glob.glob(os.path.join(img_dir, '*.' + img_type))
    for fname in images:
        img = cv2.imread(fname)
        if img is None:  # skip unreadable files
            continue
        gray_img = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
        img_size = gray_img.shape[::-1]  # (width, height)
        # find the corners, cp_img: corner points in pixel space.
        ret, cp_img = cv2.findChessboardCorners(gray_img, (w, h), None)
        # if ret is True, save.
        if ret:
            # cv2.cornerSubPix(gray_img, cp_img, (11, 11), (-1, -1), criteria)
            obj_points.append(cp_world)
            img_points.append(cp_img)
            # view the corners
            cv2.drawChessboardCorners(img, (w, h), cp_img, ret)
            cv2.imshow('FoundCorners', img)
            cv2.waitKey(10)
    cv2.destroyAllWindows()
    if not obj_points:
        raise RuntimeError('No chessboard corners were found in %s' % img_dir)

    # calibrate the camera
    ret, mat_inter, coff_dis, v_rot, v_trans = cv2.calibrateCamera(
        obj_points, img_points, img_size, None, None)
    print("ret:", ret)  # overall RMS reprojection error
    print("internal matrix:\n", mat_inter)
    # in the form of (k_1, k_2, p_1, p_2, k_3)
    print("distortion coefficients:\n", coff_dis)
    print("rotation vectors:\n", v_rot)
    print("translation vectors:\n", v_trans)

    # calculate the reprojection error
    total_error = 0
    for i in range(len(obj_points)):
        img_points_repro, _ = cv2.projectPoints(obj_points[i], v_rot[i], v_trans[i], mat_inter, coff_dis)
        error = cv2.norm(img_points[i], img_points_repro, cv2.NORM_L2) / len(img_points_repro)
        total_error += error
    print("Average Error of Reproject:", total_error / len(obj_points))
    return mat_inter, coff_dis


def dedistortion(img_dir, img_type, save_dir, mat_inter, coff_dis):
    images = glob.glob(os.path.join(img_dir, '*.' + img_type))
    for fname in images:
        img_name = os.path.basename(fname)
        img = cv2.imread(fname)
        if img is None:
            continue
        # image size in pixels (not the corner-grid size)
        h, w = img.shape[:2]
        # alpha = 0 (free scaling parameter): keep only valid pixels in the result
        newcameramtx, roi = cv2.getOptimalNewCameraMatrix(mat_inter, coff_dis, (w, h), 0, (w, h))
        dst = cv2.undistort(img, mat_inter, coff_dis, None, newcameramtx)
        # clip the image
        # x, y, w, h = roi
        # dst = dst[y:y + h, x:x + w]
        cv2.imwrite(os.path.join(save_dir, img_name), dst)
    print('Dedistorted images have been saved to: %s successfully.' % save_dir)


if __name__ == '__main__':
    inter_corner_shape = (11, 8)
    size_per_grid = 0.02
    img_dir = "C://Users//Garfield//Desktop//pic//IR_camera_calib_img"
    img_type = "png"
    # calibrate the camera
    mat_inter, coff_dis = calib(inter_corner_shape, size_per_grid, img_dir, img_type)
    # dedistort and save the dedistortion result.
    save_dir = "C://Users//Garfield//Desktop//pic//save_dedistortion"
    os.makedirs(save_dir, exist_ok=True)
    dedistortion(img_dir, img_type, save_dir, mat_inter, coff_dis)
```

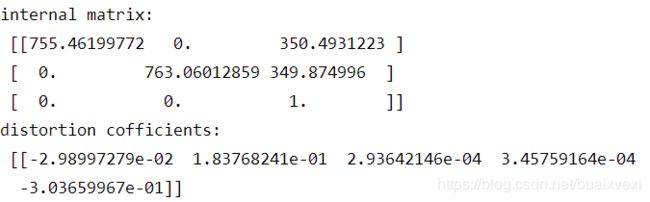

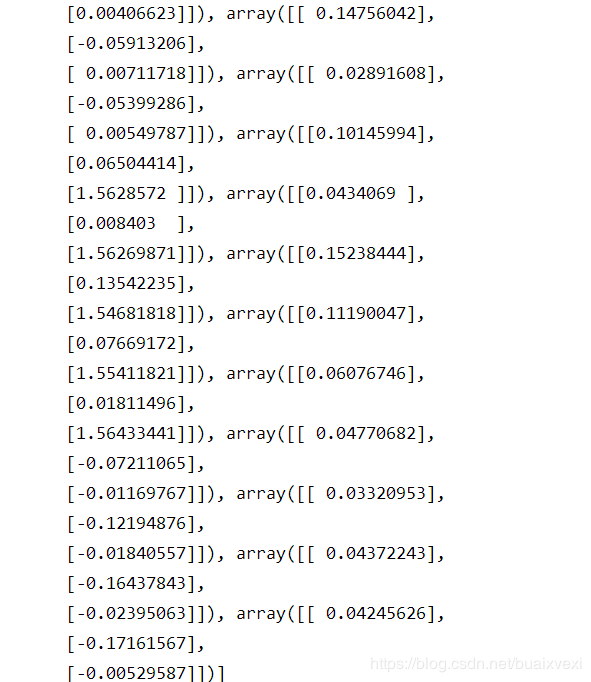

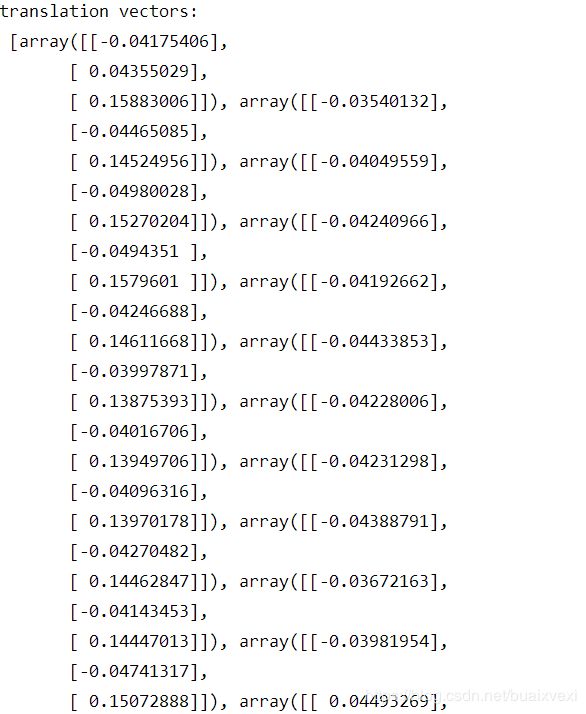
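The IR script corrects whole images with `cv2.undistort`. For individual points, such as detected corners, the distortion model has to be inverted instead. Below is a minimal NumPy sketch, assuming OpenCV's five-coefficient (k1, k2, p1, p2, k3) model, that applies the model forward on normalized coordinates and inverts it by fixed-point iteration, similar in spirit to `cv2.undistortPoints`. The function names and the iteration count are assumptions, and the output reuses the original K rather than a matrix from `cv2.getOptimalNewCameraMatrix`.

```python
import numpy as np

def distort_normalized(xy, dist):
    """Apply radial (k1, k2, k3) and tangential (p1, p2) distortion to normalized coords."""
    k1, k2, p1, p2, k3 = dist
    x, y = xy[:, 0], xy[:, 1]
    r2 = x * x + y * y
    radial = 1 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    xd = x * radial + 2 * p1 * x * y + p2 * (r2 + 2 * x * x)
    yd = y * radial + p1 * (r2 + 2 * y * y) + 2 * p2 * x * y
    return np.column_stack([xd, yd])

def undistort_pixels(uv, K, dist, iterations=20):
    """Map distorted pixel coordinates to ideal pixel coordinates (same K)."""
    k1, k2, p1, p2, k3 = dist
    uv1 = np.column_stack([uv, np.ones(len(uv))])
    xd = (np.linalg.inv(K) @ uv1.T).T[:, :2]   # distorted normalized coordinates
    xy = xd.copy()                              # initial guess: no distortion
    for _ in range(iterations):
        x, y = xy[:, 0], xy[:, 1]
        r2 = x * x + y * y
        radial = 1 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
        dx = 2 * p1 * x * y + p2 * (r2 + 2 * x * x)
        dy = p1 * (r2 + 2 * y * y) + 2 * p2 * x * y
        # Solve xd = x * radial + dx for x, holding radial and dx at the current guess
        xy = np.column_stack([(xd[:, 0] - dx) / radial, (xd[:, 1] - dy) / radial])
    return (K @ np.column_stack([xy, np.ones(len(xy))]).T).T[:, :2]
```

For mild distortion the iteration contracts quickly; for strong fisheye-like distortion this simple scheme may need more iterations or may not converge.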

3. Experimental Results:


Intrinsic matrix:

Rotation vectors:

Translation vectors:

The average reprojection error is approximately 0.0409.
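The script's "Average Error of Reproject" is not the same quantity as `ret`. For each view it divides the L2 norm of all residuals by the number of points N, which equals that view's RMS error divided by √N, and then averages over views; `ret` from `cv2.calibrateCamera` is documented as the overall RMS reprojection error. The hypothetical helper below computes both from matching lists of observed and reprojected corners, so the two numbers can be compared directly.

```python
import numpy as np

def reprojection_errors(observed, projected):
    """Return (script-style mean of per-view norm/N, overall RMS over all points).
    observed, projected: lists of (N, 2) or (N, 1, 2) pixel arrays, one per view."""
    per_view = []
    sq_sum, n_total = 0.0, 0
    for obs, proj in zip(observed, projected):
        d = np.asarray(obs, float).reshape(-1, 2) - np.asarray(proj, float).reshape(-1, 2)
        per_view.append(np.linalg.norm(d) / len(d))  # like cv2.norm(..., NORM_L2) / N
        sq_sum += float((d ** 2).sum())
        n_total += len(d)
    mean_of_views = sum(per_view) / len(per_view)    # what the script prints
    rms = np.sqrt(sq_sum / n_total)                  # RMS over every point
    return mean_of_views, rms

# One view with 4 points, each off by (3, 4) pixels, i.e. 5 px per point
m, r = reprojection_errors([np.zeros((4, 2))], [np.tile([3.0, 4.0], (4, 1))])
```

In this toy case every point is 5 px off, yet the script-style metric reports 2.5 (= 5 / √4) while the RMS is 5, so the script's number should not be read as a per-point pixel error.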

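4. Code Walkthrough

Calibration:

Drawing the detected corners (optional): cv2.drawChessboardCorners

image = cv.drawChessboardCorners(image, patternSize, corners, patternWasFound)

Running the calibration and computing the parameters: cv2.calibrateCamera

retval, cameraMatrix, distCoeffs, rvecs, tvecs = cv.calibrateCamera(objectPoints, imagePoints, imageSize, cameraMatrix, distCoeffs[, rvecs[, tvecs[, flags[, criteria]]]])

Parameters of calibrateCamera(obj_points, img_points, size, None, None) as called in the scripts:

Inputs:

object_points: the corner points in the world coordinate system (obj_points in the code).

image_points: the corresponding corner points in the image (img_points in the code).

size: the image size (width, height), needed to compute the intrinsic matrix and the distortion coefficients. The two trailing None arguments mean that no initial guess is provided for the intrinsic matrix or the distortion coefficients.

Outputs:

retval (ret in the code): the overall RMS reprojection error.

mtx: the intrinsic matrix (mat_inter in the code).

dist: the distortion coefficients, in the order (k1, k2, p1, p2, k3) (coff_dis in the code).

rvecs: the rotation vectors, one per image (v_rot in the code).

tvecs: the translation vectors, one per image (v_trans in the code).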
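The rvecs are rotation vectors (unit axis times angle), not matrices. OpenCV converts them with `cv2.Rodrigues`, which returns the 3x3 matrix together with a Jacobian. The NumPy sketch below implements the same Rodrigues formula, R = I + sin θ [k]× + (1 − cos θ) [k]×², to show what that conversion does; `rodrigues_to_matrix` is a hypothetical name.

```python
import numpy as np

def rodrigues_to_matrix(rvec):
    """Convert a rotation vector (axis * angle, as in rvecs) into a 3x3 rotation matrix."""
    rvec = np.asarray(rvec, dtype=float).reshape(3)
    theta = np.linalg.norm(rvec)
    if theta < 1e-12:
        return np.eye(3)                     # no rotation
    k = rvec / theta                         # unit rotation axis
    Kx = np.array([[0.0, -k[2], k[1]],       # cross-product matrix [k]x
                   [k[2], 0.0, -k[0]],
                   [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(theta) * Kx + (1 - np.cos(theta)) * (Kx @ Kx)

# A 90-degree rotation about the camera's z axis
R = rodrigues_to_matrix([0.0, 0.0, np.pi / 2])
```

Together with the matching tvec, this R maps a board point X into camera coordinates as R @ X + t, i.e. the extrinsic step from the principle section.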