Deep Learning with Python Series Notes (Part 4): Visualizing What Convnets Learn

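Visualizing intermediate activations means displaying the feature maps that the various convolution and pooling layers of a network output for a given input. (The output of a layer is often called its "activation", meaning the output of its activation function.) This shows how an input is decomposed into the different filters learned by the network. The feature maps we want to visualize have three dimensions: width, height, and depth (channels). Each channel encodes relatively independent features. The proper way to visualize these feature maps is therefore to plot the contents of every channel independently, as a 2D image.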

```python
>>> from keras.models import load_model
>>> model = load_model('cats_and_dogs_small_2.h5')
>>> model.summary()  # As a reminder.
_________________________________________________________________
Layer (type)                    Output Shape            Param #
=================================================================
conv2d_5 (Conv2D)               (None, 148, 148, 32)    896
_________________________________________________________________
maxpooling2d_5 (MaxPooling2D)   (None, 74, 74, 32)      0
_________________________________________________________________
conv2d_6 (Conv2D)               (None, 72, 72, 64)      18496
_________________________________________________________________
maxpooling2d_6 (MaxPooling2D)   (None, 36, 36, 64)      0
_________________________________________________________________
conv2d_7 (Conv2D)               (None, 34, 34, 128)     73856
_________________________________________________________________
maxpooling2d_7 (MaxPooling2D)   (None, 17, 17, 128)     0
_________________________________________________________________
conv2d_8 (Conv2D)               (None, 15, 15, 128)     147584
_________________________________________________________________
maxpooling2d_8 (MaxPooling2D)   (None, 7, 7, 128)       0
_________________________________________________________________
flatten_2 (Flatten)             (None, 6272)            0
_________________________________________________________________
dropout_1 (Dropout)             (None, 6272)            0
_________________________________________________________________
dense_3 (Dense)                 (None, 512)             3211776
_________________________________________________________________
dense_4 (Dense)                 (None, 1)               513
=================================================================
Total params: 3,453,121
Trainable params: 3,453,121
Non-trainable params: 0
```
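The output shapes and parameter counts in the summary follow from simple arithmetic:

- A 3×3 convolution without padding shrinks each spatial side by 2.
- A 2×2 max pooling halves each side, rounding down.
- A Conv2D layer has `kernel*kernel*in_channels*filters + filters` parameters: the weights plus one bias per filter.

The sketch below re-derives the table. It assumes, consistently with the shapes shown, 150×150×3 inputs, 3×3 kernels with "valid" padding, and 2×2 pooling. `conv_params`, `dense_params`, and `summarize` are illustrative helpers, not Keras functions.

```python
def conv_params(kernel, in_ch, filters):
    """Weights (kernel*kernel*in_ch per filter) plus one bias per filter."""
    return kernel * kernel * in_ch * filters + filters


def dense_params(in_units, out_units):
    """Weight matrix plus one bias per output unit."""
    return in_units * out_units + out_units


def summarize(input_size=150, in_ch=3, filters=(32, 64, 128, 128), kernel=3):
    size, ch = input_size, in_ch
    rows, total = [], 0
    for f in filters:
        size = size - (kernel - 1)       # 'valid' 3x3 conv: each side shrinks by 2
        p = conv_params(kernel, ch, f)
        rows.append(("Conv2D", (size, size, f), p))
        total += p
        ch = f
        size = size // 2                 # 2x2 max pooling: each side halves, rounding down
        rows.append(("MaxPooling2D", (size, size, ch), 0))
    flat = size * size * ch              # Flatten (Dropout keeps the same shape)
    rows.append(("Flatten", (flat,), 0))
    for units in (512, 1):
        p = dense_params(flat, units)
        rows.append(("Dense", (units,), p))
        total += p
        flat = units
    return rows, total


rows, total = summarize()
for name, shape, params in rows:
    print(f"{name:<14}{str(shape):<18}{params}")
print("Total params:", total)
```

Most of the parameters sit in the first Dense layer (6272 × 512 weights), which is typical for small convnets that flatten before classifying.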

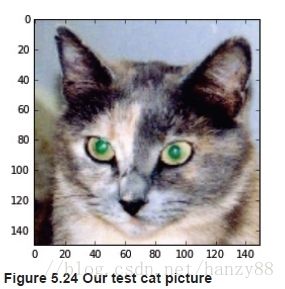

We'll use a picture of a cat as the input image, one that is not part of the images the network was trained on.

```python
img_path = '/Users/fchollet/Downloads/cats_and_dogs_small/test/cats/cat.1700.jpg'

# We preprocess the image into a 4D tensor
from keras.preprocessing import image
import numpy as np

img = image.load_img(img_path, target_size=(150, 150))
img_tensor = image.img_to_array(img)
img_tensor = np.expand_dims(img_tensor, axis=0)
# Remember that the model was trained on inputs
# that were preprocessed in the following way:
img_tensor /= 255.

# Its shape is (1, 150, 150, 3)
print(img_tensor.shape)
```

Displaying the test picture:

```python
import matplotlib.pyplot as plt

plt.imshow(img_tensor[0])
plt.show()
```

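To extract the feature maps we want to look at, we'll create a Keras model that takes batches of images as input and outputs the activations of all convolution and pooling layers. For this, we'll use the Keras `Model` class. A model is instantiated with two arguments: an input tensor (or list of input tensors) and an output tensor (or list of output tensors). The result is a Keras model that maps the specified inputs to the specified outputs, just like the `Sequential` models you're familiar with. What sets the `Model` class apart is that, unlike `Sequential`, it allows models with multiple outputs.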

```python
from keras import models

# Extracts the outputs of the first 8 layers (4 Conv2D + 4 MaxPooling2D):
layer_outputs = [layer.output for layer in model.layers[:8]]
# Creates a model that will return these outputs, given the model input:
activation_model = models.Model(inputs=model.input, outputs=layer_outputs)
```

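When fed an image, this model returns the values of the layer activations in the original model. This is the first multi-output model we've encountered: until now, the models we've seen had exactly one input and one output. In general, a model can have any number of inputs and outputs. This one has one input and eight outputs, one per layer activation.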
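The idea behind a model that returns every intermediate activation can be shown without Keras. Treat the network as a list of layer functions and record each layer's output as the input flows through. In the minimal NumPy sketch below, the layers (a ReLU and a 2×2 max pooling) are illustrative stand-ins, not the cat/dog model. `run_with_activations` is a hypothetical helper that plays the role of `activation_model.predict`.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0)


def max_pool_2x2(x):
    """2x2 max pooling on a (batch, h, w, c) array; an odd trailing row/column is dropped."""
    b, h, w, c = x.shape
    x = x[:, : h // 2 * 2, : w // 2 * 2, :]
    return x.reshape(b, h // 2, 2, w // 2, 2, c).max(axis=(2, 4))


def run_with_activations(layers, x):
    """Apply the layers in order and keep every layer's output, like a multi-output model."""
    activations = []
    for layer in layers:
        x = layer(x)
        activations.append(x)
    return activations


layers = [relu, max_pool_2x2, relu, max_pool_2x2]
x = np.random.default_rng(0).normal(size=(1, 9, 9, 4))
acts = run_with_activations(layers, x)
print([a.shape for a in acts])
```

As with the Keras version, the result is a list with one array per layer, in layer order.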

```python
# This will return a list of 8 Numpy arrays:
# one array per layer activation
activations = activation_model.predict(img_tensor)
```

For instance, this is the activation of the first convolution layer for our cat image input:

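```python
>>> first_layer_activation = activations[0]
>>> print(first_layer_activation.shape)
(1, 148, 148, 32)
```

```python
import matplotlib.pyplot as plt

# Plot the channel at index 4 of the first layer's activation
plt.matshow(first_layer_activation[0, :, :, 4], cmap='viridis')
plt.show()
```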

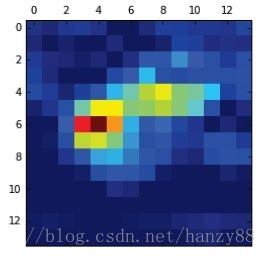

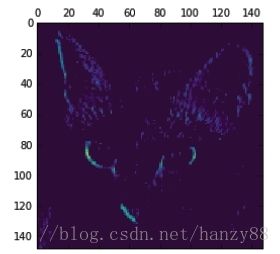

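This channel appears to encode a diagonal edge detector. Let's try the channel at index 7. Note that your own channels may differ, because the specific filters learned by convolution layers aren't deterministic.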

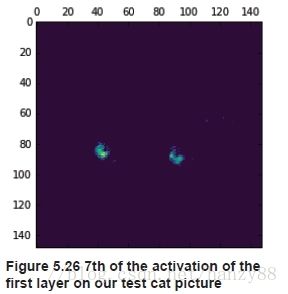

plt.matshow(first_layer_activation[0, :, :, 7], cmap='viridis')

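This one looks like a "bright green dot" detector, useful for encoding cat eyes. At this point, let's plot a complete visualization of all the activations in the network. We'll extract and plot every channel in each of the eight activation maps. The results are stacked into one big image tensor, with the channels displayed side by side.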

Visualizing every channel in every intermediate activation

```python
# These are the names of the layers, so we can have them as part of our plot
layer_names = []
for layer in model.layers[:8]:
    layer_names.append(layer.name)

images_per_row = 16

# Now let's display our feature maps
for layer_name, layer_activation in zip(layer_names, activations):
    # This is the number of features in the feature map
    n_features = layer_activation.shape[-1]

    # The feature map has shape (1, size, size, n_features)
    size = layer_activation.shape[1]

    # We will tile the activation channels in this matrix
    n_cols = n_features // images_per_row
    display_grid = np.zeros((size * n_cols, images_per_row * size))

    # We'll tile each filter into this big horizontal grid
    for col in range(n_cols):
        for row in range(images_per_row):
            # .copy() so the post-processing below doesn't overwrite `activations`
            channel_image = layer_activation[0,
                                             :, :,
                                             col * images_per_row + row].copy()
            # Post-process the feature to make it visually palatable
            channel_image -= channel_image.mean()
            channel_image /= (channel_image.std() + 1e-5)  # epsilon avoids 0/0 on blank channels
            channel_image *= 64
            channel_image += 128
            channel_image = np.clip(channel_image, 0, 255).astype('uint8')
            display_grid[col * size : (col + 1) * size,
                         row * size : (row + 1) * size] = channel_image

    # Display the grid
    scale = 1. / size
    plt.figure(figsize=(scale * display_grid.shape[1],
                        scale * display_grid.shape[0]))
    plt.title(layer_name)
    plt.grid(False)
    plt.imshow(display_grid, aspect='auto', cmap='viridis')

plt.show()
```

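There are a few things to note here:

- The first layer acts as a collection of various edge detectors. At that stage, the activations retain almost all of the information present in the initial picture.

- As you go deeper, the activations become increasingly abstract and less visually interpretable. They begin to encode higher-level concepts such as "cat ear" and "cat eye". Higher representations carry less and less information about the visual contents of the image, and more and more information related to the class of the image.

- The sparsity of the activations increases with the depth of the layer. In the first layer, all filters are activated by the input image, but in the following layers more and more filters are blank. A blank filter means that the pattern it encodes isn't found in the input image.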
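One way to put a number on the sparsity observation is to count, for each layer, the fraction of channels whose activation map is entirely zero for the given image. The helper below is a hypothetical sketch, not a Keras function. It is written for the `(1, size, size, n_features)` arrays returned by `activation_model.predict`, and is demonstrated here on synthetic arrays. The `tol` threshold is an assumption you may want to tune.

```python
import numpy as np


def blank_channel_fraction(layer_activation, tol=0.0):
    """Fraction of channels whose activation map is (almost) entirely zero.

    layer_activation: array of shape (1, height, width, n_features).
    A channel counts as blank if its maximum absolute value is <= tol.
    """
    per_channel_max = np.abs(layer_activation[0]).max(axis=(0, 1))
    return float(np.mean(per_channel_max <= tol))


rng = np.random.default_rng(0)
shallow = np.maximum(rng.normal(size=(1, 8, 8, 32)), 0)  # every channel fires somewhere
deep = shallow.copy()
deep[..., :24] = 0.0                                       # 24 of 32 channels silenced
print(blank_channel_fraction(shallow), blank_channel_fraction(deep))
```

With the real model, `[blank_channel_fraction(a) for a in activations]` would give one fraction per entry of `layer_names`. Under the observation above, the fractions would tend to grow with depth.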

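We have just demonstrated an important, universal characteristic of the representations learned by deep neural networks: the features extracted by a layer become increasingly abstract with the depth of the layer. The activations of deeper layers carry less and less information about the specific input being seen, and more and more information about the target (in our case, the class of the image: cat or dog). A deep neural network effectively acts as an information distillation pipeline. Raw data goes in (in our case, RGB pictures) and is repeatedly transformed so that irrelevant information is filtered out (such as the specific visual appearance of the image) while useful information is magnified and refined (such as the class of the image).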

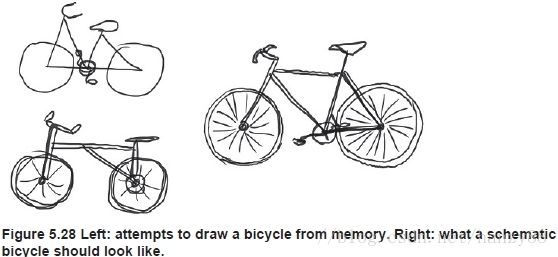

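This is analogous to the way humans and animals perceive the world. After observing a scene for a few seconds, a person can remember which abstract objects were present in it (a bicycle, a tree) but not the specific appearance of these objects. In fact, if you tried to draw a generic bicycle from memory right now, you very likely couldn't get it right, even though you've seen thousands of bicycles in your lifetime. Try it: the effect is absolutely real. Your brain has learned to completely abstract its visual input, transforming it into high-level visual concepts while filtering out irrelevant visual details. This makes it very hard to remember what the things around us actually look like.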

Visualizing convnet filters

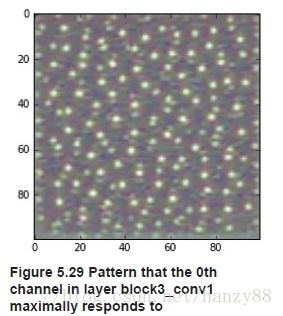

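Another easy way to inspect the filters learned by convnets is to display the visual pattern that each filter responds to. This can be done with gradient ascent in input space. Starting from a nearly blank input image (gray, with a little noise), we repeatedly adjust the values of the convnet's input image so as to maximize the response of a specific filter. The resulting input image is one to which the chosen filter responds maximally.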

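The process is simple. First, build a loss function that maximizes the value of a given filter in a given convolution layer. Then use gradient ascent to adjust the values of the input image so as to maximize this activation value. For instance, here is a loss for the activation of filter 0 in the layer `block3_conv1` of the VGG16 network, pretrained on ImageNet: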

```python
from keras.applications import VGG16
from keras import backend as K

model = VGG16(weights='imagenet',
              include_top=False)

layer_name = 'block3_conv1'
filter_index = 0

layer_output = model.get_layer(layer_name).output
loss = K.mean(layer_output[:, :, :, filter_index])
```

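To implement gradient ascent, we need the gradient of this loss with respect to the model's input. To compute it, we'll use the `gradients` function packaged with the Keras `backend` module.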

```python
# The call to `gradients` returns a list of tensors (of size 1 in this case)
# hence we only keep the first element -- which is a tensor.
grads = K.gradients(loss, model.input)[0]
```

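A non-obvious trick that helps the gradient-ascent process go smoothly is to normalize the gradient tensor. We divide it by the square root of the mean of its squared values, i.e. its root mean square. (The true L2 norm is this value times the square root of the number of elements, so the two differ only by a constant factor.) This ensures that the magnitude of the updates applied to the input image always stays within the same range.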

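```python
# We add 1e-5 before dividing so as to avoid accidentally dividing by 0.
grads /= (K.sqrt(K.mean(K.square(grads))) + 1e-5)

# `iterate` takes a list holding one NumPy input batch and returns
# a list of two NumPy arrays: the loss value and the gradient value.
iterate = K.function([model.input], [loss, grads])

# Let's test it:
import numpy as np
loss_value, grads_value = iterate([np.zeros((1, 150, 150, 3))])
```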
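The normalization divides the gradient by its root mean square. Whatever the raw gradient magnitude, each update then has an RMS of about 1, times `step`. The NumPy sketch below mirrors the `K.sqrt(K.mean(K.square(grads))) + 1e-5` expression on random stand-in gradients of very different scales. It also checks the constant-factor relation to the true L2 norm. `normalize_gradient` is an illustrative name.

```python
import numpy as np


def normalize_gradient(grads, eps=1e-5):
    """Divide by the gradient's root mean square (same formula as the Keras code)."""
    return grads / (np.sqrt(np.mean(np.square(grads))) + eps)


rng = np.random.default_rng(0)
normalized_rms = {}
for scale in (1e-2, 1.0, 1e4):
    g = rng.normal(size=(1, 150, 150, 3)) * scale
    normalized_rms[scale] = float(np.sqrt(np.mean(normalize_gradient(g) ** 2)))
print(normalized_rms)  # all close to 1, whatever the raw scale

# RMS and L2 norm differ only by the constant factor sqrt(N):
g = rng.normal(size=(4, 5))
rms = np.sqrt(np.mean(g ** 2))
assert np.isclose(np.linalg.norm(g), rms * np.sqrt(g.size))
```

The `eps` term only matters when the raw gradient is tiny (an RMS near 1e-5 or below). In that case it keeps the update from being blown up to full size.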

Now we can define a Python loop that performs gradient ascent on the input image to maximize the loss:

```python
# We start from a gray image with some noise
input_img_data = np.random.random((1, 150, 150, 3)) * 20 + 128.

# Run gradient ascent for 40 steps
step = 1.  # this is the magnitude of each gradient update
for i in range(40):
    # Compute the loss value and gradient value
    loss_value, grads_value = iterate([input_img_data])
    # Here we adjust the input image in the direction that maximizes the loss
    input_img_data += grads_value * step
```
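Why does gradient ascent recover the pattern a filter responds to? Consider a stand-in "filter" whose response is linear in the input, `loss = mean(x * w)` for a fixed pattern `w`. Its gradient is `w / x.size`, so after normalization every step pushes the image toward `w`. This NumPy-only toy follows the same recipe as the loop above: gray noise to start, 40 steps, step size 1. The pattern `w` and the linear loss are assumptions for illustration. Real VGG16 filters are nonlinear, and their gradients come from `K.gradients`.

```python
import numpy as np

rng = np.random.default_rng(0)
w = rng.normal(size=(1, 32, 32, 3))  # hypothetical pattern the toy "filter" detects


def loss_and_grads(x):
    loss = np.mean(x * w)             # linear stand-in for a filter's mean activation
    grads = w / x.size                # exact gradient of the loss w.r.t. x
    grads = grads / (np.sqrt(np.mean(np.square(grads))) + 1e-5)  # same normalization trick
    return loss, grads


input_img_data = rng.random((1, 32, 32, 3)) * 20 + 128.  # gray image with some noise
step = 1.
losses = []
for i in range(40):
    loss_value, grads_value = loss_and_grads(input_img_data)
    losses.append(loss_value)
    input_img_data += grads_value * step

# How closely does the final image resemble the pattern w?
corr = np.corrcoef(input_img_data.ravel(), w.ravel())[0, 1]
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}, correlation with w: {corr:.3f}")
```

In the toy, the loss rises at every step and the final image ends up strongly correlated with `w`. With a real convnet filter the same loop converges to a less obvious pattern, which is what the visualizations that follow display.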

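The resulting image tensor is a floating-point tensor of shape (1, 150, 150, 3), whose values are not necessarily integers within [0, 255]. We therefore need to post-process this tensor to turn it into a displayable image. We do so with the following simple utility function.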

Utility function to convert a tensor into a valid image

```python
def deprocess_image(x):
    # normalize tensor: center on 0., ensure std is 0.1
    x -= x.mean()
    x /= (x.std() + 1e-5)
    x *= 0.1

    # clip to [0, 1]
    x += 0.5
    x = np.clip(x, 0, 1)

    # convert to RGB array
    x *= 255
    x = np.clip(x, 0, 255).astype('uint8')
    return x
```
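The constants in `deprocess_image` determine which values survive clipping. After centering and scaling the standard deviation to 0.1, adding 0.5 puts the mean at mid-gray. A value then falls outside [0, 1] only if it lies more than 5 standard deviations from the mean. The sketch below re-declares the same steps so it runs on its own, with one change: it adds a float copy, because the original modifies its argument in place. It checks the behavior on a small hand-made array with one large outlier.

```python
import numpy as np


def deprocess_image(x):
    x = np.array(x, dtype='float64')  # float copy: the caller's array is left untouched
    x -= x.mean()
    x /= (x.std() + 1e-5)             # std is now ~1
    x *= 0.1                          # std 0.1
    x += 0.5                          # mean 0.5, i.e. mid-gray
    x = np.clip(x, 0, 1)              # only values beyond +/-5 std get clipped
    x *= 255
    return np.clip(x, 0, 255).astype('uint8')


raw = np.zeros(100)
raw[0] = 1000.0                       # a single outlier, roughly 10 std above the mean
out = deprocess_image(raw)
print(out[:3], out.dtype)
```

The outlier saturates at 255, while the remaining values sit just below mid-gray (around 124 to 125).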

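Now that we have all the pieces, let's put them together into a Python function. It takes a layer name and a filter index as input, and returns a valid image tensor representing the pattern that maximizes the activation of the specified filter.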

Putting it all together: a function to generate filter visualizations

```python
def generate_pattern(layer_name, filter_index, size=150):
    # Build a loss function that maximizes the activation
    # of the nth filter of the layer considered.
    layer_output = model.get_layer(layer_name).output
    loss = K.mean(layer_output[:, :, :, filter_index])

    # Compute the gradient of the input picture wrt this loss
    grads = K.gradients(loss, model.input)[0]

    # Normalization trick: we normalize the gradient
    grads /= (K.sqrt(K.mean(K.square(grads))) + 1e-5)

    # This function returns the loss and grads given the input picture
    iterate = K.function([model.input], [loss, grads])

    # We start from a gray image with some noise
    input_img_data = np.random.random((1, size, size, 3)) * 20 + 128.

    # Run gradient ascent for 40 steps
    step = 1.
    for i in range(40):
        loss_value, grads_value = iterate([input_img_data])
        input_img_data += grads_value * step

    img = input_img_data[0]
    return deprocess_image(img)
```

Visualising the response patterns of filter 0 of block3_conv1

```python
>>> plt.imshow(generate_pattern('block3_conv1', 0))
```

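It seems that filter 0 in layer `block3_conv1` responds to a polka-dot pattern. Now the fun part: we can start visualizing every filter in every layer. For simplicity, we'll look only at the first 64 filters in each layer, and only at the first layer of each convolution block (`block1_conv1`, `block2_conv1`, `block3_conv1`, `block4_conv1`, `block5_conv1`). We'll arrange the outputs on an 8×8 grid of 64×64 filter patterns, with some black margins between the patterns.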

Generating a grid of all filter response patterns in a layer

```python
# Run once per layer: block1_conv1, block2_conv1, ..., block5_conv1
layer_name = 'block1_conv1'
size = 64
margin = 5

# This is an empty (black) image where we will store our results.
# uint8 matches the output of `deprocess_image`, so imshow reads the values as 0-255.
results = np.zeros((8 * size + 7 * margin, 8 * size + 7 * margin, 3), dtype='uint8')

for i in range(8):  # iterate over the rows of our results grid
    for j in range(8):  # iterate over the columns of our results grid
        # Generate the pattern for filter `i + (j * 8)` in `layer_name`
        filter_img = generate_pattern(layer_name, i + (j * 8), size=size)

        # Put the result in the square `(i, j)` of the results grid
        horizontal_start = i * size + i * margin
        horizontal_end = horizontal_start + size
        vertical_start = j * size + j * margin
        vertical_end = vertical_start + size
        results[horizontal_start: horizontal_end,
                vertical_start: vertical_end, :] = filter_img

# Display the results grid
plt.figure(figsize=(20, 20))
plt.imshow(results)
plt.show()
```
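The grid arithmetic generalizes. An n×n grid of tiles with side `size`, separated by `margin` pixels, needs `n*size + (n-1)*margin` pixels per side: 8*64 + 7*5 = 547 here. The helper below is a hypothetical NumPy sketch, not part of the original code. It assembles any list of equally sized uint8 tiles into such a grid and fills it row by row. The original loop instead places filter `i + j*8` at row `i`, column `j`, i.e. column by column; either order works.

```python
import numpy as np


def make_grid(tiles, n_rows, n_cols, margin=5):
    """Tile equally sized (size, size, 3) uint8 images into one grid with black margins.

    Tiles are placed row by row: tile k goes to row k // n_cols, column k % n_cols.
    """
    size = tiles[0].shape[0]
    height = n_rows * size + (n_rows - 1) * margin
    width = n_cols * size + (n_cols - 1) * margin
    grid = np.zeros((height, width, 3), dtype='uint8')
    for k, tile in enumerate(tiles[: n_rows * n_cols]):
        r, c = divmod(k, n_cols)
        top, left = r * (size + margin), c * (size + margin)
        grid[top: top + size, left: left + size] = tile
    return grid


# 64 flat-colored stand-in tiles; tile k has value k + 1
tiles = [np.full((64, 64, 3), k + 1, dtype='uint8') for k in range(64)]
grid = make_grid(tiles, 8, 8)
print(grid.shape)  # (547, 547, 3)
```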

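These filter visualizations tell us a lot about how convnet layers see the world. Each layer in a convnet learns a collection of filters such that its inputs can be expressed as a combination of those filters. This is similar to how the Fourier transform decomposes signals onto a bank of cosine functions. The filters in these convnet filter banks become increasingly complex and refined as you go deeper into the model: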

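- The filters from the first layer in the model (`block1_conv1`) encode simple directional edges and colors (or, in some cases, colored edges).

- The filters from `block2_conv1` encode simple textures made from combinations of edges and colors.

- The filters in higher layers begin to resemble textures found in natural images: feathers, eyes, leaves, and so on.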

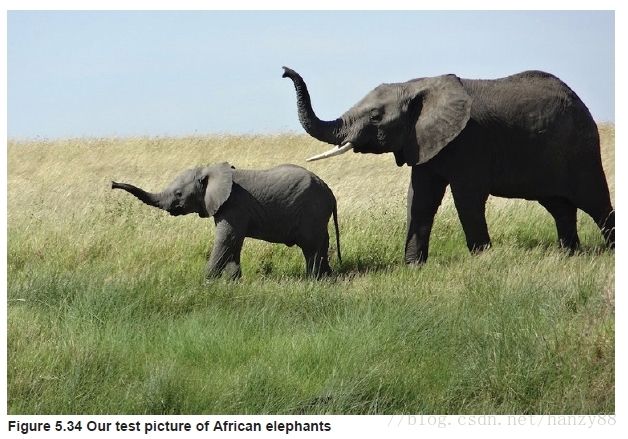

Visualizing heatmaps of class activation

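We'll introduce one more visualization technique, one that helps us understand which parts of a given image led a convnet to its final classification decision. This is useful for "debugging" the decision process of a convnet, particularly when it makes a classification mistake. It also lets you locate specific objects in an image.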

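This general category of techniques is called class activation map (CAM) visualization. It consists of producing heatmaps of class activation over input images. A class activation heatmap is a 2D grid of scores associated with a specific output class. A score is computed for every location in the input image and indicates how important that location is with respect to the class under consideration. For instance, given an image fed into a cat-vs-dog convnet, CAM visualization lets us generate a heatmap for the class "cat" over that image.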

Loading the VGG16 network with pre-trained weights

```python
from keras.applications.vgg16 import VGG16

# Note that we are including the densely-connected classifier on top;
# all previous times, we were discarding it.
model = VGG16(weights='imagenet')
```

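Next, we convert the image into something the VGG16 model can read. The model was trained on 224×224 images, preprocessed according to a few rules that are packaged in the function `keras.applications.vgg16.preprocess_input`. So we need to load the image, resize it to 224×224, convert it to a NumPy float32 tensor, and apply these preprocessing rules.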

Pre-processing an input image for VGG16

```python
from keras.preprocessing import image
from keras.applications.vgg16 import preprocess_input, decode_predictions
import numpy as np

# The local path to our target image
img_path = '/Users/fchollet/Downloads/creative_commons_elephant.jpg'

# `img` is a PIL image of size 224x224
img = image.load_img(img_path, target_size=(224, 224))

# `x` is a float32 Numpy array of shape (224, 224, 3)
x = image.img_to_array(img)

# We add a dimension to transform our array into a "batch"
# of size (1, 224, 224, 3)
x = np.expand_dims(x, axis=0)

# Finally we preprocess the batch
# (this does channel-wise color normalization)
x = preprocess_input(x)
```
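For reference, VGG16's `preprocess_input` uses what Keras calls the "caffe" mode. It reverses the channel order from RGB to BGR and subtracts the per-channel ImageNet mean pixel, without rescaling to [0, 1]. The sketch below reimplements that for illustration only. `vgg16_style_preprocess` is a hypothetical name, and the mean values (103.939, 116.779, 123.68 in BGR order) are restated from the Keras source rather than taken from this text. In real code, keep calling `preprocess_input`.

```python
import numpy as np

# ImageNet mean pixel in BGR order, as used by the Keras "caffe" preprocessing mode
IMAGENET_MEAN_BGR = np.array([103.939, 116.779, 123.68], dtype='float32')


def vgg16_style_preprocess(batch_rgb):
    """Illustrative caffe-style preprocessing for a (batch, h, w, 3) RGB array."""
    bgr = batch_rgb[..., ::-1].astype('float32')  # RGB -> BGR
    return bgr - IMAGENET_MEAN_BGR                # zero-center each channel, no scaling


pixel = np.array([[[[255.0, 0.0, 0.0]]]])        # one pure-red pixel, shape (1, 1, 1, 3)
out = vgg16_style_preprocess(pixel)
print(out)
```

The red value ends up in the last channel, which is why a pure-red pixel produces a large positive third component.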

Then we can run the pretrained network on the image and decode its prediction vector into a human-readable format:

```python
>>> preds = model.predict(x)
>>> print('Predicted:', decode_predictions(preds, top=3)[0])
('Predicted:', [(u'n02504458', u'African_elephant', 0.92546833),
                (u'n01871265', u'tusker', 0.070257246),
                (u'n02504013', u'Indian_elephant', 0.0042589349)])
```

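The top three classes predicted for this image are:

- African elephant (with 92.5% probability)

- Tusker (with 7.0% probability)

- Indian elephant (with 0.4% probability)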

The most strongly activated entry in the prediction vector is the one for the "African elephant" class, at index 386:

```python
>>> np.argmax(preds[0])
386
```
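Decoding the predictions comes down to sorting the class probabilities and keeping the top indices, while `np.argmax` returns the single top index directly. The sketch below shows that sorting step on a toy five-class probability vector. `top_k` and the class names are made up for illustration; the real `decode_predictions` maps indices to names from the ImageNet class index.

```python
import numpy as np


def top_k(probs, class_names, k=3):
    """Return (index, name, probability) for the k largest probabilities, highest first."""
    idx = np.argsort(probs)[::-1][:k]
    return [(int(i), class_names[i], float(probs[i])) for i in idx]


probs = np.array([0.01, 0.70, 0.05, 0.20, 0.04])
names = ["class_a", "class_b", "class_c", "class_d", "class_e"]  # hypothetical labels
print(top_k(probs, names))
print(int(np.argmax(probs)))
```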

Setting up the Grad-CAM algorithm

```python
from keras import backend as K
import numpy as np

# This is the "african elephant" entry in the prediction vector
african_elephant_output = model.output[:, 386]

# This is the output feature map of the `block5_conv3` layer,
# the last convolutional layer in VGG16
last_conv_layer = model.get_layer('block5_conv3')

# This is the gradient of the "african elephant" class with regard to
# the output feature map of `block5_conv3`
grads = K.gradients(african_elephant_output, last_conv_layer.output)[0]

# This is a vector of shape (512,), where each entry
# is the mean intensity of the gradient over a specific feature map channel
pooled_grads = K.mean(grads, axis=(0, 1, 2))

# This function allows us to access the values of the quantities we just defined:
# `pooled_grads` and the output feature map of `block5_conv3`,
# given a sample image
iterate = K.function([model.input], [pooled_grads, last_conv_layer.output[0]])

# These are the values of these two quantities, as Numpy arrays,
# given our sample image of two elephants
pooled_grads_value, conv_layer_output_value = iterate([x])

# We multiply each channel in the feature map array
# by "how important this channel is" with regard to the elephant class
for i in range(512):
    conv_layer_output_value[:, :, i] *= pooled_grads_value[i]

# The channel-wise mean of the resulting feature map
# is our heatmap of class activation
heatmap = np.mean(conv_layer_output_value, axis=-1)
```
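Once `iterate` has produced the two NumPy arrays, the rest of the algorithm is plain array arithmetic:

1. Average the gradients over the spatial axes to get one weight per channel.
2. Weight each feature-map channel by its weight.
3. Average across channels.

The vectorized sketch below is equivalent to the `for i in range(512)` loop followed by `np.mean`, and it also folds in the ReLU and normalization of the post-processing step. `grad_cam_heatmap` is an illustrative name. The inputs are random stand-in arrays shaped like the `block5_conv3` output for a 224×224 image (14×14×512), not real gradients.

```python
import numpy as np


def grad_cam_heatmap(conv_output, grads):
    """Grad-CAM-style heatmap from one image's feature maps and class gradients.

    conv_output, grads: arrays of shape (height, width, channels).
    """
    weights = grads.mean(axis=(0, 1))                # one importance weight per channel
    heatmap = (conv_output * weights).mean(axis=-1)  # weight every channel, then average
    heatmap = np.maximum(heatmap, 0)                 # keep only positive evidence
    peak = heatmap.max()
    return heatmap / peak if peak > 0 else heatmap   # scale to [0, 1]


rng = np.random.default_rng(0)
conv_output = np.abs(rng.normal(size=(14, 14, 512)))  # stand-in feature maps
grads = rng.normal(size=(14, 14, 512)) * 0.01
grads[..., :8] += 1.0                    # pretend 8 channels matter for the class...
conv_output[3:6, 3:6, :8] += 5.0         # ...and that they fire in one region
heatmap = grad_cam_heatmap(conv_output, grads)
print(heatmap.shape, np.unravel_index(heatmap.argmax(), heatmap.shape))
```

The Grad-CAM formulation sums the weighted channels instead of averaging them. The two differ only by a constant factor (512 here), which the normalization to [0, 1] removes.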

For visualization purposes, we'll also normalize the heatmap to the range 0 to 1.

Heatmap post-processing

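```python
import matplotlib.pyplot as plt

# Keep only positive contributions, then scale to [0, 1]
heatmap = np.maximum(heatmap, 0)
heatmap /= np.max(heatmap)
plt.matshow(heatmap)
plt.show()
```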

Finally, we'll use OpenCV to generate an image that superimposes the heatmap we just obtained on the original image:

Superimposing the heatmap with the original picture, and saving it to disk

```python
import cv2
import numpy as np

# We use cv2 to load the original image (OpenCV loads it in BGR order)
img = cv2.imread(img_path)

# We resize the heatmap to have the same size as the original image
heatmap = cv2.resize(heatmap, (img.shape[1], img.shape[0]))

# We convert the heatmap to 8-bit values in [0, 255]
heatmap = np.uint8(255 * heatmap)

# We color the heatmap with the JET colormap (the result is a BGR image)
heatmap = cv2.applyColorMap(heatmap, cv2.COLORMAP_JET)

# 0.4 here is a heatmap intensity factor; clip so the sum is a valid 8-bit image
superimposed_img = np.clip(heatmap * 0.4 + img, 0, 255).astype('uint8')

# Save the image to disk
cv2.imwrite('/Users/fchollet/Downloads/elephant_cam.jpg', superimposed_img)
```
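The blending step can also be written without OpenCV to make the arithmetic explicit: scale the heatmap to 0 to 255, color it, and add 0.4 of it to the image. Since `heatmap * 0.4 + img` can exceed 255, the sum should be clipped before converting to 8-bit. This sketch makes two substitutions, both assumptions for illustration only: nearest-neighbor upsampling with `np.repeat` stands in for `cv2.resize`, and a red-only coloring stands in for `cv2.COLORMAP_JET`. `overlay_heatmap` is a hypothetical helper.

```python
import numpy as np


def overlay_heatmap(img, heatmap, intensity=0.4):
    """Blend a [0, 1] heatmap onto a uint8 (H, W, 3) BGR image.

    Assumes H and W are integer multiples of the heatmap's height and width.
    """
    fy = img.shape[0] // heatmap.shape[0]
    fx = img.shape[1] // heatmap.shape[1]
    up = np.repeat(np.repeat(heatmap, fy, axis=0), fx, axis=1)  # nearest-neighbor upsampling
    colored = np.zeros(img.shape, dtype='float64')
    colored[..., 2] = 255 * up            # red only (index 2 in OpenCV's BGR order)
    blended = colored * intensity + img   # float sum; may exceed 255
    return np.clip(blended, 0, 255).astype('uint8')


img = np.full((28, 28, 3), 200, dtype='uint8')
heatmap = np.zeros((14, 14))
heatmap[7, 7] = 1.0
out = overlay_heatmap(img, heatmap)
print(out[14, 14], out[0, 0])
```

At the hot spot the red channel would reach 200 + 0.4 × 255 = 302 without clipping. Clipping keeps it at 255, whereas a raw conversion of 302 to uint8 would not give a sensible color.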

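This visualization technique answers two important questions:

- Why did the network think this image contained an African elephant?

- Where is the African elephant located in the picture?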

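It is particularly interesting that the ears of the elephant calf are strongly activated. This is probably how the network tells African and Indian elephants apart.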

Summary

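- Convnets are the best tool for attacking visual classification problems.

- Convnets work by learning a hierarchy of modular patterns and concepts to represent the visual world.

- The representations they learn are easy to inspect: convnets are not black boxes.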