A strategy to quantize the embedding layer

Basic idea

Embeddings are mainly produced by word pre-training, and two commonly used methods are word2vec and GloVe. In general, the embedding matrix has size \(|V| \times H\), where \(|V|\) is the size of the one-hot vector (the vocabulary size) and \(H\) is the dimension of the embedded vector. For a moderately large corpus this matrix holds a very large number of parameters, mainly because \(|V|\) is large. The main idea is to represent each word not by a one-hot vector but by a code \(C_w\):

\[
C_w = \left(C_w^1, C_w^2, \ldots, C_w^M\right)
\]

That is, each word is now described by \(M\) components, where each \(C_w^i\) is an integer in \([1,K]\). Each \(C_w^i\) can therefore be regarded as a \(K\)-dimensional one-hot vector, and \(C_w\) as a collection of \(M\) such one-hot vectors. To turn the code \(C_w\) into an embedding vector, we need \(M\) codebook matrices \(E_1, E_2, \dots, E_M\), one per component.

For example

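Suppose \(C_{dog} = (3, 2, 4, 1)\) and \(C_{dogs} = (3, 2, 4, 2)\). Here \(K = 4\) and \(M = 4\), with codebooks \(E_1 = \{e_{11}, e_{12}, e_{13}, e_{14}\}\), \(E_2 = \{e_{21}, e_{22}, e_{23}, e_{24}\}\), \(\dots\), \(E_4\), where each codeword \(e_{ij}\) has dimension \(1 \times H\). The embedding sums the codeword selected from each codebook:

\[
E(C_w) = \sum_{i=1}^{M} E_i\left(C_w^i\right), \qquad
E(C_{dog}) = e_{13} + e_{22} + e_{34} + e_{41}, \qquad
E(C_{dogs}) = e_{13} + e_{22} + e_{34} + e_{42}
\]

So the embedding process needs \(M \times K \times H\) parameters in the codebooks, instead of \(|V| \times H\) in the original embedding matrix.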
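To make the composition concrete, here is a minimal NumPy sketch of the dog/dogs example. The codebooks are random stand-ins rather than learned ones. The helper name `compose_embedding` is hypothetical, and \(H = 5\) is chosen arbitrarily. The codes are 1-based, as in the text.

```python
import numpy as np

def compose_embedding(code, codebooks):
    """Sum one codeword per codebook (hypothetical helper).

    code:      sequence of M integers in 1..K (1-based, as in the text)
    codebooks: array of shape (M, K, H); codebooks[i, j] plays the role of e_{i+1, j+1}
    """
    M, K, H = codebooks.shape
    assert len(code) == M and all(1 <= c <= K for c in code)
    out = np.zeros(H)
    for i, c in enumerate(code):
        out += codebooks[i, c - 1]  # shift the 1-based code to a 0-based row index
    return out

rng = np.random.default_rng(0)
M, K, H = 4, 4, 5                 # H = 5 is an arbitrary small size for illustration
E = rng.normal(size=(M, K, H))    # random stand-in codebooks, not learned ones

e_dog = compose_embedding((3, 2, 4, 1), E)   # e_13 + e_22 + e_34 + e_41
e_dogs = compose_embedding((3, 2, 4, 2), E)  # e_13 + e_22 + e_34 + e_42

# The two words share three codewords and differ only through E_4
print(np.allclose(e_dogs - e_dog, E[3, 1] - E[3, 0]))  # True
print(E.size)                                          # 80 = M * K * H parameters
```

Because "dog" and "dogs" share three of their four codewords, similar words can reuse most of their parameters.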

Unknown parameters

So, to embed a word, the unknowns are the codes \(C\) and the matrices \(E_1, E_2, \dots, E_M\), which we call codebooks. We wish to find a set of codes \(\hat C\) and combined codebooks \(\hat E\) that produce embeddings as effective as \(\tilde E(w)\), where \(\tilde E(w)\) denotes the original embedding, such as GloVe. It is then natural to obtain \(C\) and \(E\) by minimizing a loss function. This gives the following objective:

\[
\left(\hat{C}, \hat{E}\right) = \underset{C, E}{\operatorname{argmin}} \; \frac{1}{|V|} \sum_{w \in V} \left\lVert E(C_w) - \tilde{E}(w) \right\rVert^2 \tag{2}
\]

This objective is used to learn the codebooks \(E\) and the code assignment \(C\). The author proposes a straightforward method that learns the code assignment and codebooks simultaneously in an end-to-end neural network. The method encourages discreteness with the Gumbel-Softmax trick to produce compositional codes.
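Objective (2) is just a mean squared reconstruction error over the vocabulary. The following is a minimal NumPy sketch. It assumes the composed embeddings and the original ones are stacked row-wise into \(|V| \times H\) arrays, and the function name is hypothetical.

```python
import numpy as np

def reconstruction_loss(composed, original):
    """Objective (2): (1/|V|) * sum_w ||E(C_w) - E~(w)||^2 (hypothetical helper).

    composed: (|V|, H) array; row w is E(C_w)
    original: (|V|, H) array; row w is the pretrained E~(w), e.g. from GloVe
    """
    diff = composed - original
    return np.mean(np.sum(diff ** 2, axis=1))  # squared L2 distance per word, then average

# Toy check with |V| = 2, H = 2: the squared distances are 1 and 4, so the loss is 2.5
composed = np.array([[1.0, 0.0], [0.0, 0.0]])
original = np.array([[0.0, 0.0], [0.0, 2.0]])
print(reconstruction_loss(composed, original))  # 2.5
```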

Why Gumbel-Softmax

As mentioned above, the codebooks and code assignments are learned with a neural network, but the code assignments are discrete variables. Back propagation and gradient descent require continuous, differentiable quantities. To handle the discrete variables during training, we therefore need to introduce Gumbel-Softmax.

In general, a discrete variable \(z\) is represented as a one-hot vector drawn from a categorical distribution \(\boldsymbol{\pi}=\left[\pi_{1}; \pi_{2}; \ldots; \pi_{k}\right]\). Forward propagation goes from \(\pi\) to \(z\) (\(\pi \longrightarrow z\)) and produces the one-hot vector \(z\). The problem is that during back propagation we cannot pass gradients back from \(z\) to \(\pi\) (\(z \longrightarrow \pi\)). The step \(\pi \longrightarrow z\) usually uses argmax, which is not differentiable: its gradient is zero almost everywhere.

Explanation

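- Sampling the discrete variable: adding noise drawn from a Gumbel distribution to the log-probabilities shifts them. Taking the argmax of the shifted values then gives an exact sample from \(\pi\) (the Gumbel-Max trick).

- Replacing argmax with a differentiable function: a softmax with temperature \(\tau\), applied to the same shifted values, approximates the one-hot sample and remains differentiable.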

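The corresponding two formulas are:

\[
z = \operatorname{onehot}\left(\underset{i}{\operatorname{argmax}} \left[\log \pi_i + g_i\right]\right), \qquad g_i = -\log\left(-\log u_i\right), \quad u_i \sim \operatorname{Uniform}(0, 1)
\]

\[
y_i = \frac{\exp\left(\left(\log \pi_i + g_i\right) / \tau\right)}{\sum_{j=1}^{k} \exp\left(\left(\log \pi_j + g_j\right) / \tau\right)}, \qquad i = 1, \dots, k
\]

Here \(\tau > 0\) is a temperature. As \(\tau \to 0\), the soft vector \(y\) approaches the one-hot sample \(z\).

To be honest, I do not yet fully understand this part, and I will explain it in more detail in a later blog post.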
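A small NumPy sketch of the two formulas above may help. `gumbel_max` draws an exact but non-differentiable one-hot sample, and `gumbel_softmax` applies a temperature softmax to the same perturbed logits. The function names, the explicit noise argument and the choice \(\tau = 0.5\) are illustrative assumptions. In practice the relaxation would be written in an autodiff framework so that gradients can flow through it.

```python
import numpy as np

def sample_gumbel(rng, shape):
    # Standard Gumbel noise: g = -log(-log(u)) with u ~ Uniform(0, 1).
    # The lower bound 1e-12 keeps log() away from zero.
    u = rng.uniform(1e-12, 1.0, size=shape)
    return -np.log(-np.log(u))

def gumbel_max(log_pi, g):
    """Hard one-hot sample from Categorical(pi): argmax of the perturbed logits."""
    z = np.zeros_like(log_pi)
    z[np.argmax(log_pi + g)] = 1.0
    return z

def gumbel_softmax(log_pi, g, tau):
    """Soft, differentiable sample: softmax of the same perturbed logits at temperature tau."""
    y = (log_pi + g) / tau
    y = np.exp(y - y.max())  # shift by the max for numerical stability
    return y / y.sum()

rng = np.random.default_rng(0)
pi = np.array([0.2, 0.5, 0.3])
log_pi = np.log(pi)

# Gumbel-max draws follow pi: the empirical frequencies approach [0.2, 0.5, 0.3]
draws = np.array([gumbel_max(log_pi, sample_gumbel(rng, 3)) for _ in range(20000)])
print(draws.mean(axis=0))

# With the same noise, the soft sample peaks exactly where the hard sample is 1
g = sample_gumbel(rng, 3)
soft = gumbel_softmax(log_pi, g, tau=0.5)
print(soft, np.argmax(soft) == np.argmax(gumbel_max(log_pi, g)))
```

Passing the noise in explicitly makes it easy to compare the hard and soft versions on the same draw.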

Learning with Neural Network

First, note that the input of the neural network is a one-hot vector of size \(|V|\). The goal is to minimize loss function (2), and the parameters \(C\) and \(E\) are obtained from this optimization.

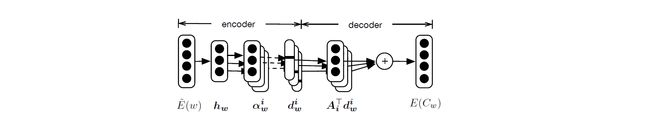

Let's first look at the structure of the network and then explain what each step does. This shows how the parameters of the network relate to the unknowns \(C\) and \(E\).

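In this network, the first layer is \(\tilde E(w)\), a conventional pretrained embedding layer such as GloVe, with \(\tilde{\mathbf{E}} \in \mathbb{R}^{|V| \times H}\). From it we compute a hidden vector \(h_w \in \mathbb{R}^{MK/2 \times 1}\). For each codebook \(i = 1, \dots, M\), we also compute a vector \(\alpha_w^i \in \mathbb{R}^{K \times 1}\):

\[
h_w = \tanh\left(\theta^{\top} \tilde{E}(w) + b\right), \qquad
\alpha_w^i = \operatorname{softplus}\left({\theta_i'}^{\top} h_w + b_i'\right)
\]

Stacking these vectors gives \(\alpha_w \in \mathbb{R}^{M \times K}\). The next step applies Gumbel-Softmax to each \(\alpha_w^i\):

\[
\left(d_w^i\right)_k = \operatorname{softmax}_{\tau}\left(\log \alpha_w^i + G\right)_k = \frac{\exp\left(\left(\log \left(\alpha_w^i\right)_k + G_k\right) / \tau\right)}{\sum_{k'=1}^{K} \exp\left(\left(\log \left(\alpha_w^i\right)_{k'} + G_{k'}\right) / \tau\right)}
\]

Here \(G_k = -\log\left(-\log u_k\right)\), with \(u_k \sim \operatorname{Uniform}(0, 1)\), is Gumbel noise, and \(\tau\) is the temperature.

In this way, learning the discrete codes \(C_w\) becomes a problem of finding a set of optimal one-hot vectors \(d_w^1, \dots, d_w^M\), with \(d_w^i \in \mathbb{R}^{K \times 1}\).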
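The encoder half of the network can be sketched as a NumPy forward pass for one word. This is an illustration under assumptions, not the author's code. The function `encode`, the parameter layout (the \(\theta_i'\), \(b_i'\) pairs stacked into 3-D and 2-D arrays), the random weights and the small sizes are all hypothetical, and no training happens here.

```python
import numpy as np

def softplus(x):
    # softplus(x) = log(1 + exp(x)), computed stably via logaddexp
    return np.logaddexp(0.0, x)

def encode(e_tilde, theta, b, theta_p, b_p, tau, rng):
    """Hypothetical encoder forward pass for one word.

    e_tilde: (H,)            pretrained embedding E~(w)
    theta:   (H, M*K//2)     b:   (M*K//2,)
    theta_p: (M, M*K//2, K)  b_p: (M, K)   stacked theta'_i and b'_i
    returns: (M, K)          row i is the relaxed one-hot vector d_w^i
    """
    h = np.tanh(e_tilde @ theta + b)                            # h_w = tanh(theta^T E~(w) + b)
    alpha = softplus(np.einsum("j,mjk->mk", h, theta_p) + b_p)  # alpha_w^1..alpha_w^M, all > 0
    u = rng.uniform(1e-12, 1.0, size=alpha.shape)
    g = -np.log(-np.log(u))                                     # Gumbel noise G
    y = (np.log(alpha) + g) / tau
    y = np.exp(y - y.max(axis=1, keepdims=True))                # stable row-wise softmax
    return y / y.sum(axis=1, keepdims=True)

rng = np.random.default_rng(0)
H, M, K = 6, 4, 8
J = M * K // 2                                                  # hidden size MK/2 = 16
d = encode(
    e_tilde=rng.normal(size=H),
    theta=rng.normal(scale=0.1, size=(H, J)),
    b=np.zeros(J),
    theta_p=rng.normal(scale=0.1, size=(M, J, K)),
    b_p=np.zeros((M, K)),
    tau=1.0,
    rng=rng,
)
print(d.shape)          # (4, 8)
print(d.sum(axis=1))    # each row sums to (approximately) 1
```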

Next, the codebooks turn the code \(C_w\) into the embedding \(E(C_w)\). In the network, the matrices \(A_1, \dots, A_M\) denote the codebooks \(E_1, E_2, \dots, E_M\). Therefore:

\[
E(C_w) = \sum_{i=1}^{M} \boldsymbol{A}_{\boldsymbol{i}}^{\top} d_w^i
\]

Here \(\boldsymbol{A}_{\boldsymbol{i}} \in \mathbb{R}^{K \times H}\), so the result lies in \(\mathbb{R}^{H \times 1}\). We can then use formula (2) to compute the loss and back-propagate.
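The decoder is a single contraction. In the NumPy sketch below, the function name `decode` and the random codebooks are hypothetical. Feeding it exact one-hot vectors shows that \(\sum_i A_i^{\top} d_w^i\) simply adds one row from each \(A_i\). The last line computes one word's term of loss (2) against a random stand-in for \(\tilde E(w)\).

```python
import numpy as np

def decode(d, A):
    """E(C_w) = sum_i A_i^T d_w^i (hypothetical helper).

    d: (M, K)     relaxed or exact one-hot vectors for one word
    A: (M, K, H)  codebook matrices A_1..A_M
    returns (H,)
    """
    return np.einsum("mk,mkh->h", d, A)

rng = np.random.default_rng(0)
M, K, H = 4, 8, 6
A = rng.normal(size=(M, K, H))   # random stand-in codebooks

# With exact one-hot rows, decoding just sums one row of each A_i
codes = np.array([2, 0, 7, 5])   # 0-based indices here
d = np.eye(K)[codes]             # (M, K): row i is one_hot(codes[i])
print(np.allclose(decode(d, A), A[np.arange(M), codes].sum(axis=0)))  # True

# One word's term of loss (2), against a random stand-in for E~(w)
e_tilde = rng.normal(size=H)
print(np.sum((decode(d, A) - e_tilde) ** 2))
```

With the soft \(d_w^i\) produced by Gumbel-Softmax, the same contraction is a weighted mix of rows. That mix is what lets gradients reach both the codebooks and the encoder.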

Get Code from Neural Network

The main parameters trained in this network are \(\left({\theta}, {b}, {\theta}^{\prime}, {b}^{\prime}, {A}\right)\). Once the code-learning model is trained, the code \(C_w\) for each word is easily obtained by applying argmax to each of the one-hot vectors \(d_w^1, \dots, d_w^M\). The basis vectors (codewords) used to compose the embeddings are the row vectors of the weight matrices \(A_1, \dots, A_M\), which play exactly the role of \(E_1, E_2, \dots, E_M\).
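After training, exporting the compressed embedding takes only an argmax followed by a table lookup. The following is a minimal NumPy sketch. It assumes the encoder's outputs for the whole vocabulary are stacked into a \(|V| \times M \times K\) array; random numbers stand in for them here, and `extract_codes` and `lookup` are hypothetical helper names. The codes are 0-based in the array, so add 1 to match the \([1, K]\) convention used above.

```python
import numpy as np

def extract_codes(d_all):
    """d_all: (|V|, M, K) encoder outputs -> integer codes (|V|, M), 0-based."""
    return np.argmax(d_all, axis=2)

def lookup(codes, A):
    """Compose embeddings for all words: codes (|V|, M), codebooks A (M, K, H) -> (|V|, H)."""
    M = A.shape[0]
    # A[np.arange(M), codes] has shape (|V|, M, H): entry [v, i] is row codes[v, i] of A_i
    return A[np.arange(M), codes].sum(axis=1)

rng = np.random.default_rng(0)
V, M, K, H = 5, 3, 4, 2
d_all = rng.random((V, M, K))     # stand-in for the trained encoder's outputs
A = rng.normal(size=(M, K, H))    # stand-in for the trained codebooks

codes = extract_codes(d_all)
emb = lookup(codes, A)
print(codes + 1)                  # codes in the 1..K convention
print(emb.shape)                  # (5, 2)
```

Only `codes` and `A` need to be kept for downstream use. The encoder parameters \(\theta, b, \theta', b'\) can be discarded.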

This process therefore amounts to simplifying the embedding layer. The training setup resembles knowledge distillation: a compact, code-based model learns to reproduce the embeddings of the original model. In terms of results, it quantizes the word representation of the original embedding model, replacing a \(|V|\)-dimensional one-hot vector with a short discrete code. Using this embedding layer in downstream tasks therefore compresses the model and can also speed up computation.

Where we compress the model

Finally, let's look at where the method compresses the original embedding layer. In the original model, each word is identified by a one-hot vector of length \(|V|\), and the embedding matrix stores a float vector of length \(H\) for every word. Now each word is represented by \(C_{w}=\left(C_{w}^{1}, C_{w}^{2}, \ldots , C_{w}^{M}\right)\), that is, \(M\) one-hot vectors of size \(K\) (\(M \times K\) values). Encoding each \(C_w^i\) in binary takes \(\log_2 K\) bits, so the code of one word takes \(M \log _{2} K\) bits. We can choose appropriate \(M\) and \(K\) to increase the compression ratio. In addition, the embedding matrix itself shrinks from \(|V| \times H\) to the codebooks' \(M \times K \times H\).
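As a back-of-the-envelope check, the sketch below compares the storage of a dense float matrix with that of codes plus codebooks. It assumes 32-bit floats and that \(K\) is a power of two, so each code fits in exactly \(\log_2 K\) bits. The sizes (\(|V| = 10000\), \(H = 300\), \(M = 32\), \(K = 16\)) are illustrative and not taken from the paper, and `storage_bits` is a hypothetical helper.

```python
import math

def storage_bits(vocab_size, H, M, K, float_bits=32):
    """Return (dense_bits, compressed_bits) for an embedding layer (hypothetical helper).

    dense:      |V| x H matrix of floats
    compressed: |V| codes of M * log2(K) bits each, plus M x K x H codebook floats
    """
    assert K & (K - 1) == 0, "K is assumed to be a power of two"
    dense = vocab_size * H * float_bits
    codes = vocab_size * M * int(math.log2(K))
    codebooks = M * K * H * float_bits
    return dense, codes + codebooks

dense, compressed = storage_bits(vocab_size=10_000, H=300, M=32, K=16)
print(dense, compressed)                   # 96000000 6195200
print(f"ratio: {dense / compressed:.1f}")  # ratio: 15.5
```

Under these assumptions, most of the remaining storage is in the codebooks (4,915,200 bits) rather than in the codes (1,280,000 bits). This is why \(M\) and \(K\) trade off against each other.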