Using Filebeat, Logstash, and Elasticsearch together

日志格式

- 系统日志

- 正常业务员日志

- 正常业务日志(

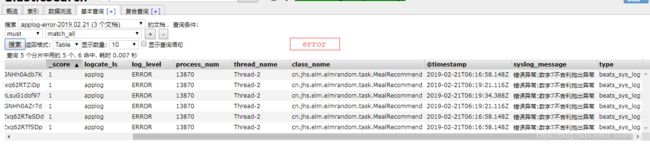

pretty) error日志

Business scenario

Hands-on

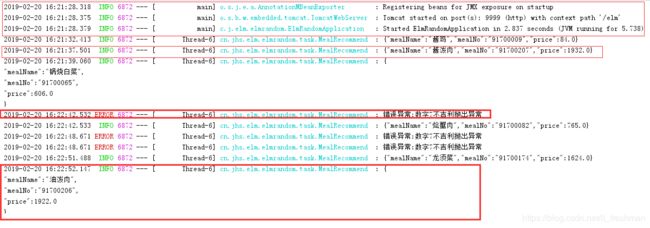

1. Producing messages

The "吃什么" (What to Eat) app produces messages continuously, at random intervals:

Java source

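import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Random;
import java.util.concurrent.CountDownLatch;

import lombok.AllArgsConstructor;
import lombok.Data;
// Assumes Log4j 2; the source does not show which logging API is imported.
import org.apache.logging.log4j.LogManager;
import org.apache.logging.log4j.Logger;
import org.springframework.beans.factory.DisposableBean;
import org.springframework.beans.factory.InitializingBean;
import org.springframework.stereotype.Component;
// The JSON helper used in Meal.toString() is not shown in the source, so its import is omitted.

@Component
public class MealRecommend implements InitializingBean, DisposableBean {

    private Logger logger = LogManager.getLogger(MealRecommend.class);

    // Counted down in destroy() to stop the producer thread.
    private CountDownLatch latch = new CountDownLatch(1);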

private final String MEAL_NAME_STR = "蒸羊羔,蒸熊掌,蒸鹿尾,烧花鸭,烧雏鸡,烧子鹅,炉猪,炉鸭,酱鸡,腊肉,松花,小肚,晾肉,香肠什锦苏盘,熏鸡白肚,清蒸八宝猪,江米酿鸭子,罐野鸡,罐鹌鹑,卤什件,卤子鹅,山鸡,兔脯,菜蟒,银鱼,清蒸哈什蚂,烩鸭腰,烤鸭条,清拌腰丝,黄心管,焖白鳝,焖黄鳝,豆鼓鲇鱼,锅烧鲤鱼,烀烂甲鱼,抓炒鲤鱼,抓炒对虾,软炸里脊,软炸鸡,什锦套肠,卤煮寒鸦,麻酥油卷,熘鲜蘑,熘鱼脯,熘鱼肚,熘鱼片,醋烟肉片,烟三鲜,烟鸽子蛋,熘白蘑,熘什件,炒银丝,烟刀鱼,清蒸火腿,炒白虾,炝青蛤,炒面鱼,炝竹笋,芙蓉燕菜,炒虾仁,熘腰花,烩海参,炒蹄筋,锅烧海参,锅烧白菜,炸木耳,炒肝尖,桂花翅子,清蒸翅子,炸飞禽炸汁,炸排骨,清蒸江瑶柱,糖熘芡仁米,拌鸡丝,拌肚丝,什锦豆腐,什锦丁,糟鸭,糟熘鱼片,熘蟹肉,炒蟹肉,烩蟹肉,清拌蟹肉,蒸南瓜,酿倭瓜,炒丝瓜,酿冬瓜.烟鸭掌,焖鸭掌,焖笋,炝茭白,茄子晒炉肉,鸭羹,蟹肉羹,鸡血汤,三鲜木樨汤,红丸子,白丸子,南煎丸子,四喜丸子,三鲜丸子,氽丸子,鲜虾丸子,鱼脯丸子,饹炸丸子,豆腐丸子,樱桃肉,马牙肉,米粉肉,一品肉,栗子肉,坛子肉,红焖肉,黄焖肉,酱豆腐肉,晒炉肉,炖肉,黏糊肉,烀肉,扣肉,松肉,罐肉,烧肉,大肉,烤肉,白肉,红肘子,白肘子,熏肘子,水晶肘子,蜜蜡肘子,锅烧肘子,扒肘条,炖羊肉,酱羊肉,烧羊肉,烤羊肉,清羔羊肉,五香羊肉,氽三样,爆三样,炸卷果,烩散丹,烩酸燕,烩银丝,烩白杂碎,氽节子,烩节子,炸绣球,三鲜鱼翅,栗子鸡,氽鲤鱼,酱汁鲫鱼,活钻鲤鱼,板鸭,筒子鸡,烩脐肚,烩南荠,爆肚仁,盐水肘花,锅烧猪蹄,拌稂子,炖吊子,烧肝尖,烧肥肠,烧心,烧肺,烧紫盖,烧连帖,烧宝盖,油炸肺,酱瓜丝,山鸡丁,拌海蜇,龙须菜,炝冬笋,玉兰片,烧鸳鸯,烧鱼头,烧槟子,烧百合,炸豆腐,炸面筋,炸软巾,糖熘饹,拔丝山药,糖焖莲子,酿山药,杏仁酪,小炒螃蟹,氽大甲,炒荤素,什锦葛仙米,鳎目鱼,八代鱼,海鲫鱼,黄花鱼,鲥鱼,带鱼,扒海参,扒燕窝,扒鸡腿,扒鸡块,扒肉,扒面筋,扒三样,油泼肉,酱泼肉,炒虾黄,熘蟹黄,炒子蟹,炸子蟹,佛手海参,炸烹,炒芡子米,奶汤,翅子汤,三丝汤,熏斑鸠,卤斑鸠,海白米,烩腰丁,火烧茨菰,炸鹿尾,焖鱼头,拌皮渣,氽肥肠,炸紫盖,鸡丝豆苗,十二台菜,汤羊,鹿肉,驼峰,鹿大哈,插根,炸花件,清拌粉皮,炝莴笋,烹芽韭,木樨菜,烹丁香,烹大肉,烹白肉,麻辣野鸡,烩酸蕾,熘脊髓,咸肉丝,白肉丝,荸荠一品锅,素炝春不老,清焖莲子,酸黄菜,烧萝卜,脂油雪花菜,烩银耳,炒银枝,八宝榛子酱,黄鱼锅子,白菜锅子,什锦锅子,汤圆锅子,菊花锅子,杂烩锅子,煮饽饽锅子,肉丁辣酱,炒肉丝,炒肉片,烩酸菜,烩白菜,烩豌豆,焖扁豆,氽毛豆,炒豇豆,腌苤蓝丝";

    private List<String> mealNameList;
    private List<Meal> mealList;
    private int mealCateSize;

    @Data
    @AllArgsConstructor
    private class Meal {
        private String mealName;
        private String mealNo;
        private double price;

        // Deliberately yields three log shapes, chosen by the current millisecond:
        // pretty-printed JSON, an exception, or single-line JSON.
        @Override
        public String toString() {
            long curMills = System.currentTimeMillis();
            if (curMills % 3 == 0) {
                // Multi-line (pretty) JSON
                return "{\n" +
                        "\"mealName\":\"" + this.mealName + "\",\n" +
                        "\"mealNo\":\"" + this.mealNo + "\",\n" +
                        "\"price\":" + this.price + "\n" +
                        "}";
            } else if (curMills % 7 == 0) {
                // Message text: "the number 7 is unlucky, throwing an exception"
                throw new RuntimeException("数字7不吉利抛出异常");
            }
            // JSON.stringify is kept as written in the source; the JSON class is not shown.
            // With fastjson, for example, the equivalent call would be JSON.toJSONString(this).
            return JSON.stringify(this);
        }
    }

    private static final Random random = new Random();

    @Override
    public void afterPropertiesSet() throws Exception {
        mealNameList = Arrays.asList(MEAL_NAME_STR.split(","));
        mealCateSize = mealNameList.size();
        mealList = new ArrayList<>(mealCateSize);
        for (int i = 0; i < mealNameList.size(); i++) {
            String mealName = mealNameList.get(i);
            String mealNo = "91700" + String.format("%03d", i + 1);
            // Unit price; the integer division truncates before the value is widened to double
            double price = 28 * (i + 1) / 3;
            mealList.add(new Meal(mealName, mealNo, price));
        }
        // Producer thread: log a random meal, then sleep for a random interval
        new Thread(() -> {
            while (latch.getCount() > 0) {
                try {
                    int rd = random.nextInt(mealCateSize);
                    logger.info(mealList.get(rd));
                    Thread.sleep(random.nextInt(mealCateSize * 30));
                } catch (Exception e) {
                    // e.printStackTrace();
                    // Prefix text: "error/exception:"
                    logger.error("错误异常:" + e.getMessage());
                }
            }
        }).start();
    }

    @Override
    public void destroy() throws Exception {
        latch.countDown();
    }
}
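The branch order in Meal.toString() determines how often each log shape appears. The following is a minimal sketch of that decision only; the class MealLogShape and its method names are hypothetical and not part of the original application.

```java
// Hypothetical helper: mirrors the branch order of Meal.toString() so the
// mix of log shapes can be reasoned about without running the app.
final class MealLogShape {

    enum Shape { PRETTY_JSON, EXCEPTION, COMPACT_JSON }

    // Same checks, in the same order, as Meal.toString():
    // the % 3 test wins when a value is divisible by both 3 and 7.
    static Shape shapeFor(long millis) {
        if (millis % 3 == 0) {
            return Shape.PRETTY_JSON;
        }
        if (millis % 7 == 0) {
            return Shape.EXCEPTION;
        }
        return Shape.COMPACT_JSON;
    }

    public static void main(String[] args) {
        // Count the shapes over one full cycle of 21 consecutive milliseconds.
        int[] counts = new int[Shape.values().length];
        for (long ms = 0; ms < 21; ms++) {
            counts[shapeFor(ms).ordinal()]++;
        }
        for (Shape s : Shape.values()) {
            System.out.println(s + ": " + counts[s.ordinal()] + "/21");
        }
    }
}
```

Over any 21 consecutive milliseconds this gives 7 pretty-printed entries, 2 exceptions, and 12 single-line entries, so if the logging instants are spread evenly, roughly one event in ten is an error.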

Deploying elm-random.jar

Start the application with the following command: nohup java -jar elm-random.jar > elk_app.out 2>&1 &

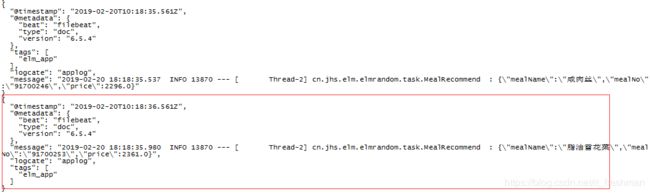

2. Collecting logs with Filebeat

Configure filebeat.yml

#=========================== Filebeat inputs =============================
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /home/logstash/elk_app/elk_app.out
  fields:
    logcate: "applog"
  fields_under_root: true
  tags: ["elm_app"]
  # Lines that do not start with a date are appended to the preceding line
  multiline.pattern: '^[0-9]{4}-[0-9]{2}-[0-9]{2}'
  multiline.negate: true
  multiline.match: after

#============================= Filebeat modules ===============================
filebeat.config.modules:
  path: ${path.config}/modules.d/*.yml
  reload.enabled: false

#-------------------------------- Logstash output
### For testing only
output.console:
  pretty: true
#output.logstash:
#  hosts: ["s156:5054"]
#
#

#================================ Processors =====================================
processors:
#  - add_host_metadata: ~
#  - add_cloud_metadata: ~
  # Note: Filebeat never drops @timestamp, even when it is listed here
  - drop_fields:
      fields: ["@timestamp","sort","beat","input_type","offset","source","input","host","prospector"]
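The three multiline settings above (pattern, negate: true, match: after) mean that any line not starting with a date is appended to the previous line that does. As a rough illustration of that grouping rule, here is a small sketch; the class MultilineSketch and the sample lines are made up for this example and do not come from Filebeat itself.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.regex.Pattern;

// Hypothetical sketch of the grouping rule, not Filebeat's implementation.
final class MultilineSketch {

    // Same expression as multiline.pattern in filebeat.yml.
    private static final Pattern EVENT_START =
            Pattern.compile("^[0-9]{4}-[0-9]{2}-[0-9]{2}");

    // negate: true + match: after => a line that does NOT match the pattern
    // is appended after the most recent line that did match.
    static List<String> group(List<String> lines) {
        List<String> events = new ArrayList<>();
        StringBuilder current = null;
        for (String line : lines) {
            boolean startsEvent = EVENT_START.matcher(line).find();
            if (startsEvent || current == null) {
                if (current != null) {
                    events.add(current.toString());
                }
                current = new StringBuilder(line);
            } else {
                current.append('\n').append(line);
            }
        }
        if (current != null) {
            events.add(current.toString());
        }
        return events;
    }

    public static void main(String[] args) {
        // Made-up sample lines in the shape of the app's pretty-printed output.
        List<String> lines = Arrays.asList(
                "2019-05-01 10:00:00.000 INFO : {",
                "\"mealNo\":\"91700001\"",
                "}",
                "2019-05-01 10:00:01.000 ERROR : boom");
        for (String event : group(lines)) {
            System.out.println("--- event ---");
            System.out.println(event);
        }
    }
}
```

With this rule the pretty-printed JSON entry travels to Logstash as one event instead of one event per line.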

3. Collecting logs with Logstash

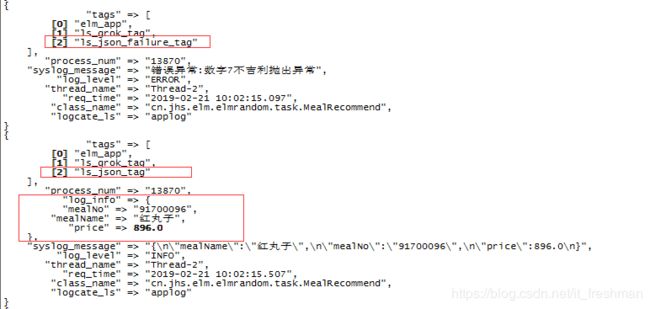

1. filter-grok

input {
  beats {
    port => 5054
    type => "beats_sys_log"
  }
}

filter {
  if [type] == "beats_sys_log" {
    grok {
      match => {
        "message" => "%{TIMESTAMP_ISO8601:req_time}%{SPACE}%{LOGLEVEL:log_level} %{NUMBER:process_num} --- \[ %{USERNAME:thread_name}] %{USERNAME:class_name} : %{GREEDYDATA:syslog_message}"
      }
      add_field => {
        "logcate_ls" => "%{logcate}"
      }
      add_tag => ["ls_grok_tag"]
      remove_field => ["message","logcate","@version","@timestamp"]
      remove_tag => ["beats_input_codec_plain_applied"]
    }
  }
}

output {
  stdout {
    codec => rubydebug
  }
}
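To make the grok expression easier to read, the sketch below extracts the same six fields with a plain Java regex. It is only an approximation under stated assumptions: the character classes are simplified stand-ins for the grok patterns, whitespace handling is looser than in the original expression, and the class GrokSketch and the sample log line are invented for illustration.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical sketch: a hand-written regex approximating the grok match.
final class GrokSketch {

    // Java group names cannot contain underscores, hence the camelCase names.
    private static final Pattern LINE = Pattern.compile(
            "(?<reqTime>\\d{4}-\\d{2}-\\d{2} \\d{2}:\\d{2}:\\d{2}\\.\\d{3})\\s*"
          + "(?<logLevel>[A-Z]+) (?<processNum>\\d+) --- "
          + "\\[\\s*(?<threadName>[a-zA-Z0-9._-]+)\\] "
          + "(?<className>[a-zA-Z0-9._-]+)\\s*: (?<message>.*)");

    // Returns the extracted fields under the names used in the Logstash config,
    // or an empty map when the line does not match (the grok failure case).
    static Map<String, String> parse(String event) {
        Map<String, String> fields = new LinkedHashMap<>();
        Matcher m = LINE.matcher(event);
        if (!m.find()) {
            return fields;
        }
        fields.put("req_time", m.group("reqTime"));
        fields.put("log_level", m.group("logLevel"));
        fields.put("process_num", m.group("processNum"));
        fields.put("thread_name", m.group("threadName"));
        fields.put("class_name", m.group("className"));
        fields.put("syslog_message", m.group("message"));
        return fields;
    }

    public static void main(String[] args) {
        // Made-up line in the layout the grok expression expects.
        String line = "2019-05-01 10:00:00.000  INFO 12345 --- [       Thread-5] "
                + "c.e.elm.MealRecommend : {\"mealNo\":\"91700001\"}";
        parse(line).forEach((k, v) -> System.out.println(k + " = " + v));
    }
}
```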

https://grokdebug.herokuapp.com/

grok-patterns

2. filter-json

json {
  source => "syslog_message"
  ## If target is not set, the parsed JSON fields are placed at the root of the event
  target => "log_info"
  tag_on_failure => ["ls_json_failure_tag"]
  add_tag => ["ls_json_tag"]
}

3. filter-date

date {
  ## match => [ field, formats... ]
  match => [ "req_time", "yyyy-MM-dd HH:mm:ss.SSS" ]
  ### target defaults to @timestamp; a different field is used here only to demonstrate the mutate plugin below
  target => "req_time_fmt"
  remove_field => ["req_time"]
}
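The date filter parses req_time with the format yyyy-MM-dd HH:mm:ss.SSS and stores the result as a UTC timestamp. Because the log line carries no zone, a time zone has to be assumed when converting. The sketch below shows the same conversion with java.time; the class ReqTimeSketch is hypothetical, and Asia/Shanghai is only an assumed zone for the example.

```java
import java.time.Instant;
import java.time.LocalDateTime;
import java.time.ZoneId;
import java.time.format.DateTimeFormatter;

// Hypothetical sketch of the req_time conversion, using java.time.
final class ReqTimeSketch {

    // Same format string as in the date filter's match option.
    private static final DateTimeFormatter FORMAT =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss.SSS");

    // The log text has no zone information, so the caller supplies one.
    static Instant parse(String reqTime, ZoneId zone) {
        return LocalDateTime.parse(reqTime, FORMAT).atZone(zone).toInstant();
    }

    public static void main(String[] args) {
        // Asia/Shanghai is an assumption made for this example only.
        Instant ts = parse("2019-05-01 10:00:00.000", ZoneId.of("Asia/Shanghai"));
        System.out.println(ts); // prints 2019-05-01T02:00:00Z
    }
}
```

The eight-hour shift in the printed value is the zone offset being removed, which is also why timestamps stored in Elasticsearch can look "wrong" when read as local time.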

4. filter-mutate

mutate {
  rename => {"req_time_fmt" => "@timestamp"}
}

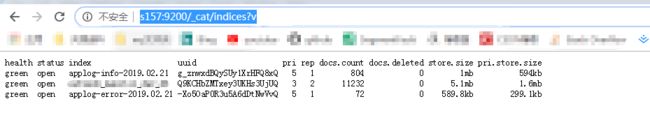

5. outputs

output {
  stdout {
    codec => rubydebug
  }
  if "ls_json_failure_tag" in [tags] {
    elasticsearch {
      hosts => ["S157:9200","S158:9200","S161:9200"]
      ## index defaults to "logstash-%{+YYYY.MM.dd}" and must not contain uppercase letters
      index => "%{logcate_ls}-error-%{+YYYY.MM.dd}"
    }
  } else if "ls_json_tag" in [tags] {
    elasticsearch {
      hosts => ["S157:9200","S158:9200","S161:9200"]
      index => "%{logcate_ls}-info-%{+YYYY.MM.dd}"
    }
  }
}
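The output section routes each event by tag: events whose JSON parsing failed go to an error index, successfully parsed events go to an info index, and anything else is only printed to stdout. A minimal sketch of that routing follows; the class IndexRouting and its method are hypothetical, and the date part is formatted in UTC on the assumption that it is taken from @timestamp.

```java
import java.time.Instant;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;
import java.util.Arrays;
import java.util.List;
import java.util.Optional;

// Hypothetical sketch of the tag-based index selection in the output section.
final class IndexRouting {

    // Daily suffix in the style of %{+YYYY.MM.dd}, formatted in UTC.
    private static final DateTimeFormatter DAY =
            DateTimeFormatter.ofPattern("yyyy.MM.dd").withZone(ZoneOffset.UTC);

    // Same order as the config: the failure tag is checked first.
    static Optional<String> indexFor(List<String> tags, String logcate, Instant timestamp) {
        String day = DAY.format(timestamp);
        if (tags.contains("ls_json_failure_tag")) {
            return Optional.of(logcate + "-error-" + day);
        }
        if (tags.contains("ls_json_tag")) {
            return Optional.of(logcate + "-info-" + day);
        }
        return Optional.empty(); // no Elasticsearch output applies
    }

    public static void main(String[] args) {
        Instant ts = Instant.parse("2019-05-01T02:00:00Z");
        System.out.println(indexFor(
                Arrays.asList("elm_app", "ls_grok_tag", "ls_json_tag"), "applog", ts));
        System.out.println(indexFor(
                Arrays.asList("elm_app", "ls_grok_tag", "ls_json_failure_tag"), "applog", ts));
    }
}
```

With logcate set to applog as in filebeat.yml, the resulting names are all lowercase, which satisfies the index naming restriction noted in the config comment.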