强化学习实战(二):用Q-Learning和SARSA解决出租车问题

强化学习实战(二):用Q-Learning和SARSA解决出租车问题

- 1. 出租车问题

- 问题描述

- 2. Q-Learning和SARSA

- 理论部分暂略

- 2.1 Q-Learning

- 2.1.1 算法描述

- 2.1.2 流程图

- 2.2 SARSA

- 2.2.1 算法描述

- 2.2.2 流程图

- 2.3 二者的区别

- 3. 代码实现

- 3.1 gym环境的一些解释

- 3.1.1 env.reset()

- 3.1.2 env.step()

- 3.2 Q-Learning

- 3.3 SARSA

- 4.Reference

1. 出租车问题

问题描述

The Taxi Problem

from "Hierarchical Reinforcement Learning with the MAXQ Value Function Decomposition"

by Tom Dietterich

Description:

There are four designated locations in the grid world indicated by R(ed), B(lue), G(reen), and Y(ellow). When the episode starts, the taxi starts off at a random square and the passenger is at a random location. The taxi drive to the passenger's location, pick up the passenger, drive to the passenger's destination (another one of the four specified locations), and then drop off the passenger. Once the passenger is dropped off, the episode ends.

Observations:

There are 500 discrete states since there are 25 taxi positions, 5 possible locations of the passenger (including the case when the passenger is the taxi), and 4 destination locations.

MAP = [

"+---------+",

"|R: | : :G|",

"| : : : : |",

"| : : : : |",

"| | : | : |",

"|Y| : |B: |",

"+---------+",

]

Actions:

There are 6 discrete deterministic actions:

- 0: move south

- 1: move north

- 2: move east

- 3: move west

- 4: pickup passenger

- 5: dropoff passenger

Rewards:

There is a reward of -1 for each action and an additional reward of +20 for delievering the passenger. There is a reward of -10 for executing actions "pickup" and "dropoff" illegally.

Rendering:

- blue: passenger

- magenta: destination

- yellow: empty taxi

- green: full taxi

- other letters (R, G, B and Y): locations for passengers and destinations

actions:

- 0: south

- 1: north

- 2: east

- 3: west

- 4: pickup

- 5: dropoff

state space is represented by:

(taxi_row, taxi_col, passenger_location, destination)

2. Q-Learning和SARSA

理论部分暂略

2.1 Q-Learning

2.1.1 算法描述

2.1.2 流程图

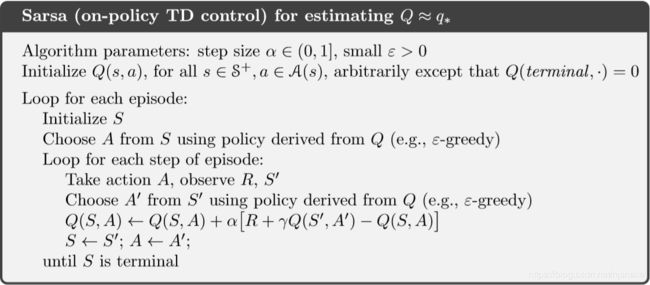

2.2 SARSA

2.2.1 算法描述

2.2.2 流程图

2.3 二者的区别

首先介绍两个概念:

同策略(on-policy):产生数据的策略与评估和要改善的策略是同一个策略。

异策略(off-policy):产生数据的策略与评估和要改善的策略不是同一个策略。

Q-Learning是off-policy算法,SARSA是on-policy算法。

我们从两者的算法描述可以看出,Q-Learning在episode内的每一step都采用ε-贪婪策略重新选取动作,即下一step选取的动作跟上一step没有直接关系;而SARSA在episode内下一step执行的动作是上一step更新Q表时采用ε-贪婪策略选取的那个动作。

3. 代码实现

3.1 gym环境的一些解释

3.1.1 env.reset()

Resets the state of the environment and returns an initial observation.

Returns: observation (object): the initial observation of the space.

.reset()用于重置环境,回到初始状态。可以理解为,游戏从头开始了。

3.1.2 env.step()

Run one timestep of the environment's dynamics. When end of episode is reached, you are responsible for calling `reset()` to reset this environment's state.

Accepts an action and returns a tuple (observation, reward, done, info).

Args:

action (object): an action provided by the environment

Returns:

observation (object): agent's observation of the current environment

reward (float) : amount of reward returned after previous action

done (boolean): whether the episode has ended, in which case further step() calls will return undefined results

info (dict): contains auxiliary diagnostic information (helpful for debugging, and sometimes learning)

.step()用于执行一个动作,最后返回一个元组(observation, reward, done, info)

元组变量的含义:

observation (object): 智能体执行动作a后的状态,也就是所谓的“下一步状态s’ ”

reward (浮点数) : 智能体执行动作a后获得的奖励

done (布尔值): 判断episode是否结束,即s’是否是最终状态?是,则done=True;否,则done=False。

info (字典): 一些辅助诊断信息(有助于调试,也可用于学习),一般用不到。

3.2 Q-Learning

import gym

import random

env = gym.make('Taxi-v2')

# 学习率

alpha = 0.5

# 折扣因子

gamma = 0.9

# ε

epsilon = 0.05

# 初始化Q表

Q = {}

for s in range(env.observation_space.n):

for a in range(env.action_space.n):

Q[(s, a)] = 0

# 更新Q表

def update_q_table(prev_state, action, reward, nextstate, alpha, gamma):

# maxQ(s',a')

qa = max([Q[(nextstate, a)] for a in range(env.action_space.n)])

# 更新Q值

Q[(prev_state, action)] += alpha * (reward + gamma * qa - Q[(prev_state, action)])

# ε-贪婪策略选取动作

def epsilon_greedy_policy(state, epsilon):

# 如果<ε,随机选取一个另外的动作(探索)

if random.uniform(0, 1) < epsilon:

return env.action_space.sample()

# 否则,选取令当前状态下Q值最大的动作(开发)

else:

return max(list(range(env.action_space.n)), key=lambda x: Q[(state, x)])

# 训练1000个episode

for i in range(1000):

r = 0

# 初始化状态(env.reset()用于重置环境)

state = env.reset()

# 一个episode

while True:

# 输出当前agent和environment的状态(可删除)

# env.render()

# 采用ε-贪婪策略选取动作

action = epsilon_greedy_policy(state, epsilon)

# 执行动作,得到一些信息

nextstate, reward, done, _ = env.step(action)

# 更新Q表

update_q_table(state, action, reward, nextstate, alpha, gamma)

# s ⬅ s'

state = nextstate

# 累加奖励

r += reward

# 判断episode是否到达最终状态

if done:

break

# 打印当前episode的奖励

print("[Episode %d] Total reward: %d" % (i + 1, r))

env.close()

3.3 SARSA

import gym

import random

env = gym.make('Taxi-v2')

# 学习率

alpha = 0.5

# 折扣因子

gamma = 0.9

# ε

epsilon = 0.05

# 初始化Q表

Q = {}

for s in range(env.observation_space.n):

for a in range(env.action_space.n):

Q[(s, a)] = 0.0

# ε-贪婪策略选取动作

def epsilon_greedy_policy(state, epsilon):

# 如果<ε,随机选取一个另外的动作(探索)

if random.uniform(0, 1) < epsilon:

return env.action_space.sample()

# 否则,选取令当前状态下Q值最大的动作(开发)

else:

return max(list(range(env.action_space.n)), key=lambda x: Q[(state, x)])

# 训练1000个episode

for i in range(1000):

r = 0

# 初始化状态(env.reset()用于重置环境)

state = env.reset()

# 采用ε-贪婪策略选取动作

action = epsilon_greedy_policy(state, epsilon)

# 一个episode

while True:

# 输出当前agent和environment的状态(可删除)

env.render()

# 执行动作得到的一些信息

nextstate, reward, done, _ = env.step(action)

# 采用ε-贪婪策略选取下一步的动作

nextaction = epsilon_greedy_policy(nextstate, epsilon)

# 更新Q表

Q[(state, action)] += alpha * (reward + gamma * Q[(nextstate, nextaction)] - Q[(state, action)])

# a ⬅ a'

action = nextaction

# s ⬅ s'

state = nextstate

# 累加奖励

r += reward

# 判断episode是否到达最终状态

if done:

break

# 打印当前episode的奖励

print("[Episode %d] Total reward: %d" % (i + 1, r))

env.close()

4.Reference

[1] http://gym.openai.com/

[2] Reinforcement Learning: An Introduction (2018)

[3] Hands-On Reinforcement Learning with Python: Master reinforcement and deep reinforcement learning using OpenAI Gym and TensorFlow [M]

敬请批评指正!