OpenCV Object Tracking


Passing command-line arguments in Python

How to use add_argument()

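argparse is Python's built-in module for parsing command-line options and arguments. Once the program defines the arguments it needs, argparse parses them out of sys.argv and automatically generates help and usage messages.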

A simple example

- Create an ArgumentParser() object

- Call add_argument() to add arguments

- Call parse_args() to parse the added arguments
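The three steps can be tried without a terminal: parse_args() accepts an explicit list of strings, and only when that list is omitted does it read sys.argv[1:]. A minimal sketch (the name Alice is just sample input):

```python
import argparse

# Step 1: create the parser object
parser = argparse.ArgumentParser(description="Greet a user")

# Step 2: declare an option that can be written as -n or --name
parser.add_argument("-n", "--name", required=True, help="name of the user")

# Step 3: parse an explicit argument list instead of the real sys.argv
namespace = parser.parse_args(["--name", "Alice"])
print(namespace.name)  # Alice
```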

# A small example
import argparse

# construct the argument parser and parse the arguments
ap = argparse.ArgumentParser()
ap.add_argument("-n", "--name", required=True,
    help="name of the user")
args = vars(ap.parse_args())

# display a friendly message to the user
print("hello {}, nice to meet you!".format(args["name"]))

ap = argparse.ArgumentParser(): creates an ArgumentParser object named ap.

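ap.add_argument(...):

Only one command-line argument, --name, is defined here. It can be given on the command line as either -n or --name, because both option strings are registered for the same argument. required=True means the argument cannot be omitted: if it is missing, argparse prints an error and exits.

help: a short description of the argument, shown in the help message to tell the user what to enter.

usage: running xxx.py --help or xxx.py -h prints the usage and help text.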

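args = vars(ap.parse_args()):

parse_args() returns a Namespace object, and the built-in vars() converts it into a dictionary.

key: the name of the command-line argument (here, name)

value: the value supplied for that argument on the command line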
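The behavior described above can be checked directly. This sketch builds the same parser, feeds it sample argument lists (Bob is arbitrary input), and catches the SystemExit that argparse raises when a required option is missing:

```python
import argparse

ap = argparse.ArgumentParser(prog="hello.py")
ap.add_argument("-n", "--name", required=True, help="name of the user")

# The short and the long option strings fill the same key, "name"
short_form = vars(ap.parse_args(["-n", "Bob"]))
long_form = vars(ap.parse_args(["--name", "Bob"]))
print(short_form)               # {'name': 'Bob'}
print(short_form == long_form)  # True

# A missing required option makes argparse print an error to stderr
# and exit with status 2, which surfaces here as SystemExit
exit_code = None
try:
    ap.parse_args([])
except SystemExit as exc:
    exit_code = exc.code
print(exit_code)  # 2
```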

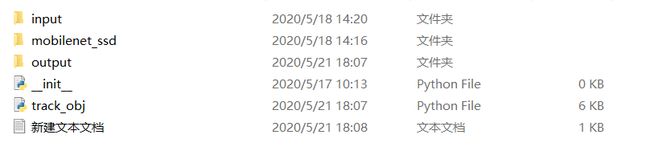

Project structure

- input/: input videos on which object tracking is performed

- output/: processed videos, with the tracked object marked by a rectangle

- mobilenet_ssd/: the Caffe CNN model files

Implementing the dlib object tracker

Import the required libraries

from imutils.video import FPS
import numpy as np
import argparse
import imutils
import dlib
import cv2

Parse the command-line arguments

# construct the argument parser and parse the arguments
ap = argparse.ArgumentParser()
ap.add_argument("-p", "--prototxt", required=True,
    help="path to Caffe 'deploy' prototxt file")
ap.add_argument("-m", "--model", required=True,
    help="path to Caffe pre-trained model")
ap.add_argument("-v", "--video", required=True,
    help="path to input video file")
ap.add_argument("-l", "--label", required=True,
    help="class label we are interested in detecting + tracking")
ap.add_argument("-o", "--output", type=str,
    help="path to optional output video file")
ap.add_argument("-c", "--confidence", type=float, default=0.2,
    help="minimum probability to filter weak detections")
args = vars(ap.parse_args())

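- --prototxt: path to the Caffe deploy prototxt file.
  e.g.: -p ./mobilenet_ssd/MobileNetSSD_deploy.prototxt

- --model: path to the Caffe pre-trained model.
  e.g.: -m ./mobilenet_ssd/MobileNetSSD_deploy.caffemodel

- --video: path to the input video file (webcams are not supported).
  e.g.: -v ./input/xxx.mp4

- --label: class label of the object to detect and track; the supported classes are listed below.
  e.g.: -l person

Two further optional arguments:

- --output: path for saving the tracking result video, if you want to keep it.
  e.g.: -o ./output/object_result.avi

- --confidence: defaults to 0.2. This is the minimum probability threshold used to filter out weak detections from the Caffe object detector.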
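To see how the optional arguments behave, the parser from the walkthrough (help strings omitted for brevity) can be fed a sample argument list. The file paths below are taken from the USAGE line of the full script and are never opened:

```python
import argparse

ap = argparse.ArgumentParser()
ap.add_argument("-p", "--prototxt", required=True)
ap.add_argument("-m", "--model", required=True)
ap.add_argument("-v", "--video", required=True)
ap.add_argument("-l", "--label", required=True)
ap.add_argument("-o", "--output", type=str)
ap.add_argument("-c", "--confidence", type=float, default=0.2)

# Sample argument list with only the required arguments
argv = ["-p", "mobilenet_ssd/MobileNetSSD_deploy.prototxt",
        "-m", "mobilenet_ssd/MobileNetSSD_deploy.caffemodel",
        "-v", "input/race.mp4", "-l", "person"]

defaults = vars(ap.parse_args(argv))
print(defaults["output"])      # None: no output file requested
print(defaults["confidence"])  # 0.2: the declared default

# type=float turns the command-line string "0.5" into a float
custom = vars(ap.parse_args(argv + ["-c", "0.5"]))
print(custom["confidence"])    # 0.5
```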

Initialize the class label list

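The MobileNet SSD model has been trained to detect the classes below.

We use the pre-trained MobileNet SSD model to detect the object in a single frame, then hand its location to dlib's correlation tracker, which follows it through the remaining frames of the video.

The model supports 20 object classes (plus one background class).

Note: if you use a different Caffe model, you must redefine the class list below to match it; with the model downloaded for this post, no changes are needed.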
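The detector reports each class as a numeric index into this list, so index 15 corresponds to person. The script never checks --label against the list: a label the model does not know (a typo such as persn, for example) simply never matches, and nothing gets tracked. The sketch below shows the index lookup plus a hypothetical is_known_label helper that a script could call right after parsing (for example, followed by ap.error(...)); the helper is an addition, not part of the original code:

```python
CLASSES = ["background", "aeroplane", "bicycle", "bird", "boat",
           "bottle", "bus", "car", "cat", "chair", "cow", "diningtable",
           "dog", "horse", "motorbike", "person", "pottedplant", "sheep",
           "sofa", "train", "tvmonitor"]

def class_id_to_label(class_id):
    # The network stores the class index as a float, e.g. 15.0
    return CLASSES[int(class_id)]

def is_known_label(label):
    # Hypothetical helper: index 0 ("background") is not a trackable target
    return label in CLASSES[1:]

print(len(CLASSES))             # 21
print(class_id_to_label(15.0))  # person
print(is_known_label("persn"))  # False
```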

# initialize the list of class labels MobileNet SSD was trained to
# detect
CLASSES = ["background", "aeroplane", "bicycle", "bird", "boat",
    "bottle", "bus", "car", "cat", "chair", "cow", "diningtable",
    "dog", "horse", "motorbike", "person", "pottedplant", "sheep",
    "sofa", "train", "tvmonitor"]

# load our serialized model from disk
print("[INFO] loading model...")
net = cv2.dnn.readNetFromCaffe(args["prototxt"], args["model"])

Initialize the video stream

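Before looping over the frames, the model has to be loaded into memory (the last line above). Loading needs only the paths to the prototxt and model files, both of which were supplied as command-line arguments.

Next, initialize the video stream, the dlib correlation tracker, the video writer object, and the class label of the detected object.

The last line starts the FPS throughput estimator, which is used to report the frame rate at the end.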
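The script uses imutils' FPS class only through start(), update(), stop(), elapsed() and fps(). The arithmetic behind such an estimator is frames counted divided by seconds elapsed. Below is a hypothetical stand-in, SimpleFPS (not the imutils implementation), with the same method names; its clock is injectable so the example is deterministic:

```python
import time

class SimpleFPS:
    """Hypothetical frames-per-second estimator mirroring the
    start/update/stop/elapsed/fps calls used in the script."""

    def __init__(self, clock=time.perf_counter):
        self._clock = clock
        self._start = None
        self._end = None
        self._frames = 0

    def start(self):
        self._start = self._clock()
        return self  # allows SimpleFPS().start() in one expression

    def update(self):
        self._frames += 1  # call once per processed frame

    def stop(self):
        self._end = self._clock()

    def elapsed(self):
        return self._end - self._start

    def fps(self):
        return self._frames / self.elapsed()

ticks = iter([0.0, 2.0])  # fake clock: start at 0 s, stop at 2 s
counter = SimpleFPS(clock=lambda: next(ticks)).start()
for _ in range(60):       # pretend 60 frames were processed
    counter.update()
counter.stop()
print(counter.elapsed(), counter.fps())  # 2.0 30.0
```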

# initialize the video stream, dlib correlation tracker,
# output video writer, and predicted class label
print("[INFO] starting video stream...")
vs = cv2.VideoCapture(args["video"])
tracker = None
writer = None
label = ""

# start the frames per second throughput estimator
fps = FPS().start()

Loop over the video frames

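Each frame is resized to a width of 600 pixels so it can be processed faster.

The color channels are converted from BGR (the order in which OpenCV stores them) to RGB, which dlib requires.

If an output video path was supplied on the command line, a video writer is instantiated.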
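Two details of this step in plain Python: imutils.resize, to our understanding, scales the height by the same factor as the width so the aspect ratio is preserved, and the BGR to RGB conversion just reverses the channel order of every pixel. A sketch with hypothetical helper names:

```python
def resized_dims(width, height, target_width=600):
    # Keep the aspect ratio: scale the height by the same ratio as the width
    ratio = target_width / float(width)
    return target_width, int(height * ratio)

def bgr_to_rgb(pixel):
    # OpenCV stores channels as (B, G, R); dlib expects (R, G, B)
    b, g, r = pixel
    return r, g, b

print(resized_dims(1280, 720))  # (600, 337)
print(bgr_to_rgb((255, 0, 0)))  # (0, 0, 255): pure blue in BGR order
```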

# loop over frames from the video file stream
while True:
    # grab the next frame from the video file
    (grabbed, frame) = vs.read()

    # check to see if we have reached the end of the video file
    if frame is None:
        break

    # resize the frame for faster processing and then convert the
    # frame from BGR to RGB ordering (dlib needs RGB ordering)
    frame = imutils.resize(frame, width=600)
    rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)

    # if we are supposed to be writing a video to disk, initialize
    # the writer
    if args["output"] is not None and writer is None:
        fourcc = cv2.VideoWriter_fourcc(*"MJPG")
        writer = cv2.VideoWriter(args["output"], fourcc, 30,
            (frame.shape[1], frame.shape[0]), True)

Detect the object to track (when tracker is None)

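If tracker is None, we first have to run the object detector on the input frame to give the tracker something to track.

The frame is converted into a blob, the preprocessed 4D input tensor (batch × channels × height × width) that the network expects. In this call, blobFromImage subtracts the mean value 127.5 from every pixel and multiplies the result by the scale factor 0.007843 (about 1/127.5).

The blob is then passed through the network to obtain the detections and their predictions.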
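To make the blob concrete, here is a pure-Python sketch of the per-pixel math for this particular call: each value becomes (value - 127.5) * 0.007843, mapping 0..255 to roughly -1..1, and the layout changes from height × width × channels to 1 × channels × height × width. It deliberately ignores resizing (the call passes the frame's own size) and any channel swapping, and the real function returns a float32 NumPy array rather than nested lists; image_to_blob is a hypothetical name:

```python
def image_to_blob(image, scalefactor=0.007843, mean=127.5):
    """image: nested list indexed [row][col][channel] (H x W x C).
    Returns a nested list indexed [0][channel][row][col] (1 x C x H x W)."""
    h, w, c = len(image), len(image[0]), len(image[0][0])
    return [[[[(image[y][x][ch] - mean) * scalefactor
               for x in range(w)]
              for y in range(h)]
             for ch in range(c)]]

tiny = [[[0, 127.5, 255], [255, 255, 255]]]  # 1 row, 2 columns, 3 channels
blob = image_to_blob(tiny)
print(len(blob), len(blob[0]), len(blob[0][0]), len(blob[0][0][0]))  # 1 3 1 2
print(round(blob[0][0][0][0], 3), round(blob[0][2][0][0], 3))        # -1.0 1.0
```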

    # if our correlation object tracker is None we first need to
    # apply an object detector to seed the tracker with something
    # to actually track
    if tracker is None:
        # grab the frame dimensions and convert the frame to a blob
        (h, w) = frame.shape[:2]
        blob = cv2.dnn.blobFromImage(frame, 0.007843, (w, h), 127.5)

        # pass the blob through the network and obtain the detections
        # and predictions
        net.setInput(blob)
        detections = net.forward()

Process the detections

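If the detector finds any objects, we take the one with the highest probability.

This post only demonstrates single-object tracking with dlib, which is why only the most probable detection is kept.

Future examples will show how to detect and extract specific objects.

We then read the confidence and class label associated with that detection.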
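Each row of detections[0, 0] describes one candidate as seven numbers: the script reads index 1 as the class id, index 2 as the confidence, and indices 3 to 6 as the box corners normalized to [0, 1] (index 0 is not used here). A pure-Python sketch of picking the most confident row, with made-up sample rows and a hypothetical best_detection helper:

```python
def best_detection(rows):
    """rows: list of [_, class_id, confidence, startX, startY, endX, endY],
    box coordinates normalized to [0, 1], mirroring detections[0, 0]."""
    if not rows:
        return None  # nothing detected in this frame
    # Same idea as np.argmax(detections[0, 0, :, 2]); on ties, both
    # max() and np.argmax keep the first row
    return max(rows, key=lambda row: row[2])

rows = [
    [0, 15, 0.91, 0.25, 0.50, 0.75, 1.00],  # class 15: person
    [0, 8, 0.40, 0.10, 0.10, 0.30, 0.30],   # class 8: cat
]
best = best_detection(rows)
print(int(best[1]), best[2])  # 15 0.91
```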

        # ensure at least one detection is made; detections has shape
        # (1, 1, N, 7), so test N rather than len(detections)
        if detections.shape[2] > 0:
            # find the index of the detection with the largest
            # probability -- out of convenience we are only going
            # to track the first object we find with the largest
            # probability; future examples will demonstrate how to
            # detect and extract *specific* objects
            i = np.argmax(detections[0, 0, :, 2])

            # grab the probability associated with the object along
            # with its class label
            conf = detections[0, 0, i, 2]
            label = CLASSES[int(detections[0, 0, i, 1])]

Filter out weak detections

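First we make sure the detection's confidence exceeds the minimum threshold and that its class matches the label given on the command line (for example person or cat); everything else is filtered out.

Next we compute the (x, y) coordinates of the object's bounding box by scaling the normalized coordinates back to pixels.

Finally we build a dlib rectangle from these coordinates, start the dlib correlation tracker with it, and draw the box and label on the frame.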
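The filter and the coordinate scaling can be isolated into one function. This sketch (accept_and_scale is a hypothetical name) takes a row in the same seven-number layout the script reads from detections[0, 0]. Python's int() truncates toward zero, as astype("int") does in the script, and the sample values are chosen so the products are exact:

```python
CLASSES = ["background", "aeroplane", "bicycle", "bird", "boat",
           "bottle", "bus", "car", "cat", "chair", "cow", "diningtable",
           "dog", "horse", "motorbike", "person", "pottedplant", "sheep",
           "sofa", "train", "tvmonitor"]

def accept_and_scale(row, target_label, min_conf, w, h):
    """Return an integer (startX, startY, endX, endY) box, or None if rejected."""
    conf = row[2]
    label = CLASSES[int(row[1])]
    # Keep only confident detections of the requested class
    if not (conf > min_conf and label == target_label):
        return None
    # Normalized [0, 1] corners -> pixel coordinates, truncated to ints
    x1, y1, x2, y2 = row[3:7]
    return int(x1 * w), int(y1 * h), int(x2 * w), int(y2 * h)

row = [0, 15, 0.91, 0.25, 0.50, 0.75, 1.00]
print(accept_and_scale(row, "person", 0.2, 600, 400))  # (150, 200, 450, 400)
print(accept_and_scale(row, "cat", 0.2, 600, 400))     # None: wrong class
```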

            # filter out weak detections by requiring a minimum
            # confidence
            if conf > args["confidence"] and label == args["label"]:
                # compute the (x, y)-coordinates of the bounding box
                # for the object
                box = detections[0, 0, i, 3:7] * np.array([w, h, w, h])
                (startX, startY, endX, endY) = box.astype("int")

                # construct a dlib rectangle object from the bounding
                # box coordinates and then start the dlib correlation
                # tracker
                tracker = dlib.correlation_tracker()
                rect = dlib.rectangle(startX, startY, endX, endY)
                tracker.start_track(rgb, rect)

                # draw the bounding box and text for the object
                cv2.rectangle(frame, (startX, startY), (endX, endY),
                    (0, 255, 0), 2)
                cv2.putText(frame, label, (startX, startY - 15),
                    cv2.FONT_HERSHEY_SIMPLEX, 0.45, (0, 255, 0), 2)

Handle the case where the tracker already exists

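Update the tracker with the new frame (the update method does the heavy lifting behind the scenes).

Then read the tracked object's position from the tracker. A robot, for instance, could steer toward the position the tracker returns; in this post we simply draw a box around the tracked object and label it.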
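Put together, the two branches form a small state machine: the detector runs on every frame until a detection passes the filter, and from then on only the tracker is updated (it is typically much cheaper than the network). Note that the script never falls back to the detector, even if the tracker drifts. A hypothetical skeleton with fake detect and track functions standing in for the network and for dlib:

```python
def run(frames, detect, track):
    """Hypothetical skeleton of the main loop.
    detect(frame) returns an accepted box or None;
    track(frame) returns the tracker's updated box."""
    tracking = False
    boxes = []
    for frame in frames:
        if not tracking:
            box = detect(frame)
            if box is None:
                boxes.append(None)  # nothing accepted yet; retry next frame
                continue
            tracking = True  # corresponds to creating the dlib tracker
        else:
            box = track(frame)
        boxes.append(box)
    return boxes

calls = {"detect": 0, "track": 0}

def fake_detect(frame):
    calls["detect"] += 1
    return (10, 10, 50, 50) if frame >= 1 else None

def fake_track(frame):
    calls["track"] += 1
    return (10 + frame, 10, 50 + frame, 50)

result = run(range(4), fake_detect, fake_track)
print(result)  # [None, (10, 10, 50, 50), (12, 10, 52, 50), (13, 10, 53, 50)]
print(calls)   # {'detect': 2, 'track': 2}
```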

    # otherwise, we've already performed detection so let's track
    # the object
    else:
        # update the tracker and grab the position of the tracked
        # object
        tracker.update(rgb)
        pos = tracker.get_position()

        # unpack the position object
        startX = int(pos.left())
        startY = int(pos.top())
        endX = int(pos.right())
        endY = int(pos.bottom())

        # draw the bounding box from the correlation object tracker
        cv2.rectangle(frame, (startX, startY), (endX, endY),
            (0, 255, 0), 2)
        cv2.putText(frame, label, (startX, startY - 15),
            cv2.FONT_HERSHEY_SIMPLEX, 0.45, (0, 255, 0), 2)

Finishing the loop

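If an output file was requested, write the frame to the output video.

Display the processed frame (with its box and label); pressing q exits the loop early.

Update the FPS counter.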
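The exit test relies on cv2.waitKey(1), which waits up to 1 ms for a key press and returns -1 if none occurred. On some platforms the returned code can carry bits above the low byte, which is why the script masks it with 0xFF before comparing. A pure-Python sketch of that comparison (should_quit is a hypothetical name):

```python
def should_quit(key_code, quit_key="q"):
    # Keep only the low 8 bits, as `cv2.waitKey(1) & 0xFF` does, then compare
    return (key_code & 0xFF) == ord(quit_key)

print(should_quit(ord("q")))             # True
print(should_quit(0x100000 | ord("q")))  # True: the high bits are masked off
print(should_quit(-1))                   # False: no key pressed (-1 & 0xFF is 255)
```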

    # check to see if we should write the frame to disk
    if writer is not None:
        writer.write(frame)

    # show the output frame
    cv2.imshow("Frame", frame)
    key = cv2.waitKey(1) & 0xFF

    # if the `q` key was pressed, break from the loop
    if key == ord("q"):
        break

    # update the FPS counter
    fps.update()

Cleanup

Print the FPS throughput information and release the video pointers.

# stop the timer and display FPS information
fps.stop()
print("[INFO] elapsed time: {:.2f}".format(fps.elapsed()))
print("[INFO] approx. FPS: {:.2f}".format(fps.fps()))

# check to see if we need to release the video writer pointer
if writer is not None:
    writer.release()

# do a bit of cleanup
cv2.destroyAllWindows()
vs.release()

Complete code and example

# USAGE
# python track_object.py --prototxt mobilenet_ssd/MobileNetSSD_deploy.prototxt \
#     --model mobilenet_ssd/MobileNetSSD_deploy.caffemodel --video input/race.mp4 \
#     --label person --output output/race_output.avi

# import the necessary packages
from imutils.video import FPS
import numpy as np
import argparse
import imutils
import dlib
import cv2

# construct the argument parser and parse the arguments
ap = argparse.ArgumentParser()
ap.add_argument("-p", "--prototxt", required=True,
    help="path to Caffe 'deploy' prototxt file")
ap.add_argument("-m", "--model", required=True,
    help="path to Caffe pre-trained model")
ap.add_argument("-v", "--video", required=True,
    help="path to input video file")
ap.add_argument("-l", "--label", required=True,
    help="class label we are interested in detecting + tracking")
ap.add_argument("-o", "--output", type=str,
    help="path to optional output video file")
ap.add_argument("-c", "--confidence", type=float, default=0.2,
    help="minimum probability to filter weak detections")
args = vars(ap.parse_args())

# initialize the list of class labels MobileNet SSD was trained to
# detect
CLASSES = ["background", "aeroplane", "bicycle", "bird", "boat",
    "bottle", "bus", "car", "cat", "chair", "cow", "diningtable",
    "dog", "horse", "motorbike", "person", "pottedplant", "sheep",
    "sofa", "train", "tvmonitor"]

# load our serialized model from disk
print("[INFO] loading model...")
net = cv2.dnn.readNetFromCaffe(args["prototxt"], args["model"])

# initialize the video stream, dlib correlation tracker, output video
# writer, and predicted class label
print("[INFO] starting video stream...")
vs = cv2.VideoCapture(args["video"])
tracker = None
writer = None
label = ""

# start the frames per second throughput estimator
fps = FPS().start()

# loop over frames from the video file stream
while True:
    # grab the next frame from the video file
    (grabbed, frame) = vs.read()

    # check to see if we have reached the end of the video file
    if frame is None:
        break

    # resize the frame for faster processing and then convert the
    # frame from BGR to RGB ordering (dlib needs RGB ordering)
    frame = imutils.resize(frame, width=600)
    rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)

    # if we are supposed to be writing a video to disk, initialize
    # the writer
    if args["output"] is not None and writer is None:
        fourcc = cv2.VideoWriter_fourcc(*"MJPG")
        writer = cv2.VideoWriter(args["output"], fourcc, 30,
            (frame.shape[1], frame.shape[0]), True)

    # if our correlation object tracker is None we first need to
    # apply an object detector to seed the tracker with something
    # to actually track
    if tracker is None:
        # grab the frame dimensions and convert the frame to a blob
        (h, w) = frame.shape[:2]
        blob = cv2.dnn.blobFromImage(frame, 0.007843, (w, h), 127.5)

        # pass the blob through the network and obtain the detections
        # and predictions
        net.setInput(blob)
        detections = net.forward()

        # ensure at least one detection is made; detections has shape
        # (1, 1, N, 7), so test N rather than len(detections)
        if detections.shape[2] > 0:
            # find the index of the detection with the largest
            # probability -- out of convenience we are only going
            # to track the first object we find with the largest
            # probability; future examples will demonstrate how to
            # detect and extract *specific* objects
            i = np.argmax(detections[0, 0, :, 2])

            # grab the probability associated with the object along
            # with its class label
            conf = detections[0, 0, i, 2]
            label = CLASSES[int(detections[0, 0, i, 1])]

            # filter out weak detections by requiring a minimum
            # confidence
            if conf > args["confidence"] and label == args["label"]:
                # compute the (x, y)-coordinates of the bounding box
                # for the object
                box = detections[0, 0, i, 3:7] * np.array([w, h, w, h])
                (startX, startY, endX, endY) = box.astype("int")

                # construct a dlib rectangle object from the bounding
                # box coordinates and then start the dlib correlation
                # tracker
                tracker = dlib.correlation_tracker()
                rect = dlib.rectangle(startX, startY, endX, endY)
                tracker.start_track(rgb, rect)

                # draw the bounding box and text for the object
                cv2.rectangle(frame, (startX, startY), (endX, endY),
                    (0, 255, 0), 2)
                cv2.putText(frame, label, (startX, startY - 15),
                    cv2.FONT_HERSHEY_SIMPLEX, 0.45, (0, 255, 0), 2)

    # otherwise, we've already performed detection so let's track
    # the object
    else:
        # update the tracker and grab the position of the tracked
        # object
        tracker.update(rgb)
        pos = tracker.get_position()

        # unpack the position object
        startX = int(pos.left())
        startY = int(pos.top())
        endX = int(pos.right())
        endY = int(pos.bottom())

        # draw the bounding box from the correlation object tracker
        cv2.rectangle(frame, (startX, startY), (endX, endY),
            (0, 255, 0), 2)
        cv2.putText(frame, label, (startX, startY - 15),
            cv2.FONT_HERSHEY_SIMPLEX, 0.45, (0, 255, 0), 2)

    # check to see if we should write the frame to disk
    if writer is not None:
        writer.write(frame)

    # show the output frame
    cv2.imshow("Frame", frame)
    key = cv2.waitKey(1) & 0xFF

    # if the `q` key was pressed, break from the loop
    if key == ord("q"):
        break

    # update the FPS counter
    fps.update()

# stop the timer and display FPS information
fps.stop()
print("[INFO] elapsed time: {:.2f}".format(fps.elapsed()))
print("[INFO] approx. FPS: {:.2f}".format(fps.fps()))

# check to see if we need to release the video writer pointer
if writer is not None:
    writer.release()

# do a bit of cleanup
cv2.destroyAllWindows()
vs.release()

Adapted from https://www.pyimagesearch.com/2018/10/22/object-tracking-with-dlib/

The complete source code can also be downloaded from that site; this post is an organized write-up of it.