Configuring the TensorFlow 1.13.1 C++ API on Nvidia Jetson Xavier

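Note: building TensorFlow from source takes a lot of storage space, so it is best to build on a freshly flashed AGX, or to add an SD card or an external drive. I built on a fresh AGX after uninstalling some unneeded software, and the build succeeded.

Configure the Tsinghua mirror

First back up /etc/apt/sources.list:

sudo cp /etc/apt/sources.list /etc/apt/sources.list.bak

Then change sources.list to: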

deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu-ports/ xenial-updates main restricted universe multiverse

deb-src http://mirrors.tuna.tsinghua.edu.cn/ubuntu-ports/ xenial-updates main restricted universe multiverse

deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu-ports/ xenial-security main restricted universe multiverse

deb-src http://mirrors.tuna.tsinghua.edu.cn/ubuntu-ports/ xenial-security main restricted universe multiverse

deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu-ports/ xenial-backports main restricted universe multiverse

deb-src http://mirrors.tuna.tsinghua.edu.cn/ubuntu-ports/ xenial-backports main restricted universe multiverse

deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu-ports/ xenial main universe restricted

deb-src http://mirrors.tuna.tsinghua.edu.cn/ubuntu-ports/ xenial main universe restricted
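The entries above follow a regular pattern, so they can also be generated rather than typed by hand. The following is only a sketch: the function name write_tuna_sources and the MIRROR variable are made up for illustration, and unlike the list above it writes the same four components for every suite (the list above leaves multiverse out of the base suite).

```shell
# Sketch: generate mirror entries for one release codename.
# MIRROR and write_tuna_sources are illustrative names, not part of any tool.
MIRROR="http://mirrors.tuna.tsinghua.edu.cn/ubuntu-ports/"

write_tuna_sources() {
    local codename="$1" out="$2" suite kind
    : > "$out"   # start from an empty file
    for suite in "$codename" "$codename-updates" "$codename-security" "$codename-backports"; do
        for kind in deb deb-src; do
            echo "$kind $MIRROR $suite main restricted universe multiverse" >> "$out"
        done
    done
}

# Example: write to a scratch file first, review it, then copy it into place.
# write_tuna_sources xenial /tmp/sources.list
# sudo cp /tmp/sources.list /etc/apt/sources.list && sudo apt-get update
```

Writing to a scratch file first keeps the real /etc/apt/sources.list untouched until the result has been reviewed.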

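Install gcc-5

The gcc that ships with the AGX is too new and has to be downgraded to version 5; my first build failed because I had not downgraded. The two versions can coexist by switching a symbolic link.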

sudo apt-get install gcc-5

sudo apt-get install g++-5

At this point gcc -v in a terminal still reports the version that ships with the AGX. Delete the old links and create new symbolic links:

sudo rm -rf /usr/bin/gcc /usr/bin/g++

sudo ln -s /usr/bin/gcc-5 /usr/bin/gcc

sudo ln -s /usr/bin/g++-5 /usr/bin/g++

Now gcc -v in a terminal reports gcc version 5.4.0 20160609 (Ubuntu/Linaro 5.4.0-6ubuntu1~16.04.12).
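Since the links have to be switched back once the build is done, it can help to wrap the switch in a small helper that prints where the link pointed before. This is a sketch only; switch_tool_link is a hypothetical name, and for /usr/bin it would have to be run with root privileges.

```shell
# Sketch: repoint <bindir>/<tool> at <bindir>/<tool>-<version>.
# switch_tool_link is a hypothetical helper, not an existing command.
switch_tool_link() {
    local bindir="$1" tool="$2" version="$3"
    local link="$bindir/$tool" target="$bindir/$tool-$version"
    if [ ! -e "$target" ]; then
        echo "missing $target" >&2
        return 1
    fi
    # Print the old target so the change can be undone later.
    if [ -L "$link" ]; then
        echo "previous target of $link: $(readlink "$link")"
    fi
    ln -sfn "$target" "$link"
}

# Example (needs a root shell for /usr/bin):
# switch_tool_link /usr/bin gcc 5
# switch_tool_link /usr/bin g++ 5
```

Refusing to touch the link when the versioned binary is missing avoids ending up with no gcc at all.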

Install JDK 1.8

sudo apt-get install openjdk-8-jdk

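Install bazel

Download bazel 0.19.2

On the AGX, bazel has to be built from the source archive bazel-0.19.2-dist.zip. The bazel version must match the TensorFlow version, otherwise the build fails.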

Before building, create a new bazel folder, move the archive into it, and unzip it inside that folder.

unzip bazel-0.19.2-dist.zip

bash ./compile.sh

The build takes quite a while. The resulting bazel binary is placed in ./output; copy it to /usr/local/bin:

sudo cp ./output/bazel /usr/local/bin

Check the version in a terminal:

bazel version
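Because a mismatched bazel version is a common reason for the TensorFlow build to fail, the check can be scripted. The sketch below assumes that bazel version prints a line starting with "Build label:" followed by the version number; bazel_label is an illustrative name.

```shell
# Sketch: read `bazel version` output on stdin and print the numeric build label.
# Assumes a line of the form "Build label: 0.19.2..." is present.
bazel_label() {
    sed -n 's/^Build label: \([0-9][0-9.]*\).*/\1/p'
}

# Example:
# if [ "$(bazel version | bazel_label)" != "0.19.2" ]; then
#     echo "unexpected bazel version" >&2
# fi
```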

Install protobuf 3.6.1

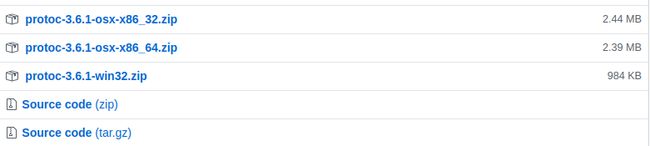

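The protobuf version must match the TensorFlow version. The TensorFlow source file ./tensorflow-1.13.1/tensorflow/workspace.bzl names the protobuf version it needs; TensorFlow 1.13.1 needs protobuf 3.6.1. The build downloads this dependency on its own, but I also downloaded the source and built and installed it myself.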
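To see which protobuf version a given workspace.bzl refers to without reading the whole file, the archive URLs can be searched. This sketch assumes the URLs contain protobuf/archive/v<version>.tar.gz, as in the workspace.bzl excerpt shown later in this post; protobuf_versions is an illustrative name.

```shell
# Sketch: list the distinct protobuf versions referenced by archive URLs in a file.
# Assumes URLs of the form .../protobuf/archive/v<version>.tar.gz.
protobuf_versions() {
    grep -o 'protobuf/archive/v[0-9][0-9.]*[0-9]\.tar\.gz' "$1" \
        | sed 's#.*/v\(.*\)\.tar\.gz#\1#' \
        | sort -u
}

# Example:
# protobuf_versions tensorflow/workspace.bzl
```

If more than one version is printed, the file refers to inconsistent protobuf archives.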

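Download the source from the official protobuf download page: pick the last option, Source code (tar.gz), and extract the archive into your home folder.

Install the build dependencies first, otherwise the build fails:

sudo apt-get install autoconf automake libtool curl make

Then open a terminal in the extracted folder and run the following commands to build and install: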

./autogen.sh

./configure

make

sudo make install

sudo ldconfig

# sudo make uninstall  # uninstall command, in case the wrong version was installed

protoc --version # check the protobuf version

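Install the Eigen library

The TensorFlow build downloads the matching Eigen source on its own; I also downloaded it and built and installed it myself.

Install it with the following commands. The version used here is Eigen 3.3.4: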

wget -t 0 -c https://github.com/eigenteam/eigen-git-mirror/archive/3.3.4.zip

unzip 3.3.4.zip

cd eigen-git-mirror-3.3.4/

mkdir build

cd build

cmake ..

make

sudo make install
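To confirm which Eigen version ended up installed, the version macros in Eigen's Macros.h can be read. This is a sketch: eigen_version is an illustrative name, and the path in the example assumes the default install prefix /usr/local.

```shell
# Sketch: print the Eigen version from the EIGEN_*_VERSION macros in Macros.h.
eigen_version() {
    awk '$1 == "#define" && $2 == "EIGEN_WORLD_VERSION" { w = $3 }
         $1 == "#define" && $2 == "EIGEN_MAJOR_VERSION" { M = $3 }
         $1 == "#define" && $2 == "EIGEN_MINOR_VERSION" { m = $3 }
         END { print w "." M "." m }' "$1"
}

# Example (path assumes the default /usr/local prefix):
# eigen_version /usr/local/include/eigen3/Eigen/src/Core/util/Macros.h
```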

Build the TensorFlow C++ API

Download the source

git clone https://github.com/tensorflow/tensorflow.git

cd tensorflow

git checkout v1.13.1

Some of the code then has to be modified. In the diffs below, - marks a removed line and + marks an added line.

First, ./tensorflow/lite/kernels/internal/BUILD:

diff --git a/tensorflow/lite/kernels/internal/BUILD b/tensorflow/lite/kernels/internal/BUILD

index 4be3226938..7226f96fdf 100644

--- a/tensorflow/lite/kernels/internal/BUILD

+++ b/tensorflow/lite/kernels/internal/BUILD

@@ -22,15 +22,12 @@ HARD_FP_FLAGS_IF_APPLICABLE = select({

NEON_FLAGS_IF_APPLICABLE = select({

":arm": [

"-O3",

- "-mfpu=neon",

],

":armeabi-v7a": [

"-O3",

- "-mfpu=neon",

],

":armv7a": [

"-O3",

- "-mfpu=neon",

],

"//conditions:default": [

"-O3",

Next, ./third_party/aws/BUILD.bazel:

diff --git a/third_party/aws/BUILD.bazel b/third_party/aws/BUILD.bazel

index 5426f79e46..e08f8fc108 100644

--- a/third_party/aws/BUILD.bazel

+++ b/third_party/aws/BUILD.bazel

@@ -24,7 +24,7 @@ cc_library(

"@org_tensorflow//tensorflow:raspberry_pi_armeabi": glob([

"aws-cpp-sdk-core/source/platform/linux-shared/*.cpp",

]),

- "//conditions:default": [],

+ "//conditions:default": glob(["aws-cpp-sdk-core/source/platform/linux-shared/*.cpp",]),

}) + glob([

"aws-cpp-sdk-core/include/**/*.h",

"aws-cpp-sdk-core/source/*.cpp",

Finally, ./third_party/gpus/crosstool/BUILD.tpl:

diff --git a/third_party/gpus/crosstool/BUILD.tpl b/third_party/gpus/crosstool/BUILD.tpl

index db76306ffb..184cd35b87 100644

--- a/third_party/gpus/crosstool/BUILD.tpl

+++ b/third_party/gpus/crosstool/BUILD.tpl

@@ -24,6 +24,7 @@ cc_toolchain_suite(

"x64_windows|msvc-cl": ":cc-compiler-windows",

"x64_windows": ":cc-compiler-windows",

"arm": ":cc-compiler-local",

+ "aarch64": ":cc-compiler-local",

"k8": ":cc-compiler-local",

"piii": ":cc-compiler-local",

"ppc": ":cc-compiler-local",

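Modify the protobuf and eigen version settings inside tensorflow (if the protobuf you installed is already 3.6.1, these steps are not needed):

In the earlier steps we installed our own protobuf. The catch is that tensorflow also downloads a copy of its own and defines version numbers internally, and these versions may well disagree, which ends in a version conflict. So I recommend editing the tensorflow source beforehand so that its internal versions match the one installed on the system.

Open tensorflow/workspace.bzl and change the protobuf version information to the version you installed, for example v3.6.1.tar.gz.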

tf_http_archive(

name = "protobuf_archive",

urls = [

"https://mirror.bazel.build/github.com/google/protobuf/archive/v3.6.1.tar.gz",

"https://github.com/google/protobuf/archive/v3.6.1.tar.gz",

],

sha256 = "3d4e589d81b2006ca603c1ab712c9715a76227293032d05b26fca603f90b3f5b",

strip_prefix = "protobuf-3.6.1",

)

# We need to import the protobuf library under the names com_google_protobuf

# and com_google_protobuf_cc to enable proto_library support in bazel.

# Unfortunately there is no way to alias http_archives at the moment.

tf_http_archive(

name = "com_google_protobuf",

urls = [

"https://mirror.bazel.build/github.com/google/protobuf/archive/v3.6.1.tar.gz",

"https://github.com/google/protobuf/archive/v3.6.1.tar.gz",

],

sha256 = "3d4e589d81b2006ca603c1ab712c9715a76227293032d05b26fca603f90b3f5b",

strip_prefix = "protobuf-3.6.1",

)

tf_http_archive(

name = "com_google_protobuf_cc",

urls = [

"https://mirror.bazel.build/github.com/google/protobuf/archive/v3.6.1.tar.gz",

"https://github.com/google/protobuf/archive/v3.6.1.tar.gz",

],

sha256 = "3d4e589d81b2006ca603c1ab712c9715a76227293032d05b26fca603f90b3f5b",

strip_prefix = "protobuf-3.6.1",

)

Open tensorflow/contrib/cmake/external/protobuf.cmake

and change: set(PROTOBUF_TAG v3.6.1)

Open tensorflow/tools/ci_build/install/install_proto3.sh

and change: PROTOBUF_VERSION="3.6.1"

Open tensorflow/tools/ci_build/protobuf/protobuf_optimized_pip.sh

and change: PROTOBUF_VERSION="3.6.1"

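Next, create swap space. The Xavier's built-in memory is not enough for this build, so swap is required; I did not create it at first, and the build failed with an error after several hours.

First check the size of the system's swap with the free command: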

free -m

       total   used   free  shared  buff/cache  available
Mem:   15692   5695   7437     787        2558      10505
Swap:      0      0      0

Create 8 GB of swap space:

fallocate -l 8G swapfile

ls -lh swapfile

sudo chmod 600 swapfile

ls -lh swapfile

sudo mkswap swapfile

sudo swapon swapfile

swapon -s
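Since a missing swap file only shows up hours into the build, it may be worth checking for it in a script before starting. The sketch below parses the free -m output format shown above; the function names are illustrative, and it assumes English column labels (run LC_ALL=C free -m if the system locale differs).

```shell
# Sketch: read `free -m` output on stdin and print the total swap in MiB.
swap_total_mb() {
    awk '$1 == "Swap:" { print $2 }'
}

# Succeeds only if the reported swap total is at least $1 MiB.
swap_at_least() {
    local total
    total="$(swap_total_mb)"
    [ -n "$total" ] && [ "$total" -ge "$1" ]
}

# Example: refuse to start the build without roughly 8 GB of swap.
# LC_ALL=C free -m | swap_at_least 8000 || echo "not enough swap" >&2
```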

Now start building the tensorflow C++ API.

First run the configure script:

$ ./configure

Please specify the location of python. [Default is /usr/bin/python]:/usr/bin/python3

Found possible Python library paths:

/usr/local/lib/python3.6/dist-packages

/usr/lib/python3.6/dist-packages

Please input the desired Python library path to use. Default is [/usr/local/lib/python3.6/dist-packages]

Do you wish to build TensorFlow with XLA JIT support? [Y/n]: n

No XLA JIT support will be enabled for TensorFlow.

Do you wish to build TensorFlow with OpenCL SYCL support? [y/N]: n

No OpenCL SYCL support will be enabled for TensorFlow.

Do you wish to build TensorFlow with ROCm support? [y/N]: n

No ROCm support will be enabled for TensorFlow.

Do you wish to build TensorFlow with CUDA support? [y/N]: y

CUDA support will be enabled for TensorFlow.

Please specify the CUDA SDK version you want to use. [Leave empty to default to CUDA 10.0]:

Please specify the location where CUDA 10.0 toolkit is installed. Refer to README.md for more details. [Default is /usr/local/cuda]:/usr/local/cuda-10.0

Please specify the cuDNN version you want to use. [Leave empty to default to cuDNN 7]:7.3.1

Please specify the location where cuDNN 7 library is installed. Refer to README.md for more details. [Default is /usr/local/cuda]:/usr/lib/aarch64-linux-gnu

Do you wish to build TensorFlow with TensorRT support? [y/N]: y

TensorRT support will be enabled for TensorFlow.

Please specify the location where TensorRT is installed. [Default is /usr/lib/aarch64-linux-gnu]:

Please specify the locally installed NCCL version you want to use. [Default is to use https://github.com/nvidia/nccl]:

Please specify a list of comma-separated Cuda compute capabilities you want to build with.

You can find the compute capability of your device at: https://developer.nvidia.com/cuda-gpus.

Please note that each additional compute capability significantly increases your build time and binary size. [Default is: 3.5,7.0]: 7.2

Do you want to use clang as CUDA compiler? [y/N]: n

nvcc will be used as CUDA compiler.

Please specify which gcc should be used by nvcc as the host compiler. [Default is /usr/bin/gcc]:/usr/bin/gcc

Do you wish to build TensorFlow with MPI support? [y/N]: n

No MPI support will be enabled for TensorFlow.

Please specify optimization flags to use during compilation when bazel option "--config=opt" is specified [Default is -march=native -Wno-sign-compare]:

Would you like to interactively configure ./WORKSPACE for Android builds? [y/N]: n

Not configuring the WORKSPACE for Android builds.

Then build tensorflow:

bazel build --config=opt --config=cuda --config=nonccl //tensorflow:libtensorflow_cc.so --incompatible_remove_native_http_archive=false --verbose_failures --cxxopt="-D_GLIBCXX_USE_CXX11_ABI=0"

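The build uses a lot of memory, so do not run anything else on the machine and just wait. On success, two files, libtensorflow_cc.so and libtensorflow_framework.so, are generated in ./bazel-bin/tensorflow.

Test the tensorflow C++ API

First pick out the files that are needed.

Copy the contents of the cc and core folders under "tensorflow/bazel-genfiles/tensorflow/" into "tensorflow/tensorflow/", choosing merge and overwrite.

Copy the tensorflow/tensorflow/ folder to your home directory (or to /usr/local/include; either works) and delete the files that are not needed: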

sudo mkdir -p /usr/local/include/tensorflow

sudo cp -r tensorflow /usr/local/include/tensorflow/

sudo find /usr/local/include/tensorflow/tensorflow -type f ! -name "*.h" -delete
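The copy-then-delete approach above first copies the whole source tree and then removes everything that is not a header. An alternative is to copy only the headers in the first place. The following is a sketch, not what I ran: copy_headers is a hypothetical helper, the destination must be an absolute path, and file names containing newlines are not handled.

```shell
# Sketch: copy only *.h files from <src> to <dst>, keeping the directory layout.
# <dst> must be an absolute path because the function changes directory.
copy_headers() {
    local src="$1" dst="$2"
    mkdir -p "$dst"
    (
        cd "$src" || exit 1
        find . -type f -name '*.h' | while IFS= read -r f; do
            mkdir -p "$dst/$(dirname "$f")"
            cp "$f" "$dst/$f"
        done
    )
}

# Example (run from the repository root, as root for /usr/local):
# copy_headers tensorflow /usr/local/include/tensorflow/tensorflow
```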

Copy the third_party folder:

sudo cp -r third_party /usr/local/include/tensorflow/

sudo rm -r /usr/local/include/tensorflow/third_party/py

# Note: newer versions of TensorFlow do not have the following directory

sudo rm -r /usr/local/include/tensorflow/third_party/avro

Copy the .so files:

sudo cp bazel-bin/tensorflow/libtensorflow_cc.so /usr/local/lib/

sudo cp bazel-bin/tensorflow/libtensorflow_framework.so /usr/local/lib/

Next, write CMakeLists.txt:

cmake_minimum_required(VERSION 3.5)

project(test_tensorflow)

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++11")

add_definitions(-D_GLIBCXX_USE_CXX11_ABI=0)

link_directories(/usr/local/lib/)

include_directories(

/usr/local/include/tensorflow

/usr/local/include/eigen3

)

find_package(OpenCV REQUIRED)

add_executable(test_tensorflow main.cpp)

target_link_libraries(test_tensorflow tensorflow_cc tensorflow_framework)

target_link_libraries(test_tensorflow ${OpenCV_LIBS})

Test code
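A minimal main.cpp that fits the CMakeLists.txt above could be generated as follows. This is a sketch of a smoke test, not necessarily the program I originally used: it only creates and closes a session through tensorflow::NewSession, and write_smoke_test is an illustrative helper name.

```shell
# Sketch: write a minimal main.cpp that creates and closes a TensorFlow session.
# write_smoke_test is an illustrative name; the C++ body is a generic smoke test.
write_smoke_test() {
    local dir="$1"
    mkdir -p "$dir"
    cat > "$dir/main.cpp" <<'EOF'
#include <iostream>

#include "tensorflow/core/public/session.h"

int main() {
    tensorflow::Session* session = nullptr;
    tensorflow::Status status =
        tensorflow::NewSession(tensorflow::SessionOptions(), &session);
    if (!status.ok()) {
        std::cerr << status.ToString() << std::endl;
        return 1;
    }
    std::cout << "Session created" << std::endl;
    status = session->Close();
    delete session;
    return status.ok() ? 0 : 1;
}
EOF
}

# Example: generate the file next to CMakeLists.txt, then build and run.
# write_smoke_test . && mkdir -p build && cd build && cmake .. && make && ./test_tensorflow
```

The program touches both tensorflow::SessionOptions and tensorflow::Status::ToString, so it exercises the header paths, the link step, and the ABI setting in one go.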

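#include

Problems encountered during compilation and use

Files may fail to download

bazel build fails with the error "no such package '@icu//': java.io.IOException…", mainly because the release-62-1.tar.gz archive for the icu module fails to download.

I assumed the network was unstable and retried many times, hitting this error almost every time. It turned out that the download needs a proxy, which is why fetching the archive from the terminal during the build kept failing.

Solution: download release-62-1.tar.gz with the Chrome browser (the browser also needs a proxy, but downloading through a browser is much easier), upload the tar.gz to an HTTP file server, and have the build load it from that server.

How do you set up a simple HTTP server locally?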

sudo apt-get install httpd

The output asks you to pick one concrete package to install, so I chose apache2:

sudo apt-get install apache2

sudo apt-get install apache2-dev

Check that it installed correctly:

sudo systemctl status apache2

Enter localhost or the machine's IP address in a browser.

Success! A /var/www/html directory is also created; copy the downloaded tar.gz into /var/www/html.
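Before serving the archive, it may be worth checking that the manually downloaded file is intact, because bazel rejects an archive whose checksum does not match. The sketch below assumes the icu entry in workspace.bzl carries a sha256 value that you copy in by hand; sha256_matches is an illustrative name.

```shell
# Sketch: succeed only if the SHA-256 of <file> equals <expected>.
# <expected> is assumed to be copied from the sha256 field of the archive entry.
sha256_matches() {
    local file="$1" expected="$2" actual
    actual="$(sha256sum "$file" | awk '{ print $1 }')"
    [ "$actual" = "$expected" ]
}

# Example:
# sha256_matches /var/www/html/release-62-1.tar.gz "<sha256 from workspace.bzl>" \
#     || echo "checksum mismatch, download again" >&2
```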

Edit tensorflow/third_party/icu/workspace.bzl and add the following to urls:

"http://localhost/release-62-1.tar.gz"

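Problems encountered when running the test case

(1) fatal error: absl/strings/string_view.h

Solution: git clone https://github.com/abseil/abseil-cpp, then add that library to the include search path.

(2) undefined reference to tensorflow::Status::ToString[abi:cxx11]() const

Solution: set the compile option -D_GLIBCXX_USE_CXX11_ABI=0 by adding add_definitions(-D_GLIBCXX_USE_CXX11_ABI=0) to CMakeLists.txt.

If you use Qt, add DEFINES += _GLIBCXX_USE_CXX11_ABI=0 to the .pro file.

(3) undefined reference to 'tensorflow::SessionOptions::SessionOptions()'

The correct libtensorflow_cc.so cannot be found; add the directory that contains it to the dynamic library search path.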
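One way to add the library directory for the current shell is through LD_LIBRARY_PATH. The helper below is a sketch with an illustrative name (prepend_lib_dir); it avoids adding the same directory twice. For a system-wide setting, running sudo ldconfig after copying the .so files into /usr/local/lib is the usual alternative.

```shell
# Sketch: put <dir> at the front of LD_LIBRARY_PATH unless it is already listed.
prepend_lib_dir() {
    local dir="$1"
    case ":${LD_LIBRARY_PATH:-}:" in
        *":$dir:"*) ;;   # already present, nothing to do
        *) LD_LIBRARY_PATH="$dir${LD_LIBRARY_PATH:+:$LD_LIBRARY_PATH}" ;;
    esac
    export LD_LIBRARY_PATH
}

# Example:
# prepend_lib_dir /usr/local/lib
# ./test_tensorflow
```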

And with that, the tensorflow C++ API is built successfully!

References:

https://www.jianshu.com/p/746ec48edbdb

https://blog.csdn.net/yz2zcx/article/details/83153588

Tensorflow C++ from training to deployment (1): environment setup

https://www.cnblogs.com/buyizhiyou/p/10405634.html