Reading notes on "21 TensorFlow Projects" (Part 1)

0 Chapter 1: Handwritten digit recognition

1 Download the data

The dataset consists of four compressed files:

| Archive file | Contents (size) |
|---|---|
| train-images-idx3-ubyte.gz | training set images (9912422 bytes) |
| train-labels-idx1-ubyte.gz | training set labels (28881 bytes) |
| t10k-images-idx3-ubyte.gz | test set images (1648877 bytes) |
| t10k-labels-idx1-ubyte.gz | test set labels (4542 bytes) |

Download them from http://yann.lecun.com/exdb/mnist/

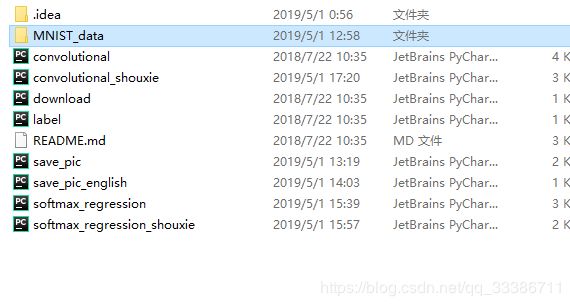

Save the files in a folder named MNIST_data.
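The "idx3" and "idx1" in the file names refer to the IDX format described on the MNIST page: a big-endian header (two zero bytes, a type code, the number of dimensions, then one 32-bit size per dimension) followed by the raw bytes. As a minimal sketch of reading such a file without TensorFlow, assuming that standard layout (the helper names parse_idx and load_idx are made up for this note):

```python
import gzip
import struct

def parse_idx(raw):
    """Parse the bytes of an uncompressed IDX file into (dims, data)."""
    # Magic number: two zero bytes, a type code (0x08 = unsigned byte), number of dimensions.
    zero, dtype, ndim = struct.unpack('>HBB', raw[:4])
    if zero != 0 or dtype != 0x08:
        raise ValueError('not an unsigned-byte IDX file')
    # One big-endian 32-bit size per dimension follows the magic number.
    dims = struct.unpack('>' + 'I' * ndim, raw[4:4 + 4 * ndim])
    data = raw[4 + 4 * ndim:]
    return dims, data

def load_idx(path):
    """Read a gzip-compressed IDX file, e.g. MNIST_data/train-labels-idx1-ubyte.gz."""
    with gzip.open(path, 'rb') as f:
        return parse_idx(f.read())

# Synthetic stand-in for a label file holding the three labels 5, 0, 4.
fake_labels = struct.pack('>HBBI', 0, 0x08, 1, 3) + bytes([5, 0, 4])
dims, data = parse_idx(fake_labels)
print(dims, list(data))  # (3,) [5, 0, 4]
```

For the real image files the dimensions would come out as (number of images, 28, 28), and each byte is one pixel intensity from 0 to 255.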

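2 Classification with the softmax function

First import the packages and check whether the data exists (read_data_sets downloads the files into MNIST_data if they are missing).

import tensorflow as tf
from tensorflow.examples.tutorials.mnist import input_data
mnist = input_data.read_data_sets('MNIST_data', one_hot=True)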

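Next, feed the data in through placeholders and create the weight variable W and the bias b.

# Placeholder for the input images (each one a 784-dimensional vector)
x = tf.placeholder(tf.float32, [None, 784])
# Weights
W = tf.Variable(tf.zeros([784, 10]))
# Bias
b = tf.Variable(tf.zeros([10]))
# Model output: class probabilities
y = tf.nn.softmax(tf.matmul(x, W) + b)
# Placeholder for the true labels (one-hot)
y_ = tf.placeholder(tf.float32, [None, 10])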
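To make the shapes in tf.matmul(x, W) + b concrete, here is a small sketch in plain Python. It uses 4 input features and 3 classes as stand-ins for 784 and 10, and the helper name affine is only for illustration:

```python
def affine(x_batch, W, b):
    """Compute x_batch @ W + b for nested lists (no NumPy needed)."""
    n_out = len(b)
    return [
        [sum(x[k] * W[k][j] for k in range(len(x))) + b[j] for j in range(n_out)]
        for x in x_batch
    ]

n_in, n_out = 4, 3                        # stand-ins for 784 and 10
W = [[0.0] * n_out for _ in range(n_in)]  # zeros, like tf.zeros([784, 10])
b = [0.0] * n_out                         # zeros, like tf.zeros([10])
x_batch = [[0.1, 0.2, 0.3, 0.4], [1.0, 0.0, 0.0, 1.0]]  # a batch of 2 samples

logits = affine(x_batch, W, b)
print(len(logits), len(logits[0]))  # 2 3
print(logits)                       # [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
```

A batch of N samples gives an N x 10 result in the real model. Because W and b start at zero, every class gets the same score until training updates them.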

The softmax function

$$\mathrm{Softmax}(x)_i = \frac{e^{x_i}}{\sum_{j} e^{x_j}}$$

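For example, suppose each sample produces two output values (one per class) and we want to decide which class it belongs to. The computation goes as follows:

| No. | Attribute 1 | Attribute 2 | Label |
|---|---|---|---|
| 1 | 0.5 | 1.2 | [0,1] |
| 2 | 2.6 | 0 | [1,0] |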

For sample 1:

$$\frac{e^{0.5}}{e^{1.2}+e^{0.5}} = 0.3318, \qquad \frac{e^{1.2}}{e^{1.2}+e^{0.5}} = 0.6682$$

For sample 2:

$$\frac{e^{2.6}}{e^{2.6}+e^{0}} = 0.9309, \qquad \frac{e^{0}}{e^{2.6}+e^{0}} = 0.0691$$

The largest probability gives the predicted class; comparing it with the label shows that sample 1 belongs to the second class and sample 2 to the first.
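The two samples can be checked with a few lines of plain Python. This is only a sketch of the formula, not what tf.nn.softmax does internally; subtracting the maximum first is a common trick that leaves the result unchanged:

```python
import math

def softmax(values):
    """Return softmax probabilities for a list of scores."""
    # Subtracting the maximum does not change the result but avoids overflow.
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

for scores in ([0.5, 1.2], [2.6, 0.0]):
    probs = softmax(scores)
    predicted = probs.index(max(probs))  # position of the largest probability
    print([round(p, 4) for p in probs], 'predicted class index:', predicted)
```

The first sample comes out at roughly 0.33 / 0.67 (class index 1) and the second at roughly 0.93 / 0.07 (class index 0), which agrees with the labels [0,1] and [1,0].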

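# Cross-entropy loss. reduce_sum has no axis here, so it already sums over
# the whole batch and reduce_mean is applied to a scalar.
cross_entropy = tf.reduce_mean(-tf.reduce_sum(y_ * tf.log(y)))
# Gradient descent with learning rate 0.001
train_step = tf.train.GradientDescentOptimizer(0.001).minimize(cross_entropy)
# Open a session
sess = tf.InteractiveSession()
# Initialize the variables
tf.global_variables_initializer().run()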

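# Run 1000 steps of gradient descent
for _ in range(1000):
    # Take 100 training samples from mnist.train.
    # batch_xs is image data of shape (100, 784), batch_ys holds the true labels, shape (100, 10).
    # batch_xs and batch_ys correspond to the two placeholders x and y_.
    batch_xs, batch_ys = mnist.train.next_batch(100)
    # Run train_step in the session; the placeholder values must be fed in.
    sess.run(train_step, feed_dict={x: batch_xs, y_: batch_ys})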

# Which predictions are correct
correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(y_, 1))
# Prediction accuracy; both of these are Tensors
accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
# Running a Tensor in the session returns its value.
# Here we get the accuracy of the final model on the test set.
print(sess.run(accuracy, feed_dict={x: mnist.test.images, y_: mnist.test.labels}))
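To see what the loss and the accuracy actually compute, here is a plain-Python sketch on a two-sample batch. It mirrors -tf.reduce_sum(y_ * tf.log(y)) and the argmax comparison; the probabilities are rounded illustrative values and the function names are made up for this note:

```python
import math

def cross_entropy(labels, probs):
    """Sum of -label * log(prob) over every sample and class in the batch."""
    return -sum(
        t * math.log(p)
        for sample_t, sample_p in zip(labels, probs)
        for t, p in zip(sample_t, sample_p)
    )

def accuracy(labels, probs):
    """Fraction of samples whose largest probability sits at the label's position."""
    hits = [
        sample_p.index(max(sample_p)) == sample_t.index(max(sample_t))
        for sample_t, sample_p in zip(labels, probs)
    ]
    return sum(hits) / len(hits)

labels = [[0, 1], [1, 0]]              # one-hot labels
probs = [[0.33, 0.67], [0.93, 0.07]]   # rounded softmax outputs for two samples
print(cross_entropy(labels, probs))
print(accuracy(labels, probs))  # 1.0
```

Only the probability assigned to the true class contributes to the loss, so it shrinks toward 0 as that probability approaches 1.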

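3 Classification with a two-layer convolutional network

Import the libraries and the data

import tensorflow as tf
from tensorflow.examples.tutorials.mnist import input_data
# Read the data
mnist = input_data.read_data_sets('MNIST_data', one_hot=True)
# x is the placeholder for the training images, y_ the placeholder for their labels
x = tf.placeholder(tf.float32, [None, 784])
y_ = tf.placeholder(tf.float32, [None, 10])
# Restore each image from a 784-dimensional vector to a 28x28 matrix with one channel
x_image = tf.reshape(x, [-1, 28, 28, 1])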
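The reshape is row-major: pixel k of the flat vector ends up at row k // 28, column k % 28, and the -1 lets TensorFlow infer the batch size. A minimal single-image sketch in plain Python (to_image is a hypothetical helper, and the channel dimension is left out):

```python
def to_image(flat, height=28, width=28):
    """Reshape a flat row-major vector into a height x width list of rows."""
    if len(flat) != height * width:
        raise ValueError('expected %d values, got %d' % (height * width, len(flat)))
    return [flat[r * width:(r + 1) * width] for r in range(height)]

flat = list(range(784))            # stand-in for one MNIST image vector
image = to_image(flat)
print(len(image), len(image[0]))   # 28 28
print(image[1][0], image[27][27])  # 28 783
```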

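Define helper functions for the convolution and pooling layers

def weight_variable(shape):
    # Weights drawn from a truncated normal distribution, standard deviation 0.1
    initial = tf.truncated_normal(shape, stddev=0.1)
    return tf.Variable(initial)

def bias_variable(shape):
    # Biases start at a small positive constant
    initial = tf.constant(0.1, shape=shape)
    return tf.Variable(initial)

def conv2d(x, W):
    # Stride-1 convolution; 'SAME' padding keeps the spatial size
    return tf.nn.conv2d(x, W, strides=[1, 1, 1, 1], padding='SAME')

def max_pool_2x2(x):
    # 2x2 max pooling with stride 2 halves the height and width
    return tf.nn.max_pool(x, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')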
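What 2x2 max pooling with stride 2 does is easiest to see on a tiny input. The sketch below handles a single channel with even height and width (so padding never comes into play); max_pool_2x2_demo is an illustrative name, not a TensorFlow function:

```python
def max_pool_2x2_demo(image):
    """2x2 max pooling with stride 2 on a 2-D list with even height and width."""
    height, width = len(image), len(image[0])
    return [
        [
            max(image[r][c], image[r][c + 1], image[r + 1][c], image[r + 1][c + 1])
            for c in range(0, width, 2)
        ]
        for r in range(0, height, 2)
    ]

image = [
    [1, 2, 0, 1],
    [3, 4, 1, 0],
    [0, 0, 5, 6],
    [1, 0, 7, 8],
]
print(max_pool_2x2_demo(image))  # [[4, 1], [1, 8]]
```

Each non-overlapping 2x2 block is replaced by its largest value, so a 4x4 input becomes 2x2.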

Set up the first convolutional layer

# First convolution: 5x5 kernels, 1 input channel, 32 output channels
w_conv1 = weight_variable([5, 5, 1, 32])
b_conv1 = bias_variable([32])
h_conv1 = tf.nn.relu(conv2d(x_image, w_conv1) + b_conv1)
h_pool1 = max_pool_2x2(h_conv1)

Set up the second convolutional layer

# Second convolution: 5x5 kernels, 32 input channels, 64 output channels
w_conv2 = weight_variable([5, 5, 32, 64])
b_conv2 = bias_variable([64])
h_conv2 = tf.nn.relu(conv2d(h_pool1, w_conv2) + b_conv2)
h_pool2 = max_pool_2x2(h_conv2)
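The 7*7*64 in the fully connected layer that follows comes from tracking the spatial size through these layers. With 'SAME' padding the output size is the input size divided by the stride, rounded up. A quick sketch (same_output_size is a made-up helper):

```python
import math

def same_output_size(size, stride):
    """Spatial output size of a 'SAME'-padded convolution or pooling op."""
    return math.ceil(size / stride)

size = 28
for name, stride in [('conv1', 1), ('pool1', 2), ('conv2', 1), ('pool2', 2)]:
    size = same_output_size(size, stride)
    print(name, size)

channels = 64  # output channels of the second convolution
print(size * size * channels)  # 3136
```

So the image goes 28 -> 28 -> 14 -> 14 -> 7, and flattening the 7 x 7 x 64 output gives 3136 values per sample.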

# Fully connected layer: 7*7*64 inputs, 1024 outputs
w_fc1 = weight_variable([7 * 7 * 64, 1024])
b_fc1 = bias_variable([1024])
h_pool2_flat = tf.reshape(h_pool2, [-1, 7 * 7 * 64])
h_fc1 = tf.nn.relu(tf.matmul(h_pool2_flat, w_fc1) + b_fc1)
# Dropout; keep_prob is fed in at run time
keep_prob = tf.placeholder(tf.float32)
h_fc1_drop = tf.nn.dropout(h_fc1, keep_prob)
# Output layer: 1024 inputs, 10 class scores (logits)
w_fc2 = weight_variable([1024, 10])
b_fc2 = bias_variable([10])
y_conv = tf.matmul(h_fc1_drop, w_fc2) + b_fc2

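# Loss function: softmax cross-entropy computed directly from the logits
cross_entropy = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(labels=y_, logits=y_conv))
# Adagrad optimizer, learning rate 1e-4 (TensorFlow's own Deep MNIST tutorial
# uses tf.train.AdamOptimizer(1e-4) for this architecture)
train_step = tf.train.AdagradOptimizer(1e-4).minimize(cross_entropy)
# Accuracy
correct_prediction = tf.equal(tf.argmax(y_conv, 1), tf.argmax(y_, 1))
accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))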
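Unlike section 2, the loss here takes the raw scores y_conv rather than softmax probabilities, which lets the softmax and the logarithm be combined in a numerically stable way. A rough plain-Python sketch of that idea for one sample, using the log-sum-exp trick (this shows the math, not TensorFlow's actual implementation, and the function name is made up):

```python
import math

def softmax_cross_entropy_demo(label, logits):
    """Cross-entropy of one sample, computed directly from unnormalised logits."""
    # log(sum(exp(z))) computed stably by factoring out the largest logit.
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    # -log(softmax(z)_i) equals log_sum_exp - z_i; weight by the one-hot label.
    return sum(t * (log_sum_exp - z) for t, z in zip(label, logits))

print(softmax_cross_entropy_demo([0, 1], [0.5, 1.2]))
# A logit of 1000 would overflow a naive exp(), but is fine here.
print(softmax_cross_entropy_demo([1, 0], [1000.0, 0.0]))  # 0.0
```

The first call gives the same value as taking -log of the softmax probability of the true class (about 0.67 for this sample).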

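# Create a session and initialize the variables
sess = tf.InteractiveSession()
sess.run(tf.global_variables_initializer())

# Train for 20000 steps with batches of 50
for i in range(20000):
    batch = mnist.train.next_batch(50)
    # Every 100 steps, report accuracy on the current batch (dropout switched off)
    if i % 100 == 0:
        train_accuracy = accuracy.eval(feed_dict={x: batch[0], y_: batch[1], keep_prob: 1.0})
        print('step %d, training accuracy %g' % (i, train_accuracy))
    # Training step with dropout: keep each unit with probability 0.5
    train_step.run(feed_dict={x: batch[0], y_: batch[1], keep_prob: 0.5})

# Accuracy on the test set (dropout switched off)
print('test accuracy %g' % accuracy.eval(feed_dict={x: mnist.test.images, y_: mnist.test.labels, keep_prob: 1.0}))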
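The keep_prob values explain themselves once dropout is written out. tf.nn.dropout keeps each element with probability keep_prob and scales the kept ones by 1/keep_prob, so the expected sum is unchanged. A small plain-Python sketch of that behaviour (the function below is an illustration, not the TensorFlow code):

```python
import random

def dropout(values, keep_prob, rng=random):
    """Inverted dropout: keep each value with probability keep_prob and rescale it."""
    if not 0.0 < keep_prob <= 1.0:
        raise ValueError('keep_prob must be in (0, 1]')
    return [v / keep_prob if rng.random() < keep_prob else 0.0 for v in values]

rng = random.Random(0)          # fixed seed so the run is repeatable
activations = [1.0, 2.0, 3.0, 4.0]
print(dropout(activations, 0.5, rng))   # training: each value is either 0 or doubled
print(dropout(activations, 1.0, rng))   # evaluation: [1.0, 2.0, 3.0, 4.0]
```

With keep_prob set to 1.0 nothing is dropped, which is why the accuracy evaluations feed 1.0 and only the training step feeds 0.5.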