分别将yawn数据集的Normal Talking 和 Yawning的视频单独截取出来。

yawn_split_video

import os

import cv2

import numpy as np

import sys

print(sys.path)

NUM_ELEMENT = 3

NUM_VIDEO_CLASS = 4

TAGS = ['Normal', 'Talking', 'Yawning']

DATA_SET_LIST = ['train_lst', 'test_lst']

for data_set in DATA_SET_LIST:

split_file_list = ['../dataset/' + data_set + '/male_yawn_split.lst',

'../dataset/' + data_set + '/female_yawn_split.lst',

'../dataset/' + data_set + '/dash_female_yawn_split_yawning.lst',

'../dataset/' + data_set + '/dash_male_yawn_split_yawning.lst']

dataset_path_list = ['../YawDD_dataset/Mirror/Male_mirror',

'../YawDD_dataset/Mirror/Female_mirror',

'../YawDD_dataset/Dash/Female',

'../YawDD_dataset/Dash/Male']

save_path_list = ['../dataset/' + data_set + '/mirror_male_split_output',

'../dataset/' + data_set + '/mirror_female_split_output',

'../dataset/' + data_set + '/dash_female_split_output',

'../dataset/' + data_set + '/dash_male_split_output']

for video_index in range(NUM_VIDEO_CLASS):

split_file = split_file_list[video_index]

dataset_path = dataset_path_list[video_index]

save_path = save_path_list[video_index]

for i in range(len(TAGS)):

path = os.path.join(save_path, TAGS[i])

if os.path.exists(path) is False:

os.makedirs(path)

print('split file: ', split_file)

fd = open(split_file)

for line in fd.readlines():

line = line.strip('\n')

line = line.split(' ')

video_file = os.path.join(dataset_path, line[0])

print('video_file: ',video_file)

num_clips = int((len(line) - 1) / NUM_ELEMENT)

clips_info = np.array(list(map(int, line[1:])))

clips_info = clips_info.reshape(num_clips, NUM_ELEMENT)

cap = cv2.VideoCapture(video_file)

fcnt = cap.get(cv2.CAP_PROP_FRAME_COUNT)

fps = cap.get(cv2.CAP_PROP_FPS)

fsize = (int(cap.get(cv2.CAP_PROP_FRAME_WIDTH)),

int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT)))

print(video_file)

for i in range(num_clips):

start_slice = clips_info[i, 0]

fstart = int(start_slice * fps)

if clips_info[i, 1] > 0:

end_slice = clips_info[i, 1]

fend = min(int(end_slice * fps), fcnt)

else:

fend = int(fcnt) - 20

if fstart >= fend:

print('=======start > end, continue======')

continue

print('%d --> %d, tag: %s' % (fstart, fend, TAGS[clips_info[i, 2]]))

assert(fstart > 0 or fend <= fcnt)

cap.set(cv2.CAP_PROP_POS_FRAMES, fstart)

[_, file_name] = os.path.split(video_file)

[video_name, _] = os.path.splitext(file_name)

clip_file = '%s/%s/%s-clip-%d.avi' % (save_path, TAGS[clips_info[i, 2]], video_name, i)

fourcc = cv2.VideoWriter_fourcc('X', 'V', 'I', 'D')

writer = cv2.VideoWriter(clip_file, fourcc, fps=fps, frameSize=fsize)

for n in range(fend-fstart):

success, img = cap.read()

writer.write(img)

writer.release()

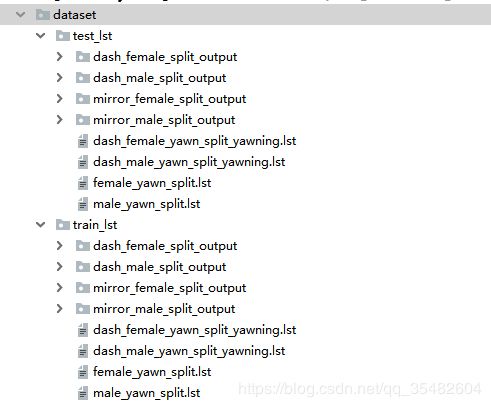

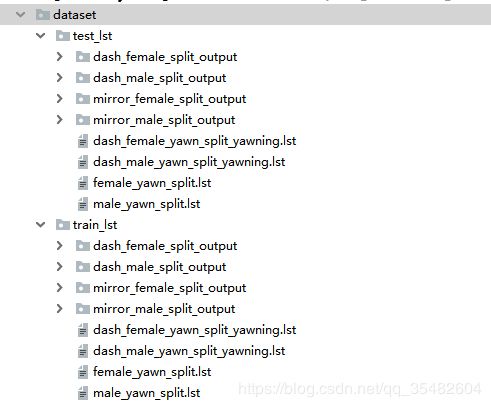

最后产生的结果:

把测试集相应的文件夹的各个类别的视频中的人脸截取出来,每隔两帧截取一张图片,并把标注的结果保存下来。

import cv2

import numpy as np

import face_detector as fd

import os

import multiprocessing

def drawBoxes(im, boxes):

x1 = boxes[0]

y1 = boxes[1]

x2 = boxes[2]

y2 = boxes[3]

cv2.putText(im, 'face', (int(x1), int(y1)), 5, 1.0, [255, 0, 0])

cv2.rectangle(im, (int(x1), int(y1)), (int(x2), int(y2)), (0, 255, 0), 1)

return im

def rectify_bbox(bbox, img):

max_height = img.shape[0]

max_width = img.shape[1]

bbox[0] = max(bbox[0], 0)

bbox[1] = max(bbox[1], 0)

bbox[2] = min(bbox[2], max_width)

bbox[3] = min(bbox[3], max_height)

return bbox

def process(video_dir, save_dir, train_file_list):

minsize = 20

total_face = 0

with open(train_file_list, 'a+') as f_trainList:

for dir_path, dir_names, _ in os.walk(video_dir):

print('----------------------------------------')

print('dir_path: ',dir_path)

print('dir_names: ',dir_names)

print('_: ',_)

for dir_name in dir_names:

print('video_dir_name : ' + dir_path + '/' + dir_name)

video_dir_name = os.path.join(dir_path, dir_name)

if dir_name in ['Normal']:

label = '0'

elif dir_name in ['Talking']:

label = '0'

elif dir_name in ['Yawning']:

label = '1'

else:

print("Too bad, label invalid.")

continue

video_names = os.listdir(video_dir_name)

print('video_names: ',video_names)

for video_name in video_names:

frame_count = -1

cap = cv2.VideoCapture(os.path.join(video_dir_name, video_name))

if cap.isOpened():

ftotal = cap.get(cv2.CAP_PROP_FRAME_COUNT)

read_success = True

else:

read_success = False

while read_success:

read_success, img = cap.read()

try:

img.shape

except:

break

frame_count += 1

if frame_count % SAMPLE_STEP == 0:

conf, raw_boxes = fd.get_facebox(image=img, threshold=0.7)

if len(raw_boxes) == 0:

print('***too sad, the face can not be detected!')

continue

elif len(raw_boxes) == 1:

bbox = raw_boxes[0]

elif len(raw_boxes) > 1:

bbox = raw_boxes[0]

bbox = rectify_bbox(bbox, img)

x = int(bbox[0])

y = int(bbox[1])

w = int(bbox[2] - bbox[0])

h = int(bbox[3] - bbox[1])

face = img[y:y + h, x:x + w, :]

face_sp = np.shape(face)

face_resize = face

face_resize = cv2.resize(face_resize, (CROPPED_WIDTH, CROPPED_HEIGHT))

if DEBUG:

cv2.imshow('face', face)

ch = cv2.waitKey(40000) & 0xFF

cv2.imshow('face_trans', face_resize)

ch = cv2.waitKey(40000) & 0xFF

img = drawBoxes(img, bbox)

cv2.imshow('img', img)

ch = cv2.waitKey(40000) & 0xFF

if ch == 27:

break

total_face += 1

img_file_name = video_name.split('.')[0]

img_file_name = img_file_name + '-' + str(total_face) + '_' + str(label) + '.jpg'

img_save_path = os.path.join(save_dir, img_file_name)

f_trainList.write(img_save_path + ' ' + label + '\n')

cv2.imwrite(img_save_path, face_resize)

cap.release()

print('Total face img: {}'.format(total_face))

if __name__ == "__main__":

SAMPLE_STEP = 2

NUM_WORKER = 1

DEBUG = False

CROPPED_WIDTH = 112

CROPPED_HEIGHT = 112

video_dir = '../dataset/test_lst'

face_save_dir = '../extracted_face/face_image'

if not os.path.exists(face_save_dir):

os.makedirs(face_save_dir)

train_file_list_dir = '../extracted_face/'

file_list_name = 'test.txt'

process(video_dir, face_save_dir, train_file_list_dir+file_list_name)

将数据集分别划分为训练集,验证集,测试集

"""

This script split dataset into four sets: test.txt, trainval.txt, train.txt, val.txt

"""

import os

import random

class ListGenerator:

"""Generate a list of specific files in directory."""

def __init__(self):

"""Initialization"""

self.file_list = []

def generate_list(self, src_dir, save_dir, format_list=['jpg', 'png'], train_val_ratio=0.9, train_ratio=0.9):

"""Generate the file list of format_list in target_dir

Args:

target_dir: the directory in which files will be listed.

format_list: a list of file extention names.

Returns:

a list of file urls.

"""

self.src_dir = src_dir

self.save_dir = save_dir

self.format_list = format_list

self.train_val_ratio = train_val_ratio

self.train_ratio = train_ratio

pos_num = 0

for file_path, _, current_files in os.walk(self.src_dir, followlinks=False):

current_image_files = []

for filename in current_files:

if filename.split('.')[-1] in self.format_list:

current_image_files.append(filename)

sample_num = len(current_image_files)

file_list_index = range(sample_num)

tv = int(sample_num * self.train_val_ratio)

tr = int(tv * self.train_ratio)

print("train_val_num: {}\ntrain_num: {}".format(tv, tr))

train_val = random.sample(file_list_index, tv)

train = random.sample(train_val, tr)

ftrain_val = open(self.save_dir + '/trainval.txt', 'w')

ftest = open(self.save_dir + '/test.txt', 'w')

ftrain = open(self.save_dir + '/train.txt', 'w')

fval = open(self.save_dir + '/val.txt', 'w')

for i in file_list_index:

label = current_image_files[i].split('.')[0]

label = label.split('_')[-1]

if label == '1':

pos_num += 1

name = os.path.join(self.src_dir, current_image_files[i]) + ' ' + str(label) + '\n'

if i in train_val:

ftrain_val.write(name)

if i in train:

ftrain.write(name)

else:

fval.write(name)

else:

ftest.write(name)

print('positive num : {}'.format(pos_num))

ftrain_val.close()

ftrain.close()

fval.close()

ftest.close()

return self.file_list

def main():

"""MAIN"""

lg = ListGenerator()

lg.generate_list(src_dir='../extracted_face/face_image',

save_dir='../extracted_face')

print("Done !!")

if __name__ == '__main__':

main()