Python Implementation of ELM (Extreme Learning Machine) Classification and Regression (with a Link to My Own Data and a Derivation)

The main ELM program is adapted from @QuantumEntanglement, with a few small modifications. Many thanks to that blogger.

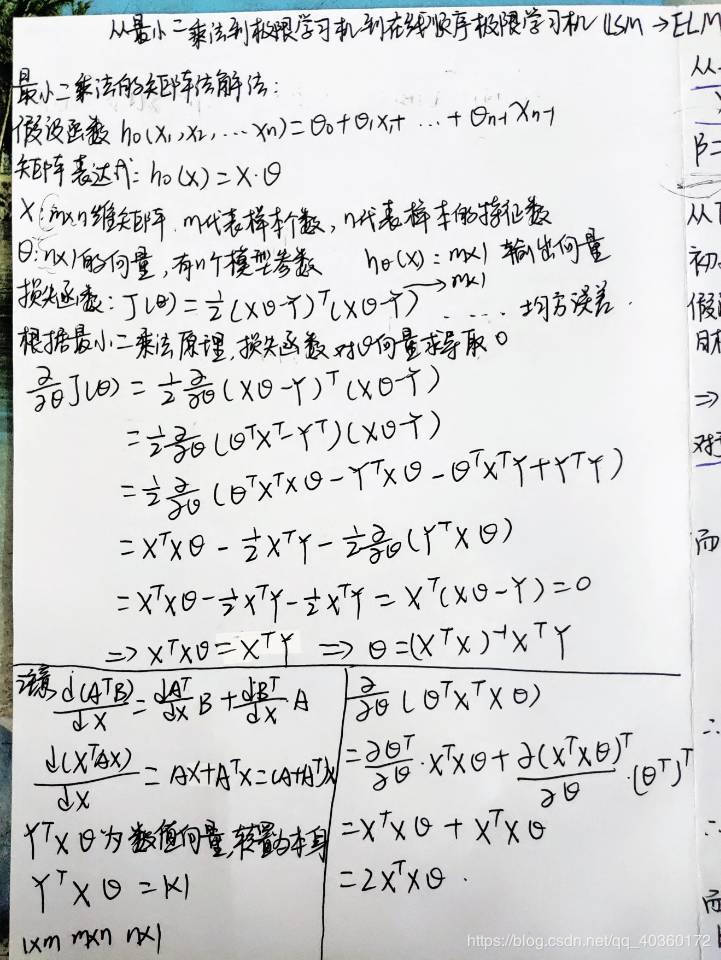

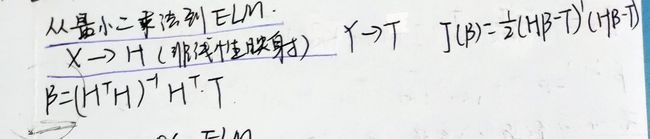

My own understanding of the derivation: from least squares to the extreme learning machine
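For reference, the closed-form solution computed by `classifisor_train` and `regressor_train` can be summarized as below. This sketch assumes the standard ridge-regularized least-squares view of ELM: the random input weights $W$ and bias $b$ stay fixed, and only the output weights $\beta$ are solved for. The symbols match the code ($H$ is `H0`, $\lambda$ is `len(x) / C`, $N$ is the number of training samples, $L$ the number of hidden nodes). They are not transcribed from the images.

$$
H = g\left(XW + \mathbf{1}_N b^\top\right) \in \mathbb{R}^{N\times L}
$$

$$
\min_{\beta}\; \lVert H\beta - T\rVert_F^2 + \lambda\lVert\beta\rVert_F^2
\;\;\Longrightarrow\;\;
2H^\top(H\beta - T) + 2\lambda\beta = 0
\;\;\Longrightarrow\;\;
\beta = \left(H^\top H + \lambda I_L\right)^{-1} H^\top T
$$

As $\lambda \to 0$ this tends to the ordinary least-squares solution $\beta = H^{+}T$ (Moore-Penrose pseudoinverse). Prediction is $\hat T = g(X_{\text{test}}W + \mathbf{1} b^\top)\,\beta$. For classification, the predicted class is the index of the largest entry in each row of $\hat T$. Note that $\lambda$ multiplies the identity, so it is added only to the diagonal of $H^\top H$.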

1. Classification

```python
# -*- coding: utf-8 -*-
"""
Created on Sun Mar 29 10:59:58 2020

@author: 小小飞在路上
"""
import numpy as np
import pandas as pd
from sklearn import metrics
from sklearn.model_selection import train_test_split  # dataset splitting
from sklearn.preprocessing import OneHotEncoder, StandardScaler  # label encoding / feature scaling
from sklearn.utils import shuffle


class RELM_HiddenLayer:
    """
    Regularized extreme learning machine.

    :param x: training-set features X used to initialize the machine
    :param num: number of hidden-layer nodes
    :param C: reciprocal of the regularization coefficient
    """

    def __init__(self, x, num, C=10):
        columns = x.shape[1]
        rnd = np.random.RandomState()
        # Input weights w: random and never trained
        self.w = rnd.uniform(-1, 1, (columns, num))
        # Bias b: one random value per hidden node, stored as a (1, num) row so it
        # broadcasts over any number of samples (training or test)
        self.b = rnd.uniform(-0.4, 0.4, (1, num))
        # Hidden-layer output matrix H0, shape (n_samples, num)
        self.H0 = self.softplus(np.dot(x, self.w) + self.b)
        self.C = C
        # P = (H0^T H0 + (n/C) I)^-1: the regularization term goes on the diagonal.
        # Plain ndarrays are used; for real-valued data, .T equals the conjugate transpose.
        self.P = np.linalg.inv(self.H0.T @ self.H0 + len(x) / self.C * np.eye(num))

    @staticmethod
    def sigmoid(x):
        """
        Sigmoid activation.

        :param x: pre-activation input
        :return: activation value
        """
        return 1.0 / (1 + np.exp(-x))

    @staticmethod
    def softplus(x):
        """
        Softplus activation log(1 + e^x), computed without overflow.

        :param x: pre-activation input
        :return: activation value
        """
        return np.logaddexp(0, x)

    @staticmethod
    def tanh(x):
        """
        Tanh activation.

        :param x: pre-activation input
        :return: activation value
        """
        return np.tanh(x)

    # Classification: training
    def classifisor_train(self, T):
        """
        After initializing the machine, pass in the matching labels T.

        :param T: labels for the features X (class indices or a one-hot matrix)
        :return: hidden-to-output weights beta
        """
        if T.ndim == 1:
            # Class indices -> one-hot matrix
            self.en_one = OneHotEncoder()
            T = self.en_one.fit_transform(T.reshape(-1, 1)).toarray()
        all_m = np.dot(self.P, self.H0.T)
        self.beta = np.dot(all_m, T)
        return self.beta

    # Classification: prediction
    def classifisor_test(self, test_x):
        """
        Predict labels for new features.

        :param test_x: features X whose labels are to be predicted
        :return: predicted class indices
        """
        h = self.softplus(np.dot(test_x, self.w) + self.b)
        result = np.dot(h, self.beta)
        return np.argmax(result, axis=1)
```
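The classification class defines three activations but only calls `softplus`. The naive `np.log(1 + np.exp(x))` also overflows to `inf` once `x` exceeds roughly 709. The sketch below gives numerically safer NumPy versions. The ±500 clipping bound in `sigmoid` is an arbitrary assumption. The identities it relies on (the derivative of softplus is sigmoid, and tanh(x) = 2·sigmoid(2x) − 1) are standard calculus facts, not claims from the post.

```python
import numpy as np

def sigmoid(x):
    # 1 / (1 + e^{-x}); clipping (assumed bound of 500) keeps np.exp from overflowing
    return 1.0 / (1.0 + np.exp(-np.clip(x, -500, 500)))

def softplus(x):
    # log(1 + e^x) written as logaddexp(0, x), which stays finite for large x
    return np.logaddexp(0.0, x)

def tanh(x):
    # NumPy's built-in tanh is already numerically stable
    return np.tanh(x)

z = np.array([-1000.0, -1.0, 0.0, 1.0, 1000.0])
# All entries are finite; the last one is exactly 1000.0
print(softplus(z))
```

With these definitions, `softplus(1000.0)` returns `1000.0`, whereas the naive formula returns `inf` with an overflow warning.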

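```python
# In[]
# Read and split the data
url = 'C:/Users/weixifei/Desktop/TensorFlow程序/data1.csv'  # local path; point it at your own copy
data = pd.read_csv(url, sep=',', header=None)
data = np.array(data)
data = shuffle(data)
X_data = data[:, :23]  # columns 0-22: features
Y = data[:, 23]        # column 23: class label
labels = np.asarray(pd.get_dummies(Y), dtype=np.int8)  # one-hot labels
num_train = 0.3  # fraction held out from training (passed as test_size)
X_train, X_, Y_train, Y_ = train_test_split(X_data, labels, test_size=num_train, random_state=20)
# Split the held-out 30% again: 90% test, 10% validation
X_test, X_vld, Y_test, Y_vld = train_test_split(X_, Y_, test_size=0.1, random_state=20)

# In[]
# Standardize: fit the scaler on the training set only, then reuse its statistics
stdsc = StandardScaler()
X_train = stdsc.fit_transform(X_train)
X_test = stdsc.transform(X_test)
X_vld = stdsc.transform(X_vld)
Y_true = np.argmax(Y_test, axis=1)  # one-hot -> class indices
```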
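Fitting the scaler separately on each split would standardize the test and validation sets with their own mean and standard deviation. The model would then see inputs scaled differently from the data it was trained on. The usual pattern is to compute the statistics once on the training set and reuse them everywhere. In scikit-learn that means `fit_transform` on the training set and `transform` on the other splits. Below is a plain-NumPy sketch; `fit_standardizer` and `apply_standardizer` are hypothetical helper names, and the random data is a stand-in for `data1.csv`.

```python
import numpy as np

def fit_standardizer(X_train):
    # Statistics come from the training split only (population std, as StandardScaler uses)
    mean = X_train.mean(axis=0)
    std = X_train.std(axis=0)
    std[std == 0] = 1.0  # leave constant columns unscaled instead of dividing by zero
    return mean, std

def apply_standardizer(X, mean, std):
    return (X - mean) / std

rng = np.random.default_rng(0)
X_train = rng.normal(5.0, 2.0, size=(70, 3))
X_test = rng.normal(5.0, 2.0, size=(30, 3))

mean, std = fit_standardizer(X_train)
X_train_s = apply_standardizer(X_train, mean, std)
X_test_s = apply_standardizer(X_test, mean, std)  # reuse the training statistics
```

After this, the training split has zero mean and unit variance per column. The test split is only approximately standardized, which is expected.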

# In[]

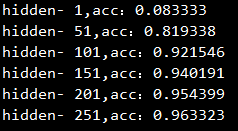

#不同隐藏层结果对比

result=[]

for j in range(1,300,50):

a = RELM_HiddenLayer(X_train,j)

a.classifisor_train(Y_train)

predict = a.classifisor_test(X_test)

acc=metrics.precision_score(predict,Y_true, average='macro')

# result.append(pre)

print('hidden- %d,acc:%f'%(j,acc))
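The split above also creates a validation set (`X_vld`, `Y_vld`), but the comparison loop never uses it and scores every hidden-layer size on `X_test` instead. A common alternative is to pick the size on the validation split and report the test score only once. The sketch below does this end to end in NumPy on synthetic 3-class Gaussian blobs, a stand-in because `data1.csv` is not included here. `elm_fit` and `elm_predict` are hypothetical helpers. They mirror the post's softplus activation and bias range, and place the regularization `len(X) / C` on the diagonal as an assumption.

```python
import numpy as np

def elm_fit(X, T, n_hidden, C=10.0, seed=0):
    """Hypothetical helper: regularized ELM, returns (W, b, beta)."""
    rng = np.random.default_rng(seed)
    W = rng.uniform(-1, 1, (X.shape[1], n_hidden))
    b = rng.uniform(-0.4, 0.4, (1, n_hidden))  # one bias per hidden node, broadcast over rows
    H = np.logaddexp(0.0, X @ W + b)           # softplus hidden layer
    lam = len(X) / C                           # assumed scaling, added on the diagonal
    beta = np.linalg.solve(H.T @ H + lam * np.eye(n_hidden), H.T @ T)
    return W, b, beta

def elm_predict(X, W, b, beta):
    H = np.logaddexp(0.0, X @ W + b)
    return np.argmax(H @ beta, axis=1)         # index of the largest output = class

# Synthetic 3-class data (Gaussian blobs)
rng = np.random.default_rng(42)
centers = np.array([[0.0, 0.0], [4.0, 0.0], [0.0, 4.0]])
y = np.repeat(np.arange(3), 100)
X = centers[y] + rng.normal(0, 1.0, (300, 2))
perm = rng.permutation(300)
X, y = X[perm], y[perm]
T = np.eye(3)[y]                               # one-hot targets

X_tr, T_tr = X[:200], T[:200]
X_val, y_val = X[200:250], y[200:250]
X_te, y_te = X[250:], y[250:]

# Choose the hidden-layer size on the validation split ...
scores = {}
for n_hidden in (5, 20, 50, 100):
    W, b, beta = elm_fit(X_tr, T_tr, n_hidden)
    scores[n_hidden] = np.mean(elm_predict(X_val, W, b, beta) == y_val)
best = max(scores, key=scores.get)

# ... then evaluate once on the untouched test split
W, b, beta = elm_fit(X_tr, T_tr, best)
test_acc = np.mean(elm_predict(X_te, W, b, beta) == y_te)
print(scores, best, test_acc)
```

With the real data, the same idea amounts to looping over hidden sizes with `X_vld`/`Y_vld` and scoring the chosen model on `X_test` at the end.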

2. Regression

Code

```python
"""
Created on Tue Apr 21 22:37:14 2020

@author: 小小飞在路上
"""
import numpy as np
import matplotlib.pyplot as plt

plt.rcParams['figure.figsize'] = (8, 5)


class RELM_HiddenLayer:
    """
    Regularized extreme learning machine.

    :param x: training-set features X used to initialize the machine
    :param num: number of hidden-layer nodes
    :param C: reciprocal of the regularization coefficient
    """

    def __init__(self, x, num, C=10):
        columns = x.shape[1]
        rnd = np.random.RandomState()
        # Input weights w
        self.w = rnd.uniform(-1, 1, (columns, num))
        # Bias b: one value per hidden node, broadcast over all rows
        self.b = rnd.uniform(-0.4, 0.4, (1, num))
        # Hidden-layer output matrix H0 (sigmoid activation)
        self.H0 = self.sigmoid(np.dot(x, self.w) + self.b)
        self.C = C
        # P = (H0^T H0 + (n/C) I)^-1, with the regularization on the diagonal
        self.P = np.linalg.inv(self.H0.T @ self.H0 + len(x) / self.C * np.eye(num))

    @staticmethod
    def sigmoid(x):
        """
        Sigmoid activation.

        :param x: pre-activation input
        :return: activation value
        """
        return 1.0 / (1 + np.exp(-x))

    # Regression: training
    def regressor_train(self, T):
        """
        After initializing the machine, pass in the matching targets T.

        :param T: targets for the features X, shape (n_samples, n_outputs)
        :return: hidden-to-output weights beta
        """
        all_m = np.dot(self.P, self.H0.T)
        self.beta = np.dot(all_m, T)
        return self.beta

    # Regression: prediction
    def regressor_test(self, test_x):
        """
        Predict targets for new features.

        :param test_x: features
        :return: predicted values
        """
        h = self.sigmoid(np.dot(test_x, self.w) + self.b)
        return np.dot(h, self.beta)
```
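To connect `regressor_train` with the closed-form solution, the sketch below rebuilds a sigmoid hidden layer for the same sin + cos data. It checks that β satisfies the regularized normal equations $(H^\top H + \lambda I)\beta = H^\top T$, i.e. that the gradient $H^\top(H\beta - T) + \lambda\beta$ is numerically zero. It also compares the explicit inverse with `np.linalg.solve`, which solves the same system without forming the inverse and is generally the more stable choice. The seed, the hidden size of 15, and $\lambda$ = `len(x) / C` with `C = 10` are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
x = np.linspace(0, 20, 200).reshape(-1, 1)
y = np.sin(x) + np.cos(0.5 * x) + rng.normal(0, 0.08, x.shape)

n_hidden, C = 15, 10.0
W = rng.uniform(-1, 1, (1, n_hidden))
b = rng.uniform(-0.4, 0.4, (1, n_hidden))
H = 1.0 / (1.0 + np.exp(-(x @ W + b)))  # sigmoid hidden layer, shape (200, 15)
lam = len(x) / C
A = H.T @ H + lam * np.eye(n_hidden)    # regularized Gram matrix

# Explicit inverse vs. a direct linear solve of the same system
beta_inv = np.linalg.inv(A) @ H.T @ y
beta_solve = np.linalg.solve(A, H.T @ y)

# At the minimizer, the gradient H^T (H beta - y) + lam * beta vanishes
grad = H.T @ (H @ beta_solve - y) + lam * beta_solve
print(np.abs(beta_inv - beta_solve).max(), np.abs(grad).max())
```

Both printed values should be tiny, at the level of floating-point round-off.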

```python
# Generate the dataset
x = np.linspace(0, 20, 200)
noise = np.random.normal(0, 0.08, 200)
y = np.sin(x) + np.cos(0.5 * x) + noise
# Reshape into 2-D column vectors (n_samples, 1)
x = x.reshape(-1, 1)
y = y.reshape(-1, 1)

# Scatter plot of the original data
plt.plot(x, y, 'or', label='original')
# A different line color for each hidden-layer size
color = ['g', 'b', 'y', 'c', 'm']
x_test = np.linspace(0, 20, 200).reshape(-1, 1)
# Compare the fit for different hidden-layer sizes
for j, i in enumerate(range(5, 30, 5)):
    my_ELM = RELM_HiddenLayer(x, i)
    my_ELM.regressor_train(y)
    y_test = my_ELM.regressor_test(x_test)
    plt.plot(x_test, y_test, color[j], label='hidden_%d' % i)

plt.title('ELM_regress')
plt.xlabel('x')
plt.ylabel('y')
# Add the legend (labels come from the label= arguments above)
plt.legend(loc='upper right')
plt.show()
```
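The plot compares the fits by eye. The sketch below attaches a number to each hidden-layer size instead. It refits the same kind of sigmoid ELM and reports RMSE against the noise-free curve sin(x) + cos(0.5x) on a 1000-point grid. The evaluation grid has more rows than the 200 training points, so the bias is kept as a single (1, n_hidden) row that broadcasts. A per-sample bias matrix sized to the training set would not broadcast against 1000 rows. `fit_predict`, the seeds, and scoring against the noise-free target are assumptions for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fit_predict(x_train, y_train, x_eval, n_hidden, C=10.0, seed=0):
    """Hypothetical helper: fit a sigmoid ELM on (x_train, y_train), predict on x_eval."""
    rng = np.random.default_rng(seed)
    W = rng.uniform(-1, 1, (x_train.shape[1], n_hidden))
    b = rng.uniform(-0.4, 0.4, (1, n_hidden))  # broadcast bias: x_eval may have any length
    H = sigmoid(x_train @ W + b)
    lam = len(x_train) / C
    beta = np.linalg.solve(H.T @ H + lam * np.eye(n_hidden), H.T @ y_train)
    return sigmoid(x_eval @ W + b) @ beta

rng = np.random.default_rng(1)
x = np.linspace(0, 20, 200).reshape(-1, 1)
y = np.sin(x) + np.cos(0.5 * x) + rng.normal(0, 0.08, x.shape)

x_dense = np.linspace(0, 20, 1000).reshape(-1, 1)  # 5x more points than the training grid
y_clean = np.sin(x_dense) + np.cos(0.5 * x_dense)  # noise-free target used for scoring

rmses = {}
for n_hidden in range(5, 30, 5):
    y_hat = fit_predict(x, y, x_dense, n_hidden)
    rmses[n_hidden] = np.sqrt(np.mean((y_hat - y_clean) ** 2))
    print(f"hidden_{n_hidden}: RMSE = {rmses[n_hidden]:.4f}")
```

Because the weights are random, the ranking of hidden sizes can change with the seed. Averaging over several seeds gives a steadier comparison.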