Andrew Ng Machine Learning ex1: Complete MATLAB Implementation

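Preface: Linear regression is one of the easier machine learning methods to understand and implement. I recently reviewed the linear regression material I had studied earlier and went through Andrew Ng's assignment again. Many points were unclear to me the first time I did it, so I am implementing it once more here.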

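In short, solving a linear regression problem with gradient descent takes two steps: (1) compute the cost function; (2) minimize the cost function to obtain the parameters θ.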

The single-variable and multivariate linear regression problems are implemented in turn below.

Single-variable problem

computeCost.m

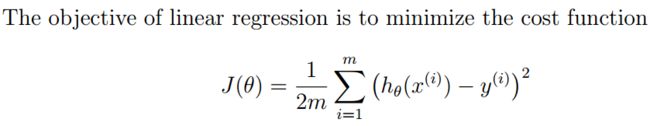

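This function computes the cost function. For m training examples and the hypothesis h_θ(x) = θᵀx, the cost is J(θ) = 1/(2m) · Σ (h_θ(x_i) − y_i)², summed over all m examples.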
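As a quick sanity check of the formula, the sketch below evaluates the cost on a tiny made-up dataset in two ways: with an explicit loop over the examples, and with a single vectorized expression. The data values and the names J_loop and J_vec are illustrative assumptions, not part of the assignment.

```matlab
% Tiny made-up dataset (illustrative values only, not the ex1 data)
X = [1 1; 1 2; 1 3];   % first column of ones is the intercept term
y = [1; 2; 3];
theta = [0; 0];
m = length(y);         % number of training examples

% Loop form: accumulate the squared error of each example
J_loop = 0;
for i = 1:m
    h = X(i, :) * theta;              % prediction for example i
    J_loop = J_loop + (h - y(i))^2;
end
J_loop = J_loop / (2 * m);

% Vectorized form: the same quantity in one expression
J_vec = sum((X * theta - y) .^ 2) / (2 * m);
```

Both variables hold the same value (14/6 for this toy data), which is why the one-line vectorized form can replace the loop.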

The corresponding MATLAB implementation is quite simple. It is written in vectorized form here.

function J = computeCost(X, y, theta)

%COMPUTECOST Compute cost for linear regression

% J = COMPUTECOST(X, y, theta) computes the cost of using theta as the

% parameter for linear regression to fit the data points in X and y

% Initialize some useful values

m = length(y); % number of training examples

% You need to return the following variables correctly

% ====================== YOUR CODE HERE ======================

% Instructions: Compute the cost of a particular choice of theta

% You should set J to the cost.

J = sum((X * theta - y) .^ 2 ) / (2 * m);

% =========================================================================

end

gradientDescent.m

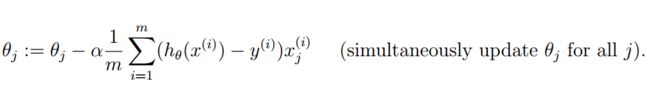

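This function performs gradient descent. The only thing to watch when running gradient descent is that all components of θ must be updated simultaneously. After a certain number of iterations, the target parameter θ is obtained.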
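For reference, the batch gradient descent update can be written as follows. This is the standard statement of the rule, with α the learning rate and m the number of training examples; the notation is assumed here, since the post itself does not spell the formula out in text.

```latex
\theta_j := \theta_j - \alpha \, \frac{1}{m} \sum_{i=1}^{m}
  \left( h_\theta(x^{(i)}) - y^{(i)} \right) x_j^{(i)}
\qquad \text{(for all } j \text{ simultaneously)}
```

"Simultaneously" means that every θ_j on the right-hand side is the value from before the current step.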

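Again, the code is vectorized. A temp variable temporarily holds the newly computed θ values, so that θ is overwritten only after every component has been computed. This gives the simultaneous update.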
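The per-parameter loop with a temporary variable can also be collapsed into a single matrix expression. The sketch below compares the two on made-up data. The matrix form is an alternative shown for illustration, not the code used in this post, and the names theta_loop and theta_mat are assumptions.

```matlab
% Made-up data and settings (illustrative only)
X = [1 1; 1 2; 1 3];
y = [1; 2; 3];
theta = [0; 0];
alpha = 0.1;
m = length(y);

% Per-parameter update; temp keeps the old theta intact during the loop
temp = theta;
for j = 1:size(X, 2)
    temp(j) = theta(j) - alpha / m * sum((X * theta - y) .* X(:, j));
end
theta_loop = temp;

% Matrix form: X' * (X * theta - y) stacks all the per-parameter sums
theta_mat = theta - alpha / m * (X' * (X * theta - y));
```

Because the right-hand side of the matrix form only reads the old theta, it is a simultaneous update by construction and gives the same result as the loop.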

function [theta, J_history] = gradientDescent(X, y, theta, alpha, num_iters)

%GRADIENTDESCENT Performs gradient descent to learn theta

% theta = GRADIENTDESCENT(X, y, theta, alpha, num_iters) updates theta by

% taking num_iters gradient steps with learning rate alpha

% Initialize some useful values

m = length(y); % number of training examples

J_history = zeros(num_iters, 1);

temp = theta;

for iter = 1:num_iters

% ====================== YOUR CODE HERE ======================

% Instructions: Perform a single gradient step on the parameter vector

% theta.

%

% Hint: While debugging, it can be useful to print out the values

% of the cost function (computeCost) and gradient here.

%

for i = 1:size(X,2),

temp(i,1) = theta(i,1) - alpha / m * sum((X * theta - y) .* X(:,i));

end;

theta = temp;

% ============================================================

% Save the cost J in every iteration

J_history(iter) = computeCost(X, y, theta);

end

end

Multivariate problem

featureNormalize.m

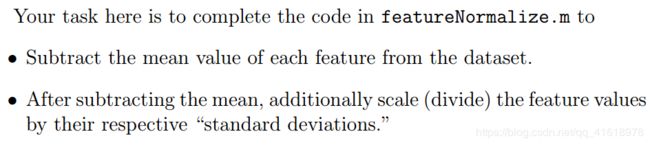

This function scales (normalizes) the features. Two normalization methods are common: (1) (val - min) / (max - min); (2) (val - mu) / sigma, where mu is the mean and sigma is the standard deviation.
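A minimal sketch of both methods on a small made-up matrix, applied column by column. The data and the names X_minmax and X_z are illustrative assumptions; bsxfun is used so the snippet also runs on older MATLAB versions.

```matlab
% Made-up feature matrix: rows are examples, columns are features
X = [1 10; 2 20; 3 30];

% Method 1: min-max scaling, maps each column to the range [0, 1]
col_min = min(X);
col_max = max(X);
X_minmax = bsxfun(@rdivide, bsxfun(@minus, X, col_min), col_max - col_min);

% Method 2: mean normalization, each column gets mean 0 and std 1
mu = mean(X);
sigma = std(X);
X_z = bsxfun(@rdivide, bsxfun(@minus, X, mu), sigma);
```

The second method is the one the assignment asks for. Note that it divides by the standard deviation itself, not by its square.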

The scaling code follows the hints in Andrew Ng's assignment, as shown below.

function [X_norm, mu, sigma] = featureNormalize(X)

%FEATURENORMALIZE Normalizes the features in X

% FEATURENORMALIZE(X) returns a normalized version of X where

% the mean value of each feature is 0 and the standard deviation

% is 1. This is often a good preprocessing step to do when

% working with learning algorithms.

% You need to set these values correctly

X_norm = X;

mu = zeros(1, size(X, 2));

sigma = zeros(1, size(X, 2));

% ====================== YOUR CODE HERE ======================

% Instructions: First, for each feature dimension, compute the mean

% of the feature and subtract it from the dataset,

% storing the mean value in mu. Next, compute the

% standard deviation of each feature and divide

% each feature by its standard deviation, storing

% the standard deviation in sigma.

%

% Note that X is a matrix where each column is a

% feature and each row is an example. You need

% to perform the normalization separately for

% each feature.

%

% Hint: You might find the 'mean' and 'std' functions useful.

%

% Compute the mean and standard deviation of each feature

for i = 1 : size(X,2);

mu(:,i) = mean(X(:,i));

sigma(:,i) = std(X(:,i));

end

X_norm = bsxfun(@rdivide,bsxfun(@minus,X,mu),sigma);

% ============================================================

end

computeCostMulti.m

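This function computes the cost function for multiple variables. Because the cost function used in the single-variable case was already vectorized, the same expression applies unchanged to multivariate linear regression.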
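The reason the same code works for any number of features is visible in matrix notation. Assuming X is the m × (n+1) design matrix with a leading column of ones, the cost can be written as:

```latex
J(\theta) = \frac{1}{2m} \sum_{i=1}^{m} \left( \theta^{T} x^{(i)} - y^{(i)} \right)^{2}
          = \frac{1}{2m} \, (X\theta - y)^{T} (X\theta - y)
```

Nothing in this expression depends on n, so adding features only changes the size of X and θ.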

function J = computeCostMulti(X, y, theta)

%COMPUTECOSTMULTI Compute cost for linear regression with multiple variables

% J = COMPUTECOSTMULTI(X, y, theta) computes the cost of using theta as the

% parameter for linear regression to fit the data points in X and y

% Initialize some useful values

m = length(y); % number of training examples

% You need to return the following variables correctly

J = 0;

% ====================== YOUR CODE HERE ======================

% Instructions: Compute the cost of a particular choice of theta

% You should set J to the cost.

J = sum((X * theta - y) .^ 2) / (2 * m);

% =========================================================================

end

gradientDescentMulti.m

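The principle is the same as in the single-variable case, and the computation is again vectorized over the training examples.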

function [theta, J_history] = gradientDescentMulti(X, y, theta, alpha, num_iters)

%GRADIENTDESCENTMULTI Performs gradient descent to learn theta

% theta = GRADIENTDESCENTMULTI(x, y, theta, alpha, num_iters) updates theta by

% taking num_iters gradient steps with learning rate alpha

% Initialize some useful values

m = length(y); % number of training examples

J_history = zeros(num_iters, 1);

thetaTemp = theta;

for iter = 1:num_iters

% ====================== YOUR CODE HERE ======================

% Instructions: Perform a single gradient step on the parameter vector

% theta.

%

% Hint: While debugging, it can be useful to print out the values

% of the cost function (computeCostMulti) and gradient here.

%

for i = 1 : size(theta,1);

thetaTemp(i,:) = theta(i,:) - (alpha / m) * sum((X * theta - y) .* X(:,i));

end

% ============================================================

theta = thetaTemp;

% Save the cost J in every iteration

J_history(iter) = computeCostMulti(X, y, theta);

end

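end

Using the computed θ, estimate the price of a 1650 sq-ft, 3 br house. The query features are scaled with the same mu and sigma that were computed from the training set.

price = [1 ([1650 3] - mu) ./ sigma] * theta; % normalize the features, then prepend the intercept term

Output:

Predicted price of a 1650 sq-ft, 3 br house (using gradient descent):

$293223.790542

normalEqn.m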

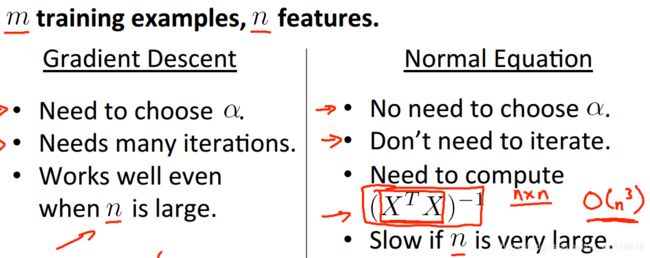

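The last function obtains θ with the normal equation. Compared with gradient descent, solving the regression problem with the normal equation is somewhat simpler. However, the normal equation becomes slow when the number of features is large, and when there are more features than training examples XᵀX is not invertible, so applying the normal equation directly fails. In that case the equation needs some additional treatment.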
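The post does not say which treatment is meant. One common option, shown here as an assumption, is to replace inv with the pseudo-inverse pinv, or to let MATLAB solve the least-squares problem with the backslash operator. The sketch below uses made-up data, including a case with more features than examples.

```matlab
% Well-posed case: 3 examples, 2 parameters (made-up data, exact fit y = x)
X = [1 1; 1 2; 1 3];
y = [1; 2; 3];
theta_inv  = inv(X' * X) * X' * y;    % plain normal equation
theta_pinv = pinv(X' * X) * X' * y;   % pseudo-inverse variant
theta_bs   = X \ y;                   % least-squares solve via backslash

% More features than examples: X_wide' * X_wide is singular, so inv
% is unreliable here, while pinv still returns a least-squares solution
X_wide = [1 2 3; 1 3 5];
y_wide = [1; 2];
theta_wide = pinv(X_wide' * X_wide) * X_wide' * y_wide;
residual = X_wide * theta_wide - y_wide;   % close to zero for this data
```

In the well-posed case all three variants agree. In the second case the solution is not unique, and pinv picks the one with the smallest norm.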

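The two methods compare as follows: gradient descent requires choosing a learning rate α and running many iterations, but it still works well when the number of features is large; the normal equation needs neither, but it has to invert XᵀX, which becomes expensive as the number of features grows.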

The MATLAB implementation is as follows.

function [theta] = normalEqn(X, y)

%NORMALEQN Computes the closed-form solution to linear regression

% NORMALEQN(X,y) computes the closed-form solution to linear

% regression using the normal equations.

theta = zeros(size(X, 2), 1);

% ====================== YOUR CODE HERE ======================

% Instructions: Complete the code to compute the closed form solution

% to linear regression and put the result in theta.

%

% ---------------------- Sample Solution ----------------------

theta = inv(X' * X) * X' * y;

% -------------------------------------------------------------

% ============================================================

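end

Using the computed θ, estimate the price of a 1650 sq-ft, 3 br house. The raw feature values are used here, without normalization.

price = [1 1650 3] * theta; % intercept term followed by the raw features

Output:

Predicted price of a 1650 sq-ft, 3 br house (using normal equations):

$293081.464335

Comparing the outputs of the normal equation and gradient descent, the two predictions should be approximately equal.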
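To put a number on "approximately equal", the relative difference between the two printed predictions can be computed directly. The variable names below are illustrative; the two prices are the outputs quoted above.

```matlab
% Predictions quoted above
price_gd = 293223.790542;   % gradient descent
price_ne = 293081.464335;   % normal equation

% Relative difference with respect to the normal-equation result
rel_diff = abs(price_gd - price_ne) / price_ne;
fprintf('Relative difference: %.4f%%\n', rel_diff * 100);
```

The gap is well under 0.1%, which is consistent with gradient descent having nearly, but not exactly, converged to the closed-form solution.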