lenet5实现手写数字识别---python

lenet5实现手写数字识别

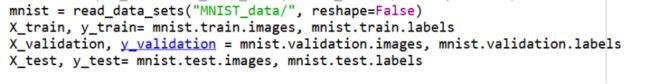

一. 导入数据集

下载数据集

查看第一张图片的尺寸

输出数据集规模

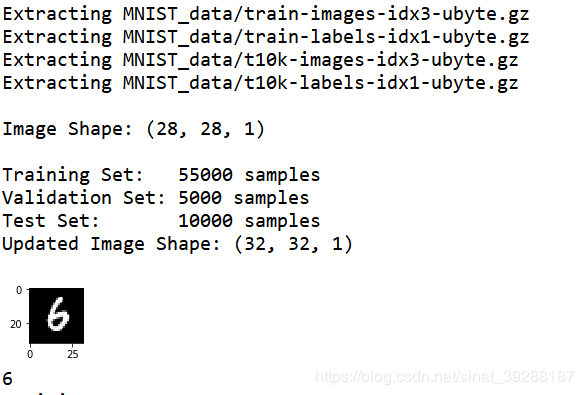

可知图片尺寸为28*28像素,通道数为1。训练集包含55000张图片,验证集包含5000张图片,测试集包含10000张图片

将训练集进行填充

# 因为mnist数据集的图片是28*28*1的格式,而lenet只接受32*32的格式

# 所以只能在这个基础上填充

随机的看一张图并处理后输出

运行结果如下:

二.lenet5网络层次

设置参数,mu为均值,sigma为标准差

卷积层:对原图像使用5*5*6的卷积核进行卷积处理,抛弃用过滤器在输入的矩阵中按步长移动时最后的不足部分

激活:进入非线性特征,将所有负激活变为0

池化层:对卷积生成的图像进行池化处理,池化矩阵尺寸为2*2

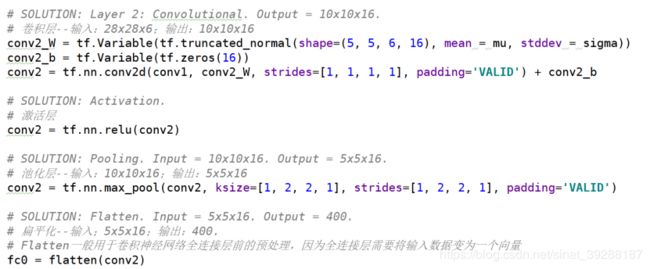

同上再次进行卷积、激活、池化

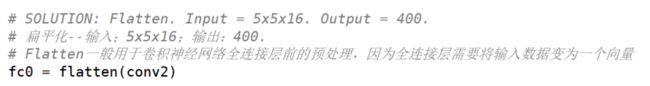

扁平化:因为全连接层需要将输入数据变为一个向量,所以对数据进行扁平化处理

全连接层:类似卷积层处理

第一次全连接:

第二次全连接:

第三次全连接:

设置批处理参数,独热编码,学习率等相关参数

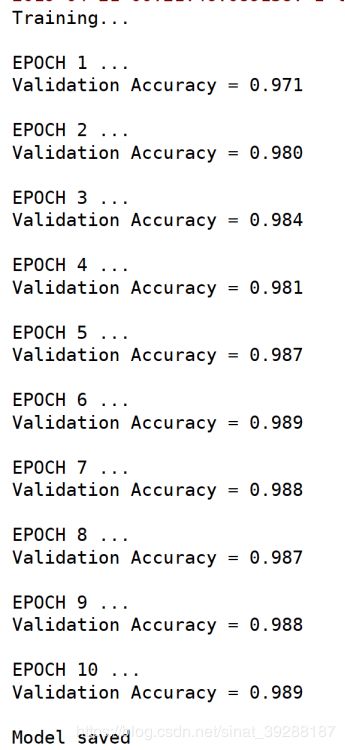

程序执行结果:

程序运行会出现warning。为了方便观察结果。

在程序中加入即可:

import warnings

warnings.filterwarnings("ignore")# -*- coding: utf-8 -*-

"""

Created on Wed May 15 21:01:47 2019

@author: cheng

"""

import random

import numpy as np

import tensorflow as tf

import matplotlib.pyplot as plt

from sklearn.utils import shuffle

from tensorflow.contrib.layers import flatten

#from tensorflow.examples.tutorials.mnist import input_data

from tensorflow.contrib.learn.python.learn.datasets.mnist import read_data_sets

def load_data():

'''

导入数据

:return:训练集、验证集、测试集

'''

# mnist = input_data.read_data_sets("MNIST_data/", reshape=False)

mnist = read_data_sets("MNIST_data/", reshape=False)

X_train, y_train= mnist.train.images, mnist.train.labels

X_validation, y_validation = mnist.validation.images, mnist.validation.labels

X_test, y_test= mnist.test.images, mnist.test.labels

assert(len(X_train) == len(y_train))

assert(len(X_validation) == len(y_validation))

assert(len(X_test) == len(y_test))

print()

print("Image Shape: {}".format(X_train[0].shape))

print()

print("Training Set: {} samples".format(len(X_train)))

print("Validation Set: {} samples".format(len(X_validation)))

print("Test Set: {} samples".format(len(X_test)))

# 将训练集进行填充

# 因为mnist数据集的图片是28*28*1的格式,而lenet只接受32*32的格式

# 所以只能在这个基础上填充

X_train = np.pad(X_train, ((0, 0), (2, 2), (2, 2), (0, 0)), 'constant')

X_validation = np.pad(X_validation, ((0, 0), (2, 2), (2, 2), (0, 0)), 'constant')

X_test = np.pad(X_test, ((0, 0), (2, 2), (2, 2), (0, 0)), 'constant')

print("Updated Image Shape: {}".format(X_train[0].shape))

# 随机的看一张图

index = random.randint(0, len(X_train))

image = X_train[index].squeeze()# 从数组的形状中删除单维条目,即把shape中为1的维度去掉

plt.figure(figsize=(1,1))

plt.imshow(image, cmap="gray")

plt.show()

print("number:",y_train[index])

# 打乱数据集的顺序

X_train, y_train = shuffle(X_train, y_train)

return X_train, y_train, X_validation, y_validation,X_test, y_test

#SOLUTION: Implement LeNet-5

def LeNet(x):

# Arguments used for tf.truncated_normal, randomly defines variables for the weights and biases for each layer

# 用于tf.truncated_normal的参数, 随机定义每个图层的权重和偏差的变量

mu=0

sigma=0.1

# SOLUTION: Layer 1: Convolutional.卷积 Input = 32x32x1. Output = 28x28x6.

conv1_W=tf.Variable(tf.truncated_normal(shape=(5, 5, 1, 6), mean = mu, stddev = sigma))

#tf.truncated_normal从截断的正态分布中输出随机值。

conv1_b=tf.Variable(tf.zeros(6))

#tf.Variable(initializer,name)initializer是初始化参数

conv1=tf.nn.conv2d(x, conv1_W, strides=[1, 1, 1, 1], padding='VALID') + conv1_b

#卷积函数

# SOLUTION: Activation.

conv1 = tf.nn.relu(conv1)

#计算激活函数 relu,即 max(features, 0)。即将矩阵中每行的非最大值置0。

# SOLUTION: Pooling. Input = 28x28x6. Output = 14x14x6.

conv1=tf.nn.max_pool(conv1, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='VALID')

# SOLUTION: Layer 2: Convolutional. Output = 10x10x16.

conv2_W=tf.Variable(tf.truncated_normal(shape=(5, 5, 6, 16), mean = mu, stddev = sigma))

conv2_b=tf.Variable(tf.zeros(16))

conv2=tf.nn.conv2d(conv1, conv2_W, strides=[1, 1, 1, 1], padding='VALID') + conv2_b

# SOLUTION: Activation.

conv2=tf.nn.relu(conv2)

# SOLUTION: Pooling. Input = 10x10x16. Output = 5x5x16.

conv2=tf.nn.max_pool(conv2, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='VALID')

# SOLUTION: Flatten. Input = 5x5x16. Output = 400.

fc0=flatten(conv2)#平化降维

# SOLUTION: Layer 3: Fully Connected全连接层. Input = 400. Output = 120.

fc1_W = tf.Variable(tf.truncated_normal(shape=(400, 120), mean = mu, stddev = sigma))

fc1_b = tf.Variable(tf.zeros(120))

fc1 = tf.matmul(fc0, fc1_W) + fc1_b

#matmul将矩阵a乘以矩阵b(不是对应元素相乘),生成a * b。

# SOLUTION: Activation.

fc1 = tf.nn.relu(fc1)

# SOLUTION: Layer 4: Fully Connected. Input = 120. Output = 84.

fc2_W = tf.Variable(tf.truncated_normal(shape=(120, 84), mean = mu, stddev = sigma))

fc2_b = tf.Variable(tf.zeros(84))

fc2 = tf.matmul(fc1, fc2_W) + fc2_b

# SOLUTION: Activation.

fc2 = tf.nn.relu(fc2)

# SOLUTION: Layer 5: Fully Connected. Input = 84. Output = 10.

fc3_W = tf.Variable(tf.truncated_normal(shape=(84, 10), mean = mu, stddev = sigma))

fc3_b = tf.Variable(tf.zeros(10))

logits = tf.matmul(fc2, fc3_W) + fc3_b

return logits

EPOCHS=10

BATCH_SIZE=128

X_train, y_train, X_validation, y_validation,X_test, y_test=load_data()

x=tf.placeholder(tf.float32, (None, 32, 32, 1))

#y=tf.placeholder(tf.int32, (None))

#one_hot_y=tf.one_hot(y, 10)

y=tf.placeholder(tf.int32, (None))#在模型中的占位

one_hot_y=tf.one_hot(y, 10)#one_hot(indices, depth)

#Training Pipeline

rate=0.001

logits=LeNet(x)

cross_entropy=tf.nn.softmax_cross_entropy_with_logits(labels=one_hot_y, logits=logits)

#print("cross_entropy:",cross_entropy)

loss_operation=tf.reduce_mean(cross_entropy)

optimizer=tf.train.AdamOptimizer(learning_rate = rate)#寻找全局最优点的优化算法,引入了二次方梯度校正。

training_operation=optimizer.minimize(loss_operation)

#Model Evaluation模型评估

correct_prediction = tf.equal(tf.argmax(logits, 1), tf.argmax(one_hot_y, 1))#argmax某一维上的其数据最大值所在的索引值

accuracy_operation = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

saver = tf.train.Saver()

def evaluate(X_data, y_data):

num_examples = len(X_data)

total_accuracy = 0

sess = tf.get_default_session()#返回当前线程的默认会话

for offset in range(0, num_examples, BATCH_SIZE):

batch_x, batch_y = X_data[offset:offset+BATCH_SIZE], y_data[offset:offset+BATCH_SIZE]

accuracy = sess.run(accuracy_operation, feed_dict={x: batch_x, y: batch_y})

total_accuracy+= (accuracy * len(batch_x))

return total_accuracy / num_examples

with tf.Session() as sess:

sess.run(tf.global_variables_initializer())#global_variables_initializer返回一个用来初始化 计算图中 所有global variable的 op。run了 所有global Variable 的 assign op

num_examples = len(X_train)

print("Training...")

print()

for i in range(EPOCHS):

X_train, y_train=shuffle(X_train, y_train)#随机排序

for offset in range(0, num_examples, BATCH_SIZE):

end=offset+BATCH_SIZE

batch_x, batch_y=X_train[offset:end], y_train[offset:end]

sess.run(training_operation,feed_dict={x: batch_x, y: batch_y})

# loss, acc = sess.run(training_operation,feed_dict={x: batch_x, y: batch_y})

# print("Step " + str(offset) + ", Minibatch Loss= " + \

# "{:.4f}".format(loss) + ", Training Accuracy= " + \

# "{:.3f}".format(acc))

validation_accuracy=evaluate(X_validation, y_validation)

print("EPOCH {} ...".format(i+1))

print("Validation Accuracy = {:.3f}".format(validation_accuracy))

print()

saver.save(sess, './lenet')

print("Model saved")

#Evaluate the Model

with tf.Session() as sess:

saver.restore(sess, tf.train.latest_checkpoint('.'))#加载模型训练好的的网络和参数来测试,或进一步训练

test_accuracy = evaluate(X_test, y_test)

print("Test Accuracy = {:.3f}".format(test_accuracy))