Flume Configuration for Log Collection

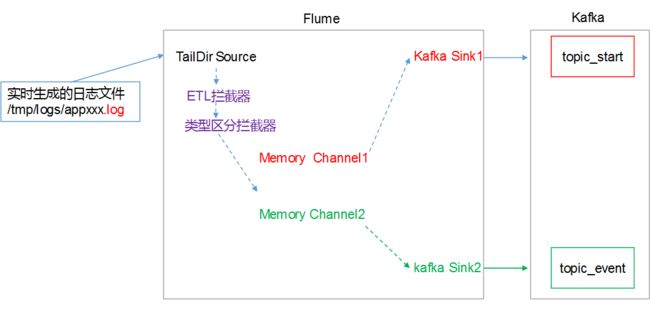

1) Flume configuration analysis

Flume reads the data directly from the log files, which are named in the format app-yyyy-mm-dd.log.

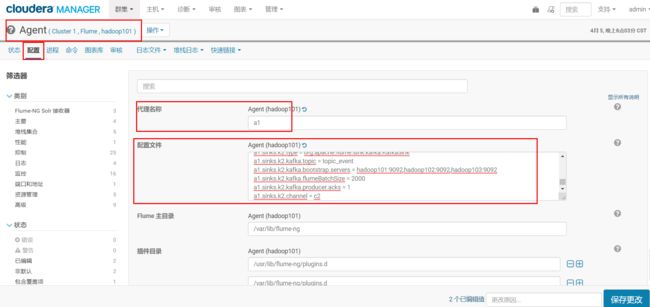

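2) The Flume configuration steps are as follows:

On the CM (Cloudera Manager) admin page, click Flume.

On the Instances page, select the Agent on hadoop101.

3) In the Flume configuration for hadoop101 on the CM admin page, find the Agent Name setting and change it to a1.

4) Enter the following content in the configuration file field (flume-kafka):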

a1.sources=r1

a1.channels=c1 c2

a1.sinks=k1 k2

# configure source

a1.sources.r1.type = TAILDIR

a1.sources.r1.positionFile = /opt/module/flume/log_position.json

a1.sources.r1.filegroups = f1

a1.sources.r1.filegroups.f1 = /tmp/logs/app.+

a1.sources.r1.fileHeader = true

a1.sources.r1.channels = c1 c2

#interceptor

a1.sources.r1.interceptors = i1 i2

a1.sources.r1.interceptors.i1.type = com.atguigu.flume.interceptor.LogETLInterceptor$Builder

a1.sources.r1.interceptors.i2.type = com.atguigu.flume.interceptor.LogTypeInterceptor$Builder

# selector

a1.sources.r1.selector.type = multiplexing

a1.sources.r1.selector.header = topic

a1.sources.r1.selector.mapping.topic_start = c1

a1.sources.r1.selector.mapping.topic_event = c2

# configure channel

a1.channels.c1.type = memory

a1.channels.c1.capacity=10000

a1.channels.c1.byteCapacityBufferPercentage=20

a1.channels.c2.type = memory

a1.channels.c2.capacity=10000

a1.channels.c2.byteCapacityBufferPercentage=20

# configure sink

# start-sink

a1.sinks.k1.type = org.apache.flume.sink.kafka.KafkaSink

a1.sinks.k1.kafka.topic = topic_start

a1.sinks.k1.kafka.bootstrap.servers = hadoop101:9092,hadoop102:9092,hadoop103:9092

a1.sinks.k1.kafka.flumeBatchSize = 2000

a1.sinks.k1.kafka.producer.acks = 1

a1.sinks.k1.channel = c1

# event-sink

a1.sinks.k2.type = org.apache.flume.sink.kafka.KafkaSink

a1.sinks.k2.kafka.topic = topic_event

a1.sinks.k2.kafka.bootstrap.servers = hadoop101:9092,hadoop102:9092,hadoop103:9092

a1.sinks.k2.kafka.flumeBatchSize = 2000

a1.sinks.k2.kafka.producer.acks = 1

a1.sinks.k2.channel = c2

Note: com.atguigu.flume.interceptor.LogETLInterceptor and com.atguigu.flume.interceptor.LogTypeInterceptor are the fully qualified class names of the custom interceptors. Change them to match your own custom interceptors.
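The filegroup value /tmp/logs/app.+ is a regular expression applied to the file names under /tmp/logs. As a rough pre-check of which files the source would pick up, the pattern can be tried against a file name in the shell. This is a minimal sketch: matches_filegroup is a hypothetical helper, and grep uses POSIX extended regular expressions rather than Java's, although the two should agree for a pattern this simple.

```shell
#!/bin/bash
# Hypothetical helper: succeed when the file name fully matches the
# file-name part of the filegroup pattern (app.+ in the configuration).
matches_filegroup() {
  name="$(basename "$1")"
  printf '%s\n' "$name" | grep -Eq '^app.+$'
}
```

For example, `matches_filegroup /tmp/logs/app-2020-04-05.log && echo tailed` prints `tailed`, while a file named `other.log` is not matched.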

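5) Change the read/write permissions of /opt/module/flume/log_position.json

Note: the parent directory of the JSON file must be created in advance and given the appropriate permissions.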

[root@hadoop101 ~]# mkdir -p /opt/module/flume

[root@hadoop101 ~]# cd /opt/module/flume/

[root@hadoop101 flume]# touch log_position.json

[root@hadoop101 flume]# ll

total 0

-rw-r--r-- 1 root root 0 Apr 5 20:05 log_position.json

[root@hadoop101 flume]# chmod 777 log_position.json

[root@hadoop101 flume]# ll

total 0

-rwxrwxrwx 1 root root 0 Apr 5 20:05 log_position.json

[root@hadoop101 flume]# xsync /opt/module/flume/

fname=flume

pdir=/opt/module

------------------- hadoop102 --------------

sending incremental file list

flume/

flume/log_position.json

sent 108 bytes received 35 bytes 286.00 bytes/sec

total size is 0 speedup is 0.00

------------------- hadoop103 --------------

sending incremental file list

flume/

flume/log_position.json

sent 108 bytes received 35 bytes 286.00 bytes/sec

total size is 0 speedup is 0.00
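The manual steps above (create the directory, create the file, open up its permissions) can be folded into one repeatable helper. This is a minimal sketch, not part of the original procedure: prepare_position_file is a hypothetical function name, and the 777 mode simply mirrors the chmod used above.

```shell
#!/bin/bash
# Hypothetical helper: create the parent directory and the position file if
# they are missing, then set the same permissions as the manual steps.
prepare_position_file() {
  position_file="$1"
  mkdir -p "$(dirname "$position_file")" || return 1
  touch "$position_file" || return 1
  chmod 777 "$position_file"
}
```

It would be called as `prepare_position_file /opt/module/flume/log_position.json` on each host that runs an Agent; running it twice is harmless.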

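Flume Interceptors

This project defines two custom interceptors: an ETL interceptor and a log-type interceptor.

The ETL interceptor filters out log records whose timestamp is invalid or whose JSON data is incomplete.

The log-type interceptor separates start logs from event logs so that they can be sent to different Kafka topics.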
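The real interceptors are Java classes loaded by Flume; the shell functions below only illustrate the two decisions they make. The log formats are assumptions, not something this document specifies: a start log is taken to be a bare JSON object containing "en":"start", and an event log is taken to be a 13-digit millisecond timestamp, a `|`, and a JSON object. The function names is_valid_line and topic_for_line are hypothetical.

```shell
#!/bin/bash
# ASSUMED formats (not stated in this document):
#   start log: {"en":"start", ...}
#   event log: <13-digit ms timestamp>|{...}

# ETL decision: succeed only when the timestamp (event logs) is well formed
# and the JSON part at least starts with { and ends with }.
is_valid_line() {
  line="$1"
  case "$line" in
    *'"en":"start"'*) json="$line" ;;
    *'|'*)
      ts="${line%%|*}"
      json="${line#*|}"
      printf '%s\n' "$ts" | grep -Eq '^[0-9]{13}$' || return 1
      ;;
    *) return 1 ;;
  esac
  case "$json" in
    '{'*'}') return 0 ;;
    *) return 1 ;;
  esac
}

# Type decision: pick the value of the "topic" header that the multiplexing
# selector maps to a channel (topic_start -> c1, topic_event -> c2).
topic_for_line() {
  case "$1" in
    *'"en":"start"'*) echo topic_start ;;
    *) echo topic_event ;;
  esac
}
```

The header values returned by topic_for_line are the same ones used in the selector mapping of the Agent configuration, which is how each record ends up in the channel for its Kafka topic.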

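Packaging: after the interceptors are built, only the standalone jar is needed; the dependency jars do not have to be uploaded. The jar must be placed in Flume's lib directory.

Note: why are the dependency jars not needed? Because they already exist in Flume's lib directory.

As the root user, place flume-interceptor-1.0-SNAPSHOT.jar in the /opt/cloudera/parcels/CDH-5.12.1-1.cdh5.12.1.p0.3/lib/flume-ng/lib/ directory on hadoop101.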

[root@hadoop101 flume]# cd /opt/cloudera/parcels/CDH-5.12.1-1.cdh5.12.1.p0.3/lib/flume-ng/lib/

[root@hadoop101 lib]# ll

total 608

lrwxrwxrwx 1 root root 41 Apr 5 18:31 apache-log4j-extras-1.1.jar -> ../../../jars/apache-log4j-extras-1.1.jar

lrwxrwxrwx 1 root root 29 Apr 5 18:31 async-1.4.0.jar -> ../../../jars/async-1.4.0.jar

lrwxrwxrwx 1 root root 34 Apr 5 18:31 asynchbase-1.7.0.jar -> ../../../jars/asynchbase-1.7.0.jar

lrwxrwxrwx 1 root root 30 Apr 5 18:31 avro-ipc.jar -> ../../../lib/avro/avro-ipc.jar

[root@hadoop101 lib]# ls | grep interceptor

flume-interceptor-1.0-SNAPSHOT.jar

Distribute the Flume interceptor jar to hadoop102

[root@hadoop101 lib]# xsync flume-interceptor-1.0-SNAPSHOT.jar

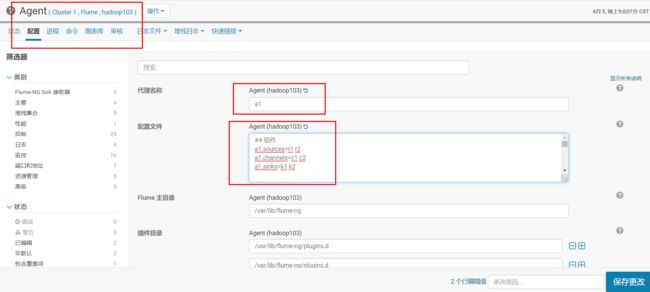

The Flume configuration steps are as follows:

(1) In the Flume configuration for hadoop103 on the CM admin page, find the Agent Name setting.

Generating Logs and Transferring the Data to HDFS

1) Upload log-collector-1.0-SNAPSHOT-jar-with-dependencies.jar to the /opt/module directory on hadoop102.

2) Distribute log-collector-1.0-SNAPSHOT-jar-with-dependencies.jar to hadoop103.

[root@hadoop102 module]# xsync log-collector-1.0-SNAPSHOT-jar-with-dependencies.jar

3) Create the script lg.sh in the /root/bin directory.

[root@hadoop102 bin]# vim lg.sh

4) Put the following content in the script:

#! /bin/bash

for i in hadoop102 hadoop103

do

ssh $i "java -classpath /opt/module/log-collector-1.0-SNAPSHOT-jar-with-dependencies.jar com.atguigu.appclient.AppMain $1 $2 >/opt/module/test.log &"

done

5) Make the script executable.

[root@hadoop102 bin]# chmod 777 lg.sh

6) Run the script.

[root@hadoop102 module]# lg.sh
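Before starting the generators on both hosts, it can help to see exactly which remote commands the script would issue. The sketch below restates lg.sh as a function with a dry-run switch. DRY_RUN and generate_logs are hypothetical names introduced here; the hosts, jar path, and main class are taken from the script above, and the two positional arguments are passed through unchanged, as in the original.

```shell
#!/bin/bash
# Sketch of lg.sh with a dry-run switch (DRY_RUN is a hypothetical variable).
HOSTS="hadoop102 hadoop103"
JAR=/opt/module/log-collector-1.0-SNAPSHOT-jar-with-dependencies.jar
MAIN=com.atguigu.appclient.AppMain

generate_logs() {
  for host in $HOSTS; do
    cmd="java -classpath $JAR $MAIN $1 $2 >/opt/module/test.log &"
    if [ "${DRY_RUN:-0}" = "1" ]; then
      # Only print what would be run on each host.
      echo "ssh $host \"$cmd\""
    else
      ssh "$host" "$cmd"
    fi
  done
}
```

With `DRY_RUN=1` set, calling generate_logs prints one ssh command per host and starts nothing; without it, the behavior is the same as lg.sh.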

Using Kafka

[root@hadoop101 cloudera]# ll

total 16

drwxr-xr-x 2 cloudera-scm cloudera-scm 4096 Apr 5 20:26 csd

drwxr-xr-x 2 root root 4096 Apr 5 20:39 parcel-cache

drwxr-xr-x 2 cloudera-scm cloudera-scm 4096 Apr 5 20:38 parcel-repo

drwxr-xr-x 6 cloudera-scm cloudera-scm 4096 Apr 5 20:40 parcels

[root@hadoop101 cloudera]# cd parcels/

[root@hadoop101 parcels]# ll

total 12

lrwxrwxrwx 1 root root 27 Apr 5 19:25 CDH -> CDH-5.12.1-1.cdh5.12.1.p0.3

drwxr-xr-x 11 root root 4096 Aug 25 2017 CDH-5.12.1-1.cdh5.12.1.p0.3

drwxr-xr-x 4 root root 4096 Dec 19 2014 HADOOP_LZO-0.4.15-1.gplextras.p0.123

lrwxrwxrwx 1 root root 25 Apr 5 20:40 KAFKA -> KAFKA-3.0.0-1.3.0.0.p0.40

drwxr-xr-x 6 root root 4096 Oct 6 2017 KAFKA-3.0.0-1.3.0.0.p0.40

[root@hadoop101 parcels]# cd KAFKA

[root@hadoop101 KAFKA]# ll

total 16

drwxr-xr-x 2 root root 4096 Oct 6 2017 bin

drwxr-xr-x 5 root root 4096 Oct 6 2017 etc

drwxr-xr-x 3 root root 4096 Oct 6 2017 lib

drwxr-xr-x 2 root root 4096 Oct 6 2017 meta

[root@hadoop101 KAFKA]# /opt/cloudera/parcels/KAFKA/bin/kafka-topics --zookeeper hadoop101:2181 --list

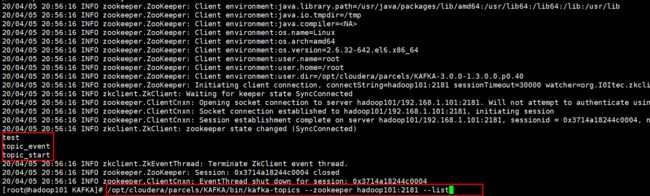

Creating Kafka Topics

Change to the /opt/cloudera/parcels/KAFKA directory and create two topics: one for start logs and one for event logs.

1) Create the start log topic

[root@hadoop101 KAFKA]# ll

total 16

drwxr-xr-x 2 root root 4096 Oct 6 2017 bin

drwxr-xr-x 5 root root 4096 Oct 6 2017 etc

drwxr-xr-x 3 root root 4096 Oct 6 2017 lib

drwxr-xr-x 2 root root 4096 Oct 6 2017 meta

[root@hadoop101 KAFKA]# bin/kafka-topics --zookeeper hadoop101:2181,hadoop102:2181,hadoop103:2181 --create --replication-factor 1 --partitions 1 --topic topic_start

2) Create the event log topic

[root@hadoop101 KAFKA]# bin/kafka-topics --zookeeper hadoop101:2181,hadoop102:2181,hadoop103:2181 --create --replication-factor 1 --partitions 1 --topic topic_event
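The two create commands differ only in the topic name, so they can be wrapped in a loop. This is a minimal sketch under a few assumptions: KAFKA_TOPICS, ZK_QUORUM, and create_topics are hypothetical names whose defaults come from the commands above, and --if-not-exists is added so that re-running the loop does not fail on a topic that is already there.

```shell
#!/bin/bash
# KAFKA_TOPICS and ZK_QUORUM are hypothetical variable names; the defaults
# are the binary path and ZooKeeper quorum used in the commands above.
KAFKA_TOPICS="${KAFKA_TOPICS:-/opt/cloudera/parcels/KAFKA/bin/kafka-topics}"
ZK_QUORUM="${ZK_QUORUM:-hadoop101:2181,hadoop102:2181,hadoop103:2181}"

# Create each named topic with 1 partition and 1 replica, as above.
create_topics() {
  for topic in "$@"; do
    "$KAFKA_TOPICS" --zookeeper "$ZK_QUORUM" --create --if-not-exists \
      --replication-factor 1 --partitions 1 --topic "$topic" || return 1
  done
}
```

Calling `create_topics topic_start topic_event` would then create both topics. Setting `KAFKA_TOPICS=echo` first prints the commands instead of running them.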

Test the Kafka producer:

[root@hadoop101 KAFKA]# bin/kafka-console-producer --broker-list hadoop101:9092 --topic test

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/lib/kafka/libs/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/lib/kafka/libs/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

20/04/05 21:00:45 INFO producer.ProducerConfig: ProducerConfig values:

acks = 1

batch.size = 16384

bootstrap.servers = [hadoop101:9092]

buffer.memory = 33554432

client.id = console-producer

compression.type = none

connections.max.idle.ms = 540000

enable.idempotence = false

interceptor.classes = null

key.serializer = class org.apache.kafka.common.serialization.ByteArraySerializer

linger.ms = 1000

max.block.ms = 60000

max.in.flight.requests.per.connection = 5

max.request.size = 1048576

metadata.max.age.ms = 300000

metric.reporters = []

metrics.num.samples = 2

metrics.recording.level = INFO

metrics.sample.window.ms = 30000

partitioner.class = class org.apache.kafka.clients.producer.internals.DefaultPartitioner

receive.buffer.bytes = 32768

reconnect.backoff.max.ms = 1000

reconnect.backoff.ms = 50

request.timeout.ms = 1500

retries = 3

retry.backoff.ms = 100

sasl.jaas.config = null

sasl.kerberos.kinit.cmd = /usr/bin/kinit

sasl.kerberos.min.time.before.relogin = 60000

sasl.kerberos.service.name = null

sasl.kerberos.ticket.renew.jitter = 0.05

sasl.kerberos.ticket.renew.window.factor = 0.8

sasl.mechanism = GSSAPI

security.protocol = PLAINTEXT

send.buffer.bytes = 102400

ssl.cipher.suites = null

ssl.enabled.protocols = [TLSv1.2, TLSv1.1, TLSv1]

ssl.endpoint.identification.algorithm = null

ssl.key.password = null

ssl.keymanager.algorithm = SunX509

ssl.keystore.location = null

ssl.keystore.password = null

ssl.keystore.type = JKS

ssl.protocol = TLS

ssl.provider = null

ssl.secure.random.implementation = null

ssl.trustmanager.algorithm = PKIX

ssl.truststore.location = null

ssl.truststore.password = null

ssl.truststore.type = JKS

transaction.timeout.ms = 60000

transactional.id = null

value.serializer = class org.apache.kafka.common.serialization.ByteArraySerializer

20/04/05 21:00:45 INFO utils.AppInfoParser: Kafka version : 0.11.0-kafka-3.0.0

20/04/05 21:00:45 INFO utils.AppInfoParser: Kafka commitId : unknown

>Hello World

>Hello kris

Test the Kafka consumer:

[root@hadoop101 KAFKA]# bin/kafka-console-consumer --bootstrap-server hadoop101:9092 --from-beginning --topic test

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/lib/kafka/libs/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/lib/kafka/libs/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

20/04/05 21:00:54 INFO consumer.ConsumerConfig: ConsumerConfig values:

auto.commit.interval.ms = 5000

auto.offset.reset = earliest

bootstrap.servers = [hadoop101:9092]

check.crcs = true

client.id =

connections.max.idle.ms = 540000

enable.auto.commit = true

exclude.internal.topics = true

fetch.max.bytes = 52428800

fetch.max.wait.ms = 500

fetch.min.bytes = 1

group.id = console-consumer-53066

heartbeat.interval.ms = 3000

interceptor.classes = null

internal.leave.group.on.close = true

isolation.level = read_uncommitted

key.deserializer = class org.apache.kafka.common.serialization.ByteArrayDeserializer

max.partition.fetch.bytes = 1048576

max.poll.interval.ms = 300000

max.poll.records = 500

metadata.max.age.ms = 300000

metric.reporters = []

metrics.num.samples = 2

metrics.recording.level = INFO

metrics.sample.window.ms = 30000

partition.assignment.strategy = [class org.apache.kafka.clients.consumer.RangeAssignor]

receive.buffer.bytes = 65536

reconnect.backoff.max.ms = 1000

reconnect.backoff.ms = 50

request.timeout.ms = 305000

retry.backoff.ms = 100

sasl.jaas.config = null

sasl.kerberos.kinit.cmd = /usr/bin/kinit

sasl.kerberos.min.time.before.relogin = 60000

sasl.kerberos.service.name = null

sasl.kerberos.ticket.renew.jitter = 0.05

sasl.kerberos.ticket.renew.window.factor = 0.8

sasl.mechanism = GSSAPI

security.protocol = PLAINTEXT

send.buffer.bytes = 131072

session.timeout.ms = 10000

ssl.cipher.suites = null

ssl.enabled.protocols = [TLSv1.2, TLSv1.1, TLSv1]

ssl.endpoint.identification.algorithm = null

ssl.key.password = null

ssl.keymanager.algorithm = SunX509

ssl.keystore.location = null

ssl.keystore.password = null

ssl.keystore.type = JKS

ssl.protocol = TLS

ssl.provider = null

ssl.secure.random.implementation = null

ssl.trustmanager.algorithm = PKIX

ssl.truststore.location = null

ssl.truststore.password = null

ssl.truststore.type = JKS

value.deserializer = class org.apache.kafka.common.serialization.ByteArrayDeserializer

20/04/05 21:00:54 INFO utils.AppInfoParser: Kafka version : 0.11.0-kafka-3.0.0

20/04/05 21:00:54 INFO utils.AppInfoParser: Kafka commitId : unknown

20/04/05 21:00:55 INFO internals.AbstractCoordinator: Discovered coordinator hadoop101:9092 (id: 2147483594 rack: null) for group console-consumer-53066.

20/04/05 21:00:55 INFO internals.ConsumerCoordinator: Revoking previously assigned partitions [] for group console-consumer-53066

20/04/05 21:00:55 INFO internals.AbstractCoordinator: (Re-)joining group console-consumer-53066

20/04/05 21:00:58 INFO internals.AbstractCoordinator: Successfully joined group console-consumer-53066 with generation 1

20/04/05 21:00:58 INFO internals.ConsumerCoordinator: Setting newly assigned partitions [test-0] for group console-consumer-53066

Hello World

Hello kris

View the details of a topic

[root@hadoop101 KAFKA]# bin/kafka-topics --zookeeper hadoop101:2181 --describe --topic test

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/lib/kafka/libs/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/lib/kafka/libs/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

20/04/05 21:02:39 INFO zkclient.ZkEventThread: Starting ZkClient event thread.

20/04/05 21:02:39 INFO zookeeper.ZooKeeper: Client environment:zookeeper.version=3.4.10-39d3a4f269333c922ed3db283be479f9deacaa0f, built on 03/23/2017 10:13 GMT

20/04/05 21:02:39 INFO zookeeper.ZooKeeper: Client environment:host.name=hadoop101

20/04/05 21:02:39 INFO zookeeper.ZooKeeper: Client environment:java.version=1.8.0_144

20/04/05 21:02:39 INFO zookeeper.ZooKeeper: Client environment:java.vendor=Oracle Corporation

20/04/05 21:02:39 INFO zookeeper.ZooKeeper: Client environment:java.home=/opt/module/jdk1.8.0_144/jre

20/04/05 21:02:39 INFO zookeeper.ZooKeeper: Client
environment:java.class.path=:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/activation-1.1.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/aopalliance-1.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/aopalliance-repackaged-2.5.0-b05.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/apacheds-i18n-2.0.0-M15.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/apacheds-kerberos-codec-2.0.0-M15.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/api-asn1-api-1.0.0-M20.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/api-util-1.0.0-M20.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/argparse4j-0.7.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/asm-3.1.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/avro-1.7.6-cdh5.11.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/cglib-2.2.1-v20090111.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/commons-beanutils-1.8.3.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/commons-beanutils-core-1.8.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/commons-cli-1.2.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/commons-codec-1.9.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/commons-collections-3.2.2.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/commons-compress-1.4.1.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/commons-configuration-1.6.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/commons-digester-1.8.jar:/opt/cloudera/par
cels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/commons-el-1.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/commons-httpclient-3.1.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/commons-io-2.4.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/commons-lang-2.6.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/commons-lang3-3.5.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/commons-logging-1.2.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/commons-math3-3.1.1.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/commons-net-3.1.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/commons-pool2-2.2.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/connect-api-0.11.0-kafka-3.0.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/connect-file-0.11.0-kafka-3.0.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/connect-json-0.11.0-kafka-3.0.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/connect-runtime-0.11.0-kafka-3.0.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/connect-transforms-0.11.0-kafka-3.0.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/gson-2.2.4.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/guava-20.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/guice-3.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/guice-servlet-3.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/hadoop-annotations-2.6.0-cdh5.11.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafk
a/bin/../libs/hadoop-auth-2.6.0-cdh5.11.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/hadoop-common-2.6.0-cdh5.11.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/hadoop-mapreduce-client-common-2.6.0-cdh5.11.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/hadoop-mapreduce-client-core-2.6.0-cdh5.11.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/hadoop-mapreduce-client-jobclient-2.6.0-cdh5.11.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/hadoop-mapreduce-client-shuffle-2.6.0-cdh5.11.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/hadoop-yarn-api-2.6.0-cdh5.11.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/hadoop-yarn-client-2.6.0-cdh5.11.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/hadoop-yarn-common-2.6.0-cdh5.11.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/hadoop-yarn-server-common-2.6.0-cdh5.11.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/hadoop-yarn-server-nodemanager-2.6.0-cdh5.11.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/hk2-api-2.5.0-b05.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/hk2-locator-2.5.0-b05.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/hk2-utils-2.5.0-b05.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/htrace-core4-4.0.1-incubating.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/httpclient-4.4.1.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/httpcore-4.4.1.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/jackson-annotations-2.8.5.jar:/opt/cloudera/parc
els/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/jackson-core-2.8.5.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/jackson-core-asl-1.8.8.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/jackson-databind-2.8.5.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/jackson-jaxrs-1.8.8.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/jackson-jaxrs-base-2.8.5.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/jackson-jaxrs-json-provider-2.8.5.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/jackson-mapper-asl-1.8.8.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/jackson-module-jaxb-annotations-2.8.5.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/jackson-xc-1.8.8.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/jasper-compiler-5.5.23.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/jasper-runtime-5.5.23.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/javassist-3.20.0-GA.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/javassist-3.21.0-GA.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/javax.annotation-api-1.2.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/javax.inject-1.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/javax.inject-2.5.0-b05.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/java-xmlbuilder-0.4.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/javax.servlet-api-3.1.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/javax.ws.rs-api-2.0.1.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0
.p0.40/bin/../lib/kafka/bin/../libs/jaxb-api-2.2.2.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/jersey-client-2.24.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/jersey-common-2.24.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/jersey-container-servlet-2.24.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/jersey-container-servlet-core-2.24.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/jersey-guava-2.24.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/jersey-guice-1.9.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/jersey-media-jaxb-2.24.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/jersey-server-2.24.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/jets3t-0.9.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/jettison-1.1.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/jetty-6.1.26.cloudera.4.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/jetty-continuation-9.2.15.v20160210.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/jetty-http-9.2.15.v20160210.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/jetty-io-9.2.15.v20160210.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/jetty-security-9.2.15.v20160210.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/jetty-server-9.2.15.v20160210.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/jetty-servlet-9.2.15.v20160210.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/jetty-servlets-9.2.15.v20160210.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.
p0.40/bin/../lib/kafka/bin/../libs/jetty-util-6.1.26.cloudera.4.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/jetty-util-9.2.15.v20160210.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/jopt-simple-5.0.3.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/jsch-0.1.42.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/jsp-api-2.1.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/jsr305-3.0.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/kafka_2.11-0.11.0-kafka-3.0.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/kafka_2.11-0.11.0-kafka-3.0.0-sources.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/kafka_2.11-0.11.0-kafka-3.0.0-test-sources.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/kafka-clients-0.11.0-kafka-3.0.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/kafka-log4j-appender-0.11.0-kafka-3.0.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/kafka-streams-0.11.0-kafka-3.0.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/kafka-streams-examples-0.11.0-kafka-3.0.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/kafka-tools-0.11.0-kafka-3.0.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/leveldbjni-all-1.8.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/libthrift-0.9.3.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/log4j-1.2.17.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/lz4-1.3.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/maven-artifact-3.5.0.jar:/opt/cloudera/parcels/
KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/metrics-core-2.2.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/metrics-servlet-2.2.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/osgi-resource-locator-1.0.1.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/paranamer-2.3.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/plexus-utils-3.0.24.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/protobuf-java-2.5.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/reflections-0.9.11.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/rocksdbjni-5.0.1.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/scala-library-2.11.11.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/scala-parser-combinators_2.11-1.0.4.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/sentry-binding-kafka-1.5.1-cdh5.11.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/sentry-core-common-1.5.1-cdh5.11.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/sentry-core-model-db-1.5.1-cdh5.11.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/sentry-core-model-kafka-1.5.1-cdh5.11.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/sentry-policy-common-1.5.1-cdh5.11.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/sentry-policy-kafka-1.5.1-cdh5.11.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/sentry-provider-common-1.5.1-cdh5.11.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/sentry-provider-db-1.5.1-cdh5.11.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin
/../lib/kafka/bin/../libs/sentry-provider-file-1.5.1-cdh5.11.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/servlet-api-2.5.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/shiro-core-1.2.3.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/slf4j-api-1.7.25.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/slf4j-api-1.7.5.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/slf4j-log4j12-1.7.25.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/slf4j-log4j12-1.7.5.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/snappy-java-1.1.2.6.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/stax-api-1.0-2.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/validation-api-1.1.0.Final.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/xmlenc-0.52.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/xz-1.0.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/zkclient-0.10.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/zookeeper-3.4.10.jar:/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40/bin/../lib/kafka/bin/../libs/zookeeper-3.4.5-cdh5.13.0.jar 20/04/05 21:02:39 INFO zookeeper.ZooKeeper: Client environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib 20/04/05 21:02:39 INFO zookeeper.ZooKeeper: Client environment:java.io.tmpdir=/tmp 20/04/05 21:02:39 INFO zookeeper.ZooKeeper: Client environment:java.compiler=20/04/05 21:02:39 INFO zookeeper.ZooKeeper: Client environment:os.name=Linux 20/04/05 21:02:39 INFO zookeeper.ZooKeeper: Client environment:os.arch=amd64 20/04/05 21:02:39 INFO zookeeper.ZooKeeper: Client environment:os.version=2.6.32-642.el6.x86_64 
20/04/05 21:02:39 INFO zookeeper.ZooKeeper: Client environment:user.name=root

20/04/05 21:02:39 INFO zookeeper.ZooKeeper: Client environment:user.home=/root

20/04/05 21:02:39 INFO zookeeper.ZooKeeper: Client environment:user.dir=/opt/cloudera/parcels/KAFKA-3.0.0-1.3.0.0.p0.40

20/04/05 21:02:39 INFO zookeeper.ZooKeeper: Initiating client connection, connectString=hadoop101:2181 sessionTimeout=30000 watcher=org.I0Itec.zkclient.ZkClient@52e6fdee

20/04/05 21:02:39 INFO zkclient.ZkClient: Waiting for keeper state SyncConnected

20/04/05 21:02:39 INFO zookeeper.ClientCnxn: Opening socket connection to server hadoop101/192.168.1.101:2181. Will not attempt to authenticate using SASL (unknown error)

20/04/05 21:02:39 INFO zookeeper.ClientCnxn: Socket connection established to hadoop101/192.168.1.101:2181, initiating session

20/04/05 21:02:39 INFO zookeeper.ClientCnxn: Session establishment complete on server hadoop101/192.168.1.101:2181, sessionid = 0x3714a18244c0005, negotiated timeout = 30000

20/04/05 21:02:39 INFO zkclient.ZkClient: zookeeper state changed (SyncConnected)

Topic:test PartitionCount:1 ReplicationFactor:1 Configs:

Topic: test Partition: 0 Leader: 52 Replicas: 52 Isr: 52

20/04/05 21:02:39 INFO zkclient.ZkEventThread: Terminate ZkClient event thread.

20/04/05 21:02:39 INFO zookeeper.ZooKeeper: Session: 0x3714a18244c0005 closed

20/04/05 21:02:39 INFO zookeeper.ClientCnxn: EventThread shut down for session: 0x3714a18244c0005

Deleting Kafka Topics

1) Delete the start log topic

[root@hadoop101 KAFKA]# bin/kafka-topics --delete --zookeeper hadoop101:2181,hadoop102:2181,hadoop103:2181 --topic topic_start

2) Delete the event log topic

[root@hadoop101 KAFKA]# bin/kafka-topics --delete --zookeeper hadoop101:2181,hadoop102:2181,hadoop103:2181 --topic topic_event

End-to-End Scheduling of the GMV Metric with Oozie via Hue

1 Preparation before execution

1) Add the MySQL driver file

[root@hadoop101 ~]# cp /opt/software/mysql-libs/mysql-connector-java-5.1.27/mysql-connector-java-5.1.27-bin.jar /opt/cloudera/parcels/CDH-5.12.1-1.cdh5.12.1.p0.3/lib/hadoop/lib

[root@hadoop101 ~]# cp /opt/software/mysql-libs/mysql-connector-java-5.1.27/mysql-connector-java-5.1.27-bin.jar /opt/cloudera/parcels/CDH-5.12.1-1.cdh5.12.1.p0.3/lib/sqoop/lib/

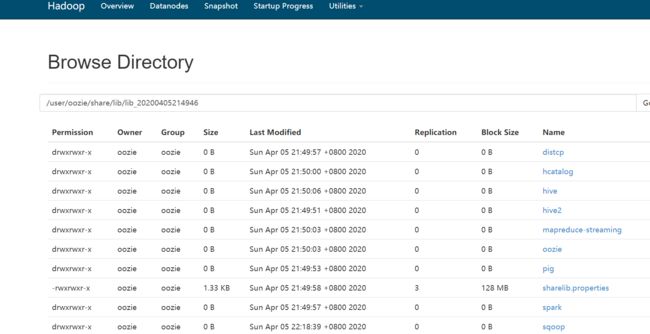

[root@hadoop101 ~]# hadoop fs -put /opt/software/mysql-libs/mysql-connector-java-5.1.27/mysql-connector-java-5.1.27-bin.jar /user/oozie/share/lib/lib_20190903103134/sqoop

put: `/user/oozie/share/lib/lib_20190903103134/sqoop': No such file or directory

[root@hadoop101 ~]# hadoop fs -put /opt/software/mysql-libs/mysql-connector-java-5.1.27/mysql-connector-java-5.1.27-bin.jar /user/oozie/share/lib/lib_20200405214946/sqoop
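The first put above fails because the timestamped lib_... directory named in the command does not exist on this cluster; the second uses the directory that does. Rather than guessing the name, it can be read from a listing of /user/oozie/share/lib. The helper below is a minimal sketch: latest_sharelib is a hypothetical function that picks the newest lib_ directory from `hadoop fs -ls` output, assuming the directory names end in a 14-digit timestamp as in the two examples above.

```shell
#!/bin/bash
# Hypothetical helper: read `hadoop fs -ls` output on stdin and print the
# newest lib_<14-digit timestamp> name (the timestamps sort lexicographically).
latest_sharelib() {
  grep -Eo 'lib_[0-9]{14}$' | sort | tail -n 1
}
```

A typical use would be `hadoop fs -ls /user/oozie/share/lib | latest_sharelib`, with the result substituted into the target path of the put command.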

[root@hadoop101 ~]# xsync /opt/cloudera/parcels/CDH-5.12.1-1.cdh5.12.1.p0.3/lib/hadoop/lib

fname=lib

pdir=/opt/cloudera/parcels/CDH-5.12.1-1.cdh5.12.1.p0.3/lib/hadoop

------------------- hadoop102 --------------

sending incremental file list

lib/

lib/mysql-connector-java-5.1.27-bin.jar

sent 877414 bytes received 246 bytes 1755320.00 bytes/sec

total size is 3041243 speedup is 3.47

------------------- hadoop103 --------------

sending incremental file list

lib/

lib/mysql-connector-java-5.1.27-bin.jar

sent 877414 bytes received 246 bytes 585106.67 bytes/sec

total size is 3041243 speedup is 3.47

[root@hadoop101 ~]# xsync /opt/cloudera/parcels/CDH-5.12.1-1.cdh5.12.1.p0.3/lib/sqoop/lib/

fname=lib

pdir=/opt/cloudera/parcels/CDH-5.12.1-1.cdh5.12.1.p0.3/lib/sqoop

------------------- hadoop102 --------------

sending incremental file list

lib/

lib/mysql-connector-java-5.1.27-bin.jar

sent 874734 bytes received 134 bytes 1749736.00 bytes/sec

total size is 873514 speedup is 1.00

------------------- hadoop103 --------------

sending incremental file list

lib/

lib/mysql-connector-java-5.1.27-bin.jar

sent 874734 bytes received 134 bytes 1749736.00 bytes/sec

total size is 873514 speedup is 1.00

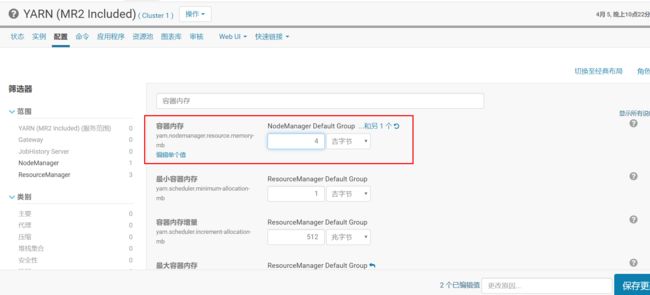

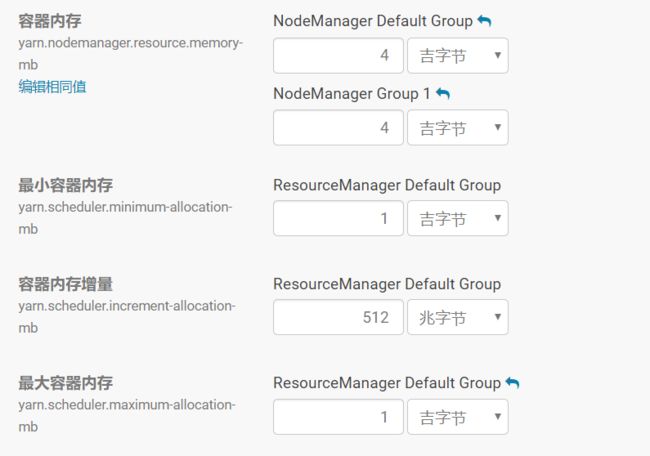

2) Set the YARN container memory, yarn.nodemanager.resource.memory-mb, to 4 GB.

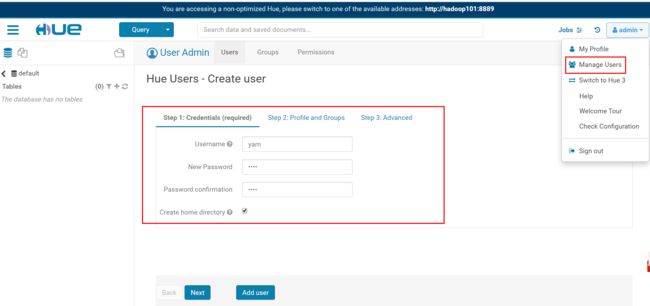

2 Create the Oozie GMV job in Hue

1) Generate data

Install the JavaScript library for the Oozie web console: copy ext-2.2.zip to /opt/cloudera/parcels/CDH/lib/oozie/libext (or /var/lib/oozie) and unzip it.

[root@hadoop101 libext]# ll

total 860

drwxr-xr-x 9 root root 4096 Aug 4 2008 ext-2.2

-rw-r--r-- 1 oozie oozie 872303 Apr 5 22:24 mysql-connector-java.jar

drwxr-xr-x 5 oozie oozie 4096 Apr 5 22:24 tomcat-deployment

[root@hadoop101 libext]# unzip /opt/software/ext-2.2.zip

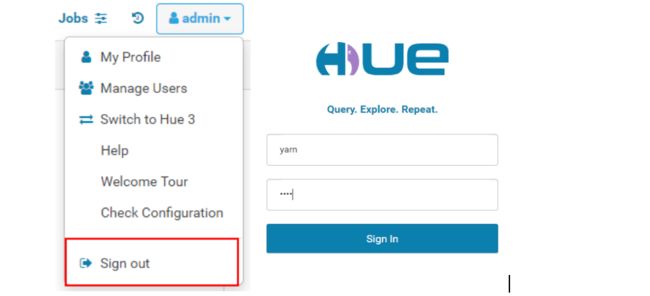

Open the Hue web page.

Select Query -> Scheduler -> Workflow.

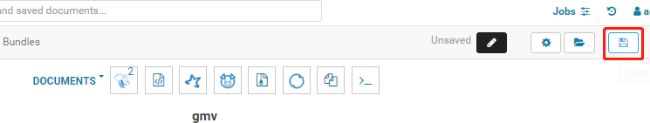

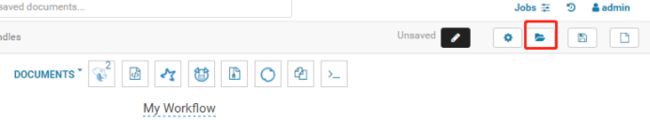

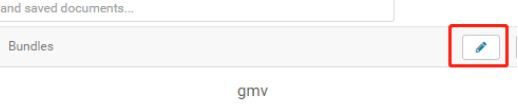

Click My Workflow and enter gmv.

Click Save.

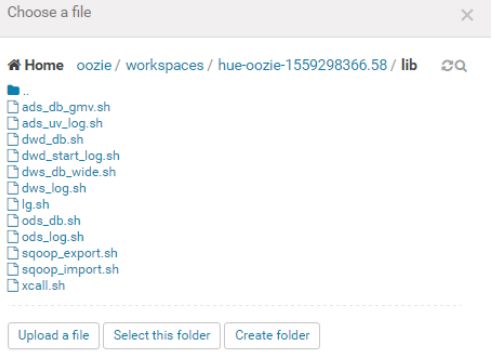

3 编写任务脚本并上传到HDFS

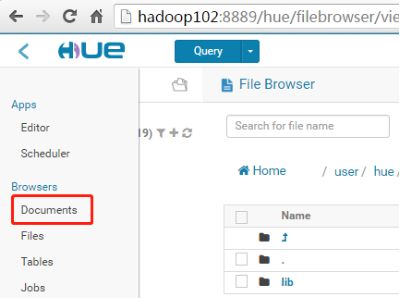

1)点击workspace,查看上传情况

2)上传要执行的脚本导HDFS路径

[root@hadoop101 bin]# hadoop fs -put /root/bin/*.sh /user/hue/oozie/workspaces/hue-oozie-1559457755.83/lib

3)点击左侧的->Documents->gmv

编写任务调度

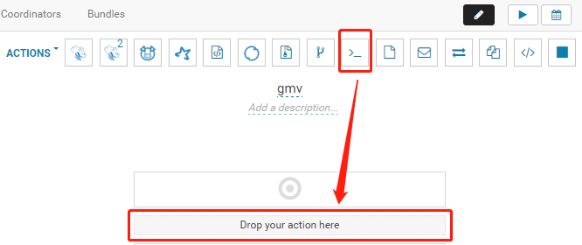

1)点击编辑

2)选择actions

3)拖拽控件编写任务

4)选择执行脚本

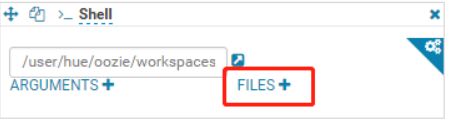

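5) Under Files, select the script again.

6) Define the script's parameter name.

7) Repeat steps 3 to 6, in order, for each of the following scripts:

ods_db.sh, dwd_db.sh, dws_db_wide.sh, ads_db_gmv.sh, and sqoop_export.sh

ods_db.sh, dwd_db.sh, dws_db_wide.sh, and ads_db_gmv.sh each take a single parameter, do_date;

sqoop_export.sh takes a single parameter, all.

8) Click Save.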
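The workflow defined in these steps runs the five scripts one after another. To check the scripts outside Oozie first, the same order can be reproduced in a small shell function. This is a sketch under stated assumptions: run_gmv_pipeline and SCRIPT_DIR are hypothetical names, /root/bin is the directory the scripts were uploaded from, and all is passed to sqoop_export.sh as a literal value.

```shell
#!/bin/bash
# SCRIPT_DIR is a hypothetical variable; /root/bin is the directory the
# scripts were uploaded to HDFS from.
SCRIPT_DIR="${SCRIPT_DIR:-/root/bin}"

run_gmv_pipeline() {
  do_date="$1"
  # The four warehouse scripts each take do_date; stop at the first failure.
  for script in ods_db.sh dwd_db.sh dws_db_wide.sh ads_db_gmv.sh; do
    bash "$SCRIPT_DIR/$script" "$do_date" || return 1
  done
  # The export script takes the single argument "all" (assumed literal).
  bash "$SCRIPT_DIR/sqoop_export.sh" all
}
```

It would be called with the date to process, in whatever format the scripts expect, for example `run_gmv_pipeline 2020-04-05` if they take a yyyy-mm-dd date. Stopping at the first failing script mirrors how a later workflow action should not run when an earlier one has failed.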

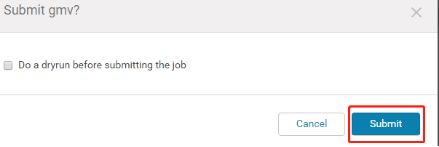

5 Run the scheduled job

1) Click Run.

2) Click Submit.