Big Data [50] - Flume [4]: Flume Interceptors - Custom Interceptors

I. Steps for Writing a Custom Interceptor

1. Implement the Interceptor interface

import org.apache.flume.interceptor.Interceptor;

public class MyInterceptor implements Interceptor{

}

2. Override the methods of the Interceptor interface:

Call order: initialize() --> intercept(Event) / intercept(List<Event>) --> close()
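The call order can be sketched without a Flume dependency. In the minimal sketch below, `SimpleEvent` and `SimpleInterceptor` are hypothetical stand-ins that only mirror the shape of Flume's `Event` and `Interceptor`; they are not the real API, and the `run()` driver merely imitates what the source side is assumed to do (initialize once, pass batches through, close once).

```java
import java.util.ArrayList;
import java.util.List;

public class LifecycleSketch {

    // Hypothetical stand-in for org.apache.flume.Event (body only).
    static class SimpleEvent {
        final byte[] body;
        SimpleEvent(byte[] body) { this.body = body; }
    }

    // Hypothetical stand-in mirroring the four methods of Flume's Interceptor.
    interface SimpleInterceptor {
        void initialize();
        SimpleEvent intercept(SimpleEvent event);
        List<SimpleEvent> intercept(List<SimpleEvent> events);
        void close();
    }

    // Records every call so the order can be inspected afterwards.
    static class RecordingInterceptor implements SimpleInterceptor {
        final List<String> calls = new ArrayList<>();

        @Override public void initialize() { calls.add("initialize"); }

        @Override public SimpleEvent intercept(SimpleEvent event) {
            calls.add("intercept(Event)");
            return event;
        }

        @Override public List<SimpleEvent> intercept(List<SimpleEvent> events) {
            calls.add("intercept(List)");
            List<SimpleEvent> out = new ArrayList<>();
            for (SimpleEvent e : events) {
                out.add(intercept(e)); // the batch version delegates to the single-event version
            }
            return out;
        }

        @Override public void close() { calls.add("close"); }
    }

    // Imitates the assumed lifecycle: initialize once, intercept one batch, close once.
    public static List<String> run() {
        RecordingInterceptor interceptor = new RecordingInterceptor();
        interceptor.initialize();
        List<SimpleEvent> batch = new ArrayList<>();
        batch.add(new SimpleEvent("a".getBytes()));
        batch.add(new SimpleEvent("b".getBytes()));
        interceptor.intercept(batch);
        interceptor.close();
        return interceptor.calls;
    }

    public static void main(String[] args) {
        System.out.println(run());
    }
}
```

The recorded list makes it visible that the batch method is just a loop over the single-event method, which is the same delegation pattern used in the case study of this post.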

@Override

public void initialize() {

}

@Override

public Event intercept(Event event) {

return null;

}

@Override

public List<Event> intercept(List<Event> list) {

return null;

}

@Override

public void close() {

}

3. Write a new class that implements the Interceptor.Builder interface

public static class Builder implements Interceptor.Builder{

}

4. Override the methods of the Interceptor.Builder interface

@Override

public Interceptor build() {

return null;

}

@Override

public void configure(Context context) {

}

II. Case 7: A Custom Flume Interceptor

(1) Goal

Convert the lowercase letters in a file to uppercase.
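The transformation itself is plain Java string handling on the event body bytes. The following is a minimal sketch of that step in isolation; the class and method names are hypothetical, and the explicit UTF-8 charset and `Locale.ROOT` are assumptions chosen here to avoid platform-dependent defaults, not something the original setup prescribes.

```java
import java.nio.charset.StandardCharsets;
import java.util.Locale;

public class UpperCaseBody {

    // Decodes the bytes as UTF-8, upper-cases with a fixed locale, and re-encodes as UTF-8.
    public static byte[] toUpperCase(byte[] body) {
        String text = new String(body, StandardCharsets.UTF_8);
        return text.toUpperCase(Locale.ROOT).getBytes(StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        byte[] in = "i love you".getBytes(StandardCharsets.UTF_8);
        // Prints the upper-cased text
        System.out.println(new String(toUpperCase(in), StandardCharsets.UTF_8));
    }
}
```

Calling `new String(bytes)` and `getBytes()` without a charset uses the JVM default, which may differ between the development machine and the Flume host, so pinning the charset is one way to keep results reproducible.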

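(2) Dependencies

<dependencies>
    <dependency>
        <groupId>org.apache.flume</groupId>
        <artifactId>flume-ng-core</artifactId>
        <version>1.8.0</version>
    </dependency>
</dependencies>

<build>
    <plugins>
        <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-jar-plugin</artifactId>
            <version>2.4</version>
            <configuration>
                <archive>
                    <manifest>
                        <addClasspath>true</addClasspath>
                        <classpathPrefix>lib/</classpathPrefix>
                    </manifest>
                </archive>
            </configuration>
        </plugin>
        <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-compiler-plugin</artifactId>
            <configuration>
                <source>1.8</source>
                <target>1.8</target>
                <encoding>utf-8</encoding>
            </configuration>
        </plugin>
    </plugins>
</build>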

(3) Code

package ToUpCase;

import org.apache.flume.Context;

import org.apache.flume.Event;

import org.apache.flume.interceptor.Interceptor;

import java.util.ArrayList;

import java.util.List;

public class MyInterceptor implements Interceptor{

@Override

public void initialize() {

// Log that initialize() was called (the Chinese message reads "initialize method called")
System.err.println("============初始化initialize方法=============");

}

/**
* Intercepts events sent from the source to the channel.
* @param event the event to process
* @return the event after processing
*/

@Override

public Event intercept(Event event) {

// Get the body bytes from the event object
byte[] arr = event.getBody();

// Convert the bytes to a String, then to uppercase
event.setBody(new String(arr).toUpperCase().getBytes());

// Return the modified event

return event;

}

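/**
* Processes a batch of events and returns the processed list.
*/
@Override
public List<Event> intercept(List<Event> events) {
List<Event> list = new ArrayList<>();

// Run each event through the single-event intercept and collect the results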

for(Event event : events){

list.add(intercept(event));

}

return list;

}

@Override

public void close() {

// Log that close() was called (the Chinese message reads "close method finished")
System.err.println("============结束close方法=============");

}

}

public static class Builder implements Interceptor.Builder{

//获取配置文件的属性

@Override

public Interceptor build() {

return new MyInterceptor();

}

@Override

public void configure(Context context) {

}

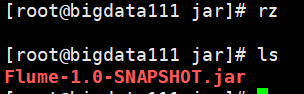

}(四)打包

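1. Build the JAR with Maven --> see Maven [6] - Packaging a Maven project / the clean command / the package command

2. Create a folder named jar under the Flume directory:

[root@bigdata111 flume-1.8.0]# mkdir jar

3. Upload the JAR into this jar directory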

(5) Configuration file

ToUpCase.conf (in /opt/module/flume-1.8.0/jobconf)

| #1.agent a1.sources = r1 a1.sinks =k1 a1.channels = c1

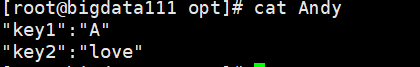

# Describe/configure the source a1.sources.r1.type = exec a1.sources.r1.command = tail -F /opt/Andy a1.sources.r1.interceptors = i1 #全类名$Builder a1.sources.r1.interceptors.i1.type = ToUpCase.MyInterceptor$Builder

# Describe the sink a1.sinks.k1.type = hdfs a1.sinks.k1.hdfs.path =hdfs://bigdata111:9000/ToUpCase1 a1.sinks.k1.hdfs.filePrefix = events- a1.sinks.k1.hdfs.round = true a1.sinks.k1.hdfs.roundValue = 10 a1.sinks.k1.hdfs.roundUnit = minute a1.sinks.k1.hdfs.rollInterval = 3 a1.sinks.k1.hdfs.rollSize = 20 a1.sinks.k1.hdfs.rollCount = 5 a1.sinks.k1.hdfs.batchSize = 1 a1.sinks.k1.hdfs.useLocalTimeStamp = true #生成的文件类型,默认是 Sequencefile,可用 DataStream,则为普通文本 a1.sinks.k1.hdfs.fileType = DataStream

# Use a channel which buffers events in memory a1.channels.c1.type = memory a1.channels.c1.capacity = 1000 a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel a1.sources.r1.channels = c1 a1.sinks.k1.channel = c1 |

Note:

a1.sources.r1.interceptors.i1.type = ToUpCase.MyInterceptor$Builder

Here ToUpCase is the package, and MyInterceptor and Builder are the names of the classes we wrote above.
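The `$` in the type value is how the JVM names a nested class: the binary name of a nested class is the outer class name, a `$`, and the nested class name. The sketch below illustrates this with hypothetical classes (`MyInterceptorSketch` and its nested `Builder` are stand-ins, not the classes from this post) and assumes that a framework such as Flume resolves the configured name by reflection in roughly this way.

```java
public class MyInterceptorSketch {

    // Hypothetical nested class standing in for MyInterceptor.Builder.
    public static class Builder {
        public MyInterceptorSketch build() {
            return new MyInterceptorSketch();
        }
    }

    // Loads a class by its binary name and creates an instance via the no-arg constructor.
    public static Object instantiate(String binaryName) {
        try {
            Class<?> clazz = Class.forName(binaryName);
            return clazz.getDeclaredConstructor().newInstance();
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("Cannot instantiate " + binaryName, e);
        }
    }

    public static void main(String[] args) {
        // The binary name joins outer and nested class with '$', not '.'
        String name = Builder.class.getName();
        System.out.println(name);
        System.out.println(instantiate(name) instanceof Builder);
    }
}
```

Writing the name with a dot instead of `$` (for example `ToUpCase.MyInterceptor.Builder`) would not match the binary name, so a reflective lookup like the one sketched here would fail with a `ClassNotFoundException`.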

(6) Run command:

bin/flume-ng agent -c conf/ -n a1 -f jobconf/ToUpCase.conf -C jar/Flume-1.0-SNAPSHOT.jar -Dflume.root.logger=DEBUG,console

(7) Test results

============初始化initialize方法=============

2019-11-04 06:58:17,966 (lifecycleSupervisor-1-4) [INFO - org.apache.flume.source.ExecSource.start(ExecSource.java:168)] Exec source starting with command: tail -F /opt/Andy

2019-11-04 06:58:17,970 (lifecycleSupervisor-1-4) [INFO - org.apache.flume.instrumentation.MonitoredCounterGroup.register(MonitoredCounterGroup.java:119)] Monitored counter group for type: SOURCE, name: r1: Successfully registered new MBean.

2019-11-04 06:58:17,971 (lifecycleSupervisor-1-4) [INFO - org.apache.flume.instrumentation.MonitoredCounterGroup.start(MonitoredCounterGroup.java:95)] Component type: SOURCE, name: r1 started

2019-11-04 06:58:18,012 (lifecycleSupervisor-1-1) [INFO - org.apache.flume.instrumentation.MonitoredCounterGroup.register(MonitoredCounterGroup.java:119)] Monitored counter group for type: SINK, name: k1: Successfully registered new MBean.

2019-11-04 06:58:18,013 (lifecycleSupervisor-1-1) [INFO - org.apache.flume.instrumentation.MonitoredCounterGroup.start(MonitoredCounterGroup.java:95)] Component type: SINK, name: k1 started

2019-11-04 06:58:18,027 (lifecycleSupervisor-1-4) [DEBUG - org.apache.flume.source.ExecSource.start(ExecSource.java:183)] Exec source started

2019-11-04 06:58:18,065 (SinkRunner-PollingRunner-DefaultSinkProcessor) [DEBUG - org.apache.flume.SinkRunner$PollingRunner.run(SinkRunner.java:141)] Polling sink runner starting

2019-11-04 06:58:22,105 (SinkRunner-PollingRunner-DefaultSinkProcessor) [INFO - org.apache.flume.sink.hdfs.HDFSDataStream.configure(HDFSDataStream.java:57)] Serializer = TEXT, UseRawLocalFileSystem = false

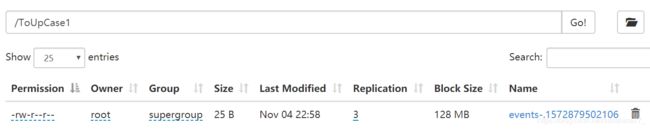

2019-11-04 06:58:24,714 (SinkRunner-PollingRunner-DefaultSinkProcessor) [INFO - org.apache.flume.sink.hdfs.BucketWriter.open(BucketWriter.java:251)] Creating hdfs://bigdata111:9000/ToUpCase1/events-.1572879502106.tmp

2019-11-04 06:58:27,041 (hdfs-k1-call-runner-0) [DEBUG - org.apache.htrace.core.Tracer$Builder.loadSamplers(Tracer.java:106)] sampler.classes = ; loaded no samplers

2019-11-04 06:58:27,086 (hdfs-k1-call-runner-0) [DEBUG - org.apache.htrace.core.Tracer$Builder.loadSpanReceivers(Tracer.java:128)] span.receiver.classes = ; loaded no span receivers

2019-11-04 06:58:32,201 (hdfs-k1-call-runner-0) [DEBUG - org.apache.flume.sink.hdfs.AbstractHDFSWriter.reflectGetNumCurrentReplicas(AbstractHDFSWriter.java:200)] Using getNumCurrentReplicas--HDFS-826

2019-11-04 06:58:32,217 (hdfs-k1-call-runner-0) [DEBUG - org.apache.flume.sink.hdfs.AbstractHDFSWriter.reflectGetDefaultReplication(AbstractHDFSWriter.java:228)] Using FileSystem.getDefaultReplication(Path) from HADOOP-8014

2019-11-04 06:58:35,220 (hdfs-k1-roll-timer-0) [DEBUG - org.apache.flume.sink.hdfs.BucketWriter$2.call(BucketWriter.java:291)] Rolling file (hdfs://bigdata111:9000/ToUpCase1/events-.1572879502106.tmp): Roll scheduled after 3 sec elapsed.

2019-11-04 06:58:35,220 (hdfs-k1-roll-timer-0) [INFO - org.apache.flume.sink.hdfs.BucketWriter.close(BucketWriter.java:393)] Closing hdfs://bigdata111:9000/ToUpCase1/events-.1572879502106.tmp

2019-11-04 06:58:35,353 (hdfs-k1-call-runner-6) [INFO - org.apache.flume.sink.hdfs.BucketWriter$8.call(BucketWriter.java:655)] Renaming hdfs://bigdata111:9000/ToUpCase1/events-.1572879502106.tmp to hdfs://bigdata111:9000/ToUpCase1/events-.1572879502106

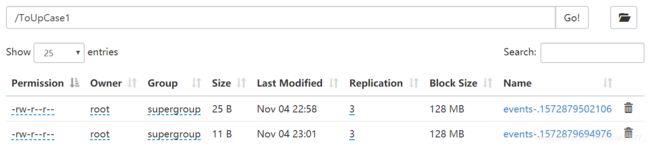

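Appending the string "i love you" produces a new file.

At second 35 the file is still a .tmp file; by second 38 it has become the final file. Because rollInterval is set to 3, the temporary file is rolled into the final target file after 3 seconds.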

2019-11-04 07:01:34,975 (SinkRunner-PollingRunner-DefaultSinkProcessor) [INFO - org.apache.flume.sink.hdfs.HDFSDataStream.configure(HDFSDataStream.java:57)] Serializer = TEXT, UseRawLocalFileSystem = false

2019-11-04 07:01:35,256 (SinkRunner-PollingRunner-DefaultSinkProcessor) [INFO - org.apache.flume.sink.hdfs.BucketWriter.open(BucketWriter.java:251)] Creating hdfs://bigdata111:9000/ToUpCase1/events-.1572879694976.tmp

2019-11-04 07:01:35,590 (hdfs-k1-call-runner-7) [DEBUG - org.apache.flume.sink.hdfs.AbstractHDFSWriter.reflectGetNumCurrentReplicas(AbstractHDFSWriter.java:200)] Using getNumCurrentReplicas--HDFS-826

2019-11-04 07:01:35,591 (hdfs-k1-call-runner-7) [DEBUG - org.apache.flume.sink.hdfs.AbstractHDFSWriter.reflectGetDefaultReplication(AbstractHDFSWriter.java:228)] Using FileSystem.getDefaultReplication(Path) from HADOOP-8014

2019-11-04 07:01:38,592 (hdfs-k1-roll-timer-0) [DEBUG - org.apache.flume.sink.hdfs.BucketWriter$2.call(BucketWriter.java:291)] Rolling file (hdfs://bigdata111:9000/ToUpCase1/events-.1572879694976.tmp): Roll scheduled after 3 sec elapsed.

2019-11-04 07:01:38,593 (hdfs-k1-roll-timer-0) [INFO - org.apache.flume.sink.hdfs.BucketWriter.close(BucketWriter.java:393)] Closing hdfs://bigdata111:9000/ToUpCase1/events-.1572879694976.tmp

2019-11-04 07:01:38,665 (hdfs-k1-call-runner-1) [INFO - org.apache.flume.sink.hdfs.BucketWriter$8.call(BucketWriter.java:655)] Renaming hdfs://bigdata111:9000/ToUpCase1/events-.1572879694976.tmp to hdfs://bigdata111:9000/ToUpCase1/events-.1572879694976

2019-11-04 07:01:38,684 (hdfs-k1-roll-timer-0) [INFO - org.apache.flume.sink.hdfs.HDFSEventSink$1.run(HDFSEventSink.java:382)] Writer callback called.

2019-11-04 07:01:48,010 (conf-file-poller-0) [DEBUG - org.apache.flume.node.PollingPropertiesFileConfigurationProvider$FileWatcherRunnable.run(PollingPropertiesFileConfigurationProvider.java:127)] Checking file:jobconf/ToUpCase.conf for changes

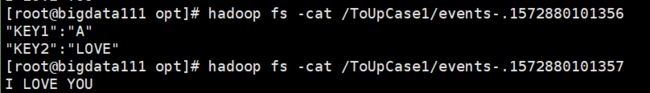
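The 3-second gap can be checked directly from the log timestamps. This is a small sketch that parses two of the timestamps shown above (the "Creating" and "Renaming" lines for events-.1572879694976) and computes the elapsed time; the class and method names are hypothetical, and the timestamp pattern is an assumption based on how the log lines in this post are formatted.

```java
import java.time.Duration;
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

public class RollTiming {

    // Timestamp layout used at the start of each log line, e.g. 2019-11-04 07:01:35,256
    private static final DateTimeFormatter LOG_TIME =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss,SSS");

    // Returns the number of milliseconds between two log timestamps.
    public static long millisBetween(String start, String end) {
        LocalDateTime a = LocalDateTime.parse(start, LOG_TIME);
        LocalDateTime b = LocalDateTime.parse(end, LOG_TIME);
        return Duration.between(a, b).toMillis();
    }

    public static void main(String[] args) {
        long elapsed = millisBetween("2019-11-04 07:01:35,256",   // "Creating ... .tmp"
                                     "2019-11-04 07:01:38,665");  // "Renaming ... .tmp to ..."
        System.out.println(elapsed + " ms");
    }
}
```

The result is slightly more than 3000 ms, which fits a 3-second timer plus the time needed to close and rename the file.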

[root@bigdata111 opt]# hadoop fs -cat /ToUpCase1/*

"KEY1":"A" "KEY2":"LOVE" I LOVE YOU

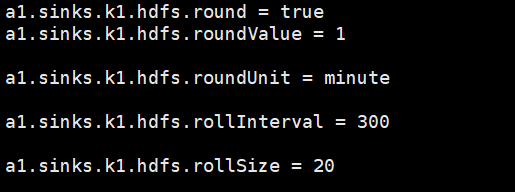

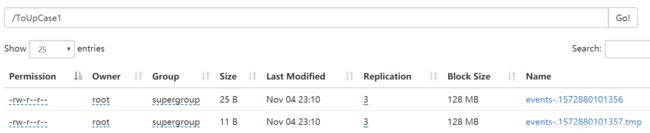

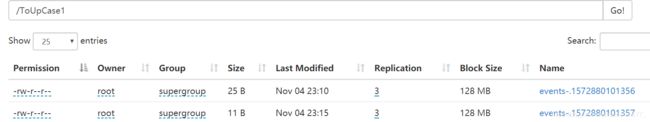

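(8) Testing rollInterval

If rollInterval is changed to 300 seconds and new text is appended before the 300 seconds are up, events-.**56.tmp is automatically renamed to events-.**56 and a new file events-.**57.tmp is created. This is consistent with the size and count triggers in the configuration (rollSize = 20, rollCount = 5), which can roll a file before the timer fires. Rolling is independent of the round settings.

Once the 300 seconds have elapsed, the .tmp file becomes the final file.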

2019-11-04 07:10:19,079 Creating hdfs://bigdata111:9000/ToUpCase1/events-.1572880101357.tmp

2019-11-04 07:15:19,436 Renaming hdfs://bigdata111:9000/ToUpCase1/events-.1572880101357.tmp to hdfs://bigdata111:9000/ToUpCase1/events-.1572880101357
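The behavior observed in these tests can be summarized as three independent roll triggers. The sketch below is a simplified model, not Flume's actual BucketWriter code: the class and method names are hypothetical, and it assumes, following the Flume user guide, that a file rolls as soon as any enabled trigger (time, size, or event count) is reached and that a value of 0 disables a trigger.

```java
public class RollPolicySketch {

    private final long rollIntervalSeconds; // 0 = never roll based on time
    private final long rollSizeBytes;       // 0 = never roll based on size
    private final long rollCountEvents;     // 0 = never roll based on event count

    public RollPolicySketch(long rollIntervalSeconds, long rollSizeBytes, long rollCountEvents) {
        this.rollIntervalSeconds = rollIntervalSeconds;
        this.rollSizeBytes = rollSizeBytes;
        this.rollCountEvents = rollCountEvents;
    }

    // Returns true when any enabled trigger has been reached.
    public boolean shouldRoll(long elapsedSeconds, long bytesWritten, long eventsWritten) {
        boolean byTime = rollIntervalSeconds > 0 && elapsedSeconds >= rollIntervalSeconds;
        boolean bySize = rollSizeBytes > 0 && bytesWritten >= rollSizeBytes;
        boolean byCount = rollCountEvents > 0 && eventsWritten >= rollCountEvents;
        return byTime || bySize || byCount;
    }

    public static void main(String[] args) {
        // Values from the second test: rollInterval = 300, rollSize = 20, rollCount = 5
        RollPolicySketch policy = new RollPolicySketch(300, 20, 5);
        System.out.println(policy.shouldRoll(10, 25, 1));  // size reached before the timer
        System.out.println(policy.shouldRoll(10, 5, 1));   // nothing reached yet
        System.out.println(policy.shouldRoll(300, 5, 1));  // timer reached
    }
}
```

Note that none of the round settings (round, roundValue, roundUnit) appear in this model; in the configuration above they only affect how timestamps in the HDFS path are rounded, not when a file is rolled.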