Studying How MediaRecorder Creates Its Surface

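When Android configures a camera stream, a Surface object has to be passed to cameraservice. While analyzing how SnapdragonCamera configures its HFR recording stream, I found that SnapdragonCamera obtains this surface by creating a MediaRecorder and calling: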

mMediaRecorder.getSurface()

This article mainly analyzes how MediaRecorder creates that surface, and along the way sorts out the code architecture of MediaRecorder.

Creation code in the APK:

// SnapdragonCamera app code; the MediaRecorder class it uses is in frameworks\base\media\java\android\media

private void setUpMediaRecorder(int cameraId) throws IOException {

Log.d(TAG, "setUpMediaRecorder");

...

if (mMediaRecorder == null) mMediaRecorder = new MediaRecorder();

mMediaRecorder.setVideoSource(MediaRecorder.VideoSource.SURFACE);

mMediaRecorder.setOutputFormat(mProfile.fileFormat);

mMediaRecorder.setOutputFile(fileName);

mMediaRecorder.setVideoFrameRate(mProfile.videoFrameRate);

mMediaRecorder.setVideoEncodingBitRate(mProfile.videoBitRate);

mMediaRecorder.setVideoSize(mProfile.videoFrameWidth, mProfile.videoFrameHeight);

mMediaRecorder.setVideoEncoder(videoEncoder);

mMediaRecorder.setMaxDuration(mMaxVideoDurationInMs);

try {

mMediaRecorder.setMaxFileSize(maxFileSize);

} catch (RuntimeException exception) {

}

try {

mMediaRecorder.prepare();

} catch (IOException e) {

}

}

Moving into MediaRecorder.java, the two parts of interest are the constructor MediaRecorder() and the prepare() method.

//frameworks\base\media\java\android\media\MediaRecorder.java

public MediaRecorder() {

....

String packageName = ActivityThread.currentPackageName();

/* Native setup requires a weak reference to our object.

* It's easier to create it here than in C++.

*/

native_setup(new WeakReference<MediaRecorder>(this), packageName,

ActivityThread.currentOpPackageName());

}

public void prepare() throws IllegalStateException, IOException

{

...

_prepare();

}

_prepare is a native method, as is native_setup, which the constructor calls. They are declared as follows:

//frameworks\base\media\java\android\media\MediaRecorder.java

private native final void native_setup(Object mediarecorder_this,

String clientName, String opPackageName) throws IllegalStateException;

private native void _prepare() throws IllegalStateException, IOException;

Now into the JNI layer implementation:

//frameworks\base\media\jni\android_media_MediaRecorder.cpp

static void android_media_MediaRecorder_native_setup(JNIEnv *env, jobject thiz, jobject weak_this,

jstring packageName, jstring opPackageName)

{

...

// Create the native MediaRecorder

sp<MediaRecorder> mr = new MediaRecorder(String16(opPackageNameStr.c_str()));

// create new listener and give it to MediaRecorder

sp<JNIMediaRecorderListener> listener = new JNIMediaRecorderListener(env, thiz, weak_this);

mr->setListener(listener);

// pass client package name for permissions tracking

mr->setClientName(clientName);

}

static void android_media_MediaRecorder_prepare(JNIEnv *env, jobject thiz)

{

sp<MediaRecorder> mr = getMediaRecorder(env, thiz);

// In this flow the surface read here is null

jobject surface = env->GetObjectField(thiz, fields.surface);

if (surface != NULL) {

....

}

// Call mr->prepare(); the MediaRecorder's surface is created inside this call.

process_media_recorder_call(env, mr->prepare(), "java/io/IOException", "prepare failed.");

}

Now into mediarecorder.cpp:

//frameworks\av\media\libmedia\mediarecorder.cpp

// Constructor

MediaRecorder::MediaRecorder(const String16& opPackageName) : mSurfaceMediaSource(NULL)

{

ALOGV("constructor");

// Get the proxy object for the IMediaPlayerService service (actually a BpMediaPlayerService object)

const sp<IMediaPlayerService> service(getMediaPlayerService());

if (service != NULL) {

// Use the IMediaPlayerService service to create the IMediaRecorder object mMediaRecorder (actually a BpMediaRecorder)

mMediaRecorder = service->createMediaRecorder(opPackageName);

}

if (mMediaRecorder != NULL) {

mCurrentState = MEDIA_RECORDER_IDLE;

}

doCleanUp();

}

The prepare method:

//frameworks\av\media\libmedia\mediarecorder.cpp

status_t MediaRecorder::prepare()

{

ALOGV("prepare");

...

status_t ret = mMediaRecorder->prepare();

...

return ret;

}

Code that obtains the IMediaPlayerService proxy:

// establish binder interface to MediaPlayerService

/*static*/const sp<IMediaPlayerService>

IMediaDeathNotifier::getMediaPlayerService()

{

ALOGV("getMediaPlayerService");

Mutex::Autolock _l(sServiceLock);

if (sMediaPlayerService == 0) {

sp<IServiceManager> sm = defaultServiceManager();

sp<IBinder> binder;

do {

binder = sm->getService(String16("media.player"));

if (binder != 0) {

break;

}

ALOGW("Media player service not published, waiting...");

usleep(500000); // 0.5 s

} while (true);

if (sDeathNotifier == NULL) {

sDeathNotifier = new DeathNotifier();

}

binder->linkToDeath(sDeathNotifier);

sMediaPlayerService = interface_cast<IMediaPlayerService>(binder);

}

ALOGE_IF(sMediaPlayerService == 0, "no media player service!?");

return sMediaPlayerService;

}
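The lookup above combines two ideas: poll the service manager until the service is published, then cache the result so later callers skip the lookup. The following is a minimal sketch of that pattern only, not Android code. `FakeBinder`, `FakeServiceManager`, and `ServiceCache` are hypothetical names, the `onWait` callback stands in for `usleep(500000)`, and the `maxTries` bound is an addition for the sketch (the excerpt retries forever).

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <memory>
#include <string>

// Hypothetical stand-in for an IBinder; not an Android type.
struct FakeBinder {
    std::string name;
};

// Hypothetical registry playing the role of the service manager.
class FakeServiceManager {
public:
    void addService(const std::string& name) {
        mServices[name] = std::make_shared<FakeBinder>(FakeBinder{name});
    }

    // Returns nullptr while the service has not been published yet.
    std::shared_ptr<FakeBinder> getService(const std::string& name) const {
        auto it = mServices.find(name);
        return it == mServices.end() ? nullptr : it->second;
    }

private:
    std::map<std::string, std::shared_ptr<FakeBinder>> mServices;
};

// Caches the first successful lookup, in the role of sMediaPlayerService.
class ServiceCache {
public:
    // onWait stands in for usleep(500000). maxTries bounds the loop, which
    // the excerpt does not do.
    std::shared_ptr<FakeBinder> get(const FakeServiceManager& sm,
                                    const std::string& name,
                                    int maxTries,
                                    const std::function<void()>& onWait) {
        if (mCached) {
            return mCached;  // later calls skip the lookup entirely
        }
        for (int attempt = 0; attempt < maxTries; ++attempt) {
            ++mLookups;
            mCached = sm.getService(name);
            if (mCached) {
                break;
            }
            onWait();
        }
        return mCached;
    }

    int lookups() const { return mLookups; }

private:
    std::shared_ptr<FakeBinder> mCached;
    int mLookups = 0;
};
```

A caller would invoke `cache.get(sm, "media.player", 5, waitFn)` once and reuse the returned pointer. The real code additionally links a death notifier to the binder so the cache can be cleared if mediaserver dies.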

Code implementing the IMediaPlayerService service:

// rc file

//frameworks\av\media\mediaserver\mediaserver.rc

service media /system/bin/mediaserver

class main

user media

group audio camera inet net_bt net_bt_admin net_bw_acct drmrpc mediadrm

ioprio rt 4

writepid /dev/cpuset/foreground/tasks /dev/stune/foreground/tasks

Process entry point:

//frameworks\av\media\mediaserver\main_mediaserver.cpp

int main(int argc __unused, char **argv __unused)

{

signal(SIGPIPE, SIG_IGN);

sp<ProcessState> proc(ProcessState::self());

sp<IServiceManager> sm(defaultServiceManager());

ALOGI("ServiceManager: %p", sm.get());

InitializeIcuOrDie();

// Register the services with IServiceManager

MediaPlayerService::instantiate();

ResourceManagerService::instantiate();

registerExtensions();

ProcessState::self()->startThreadPool();

IPCThreadState::self()->joinThreadPool();

}

Code that registers MediaPlayerService:

//frameworks\av\media\libmediaplayerservice\MediaPlayerService.cpp

void MediaPlayerService::instantiate() {

defaultServiceManager()->addService(

String16("media.player"), new MediaPlayerService());

}

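This shows that MediaPlayerService is registered with the service manager under the name "media.player".

Next, we continue with service->createMediaRecorder(opPackageName).

On the client side this lands in the proxy in IMediaPlayerService.cpp: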

//frameworks\av\media\libmedia\IMediaPlayerService.cpp

virtual sp<IMediaRecorder> createMediaRecorder(const String16 &opPackageName)

{

Parcel data, reply;

data.writeInterfaceToken(IMediaPlayerService::getInterfaceDescriptor());

data.writeString16(opPackageName);

//Binder IPC

remote()->transact(CREATE_MEDIA_RECORDER, data, &reply);

return interface_cast<IMediaRecorder>(reply.readStrongBinder());

}

On the server side, BnMediaPlayerService handles the transaction:

status_t BnMediaPlayerService::onTransact(

uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)

{

switch (code) {

....

case CREATE_MEDIA_RECORDER: {

CHECK_INTERFACE(IMediaPlayerService, data, reply);

const String16 opPackageName = data.readString16();

sp<IMediaRecorder> recorder = createMediaRecorder(opPackageName);

reply->writeStrongBinder(IInterface::asBinder(recorder));

return NO_ERROR;

} break;

....

default:

return BBinder::onTransact(code, data, reply, flags);

}

}

Then into MediaPlayerService, the class that implements BnMediaPlayerService:

sp<IMediaRecorder> MediaPlayerService::createMediaRecorder(const String16 &opPackageName)

{

pid_t pid = IPCThreadState::self()->getCallingPid();

// Create the MediaRecorderClient object

sp<MediaRecorderClient> recorder = new MediaRecorderClient(this, pid, opPackageName);

wp<MediaRecorderClient> w = recorder;

Mutex::Autolock lock(mLock);

mMediaRecorderClients.add(w);

ALOGV("Create new media recorder client from pid %d", pid);

return recorder;

}

So what createMediaRecorder(opPackageName) returns to mediarecorder.cpp is actually a BpMediaRecorder, the proxy object for the MediaRecorderClient.
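The round trip traced above (proxy packs arguments, a transaction code selects the operation, the stub unpacks and calls the real implementation) can be sketched without Binder at all. Everything below is a toy under stated assumptions: `ToyParcel`, `IToyService`, `BnToyService`, `BpToyService`, and `ToyService` are hypothetical names, a direct function call replaces the binder driver, and a string handle replaces the strong binder that the real reply carries.

```cpp
#include <cassert>
#include <cstdint>
#include <deque>
#include <memory>
#include <string>
#include <utility>

// Toy parcel: a FIFO of strings (the real Parcel is a typed byte buffer).
struct ToyParcel {
    std::deque<std::string> fields;
    void writeString(const std::string& s) { fields.push_back(s); }
    std::string readString() {
        std::string s = fields.front();
        fields.pop_front();
        return s;
    }
};

enum : uint32_t { CREATE_MEDIA_RECORDER = 1 };

// Interface shared by both sides, in the role of IMediaPlayerService.
class IToyService {
public:
    virtual ~IToyService() = default;
    virtual std::string createMediaRecorder(const std::string& opPackageName) = 0;
};

// Server-side stub (the Bn role): decodes the request, calls the implementation.
class BnToyService : public IToyService {
public:
    int onTransact(uint32_t code, ToyParcel& data, ToyParcel* reply) {
        switch (code) {
            case CREATE_MEDIA_RECORDER: {
                const std::string pkg = data.readString();
                reply->writeString(createMediaRecorder(pkg));
                return 0;
            }
            default:
                return -1;  // unknown transaction code
        }
    }
};

// Concrete service, in the role of MediaPlayerService.
class ToyService : public BnToyService {
public:
    std::string createMediaRecorder(const std::string& opPackageName) override {
        return "recorder-for-" + opPackageName;
    }
};

// Client-side proxy (the Bp role): encodes the call and forwards it.
class BpToyService : public IToyService {
public:
    explicit BpToyService(std::shared_ptr<BnToyService> remote)
        : mRemote(std::move(remote)) {}

    std::string createMediaRecorder(const std::string& opPackageName) override {
        ToyParcel data, reply;
        data.writeString(opPackageName);
        if (mRemote->onTransact(CREATE_MEDIA_RECORDER, data, &reply) != 0) {
            return std::string();  // transaction failed
        }
        return reply.readString();
    }

private:
    std::shared_ptr<BnToyService> mRemote;
};
```

The caller only ever sees `IToyService`, which is why mediarecorder.cpp can treat the returned BpMediaRecorder exactly as if it were the MediaRecorderClient living in the mediaserver process.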

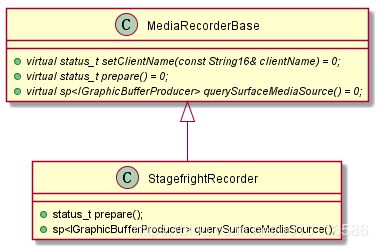

MediaRecorderClient类继承实现了IMediaRecorder,类图下如:

The member variable mRecorder of MediaRecorderClient is created in its constructor; its type is StagefrightRecorder.

//frameworks\av\media\libmediaplayerservice\MediaRecorderClient.cpp

MediaRecorderClient::MediaRecorderClient(const sp<MediaPlayerService>& service, pid_t pid,

const String16& opPackageName)

{

ALOGV("Client constructor");

mPid = pid;

mRecorder = AVMediaServiceFactory::get()->createStagefrightRecorder(opPackageName);

mMediaPlayerService = service;

}

createStagefrightRecorder creates a StagefrightRecorder:

//frameworks\av\media\libmediaplayerservice\StagefrightRecorder.cpp

StagefrightRecorder *AVMediaServiceFactory::createStagefrightRecorder(

const String16 &opPackageName) {

return new StagefrightRecorder(opPackageName);

}

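[Figure: StagefrightRecorder class diagram]

MediaRecorderClient carries out prepare and the other operations through its mRecorder object. The code is as follows: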

//frameworks\av\media\libmediaplayerservice\MediaRecorderClient.cpp

status_t MediaRecorderClient::prepare()

{

ALOGV("prepare");

Mutex::Autolock lock(mLock);

if (mRecorder == NULL) {

ALOGE("recorder is not initialized");

return NO_INIT;

}

return mRecorder->prepare();

}

Now into StagefrightRecorder.cpp:

//frameworks\av\media\libmediaplayerservice\StagefrightRecorder.cpp

status_t StagefrightRecorder::prepare() {

ALOGV("prepare");

Mutex::Autolock autolock(mLock);

// The APK has already set mVideoSource to VIDEO_SOURCE_SURFACE

if (mVideoSource == VIDEO_SOURCE_SURFACE) {

return prepareInternal();

}

return OK;

}

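Now into prepareInternal(), which sets up the encoder and writer required by the configured output format. In this article's case the path taken is setupMPEG4orWEBMRecording.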

//frameworks\av\media\libmediaplayerservice\StagefrightRecorder.cpp

status_t StagefrightRecorder::prepareInternal() {

ALOGV("prepare");

if (mOutputFd < 0) {

ALOGE("Output file descriptor is invalid");

return INVALID_OPERATION;

}

// Get UID and PID here for permission checking

mClientUid = IPCThreadState::self()->getCallingUid();

mClientPid = IPCThreadState::self()->getCallingPid();

status_t status = OK;

switch (mOutputFormat) {

case OUTPUT_FORMAT_DEFAULT:

case OUTPUT_FORMAT_THREE_GPP:

case OUTPUT_FORMAT_MPEG_4:

case OUTPUT_FORMAT_WEBM:

// Set up the MPEG4 or WebM recording path

status = setupMPEG4orWEBMRecording();

break;

case OUTPUT_FORMAT_AMR_NB:

case OUTPUT_FORMAT_AMR_WB:

status = setupAMRRecording();

break;

case OUTPUT_FORMAT_AAC_ADIF:

case OUTPUT_FORMAT_AAC_ADTS:

status = setupAACRecording();

break;

case OUTPUT_FORMAT_RTP_AVP:

status = setupRTPRecording();

break;

case OUTPUT_FORMAT_MPEG2TS:

status = setupMPEG2TSRecording();

break;

default:

if (handleCustomRecording() != OK) {

ALOGE("Unsupported output file format: %d", mOutputFormat);

status = UNKNOWN_ERROR;

}

break;

}

ALOGV("Recording frameRate: %d captureFps: %f",

mFrameRate, mCaptureFps);

return status;

}

Implementation of setupMPEG4orWEBMRecording:

//frameworks\av\media\libmediaplayerservice\StagefrightRecorder.cpp

status_t StagefrightRecorder::setupMPEG4orWEBMRecording() {

mWriter.clear();

mTotalBitRate = 0;

status_t err = OK;

sp<MediaWriter> writer;

sp<MPEG4Writer> mp4writer;

if (mOutputFormat == OUTPUT_FORMAT_WEBM) {

writer = new WebmWriter(mOutputFd);

} else {

// An MPEG4Writer is created here via CreateMPEG4Writer

writer = mp4writer = AVFactory::get()->CreateMPEG4Writer(mOutputFd);

}

if (mVideoSource < VIDEO_SOURCE_LIST_END) {

setDefaultVideoEncoderIfNecessary();

sp<MediaSource> mediaSource;

// Create mediaSource; in this article's case it should come back null

err = setupMediaSource(&mediaSource);

if (err != OK) {

return err;

}

// Create the encoder; this is the focus of the next step

sp<MediaCodecSource> encoder;

err = setupVideoEncoder(mediaSource, &encoder);

if (err != OK) {

return err;

}

// Add the encoder to the MPEG4Writer as a source

writer->addSource(encoder);

mVideoEncoderSource = encoder;

mTotalBitRate += mVideoBitRate;

}

if (mOutputFormat != OUTPUT_FORMAT_WEBM) {

// Audio source is added at the end if it exists.

// This help make sure that the "recoding" sound is suppressed for

// camcorder applications in the recorded files.

// TODO Audio source is currently unsupported for webm output; vorbis encoder needed.

// disable audio for time lapse recording

bool disableAudio = mCaptureFpsEnable && mCaptureFps < mFrameRate;

if (!disableAudio && mAudioSource != AUDIO_SOURCE_CNT) {

err = setupAudioEncoder(writer);

if (err != OK) return err;

mTotalBitRate += mAudioBitRate;

}

if (mCaptureFpsEnable) {

mp4writer->setCaptureRate(mCaptureFps);

}

if (mInterleaveDurationUs > 0) {

mp4writer->setInterleaveDuration(mInterleaveDurationUs);

}

if (mLongitudex10000 > -3600000 && mLatitudex10000 > -3600000) {

mp4writer->setGeoData(mLatitudex10000, mLongitudex10000);

}

}

if (mMaxFileDurationUs != 0) {

writer->setMaxFileDuration(mMaxFileDurationUs);

}

if (mMaxFileSizeBytes != 0) {

writer->setMaxFileSize(mMaxFileSizeBytes);

}

if (mVideoSource == VIDEO_SOURCE_DEFAULT

|| mVideoSource == VIDEO_SOURCE_CAMERA) {

mStartTimeOffsetMs = mEncoderProfiles->getStartTimeOffsetMs(mCameraId);

} else if (mVideoSource == VIDEO_SOURCE_SURFACE) {

// surface source doesn't need large initial delay

mStartTimeOffsetMs = 100;

}

if (mStartTimeOffsetMs > 0) {

writer->setStartTimeOffsetMs(mStartTimeOffsetMs);

}

writer->setListener(mListener);

mWriter = writer;

return OK;

}

Let's analyze setupVideoEncoder:

status_t StagefrightRecorder::setupVideoEncoder(

const sp<MediaSource> &cameraSource,

sp<MediaCodecSource> *source) {

source->clear();

sp<AMessage> format = new AMessage();

// Does this article's case use VIDEO_ENCODER_H264, or VIDEO_ENCODER_MPEG_4_SP? To be confirmed

switch (mVideoEncoder) {

case VIDEO_ENCODER_H263:

format->setString("mime", MEDIA_MIMETYPE_VIDEO_H263);

break;

case VIDEO_ENCODER_MPEG_4_SP:

format->setString("mime", MEDIA_MIMETYPE_VIDEO_MPEG4);

break;

case VIDEO_ENCODER_H264:

format->setString("mime", MEDIA_MIMETYPE_VIDEO_AVC);

break;

case VIDEO_ENCODER_VP8:

format->setString("mime", MEDIA_MIMETYPE_VIDEO_VP8);

break;

case VIDEO_ENCODER_HEVC:

format->setString("mime", MEDIA_MIMETYPE_VIDEO_HEVC);

break;

default:

CHECK(!"Should not be here, unsupported video encoding.");

break;

}

// cameraSource is NULL in this case

if (cameraSource != NULL) {

sp<MetaData> meta = cameraSource->getFormat();

int32_t width, height, stride, sliceHeight, colorFormat;

CHECK(meta->findInt32(kKeyWidth, &width));

CHECK(meta->findInt32(kKeyHeight, &height));

CHECK(meta->findInt32(kKeyStride, &stride));

CHECK(meta->findInt32(kKeySliceHeight, &sliceHeight));

CHECK(meta->findInt32(kKeyColorFormat, &colorFormat));

format->setInt32("width", width);

format->setInt32("height", height);

format->setInt32("stride", stride);

format->setInt32("slice-height", sliceHeight);

format->setInt32("color-format", colorFormat);

} else {

// HFR takes this branch

format->setInt32("width", mVideoWidth);

format->setInt32("height", mVideoHeight);

format->setInt32("stride", mVideoWidth);

format->setInt32("slice-height", mVideoHeight);

format->setInt32("color-format", OMX_COLOR_FormatAndroidOpaque);

// set up time lapse/slow motion for surface source

if (mCaptureFpsEnable) {

if (!(mCaptureFps > 0.)) {

ALOGE("Invalid mCaptureFps value: %lf", mCaptureFps);

return BAD_VALUE;

}

format->setDouble("time-lapse-fps", mCaptureFps);

}

}

setupCustomVideoEncoderParams(cameraSource, format);

format->setInt32("bitrate", mVideoBitRate);

format->setInt32("frame-rate", mFrameRate);

format->setInt32("i-frame-interval", mIFramesIntervalSec);

if (mVideoTimeScale > 0) {

format->setInt32("time-scale", mVideoTimeScale);

}

if (mVideoEncoderProfile != -1) {

format->setInt32("profile", mVideoEncoderProfile);

}

if (mVideoEncoderLevel != -1) {

format->setInt32("level", mVideoEncoderLevel);

}

uint32_t tsLayers = 1;

bool preferBFrames = true; // we like B-frames as it produces better quality per bitrate

format->setInt32("priority", 0 /* realtime */);

float maxPlaybackFps = mFrameRate; // assume video is only played back at normal speed

if (mCaptureFpsEnable) {

format->setFloat("operating-rate", mCaptureFps);

// enable layering for all time lapse and high frame rate recordings

if (mFrameRate / mCaptureFps >= 1.9) { // time lapse

preferBFrames = false;

tsLayers = 2; // use at least two layers as resulting video will likely be sped up

} else if (mCaptureFps > maxPlaybackFps) { // slow-mo

// HFR takes this branch

format->setInt32("high-frame-rate", 1);

maxPlaybackFps = mCaptureFps; // assume video will be played back at full capture speed

preferBFrames = false;

}

}

for (uint32_t tryLayers = 1; tryLayers <= kMaxNumVideoTemporalLayers; ++tryLayers) {

if (tryLayers > tsLayers) {

tsLayers = tryLayers;

}

// keep going until the base layer fps falls below the typical display refresh rate

float baseLayerFps = maxPlaybackFps / (1 << (tryLayers - 1));

if (baseLayerFps < kMinTypicalDisplayRefreshingRate / 0.9) {

break;

}

}

// mIFramesIntervalSec == 0 means all Intra frame, can't support P/B layers

if (tsLayers > 1 && mIFramesIntervalSec != 0) {

uint32_t bLayers = std::min(2u, tsLayers - 1); // use up-to 2 B-layers

uint32_t pLayers = tsLayers - bLayers;

format->setString(

"ts-schema", AStringPrintf("android.generic.%u+%u", pLayers, bLayers));

// TODO: some encoders do not support B-frames with temporal layering, and we have a

// different preference based on use-case. We could move this into camera profiles.

format->setInt32("android._prefer-b-frames", preferBFrames);

}

if (mMetaDataStoredInVideoBuffers != kMetadataBufferTypeInvalid) {

format->setInt32("android._input-metadata-buffer-type", mMetaDataStoredInVideoBuffers);

}

if (mOutputFormat == OUTPUT_FORMAT_MPEG_4) {

format->setInt32("mpeg4-writer", 1);

format->setInt32("nal-length", 4);

}

uint32_t flags = 0;

if (cameraSource == NULL) {

// HFR takes this branch

flags |= MediaCodecSource::FLAG_USE_SURFACE_INPUT;

} else {

// require dataspace setup even if not using surface input

format->setInt32("android._using-recorder", 1);

}

// Create the MediaCodecSource object, encoder

sp<MediaCodecSource> encoder = MediaCodecSource::Create(

mLooper, format, cameraSource, mPersistentSurface, flags);

if (encoder == NULL) {

ALOGE("Failed to create video encoder");

// When the encoder fails to be created, we need

// release the camera source due to the camera's lock

// and unlock mechanism.

if (cameraSource != NULL) {

cameraSource->stop();

}

return UNKNOWN_ERROR;

}

// For HFR, cameraSource is NULL, so mGraphicBufferProducer is obtained through encoder->getGraphicBufferProducer()

if (cameraSource == NULL) {

mGraphicBufferProducer = encoder->getGraphicBufferProducer();

}

*source = encoder;

return OK;

}
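The temporal layer selection in setupVideoEncoder is easier to follow with concrete numbers. The sketch below lifts only that arithmetic into a standalone function. `LayerPlan` and `planTemporalLayers` are hypothetical names, and the two constants are assumed values for illustration (8 layers and 30 fps); check the definitions in your own tree before relying on them.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <string>

// Assumed values for illustration only; verify against StagefrightRecorder.cpp.
static const uint32_t kMaxNumVideoTemporalLayers = 8;
static const float kMinTypicalDisplayRefreshingRate = 30.f;

struct LayerPlan {
    uint32_t tsLayers;    // number of temporal layers chosen
    bool highFrameRate;   // true when the slow-motion (HFR) branch is taken
    std::string schema;   // empty when no "ts-schema" would be set
};

// Mirrors the layer-selection arithmetic of setupVideoEncoder.
LayerPlan planTemporalLayers(int32_t frameRate, bool captureFpsEnable,
                             double captureFps, int32_t iFramesIntervalSec) {
    uint32_t tsLayers = 1;
    bool highFrameRate = false;
    float maxPlaybackFps = static_cast<float>(frameRate);

    if (captureFpsEnable) {
        if (frameRate / captureFps >= 1.9) {        // time lapse
            tsLayers = 2;
        } else if (captureFps > maxPlaybackFps) {   // slow motion (HFR)
            highFrameRate = true;
            maxPlaybackFps = static_cast<float>(captureFps);
        }
    }

    // Add layers until the base layer rate drops below the display rate.
    for (uint32_t tryLayers = 1; tryLayers <= kMaxNumVideoTemporalLayers; ++tryLayers) {
        if (tryLayers > tsLayers) {
            tsLayers = tryLayers;
        }
        float baseLayerFps = maxPlaybackFps / (1 << (tryLayers - 1));
        if (baseLayerFps < kMinTypicalDisplayRefreshingRate / 0.9) {
            break;
        }
    }

    std::string schema;
    if (tsLayers > 1 && iFramesIntervalSec != 0) {
        uint32_t bLayers = std::min(2u, tsLayers - 1);  // at most 2 B-layers
        uint32_t pLayers = tsLayers - bLayers;
        schema = "android.generic." + std::to_string(pLayers) + "+" +
                 std::to_string(bLayers);
    }
    return {tsLayers, highFrameRate, schema};
}
```

Under those assumed constants, a 120 fps capture encoded at a 30 fps frame rate halves 120 twice before the base layer falls under the threshold, giving three layers and the schema `android.generic.1+2`. A plain 30 fps recording stays at one layer and sets no schema.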

Let's analyze MediaCodecSource::Create(..):

//frameworks\av\media\libstagefright\MediaCodecSource.cpp

sp<MediaCodecSource> MediaCodecSource::Create(

const sp<ALooper> &looper,

const sp<AMessage> &format,

const sp<MediaSource> &source,

const sp<PersistentSurface> &persistentSurface,

uint32_t flags) {

// Create the MediaCodecSource

sp<MediaCodecSource> mediaSource = new MediaCodecSource(

looper, format, source, persistentSurface, flags);

// Read the HFR parameters and store them on mediaSource

AVUtils::get()->getHFRParams(&mediaSource->mIsHFR, &mediaSource->mBatchSize, format);

// Initialize mediaSource; the surface that MediaRecorder hands out is set up inside this call, analyzed in detail later

if (mediaSource->init() == OK) {

return mediaSource;

}

return NULL;

}

Let's analyze encoder->getGraphicBufferProducer():

//frameworks\av\media\libstagefright\MediaCodecSource.cpp

sp<IGraphicBufferProducer> MediaCodecSource::getGraphicBufferProducer() {

CHECK(mFlags & FLAG_USE_SURFACE_INPUT);

return mGraphicBufferProducer;

}

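At this point we can see that the surface returned by mMediaRecorder.getSurface() is actually a Surface object wrapping mGraphicBufferProducer, a member variable of the MediaCodecSource class.

Next, we look at how that member, mGraphicBufferProducer, is initialized. This happens in mediaSource->init(), which is called from MediaCodecSource::Create(...). The code is as follows: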

//frameworks\av\media\libstagefright\MediaCodecSource.cpp

status_t MediaCodecSource::init() {

// Initialize the encoder

status_t err = initEncoder();

if (err != OK) {

releaseEncoder();

}

return err;

}

Next, initEncoder:

//frameworks\av\media\libstagefright\MediaCodecSource.cpp

status_t MediaCodecSource::initEncoder() {

mReflector = new AHandlerReflector<MediaCodecSource>(this);

mLooper->registerHandler(mReflector);

// Start the message loop thread; it comes up again in the AMessage analysis later

mCodecLooper = new ALooper;

mCodecLooper->setName("codec_looper");

mCodecLooper->start();

if (mFlags & FLAG_USE_SURFACE_INPUT) {

mOutputFormat->setInt32("create-input-buffers-suspended", 1);

}

AString outputMIME;

CHECK(mOutputFormat->findString("mime", &outputMIME));

Vector<AString> matchingCodecs;

if (AVUtils::get()->useQCHWEncoder(mOutputFormat, &matchingCodecs)) {

;

} else {

// Find the codecs that match the MIME type

MediaCodecList::findMatchingCodecs(

outputMIME.c_str(), true /* encoder */,

((mFlags & FLAG_PREFER_SOFTWARE_CODEC) ? MediaCodecList::kPreferSoftwareCodecs : 0),

&matchingCodecs);

}

status_t err = NO_INIT;

for (size_t ix = 0; ix < matchingCodecs.size(); ++ix) {

// Create the MediaCodec

mEncoder = MediaCodec::CreateByComponentName(

mCodecLooper, matchingCodecs[ix]);

if (mEncoder == NULL) {

continue;

}

ALOGV("output format is '%s'", mOutputFormat->debugString(0).c_str());

mEncoderActivityNotify = new AMessage(kWhatEncoderActivity, mReflector);

mEncoder->setCallback(mEncoderActivityNotify);

// Configure the MediaCodec

err = mEncoder->configure(

mOutputFormat,

NULL /* nativeWindow */,

NULL /* crypto */,

MediaCodec::CONFIGURE_FLAG_ENCODE);

if (err == OK) {

break;

}

mEncoder->release();

mEncoder = NULL;

}

if (err != OK) {

return err;

}

mEncoder->getOutputFormat(&mOutputFormat);

sp<MetaData> meta = new MetaData;

convertMessageToMetaData(mOutputFormat, meta);

mMeta.lock().set(meta);

if (mFlags & FLAG_USE_SURFACE_INPUT) {

CHECK(mIsVideo);

if (mPersistentSurface != NULL) {

// When using persistent surface, we are only interested in the

// consumer, but have to use PersistentSurface as a wrapper to

// pass consumer over messages (similar to BufferProducerWrapper)

err = mEncoder->setInputSurface(mPersistentSurface);

} else {

// Finally, this is where the surface gets created

err = mEncoder->createInputSurface(&mGraphicBufferProducer);

}

if (err != OK) {

return err;

}

}

sp<AMessage> inputFormat;

int32_t usingSwReadOften;

mSetEncoderFormat = false;

if (mEncoder->getInputFormat(&inputFormat) == OK) {

mSetEncoderFormat = true;

if (inputFormat->findInt32("using-sw-read-often", &usingSwReadOften)

&& usingSwReadOften) {

// this is a SW encoder; signal source to allocate SW readable buffers

mEncoderFormat = kDefaultSwVideoEncoderFormat;

} else {

mEncoderFormat = kDefaultHwVideoEncoderFormat;

}

if (!inputFormat->findInt32("android._dataspace", &mEncoderDataSpace)) {

mEncoderDataSpace = kDefaultVideoEncoderDataSpace;

}

ALOGV("setting dataspace %#x, format %#x", mEncoderDataSpace, mEncoderFormat);

}

// Start the MediaCodec

err = mEncoder->start();

if (err != OK) {

return err;

}

{

Mutexed<Output>::Locked output(mOutput);

output->mEncoderReachedEOS = false;

output->mErrorCode = OK;

}

return OK;

}

We continue with the creation of the surface:

//frameworks\av\media\libstagefright\MediaCodec.cpp

status_t MediaCodec::createInputSurface(

sp<IGraphicBufferProducer>* bufferProducer) {

sp<AMessage> msg = new AMessage(kWhatCreateInputSurface, this);

sp<AMessage> response;

// The surface is created by posting the kWhatCreateInputSurface message

// Ugh, so the AMessage send/receive path needs studying too

status_t err = PostAndAwaitResponse(msg, &response);

if (err == NO_ERROR) {

// unwrap the sp

...

}

Next, let's look at the AMessage mechanism.

Startup

//frameworks\av\media\libstagefright\MediaCodecSource.cpp

status_t MediaCodecSource::initEncoder() {

mReflector = new AHandlerReflector<MediaCodecSource>(this);

mLooper->registerHandler(mReflector);

// Create the ALooper thread

mCodecLooper = new ALooper;

mCodecLooper->setName("codec_looper");

mCodecLooper->start();

...

status_t err = NO_INIT;

for (size_t ix = 0; ix < matchingCodecs.size(); ++ix) {

// Pass the mCodecLooper created above into the MediaCodec being created

mEncoder = MediaCodec::CreateByComponentName(

mCodecLooper, matchingCodecs[ix]);

....

return OK;

}

// static

sp<MediaCodec> MediaCodec::CreateByComponentName(

const sp<ALooper> &looper, const AString &name, status_t *err, pid_t pid, uid_t uid) {

VTRACE_CALL();

// The mCodecLooper created above is handed to the new MediaCodec

sp<MediaCodec> codec = new MediaCodec(looper, pid, uid);

// Enter MediaCodec's init method

const status_t ret = codec->init(name, false /* nameIsType */, false /* encoder */);

if (err != NULL) {

*err = ret;

}

return ret == OK ? codec : NULL; // NULL deallocates codec.

}

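To make the analysis easier, start from the MediaCodec class diagram:

[Figure: MediaCodec class diagram, showing that MediaCodec inherits from AHandler]

Now into init: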

status_t MediaCodec::init(const AString &name, bool nameIsType, bool encoder) {

...

// What gets created here is the ACodec

mCodec = GetCodecBase(name, nameIsType);

if (mIsVideo) {

// video codec needs dedicated looper

if (mCodecLooper == NULL) {

mCodecLooper = new ALooper;

mCodecLooper->setName("CodecLooper");

mCodecLooper->start(false, false, ANDROID_PRIORITY_AUDIO);

}

mCodecLooper->registerHandler(mCodec);

} else {

// Register the handler on mLooper; registering assigns the looper and a handler ID to the handler

mLooper->registerHandler(mCodec);

}

mLooper->registerHandler(this);

...

return err;

}

Next, ALooper::registerHandler:

//frameworks\av\media\libstagefright\foundation\ALooper.cpp

ALooper::handler_id ALooper::registerHandler(const sp<AHandler> &handler) {

return gLooperRoster.registerHandler(this, handler);

}

It forwards to ALooperRoster::registerHandler:

//frameworks\av\media\libstagefright\foundation\ALooperRoster.cpp

ALooper::handler_id ALooperRoster::registerHandler(

const sp<ALooper> &looper, const sp<AHandler> &handler) {

Mutex::Autolock autoLock(mLock);

if (handler->id() != 0) {

CHECK(!"A handler must only be registered once.");

return INVALID_OPERATION;

}

HandlerInfo info;

info.mLooper = looper;

info.mHandler = handler;

ALooper::handler_id handlerID = mNextHandlerID++;

mHandlers.add(handlerID, info);

handler->setID(handlerID, looper);

return handlerID;

}

Next, handler->setID():

//frameworks\av\media\libstagefright\foundation\include\media\stagefright\foundation\AHandler.h

inline void setID(ALooper::handler_id id, const wp<ALooper> &looper) {

mID = id;

mLooper = looper;

}
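The registration step boils down to a table that hands out increasing IDs and writes the ID and the looper back into the handler. Here is a minimal sketch of that bookkeeping using standard smart pointers in place of `sp`/`wp`. `ToyLooper`, `ToyHandler`, and `ToyRoster` are hypothetical names, and a repeated registration returns 0 here, where the excerpt hits a CHECK failure instead.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <memory>

class ToyLooper {};  // placeholder; only its identity matters in this sketch

class ToyHandler {
public:
    int32_t id() const { return mID; }
    std::weak_ptr<ToyLooper> looper() const { return mLooper; }

    // Called by the roster; the handler keeps only a weak link to its looper.
    void setID(int32_t id, const std::weak_ptr<ToyLooper>& looper) {
        mID = id;
        mLooper = looper;
    }

private:
    int32_t mID = 0;  // 0 means "not registered yet"
    std::weak_ptr<ToyLooper> mLooper;
};

class ToyRoster {
public:
    // Returns the new handler ID, or 0 if the handler was already registered.
    int32_t registerHandler(const std::shared_ptr<ToyLooper>& looper,
                            const std::shared_ptr<ToyHandler>& handler) {
        if (handler->id() != 0) {
            return 0;  // a handler may only be registered once
        }
        int32_t handlerID = mNextHandlerID++;
        mHandlers[handlerID] = Entry{looper, handler};
        handler->setID(handlerID, looper);
        return handlerID;
    }

private:
    struct Entry {
        std::weak_ptr<ToyLooper> looper;
        std::weak_ptr<ToyHandler> handler;
    };
    std::map<int32_t, Entry> mHandlers;
    int32_t mNextHandlerID = 1;
};
```

Because the handler stores its looper after registration, any message that targets the handler can later find the right queue without consulting the roster again.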

Next, how AMessage is used.

Creating an AMessage:

//frameworks\av\media\libstagefright\foundation\AMessage.cpp

AMessage::AMessage(uint32_t what, const sp<const AHandler> &handler)

: mWhat(what),

mNumItems(0) {

setTarget(handler);

}

Next, setTarget:

void AMessage::setTarget(const sp<const AHandler> &handler) {

if (handler == NULL) {

mTarget = 0;

mHandler.clear();

mLooper.clear();

} else {

mTarget = handler->id();

mHandler = handler->getHandler();

mLooper = handler->getLooper();

}

}

So the message ends up taking its mLooper and target id from the handler.

Next, sending a message:

status_t AMessage::postAndAwaitResponse(sp<AMessage> *response) {

sp<ALooper> looper = mLooper.promote();

if (looper == NULL) {

ALOGW("failed to post message as target looper for handler %d is gone.", mTarget);

return -ENOENT;

}

sp<AReplyToken> token = looper->createReplyToken();

if (token == NULL) {

ALOGE("failed to create reply token");

return -ENOMEM;

}

setObject("replyID", token);

// Post the message to the ALooper

looper->post(this, 0 /* delayUs */);

// Wait for the response from the ALooper

return looper->awaitResponse(token, response);

}

void ALooper::post(const sp<AMessage> &msg, int64_t delayUs) {

Mutex::Autolock autoLock(mLock);

int64_t whenUs;

if (delayUs > 0) {

whenUs = GetNowUs() + delayUs;

} else {

whenUs = GetNowUs();

}

List<Event>::iterator it = mEventQueue.begin();

while (it != mEventQueue.end() && (*it).mWhenUs <= whenUs) {

++it;

}

// Build an Event from msg

Event event;

event.mWhenUs = whenUs;

event.mMessage = msg;

if (it == mEventQueue.begin()) {

mQueueChangedCondition.signal();

}

// Insert it into the event queue

mEventQueue.insert(it, event);

}

//frameworks\av\media\libstagefright\foundation\ALooper.cpp

bool ALooper::loop() {

Event event;

{

...

// Take the next message off the queue

event = *mEventQueue.begin();

mEventQueue.erase(mEventQueue.begin());

}

// Deliver the message

event.mMessage->deliver();

// NOTE: It's important to note that at this point our "ALooper" object

// may no longer exist (its final reference may have gone away while

// delivering the message). We have made sure, however, that loop()

// won't be called again.

return true;

}

Next, deliver:

//frameworks\av\media\libstagefright\foundation\AMessage.cpp

void AMessage::deliver() {

sp<AHandler> handler = mHandler.promote();

if (handler == NULL) {

ALOGW("failed to deliver message as target handler %d is gone.", mTarget);

return;

}

handler->deliverMessage(this);

}

Next, deliverMessage:

//frameworks\av\media\libstagefright\foundation\AHandler.cpp

void AHandler::deliverMessage(const sp<AMessage> &msg) {

onMessageReceived(msg);

...

}
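The whole chain (post inserts into a time-ordered queue, the loop pops the earliest event, the message is delivered to its target handler if that handler is still alive) can be reproduced in a few dozen lines. This is a single-threaded sketch under stated assumptions: `ToyMessage`, `ToyHandler`, `ToyLooper`, and `RecordingHandler` are hypothetical names, the caller supplies `whenUs` directly instead of reading a clock, and `loopOnce()` is called by hand where the real ALooper runs `loop()` on its own thread and waits on a condition variable.

```cpp
#include <cassert>
#include <cstdint>
#include <list>
#include <memory>
#include <vector>

class ToyHandler;

// A message knows its target only weakly, like mHandler in AMessage.
struct ToyMessage {
    uint32_t what;
    std::weak_ptr<ToyHandler> target;
};

class ToyHandler {
public:
    virtual ~ToyHandler() = default;
    virtual void onMessageReceived(const ToyMessage& msg) = 0;
};

class ToyLooper {
public:
    // Queue msg for time whenUs, keeping the queue sorted by time.
    // Events with equal times keep their posting order.
    void post(const ToyMessage& msg, int64_t whenUs) {
        auto it = mQueue.begin();
        while (it != mQueue.end() && it->whenUs <= whenUs) {
            ++it;
        }
        mQueue.insert(it, Event{whenUs, msg});
    }

    // Deliver the earliest event. Returns false once the queue is empty.
    bool loopOnce() {
        if (mQueue.empty()) {
            return false;
        }
        Event event = mQueue.front();
        mQueue.pop_front();
        // Same idea as AMessage::deliver: promote the weak reference, and
        // drop the message silently if the handler is already gone.
        if (auto handler = event.msg.target.lock()) {
            handler->onMessageReceived(event.msg);
        }
        return true;
    }

private:
    struct Event {
        int64_t whenUs;
        ToyMessage msg;
    };
    std::list<Event> mQueue;
};

// Records the order in which messages arrive.
class RecordingHandler : public ToyHandler {
public:
    void onMessageReceived(const ToyMessage& msg) override {
        seen.push_back(msg.what);
    }
    std::vector<uint32_t> seen;
};
```

Posting messages 1, 2, 3 with times 300, 100, 100 and then draining the queue delivers them as 2, 3, 1. That ordering rule is what lets the real looper support delayed posts with a single list.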

Now into MediaCodec:

//frameworks\av\media\libstagefright\MediaCodec.cpp

void MediaCodec::onMessageReceived(const sp<AMessage> &msg) {

VTRACE_METHOD();

switch (msg->what()) {

...

case kWhatCreateInputSurface:

case kWhatSetInputSurface:

{

.....

mReplyID = replyID;

if (msg->what() == kWhatCreateInputSurface) {

// Ask the ACodec to create the surface by sending it a message

mCodec->initiateCreateInputSurface();

}

break;

}

....

default:

TRESPASS();

}

}

This finally lands in ACodec.cpp:

//frameworks\av\media\libstagefright\ACodec.cpp

bool ACodec::LoadedState::onMessageReceived(const sp<AMessage> &msg) {

bool handled = false;

switch (msg->what()) {

....

case ACodec::kWhatCreateInputSurface:

{

onCreateInputSurface(msg);

handled = true;

break;

}

...

return handled;

}

Now into the surface creation flow:

//frameworks\av\media\libstagefright\ACodec.cpp

void ACodec::LoadedState::onCreateInputSurface(

const sp<AMessage> & /* msg */) {

ALOGV("onCreateInputSurface");

// Create the surface

sp<IGraphicBufferProducer> bufferProducer;

// mOMX is of type sp<IOMX>; its creation is analyzed next

status_t err = mCodec->mOMX->createInputSurface(

&bufferProducer, &mCodec->mGraphicBufferSource);

...

}

How mOMX is created:

//frameworks\av\media\libstagefright\ACodec.cpp

bool ACodec::UninitializedState::onAllocateComponent(const sp<AMessage> &msg) {

ALOGV("onAllocateComponent");

....

sp<CodecObserver> observer = new CodecObserver;

sp<IOMX> omx;

sp<IOMXNode> omxNode;

status_t err = NAME_NOT_FOUND;

for (size_t matchIndex = 0; matchIndex < matchingCodecs.size();

++matchIndex) {

componentName = matchingCodecs[matchIndex];

OMXClient client;

bool trebleFlag;

// Connect to obtain the IOMX object

if (client.connect(owners[matchIndex].c_str(), &trebleFlag) != OK) {

mCodec->signalError(OMX_ErrorUndefined, NO_INIT);

return false;

}

// omx is obtained through the client

omx = client.interface();

...

mCodec->mOMX = omx;

mCodec->mOMXNode = omxNode;

mCodec->mCallback->onComponentAllocated(mCodec->mComponentName.c_str());

mCodec->changeState(mCodec->mLoadedState);

return true;

}

Next, connect, which creates the IOMX object:

//frameworks\av\media\libstagefright\OMXClient.cpp

status_t OMXClient::connect(const char* name, bool* trebleFlag) {

if (property_get_bool("persist.media.treble_omx", true)) {

if (trebleFlag != nullptr) {

*trebleFlag = true;

}

return connectTreble(name);

}

if (trebleFlag != nullptr) {

*trebleFlag = false;

}

// This is the function entered in this case

return connectLegacy();

}

status_t OMXClient::connectLegacy() {

// Get the service proxy object, codecservice

sp<IServiceManager> sm = defaultServiceManager();

sp<IBinder> codecbinder = sm->getService(String16("media.codec"));

sp<IMediaCodecService> codecservice = interface_cast<IMediaCodecService>(codecbinder);

if (codecservice.get() == NULL) {

ALOGE("Cannot obtain IMediaCodecService");

return NO_INIT;

}

// Obtain the mOMX object through codecservice

mOMX = codecservice->getOMX();

if (mOMX.get() == NULL) {

ALOGE("Cannot obtain mediacodec IOMX");

return NO_INIT;

}

return OK;

}

Now into the media.codec service.

Service implementation code:

rc file:

//frameworks\av\services\mediacodec\android.hardware.media.omx@1.0-service.rc

service mediacodec /vendor/bin/hw/android.hardware.media.omx@1.0-service

class main

user mediacodec

group camera drmrpc mediadrm audio

ioprio rt 4

writepid /dev/cpuset/foreground/tasks

Process entry point:

//frameworks\av\services\mediacodec\main_codecservice.cpp

int main(int argc __unused, char** argv)

{

LOG(INFO) << "mediacodecservice starting";

....

if (treble) {

...

} else {

MediaCodecService::instantiate();

LOG(INFO) << "Non-Treble OMX service created.";

}

ProcessState::self()->startThreadPool();

IPCThreadState::self()->joinThreadPool();

}

Implementation of getOMX:

//frameworks\av\services\mediacodec\MediaCodecService.cpp

sp<IOMX> MediaCodecService::getOMX() {

Mutex::Autolock autoLock(mOMXLock);

if (mOMX.get() == NULL) {

// Create the OMX object

mOMX = new OMX();

}

// Return the OMX object

return mOMX;

}
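getOMX is a lazily created, mutex-guarded singleton: the first caller pays for construction and every later caller gets the same object. A minimal sketch of the same shape with standard library types follows. `ToyOmx` and `ToyCodecService` are hypothetical names, and the creation counter exists only to make the behaviour observable.

```cpp
#include <cassert>
#include <memory>
#include <mutex>

// Hypothetical stand-in for the OMX object.
struct ToyOmx {
    int createdOrdinal;  // 1 for the first instance ever created
};

class ToyCodecService {
public:
    std::shared_ptr<ToyOmx> getOMX() {
        // The lock makes the check-then-create sequence atomic across threads.
        std::lock_guard<std::mutex> lock(mOMXLock);
        if (!mOMX) {
            mOMX = std::make_shared<ToyOmx>(ToyOmx{++mCreations});
        }
        return mOMX;
    }

    int creations() const { return mCreations; }

private:
    std::mutex mOMXLock;
    std::shared_ptr<ToyOmx> mOMX;
    int mCreations = 0;
};
```

Calling `getOMX()` twice on one service returns the same pointer and leaves the creation count at 1, which matches the excerpt: every codec in the process shares one OMX instance.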

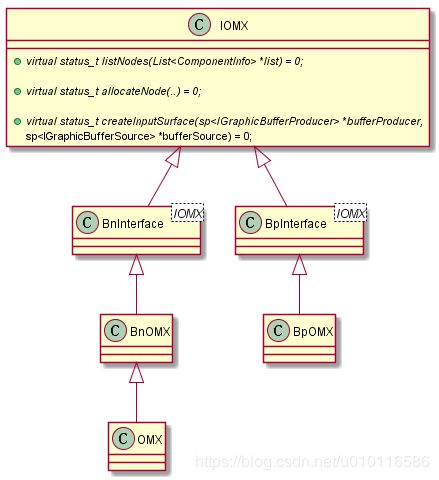

OMX类图如下:

Back to the surface. The previous step covered how OMX is created; now we enter OMX::createInputSurface, which creates the surface:

//frameworks\av\media\libstagefright\omx\OMX.cpp

status_t OMX::createInputSurface(

sp<IGraphicBufferProducer> *bufferProducer,

sp<IGraphicBufferSource> *bufferSource) {

if (bufferProducer == NULL || bufferSource == NULL) {

ALOGE("b/25884056");

return BAD_VALUE;

}

// Create the GraphicBufferSource object; the surface is created inside its constructor

sp<GraphicBufferSource> graphicBufferSource = new GraphicBufferSource();

status_t err = graphicBufferSource->initCheck();

if (err != OK) {

ALOGE("Failed to create persistent input surface: %s (%d)",

strerror(-err), err);

return err;

}

*bufferProducer = graphicBufferSource->getIGraphicBufferProducer();

*bufferSource = new BWGraphicBufferSource(graphicBufferSource);

return OK;

}

//frameworks\av\media\libstagefright\omx\GraphicBufferSource.cpp

GraphicBufferSource::GraphicBufferSource() {

ALOGV("GraphicBufferSource");

String8 name("GraphicBufferSource");

// Create the buffer queue behind the surface; after all that digging, this is finally where the surface originates

BufferQueue::createBufferQueue(&mProducer, &mConsumer);

mConsumer->setConsumerName(name);

// Note that we can't create an sp<...>(this) in a ctor that will not keep a

// reference once the ctor ends, as that would cause the refcount of 'this'

// dropping to 0 at the end of the ctor. Since all we need is a wp<...>

// that's what we create.

wp<BufferQueue::ConsumerListener> listener =

static_cast<BufferQueue::ConsumerListener*>(this);

sp<IConsumerListener> proxy =

new BufferQueue::ProxyConsumerListener(listener);

mInitCheck = mConsumer->consumerConnect(proxy, false);

...

}
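What createBufferQueue hands back is two endpoints over one shared queue: the producer side ends up wrapped in the Surface given to the camera, and the consumer side stays with GraphicBufferSource, which is told about new frames through a listener. The sketch below models only that relationship. `ToyQueueCore`, `ToyProducer`, `ToyConsumer`, and `createToyBufferQueue` are hypothetical names, and plain integers stand in for graphic buffers.

```cpp
#include <cassert>
#include <deque>
#include <functional>
#include <memory>
#include <utility>

// Shared state behind both endpoints (hypothetical).
struct ToyQueueCore {
    std::deque<int> frames;
    std::function<void()> onFrameAvailable;  // set when a consumer connects
};

// Producer endpoint: the side a camera would write frames into.
class ToyProducer {
public:
    explicit ToyProducer(std::shared_ptr<ToyQueueCore> core)
        : mCore(std::move(core)) {}

    void queueBuffer(int frame) {
        mCore->frames.push_back(frame);
        if (mCore->onFrameAvailable) {
            mCore->onFrameAvailable();  // notify the connected consumer
        }
    }

private:
    std::shared_ptr<ToyQueueCore> mCore;
};

// Consumer endpoint: the side the encoder input would read frames from.
class ToyConsumer {
public:
    explicit ToyConsumer(std::shared_ptr<ToyQueueCore> core)
        : mCore(std::move(core)) {}

    void consumerConnect(std::function<void()> listener) {
        mCore->onFrameAvailable = std::move(listener);
    }

    // Returns false when no frame is pending.
    bool acquireBuffer(int* frame) {
        if (mCore->frames.empty()) {
            return false;
        }
        *frame = mCore->frames.front();
        mCore->frames.pop_front();
        return true;
    }

private:
    std::shared_ptr<ToyQueueCore> mCore;
};

// Creates both endpoints over one shared core, using out-parameters in the
// same style as the call in the excerpt.
void createToyBufferQueue(std::shared_ptr<ToyProducer>* producer,
                          std::shared_ptr<ToyConsumer>* consumer) {
    auto core = std::make_shared<ToyQueueCore>();
    *producer = std::make_shared<ToyProducer>(core);
    *consumer = std::make_shared<ToyConsumer>(core);
}
```

Seen this way, the Surface returned by getSurface() is just the write end of a queue whose read end feeds the video encoder, so frames the camera renders into it arrive at the encoder in order.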

This completes the analysis of how MediaRecorder creates its internal surface.