Deploying a Hadoop Cluster on CentOS 7 (Fully Distributed)

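Contents

Test environment

Cluster nodes and processes

Cluster deployment plan

Set up the Linux systems

Configure the Java environment

Install standalone Hadoop

Configure environment variables

Disable the firewall

Edit the hosts file

Configure passwordless SSH login

Edit the Hadoop configuration files

Initialize HDFS

Start Hadoop

Check the server processes

Open the HDFS and YARN web UIs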

Test environment

Linux distribution: CentOS 7, 64-bit

Hadoop version: hadoop-2.7.3

Java version: jdk-8u181-linux-x64

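Cluster nodes and processes

Both HDFS and YARN in Hadoop use a master/slave architecture. The two roles go by several equivalent names:

| Primary role | Subordinate role |
| --- | --- |
| master | slave |
| manager | worker |
| leader | follower |

Role names in a Hadoop cluster:

| Service | Master node | Slave node |
| --- | --- | --- |
| HDFS | NameNode | DataNode |
| YARN | ResourceManager | NodeManager |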

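HDFS

NameNode: the master. In a non-HA deployment such as this one, the cluster has exactly one. It manages the HDFS namespace, maintains the file system metadata, manages replica configuration and replica information (three replicas by default), and handles client read and write requests.

DataNode: the slave (worker) node; a cluster usually runs many of them. It stores data blocks and verifies them, serves client reads and writes, and uses heartbeats to report its running state and the list of all locally stored blocks to the NameNode at regular intervals. At cluster startup, each DataNode also sends the NameNode the list of blocks it stores.

YARN

ResourceManager: the master. A cluster has one active ResourceManager (high availability is supported) and can have many slaves. It handles client requests, starts, manages, and monitors ApplicationMasters, monitors NodeManagers, and allocates and schedules resources.

NodeManager: one per node, usually deployed on the same machine as a DataNode in a one-to-one pairing. It periodically reports the node's resource usage to the ResourceManager, handles job requests from the ResourceManager, provides Containers for jobs, handles requests from ApplicationMasters, and starts and stops Containers.

To make the cluster more resilient, a SecondaryNameNode is also configured.

SecondaryNameNode: periodically merges the NameNode's edit log into the fsimage (checkpointing) and keeps a copy of the result. It is not a hot standby, but if the NameNode server goes down, its most recent checkpoint can be used to help recover the metadata.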

Cluster deployment plan

| Host name | IP address | User | Processes |
| --- | --- | --- | --- |
| node101 | 192.168.33.101 | hadoop | NameNode, ResourceManager |
| node102 | 192.168.33.102 | hadoop | DataNode, NodeManager, SecondaryNameNode |
| node103 | 192.168.33.103 | hadoop | DataNode, NodeManager |
| node104 | 192.168.33.104 | hadoop | DataNode, NodeManager |

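Set up the Linux systems

Deploy four hosts as described in the guide below. The host names and IP addresses follow the cluster deployment plan above.

Installing Linux in a VMware virtual machine

Once configured, the IP address and host name of each host are as follows: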

[root@node101 ~]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:81:55:83 brd ff:ff:ff:ff:ff:ff

inet 192.168.33.101/24 brd 192.168.33.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe81:5583/64 scope link

valid_lft forever preferred_lft forever

[root@node102 ~]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:c2:52:70 brd ff:ff:ff:ff:ff:ff

inet 192.168.33.102/24 brd 192.168.33.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fec2:5270/64 scope link

valid_lft forever preferred_lft forever

[root@node103 ~]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:9b:9e:e2 brd ff:ff:ff:ff:ff:ff

inet 192.168.33.103/24 brd 192.168.33.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe9b:9ee2/64 scope link

valid_lft forever preferred_lft forever

[root@node104 ~]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:76:44:a4 brd ff:ff:ff:ff:ff:ff

inet 192.168.33.104/24 brd 192.168.33.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe76:44a4/64 scope link

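valid_lft forever preferred_lft forever

After configuration, test network connectivity on every host by running the two commands below. Press Ctrl+C to stop each one. The test output is omitted here.

ping 192.168.33.1

ping www.baidu.com

Configure the Java environment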

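Configure Java on the systems installed above. Only the key steps and commands are shown here; for the detailed procedure, see: Installing Java on Linux

For convenience, this article installs Java from the rpm package. /etc/profile is left untouched for now and is edited later, together with the Hadoop settings.

Run steps 1-3 as root.

1. Upload the package jdk-8u181-linux-x64.rpm to /usr/local.

2. Install the rpm package: set its permissions first, then run rpm.

chmod 755 /usr/local/jdk-8u181-linux-x64.rpm

rpm -ivh /usr/local/jdk-8u181-linux-x64.rpm

3. Verify the installation.

java -version

Install standalone Hadoop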

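For the detailed steps, see: Deploying Hadoop on CentOS 7 (Standalone). Only a brief outline is given here.

Run steps 1-5 as root.

1. Upload the archive hadoop-2.7.3.tar.gz to /usr/local.

2. Change to /usr/local, extract the archive there, and rename the extracted directory. (tar extracts into the current directory, so change directory first.)

cd /usr/local

tar -zxvf /usr/local/hadoop-2.7.3.tar.gz

mv hadoop-2.7.3 hadoop

3. Create the hadoop user and group, and set the hadoop user's password.

useradd hadoop

passwd hadoop

4. Grant the hadoop user sudo privileges.

vi /etc/sudoers

Add hadoop ALL=(ALL) ALL on the line below the root entry, then save and quit (use wq! to force the write).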

## Next comes the main part: which users can run what software on

## which machines (the sudoers file can be shared between multiple

## systems).

## Syntax:

##

## user MACHINE=COMMANDS

##

## The COMMANDS section may have other options added to it.

##

## Allow root to run any commands anywhere

root ALL=(ALL) ALL

hadoop ALL=(ALL) ALL

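5. Set the owner:group of the hadoop directory to hadoop. /usr and /usr/local are owned by root:root with default permissions 755, so other users (including hadoop) cannot write to them. One option, shown commented out below, is to give other users full access (7) on those two directories. This article instead adds the hadoop user to the root group and sets the permissions of both directories to 771.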

###############################

#chown -R hadoop:hadoop hadoop

#chmod 757 /usr

#chmod 757 /usr/local

###############################

Use the following commands instead:

gpasswd -a hadoop root

chmod 771 /usr

chmod 771 /usr/local

chown -R hadoop:hadoop /usr/local/hadoop

Configure environment variables

Run steps 1-3 as root.

1. Edit /etc/profile.

vi /etc/profile

2. Append the following lines to the end of the file.

export JAVA_HOME=/usr/java/jdk1.8.0_181-amd64

export HADOOP_HOME=/usr/local/hadoop

export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export JRE_HOME=$JAVA_HOME/jre

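3. Save and quit, then reload the file so that the variables take effect immediately.

source /etc/profile

Disable the firewall

CentOS 7 uses firewalld as its firewall, unlike CentOS 6.

Run the following three steps as root.

Check the firewall status:

systemctl status firewalld

Stop the firewall:

systemctl stop firewalld

Prevent the firewall from starting at boot:

systemctl disable firewalld

For more details, see: Deploying Hadoop on CentOS 7 (Pseudo-Distributed)

Edit the hosts file

Edit /etc/hosts on every host, as root.

vi /etc/hosts

Append the following to the end of the file: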

192.168.33.101 node101

192.168.33.102 node102

192.168.33.103 node103

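192.168.33.104 node104

Note: the original setup separated each IP address from its host name with a Tab. /etc/hosts accepts any run of spaces or tabs as the separator, so either works.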
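The same entries can be appended with a small shell helper rather than by hand. The following is a minimal sketch, not part of the original procedure: the function name `add_cluster_hosts` and its optional file argument are made up for illustration, and it assumes the four nodes and addresses from the deployment plan.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append the cluster host mappings to a hosts file,
# skipping any line that is already present so it is safe to re-run.
# The optional first argument lets you try it on a scratch file first.
add_cluster_hosts() {
    local hosts_file="${1:-/etc/hosts}"
    local i entry
    for i in 101 102 103 104; do
        entry="192.168.33.${i} node${i}"
        # -x: match the whole line, -F: treat the entry as a fixed string
        if ! grep -qxF "$entry" "$hosts_file" 2>/dev/null; then
            printf '%s\n' "$entry" >> "$hosts_file"
        fi
    done
}
```

Calling `add_cluster_hosts` with no argument as root edits /etc/hosts on the current host; calling `add_cluster_hosts /tmp/hosts.test` writes to a scratch file instead, which is a harmless way to inspect the result first.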

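Configure passwordless SSH login

Perform all steps as the hadoop user. For the method, see:

Configuring passwordless SSH login on Linux (multiple hosts)

# Run ssh-keygen -t rsa on every host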

[hadoop@node101 ~]$ ssh-keygen -t rsa

[hadoop@node102 ~]$ ssh-keygen -t rsa

[hadoop@node103 ~]$ ssh-keygen -t rsa

[hadoop@node104 ~]$ ssh-keygen -t rsa

# Generate authorized_keys

[hadoop@node101 ~]$ ssh-copy-id localhost

# Copy the files from node101 to the other hosts with scp, overwriting their key files (all hosts then share node101's key pair)

[hadoop@node101 ~]$ scp -r ~/.ssh/* 192.168.33.102:~/.ssh

The authenticity of host '192.168.33.102 (192.168.33.102)' can't be established.

ECDSA key fingerprint is SHA256:mLD6JLZCaaM/4LNX5yw9zIpL0aJaiPLdcKau6gPJEzI.

ECDSA key fingerprint is MD5:b5:ff:b7:d9:f7:76:77:57:df:a5:89:e9:63:63:d8:71.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.33.102' (ECDSA) to the list of known hosts.

hadoop@192.168.33.102's password:

scp: /home/hadoop/.ssh: No such file or directory

[hadoop@node101 ~]$ scp -r ~/.ssh/* 192.168.33.102:~/.ssh

hadoop@192.168.33.102's password:

authorized_keys 100% 396 326.1KB/s 00:00

id_rsa 100% 1675 1.4MB/s 00:00

id_rsa.pub 100% 396 508.2KB/s 00:00

known_hosts 100% 347 594.5KB/s 00:00

[hadoop@node101 ~]$ scp -r ~/.ssh/* 192.168.33.103:~/.ssh

The authenticity of host '192.168.33.103 (192.168.33.103)' can't be established.

ECDSA key fingerprint is SHA256:8jtDe6bMz1Ej/L00sRkVp2P9GEUUBGBYHChtbDQIVkE.

ECDSA key fingerprint is MD5:97:9f:d6:5e:c1:88:7f:b6:f2:df:3b:d7:cb:27:c9:f3.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.33.103' (ECDSA) to the list of known hosts.

hadoop@192.168.33.103's password:

authorized_keys 100% 396 415.9KB/s 00:00

id_rsa 100% 1675 1.6MB/s 00:00

id_rsa.pub 100% 396 514.7KB/s 00:00

known_hosts 100% 523 554.1KB/s 00:00

[hadoop@node101 ~]$ scp -r ~/.ssh/* 192.168.33.104:~/.ssh

The authenticity of host '192.168.33.104 (192.168.33.104)' can't be established.

ECDSA key fingerprint is SHA256:J2aFGIz5bWg1IirGYnQrhBDAuXvSUB9qJyLcxyB+CQ4.

ECDSA key fingerprint is MD5:eb:1e:e6:af:af:5a:11:92:e6:55:ff:a3:09:16:55:99.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.33.104' (ECDSA) to the list of known hosts.

hadoop@192.168.33.104's password:

authorized_keys 100% 396 349.5KB/s 00:00

id_rsa 100% 1675 1.9MB/s 00:00

id_rsa.pub 100% 396 675.8KB/s 00:00

known_hosts 100% 699 453.9KB/s 00:00

Edit the Hadoop configuration files

Perform all steps as the hadoop user. The files only need to be edited on node101.

First change to the /usr/local/hadoop/etc/hadoop/ directory.

[hadoop@node101 ~]$ cd /usr/local/hadoop/etc/hadoop/

1. Edit hadoop-env.sh

[hadoop@node101 hadoop]$ vi hadoop-env.sh

Find export JAVA_HOME=${JAVA_HOME}, comment it out with a leading #, and set JAVA_HOME to the explicit path, as follows:

#export JAVA_HOME=${JAVA_HOME}

export JAVA_HOME=/usr/java/jdk1.8.0_181-amd64

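2. Edit core-site.xml

[hadoop@node101 hadoop]$ vi core-site.xml

The configuration is as follows:

    <configuration>
        <property>
            <name>hadoop.tmp.dir</name>
            <value>file:/usr/local/hadoop/tmp</value>
            <description>Directory for the files Hadoop generates at run time</description>
        </property>
        <property>
            <name>fs.defaultFS</name>
            <value>hdfs://node101:9000</value>
            <description>Address and port of the HDFS NameNode</description>
        </property>
    </configuration>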

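3. Edit hdfs-site.xml

[hadoop@node101 hadoop]$ vi hdfs-site.xml

The configuration is as follows:

    <configuration>
        <property>
            <name>dfs.replication</name>
            <value>3</value>
            <description>Number of replicas HDFS keeps of the data; the default is 3</description>
        </property>
        <property>
            <name>dfs.namenode.name.dir</name>
            <value>file:/usr/local/hadoop/hadoopdata/namenode</value>
            <description>Directory where the NameNode stores its data</description>
        </property>
        <property>
            <name>dfs.datanode.data.dir</name>
            <value>file:/usr/local/hadoop/hadoopdata/datanode</value>
            <description>Directory where DataNodes store blocks</description>
        </property>
        <property>
            <name>dfs.secondary.http.address</name>
            <value>node102:50090</value>
            <description>Node that runs the SecondaryNameNode, on a different host from the NameNode</description>
        </property>
        <property>
            <name>dfs.permissions.enabled</name>
            <value>false</value>
            <description>Disable permission checking</description>
        </property>
    </configuration>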

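4. Edit mapred-site.xml

The /usr/local/hadoop/etc/hadoop directory does not contain a mapred-site.xml file, but it does provide the template mapred-site.xml.template. Copy the template and name the copy mapred-site.xml.

[hadoop@node101 hadoop]$ cp mapred-site.xml.template mapred-site.xml

[hadoop@node101 hadoop]$ vi mapred-site.xml

The configuration is as follows:

    <configuration>
        <property>
            <name>mapreduce.framework.name</name>
            <value>yarn</value>
            <description>Run MapReduce on YARN</description>
        </property>
    </configuration>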

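5. Edit yarn-site.xml

[hadoop@node101 hadoop]$ vi yarn-site.xml

The configuration is as follows:

    <configuration>
        <property>
            <name>yarn.resourcemanager.hostname</name>
            <value>node101</value>
            <description>Host of the YARN ResourceManager, used for its IPC addresses</description>
        </property>
        <property>
            <name>yarn.nodemanager.aux-services</name>
            <value>mapreduce_shuffle</value>
            <description>Auxiliary service through which MapReduce fetches data during shuffle</description>
        </property>
    </configuration>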

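6. Edit the slaves file

[hadoop@node101 hadoop]$ vi slaves

The file contents are as follows, one host name per line:

    node102
    node103
    node104

I have uploaded the configuration files above to: https://github.com/PengShuaixin/hadoop-2.7.3_centos7

You can download them and upload them to Linux to overwrite the original files directly.

7. Copy the configuration files to the other hosts with scp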

[hadoop@node101 hadoop]$ scp -r /usr/local/hadoop/etc/hadoop/* node102:/usr/local/hadoop/etc/hadoop

[hadoop@node101 hadoop]$ scp -r /usr/local/hadoop/etc/hadoop/* node103:/usr/local/hadoop/etc/hadoop

[hadoop@node101 hadoop]$ scp -r /usr/local/hadoop/etc/hadoop/* node104:/usr/local/hadoop/etc/hadoop
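The three scp commands above differ only in the target host, so they can also be written as a loop. This is a minimal sketch under stated assumptions: the function name `push_hadoop_conf` and the `SCP_BIN` and `CONF_DIR` variables are hypothetical conveniences, not part of Hadoop or of the original article.

```bash
#!/usr/bin/env bash
# Hypothetical helper: copy the Hadoop configuration directory to each host given.
# SCP_BIN can be overridden (for example with "echo") to preview the commands.
SCP_BIN="${SCP_BIN:-scp}"
CONF_DIR="${CONF_DIR:-/usr/local/hadoop/etc/hadoop}"

push_hadoop_conf() {
    local host
    for host in "$@"; do
        # Same command as in the text, with the host name substituted;
        # stop at the first host that fails so the error is not buried.
        "$SCP_BIN" -r "$CONF_DIR"/* "${host}:${CONF_DIR}" || return 1
    done
}
```

On node101, as the hadoop user, `push_hadoop_conf node102 node103 node104` would perform the same copies as the three commands above. Running `SCP_BIN=echo push_hadoop_conf node102` first prints the command without copying anything.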

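Initialize HDFS

Once Hadoop is configured, format the NameNode on node101 as the hadoop user. Do this only when first setting up the cluster, because formatting discards any existing HDFS metadata.

[hadoop@node101 hadoop]$ hdfs namenode -format

Output like the following indicates that formatting succeeded: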

[hadoop@node101 hadoop]$ hdfs namenode -format

18/10/04 16:21:27 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = node101/192.168.33.101

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 2.7.3

STARTUP_MSG: classpath = /usr/local/hadoop/etc/hadoop:/usr/local/hadoop/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar:/usr/local/hadoop/share/hadoop/common/lib/jaxb-api-2.2.2.jar:/usr/local/hadoop/share/hadoop/common/lib/stax-api-1.0-2.jar:/usr/local/hadoop/share/hadoop/common/lib/activation-1.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jackson-core-asl-1.9.13.jar:/usr/local/hadoop/share/hadoop/common/lib/jackson-mapper-asl-1.9.13.jar:/usr/local/hadoop/share/hadoop/common/lib/jackson-jaxrs-1.9.13.jar:/usr/local/hadoop/share/hadoop/common/lib/jackson-xc-1.9.13.jar:/usr/local/hadoop/share/hadoop/common/lib/jersey-server-1.9.jar:/usr/local/hadoop/share/hadoop/common/lib/asm-3.2.jar:/usr/local/hadoop/share/hadoop/common/lib/log4j-1.2.17.jar:/usr/local/hadoop/share/hadoop/common/lib/jets3t-0.9.0.jar:/usr/local/hadoop/share/hadoop/common/lib/httpclient-4.2.5.jar:/usr/local/hadoop/share/hadoop/common/lib/httpcore-4.2.5.jar:/usr/local/hadoop/share/hadoop/common/lib/java-xmlbuilder-0.4.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-lang-2.6.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-configuration-1.6.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-digester-1.8.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-beanutils-1.7.0.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-beanutils-core-1.8.0.jar:/usr/local/hadoop/share/hadoop/common/lib/slf4j-api-1.7.10.jar:/usr/local/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar:/usr/local/hadoop/share/hadoop/common/lib/avro-1.7.4.jar:/usr/local/hadoop/share/hadoop/common/lib/paranamer-2.3.jar:/usr/local/hadoop/share/hadoop/common/lib/snappy-java-1.0.4.1.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-compress-1.4.1.jar:/usr/local/hadoop/share/hadoop/common/lib/xz-1.0.jar:/usr/local/hadoop/share/hadoop/common/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/share/hadoop/common/lib/gson-2.2.4.jar:/usr/local/hadoop/share/hadoop/common/lib/hadoop-auth-2.7.3.jar:/usr/local/hadoop/share/hadoop/
common/lib/apacheds-kerberos-codec-2.0.0-M15.jar:/usr/local/hadoop/share/hadoop/common/lib/apacheds-i18n-2.0.0-M15.jar:/usr/local/hadoop/share/hadoop/common/lib/api-asn1-api-1.0.0-M20.jar:/usr/local/hadoop/share/hadoop/common/lib/api-util-1.0.0-M20.jar:/usr/local/hadoop/share/hadoop/common/lib/zookeeper-3.4.6.jar:/usr/local/hadoop/share/hadoop/common/lib/netty-3.6.2.Final.jar:/usr/local/hadoop/share/hadoop/common/lib/curator-framework-2.7.1.jar:/usr/local/hadoop/share/hadoop/common/lib/curator-client-2.7.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jsch-0.1.42.jar:/usr/local/hadoop/share/hadoop/common/lib/curator-recipes-2.7.1.jar:/usr/local/hadoop/share/hadoop/common/lib/htrace-core-3.1.0-incubating.jar:/usr/local/hadoop/share/hadoop/common/lib/junit-4.11.jar:/usr/local/hadoop/share/hadoop/common/lib/hamcrest-core-1.3.jar:/usr/local/hadoop/share/hadoop/common/lib/mockito-all-1.8.5.jar:/usr/local/hadoop/share/hadoop/common/lib/hadoop-annotations-2.7.3.jar:/usr/local/hadoop/share/hadoop/common/lib/guava-11.0.2.jar:/usr/local/hadoop/share/hadoop/common/lib/jsr305-3.0.0.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-cli-1.2.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-math3-3.1.1.jar:/usr/local/hadoop/share/hadoop/common/lib/xmlenc-0.52.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-httpclient-3.1.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-logging-1.1.3.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-codec-1.4.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-io-2.4.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-net-3.1.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-collections-3.2.2.jar:/usr/local/hadoop/share/hadoop/common/lib/servlet-api-2.5.jar:/usr/local/hadoop/share/hadoop/common/lib/jetty-6.1.26.jar:/usr/local/hadoop/share/hadoop/common/lib/jetty-util-6.1.26.jar:/usr/local/hadoop/share/hadoop/common/lib/jsp-api-2.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jersey-core-1.9.jar:/usr/local/hadoop/shar
e/hadoop/common/lib/jersey-json-1.9.jar:/usr/local/hadoop/share/hadoop/common/lib/jettison-1.1.jar:/usr/local/hadoop/share/hadoop/common/hadoop-common-2.7.3.jar:/usr/local/hadoop/share/hadoop/common/hadoop-common-2.7.3-tests.jar:/usr/local/hadoop/share/hadoop/common/hadoop-nfs-2.7.3.jar:/usr/local/hadoop/share/hadoop/hdfs:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-codec-1.4.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/log4j-1.2.17.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-logging-1.1.3.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/netty-3.6.2.Final.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/guava-11.0.2.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jsr305-3.0.0.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-cli-1.2.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/xmlenc-0.52.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-io-2.4.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/servlet-api-2.5.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jetty-6.1.26.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jetty-util-6.1.26.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jersey-core-1.9.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jackson-core-asl-1.9.13.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jackson-mapper-asl-1.9.13.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jersey-server-1.9.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/asm-3.2.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-lang-2.6.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/htrace-core-3.1.0-incubating.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-daemon-1.0.13.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/netty-all-4.0.23.Final.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/xercesImpl-2.9.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/xml-apis-1.3.04.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/leveldbjni-all-1.8.jar:/usr/local/hadoop/share/hadoop/hdfs/hadoop-hdfs-2.7.3.jar:/usr/local/hadoop/share/hadoop/hdfs/hadoop-hdfs-2.7.3-tests.jar:/usr/local/h
adoop/share/hadoop/hdfs/hadoop-hdfs-nfs-2.7.3.jar:/usr/local/hadoop/share/hadoop/yarn/lib/zookeeper-3.4.6-tests.jar:/usr/local/hadoop/share/hadoop/yarn/lib/commons-lang-2.6.jar:/usr/local/hadoop/share/hadoop/yarn/lib/guava-11.0.2.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jsr305-3.0.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/commons-logging-1.1.3.jar:/usr/local/hadoop/share/hadoop/yarn/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/commons-cli-1.2.jar:/usr/local/hadoop/share/hadoop/yarn/lib/log4j-1.2.17.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jaxb-api-2.2.2.jar:/usr/local/hadoop/share/hadoop/yarn/lib/stax-api-1.0-2.jar:/usr/local/hadoop/share/hadoop/yarn/lib/activation-1.1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/commons-compress-1.4.1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/xz-1.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/servlet-api-2.5.jar:/usr/local/hadoop/share/hadoop/yarn/lib/commons-codec-1.4.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jetty-util-6.1.26.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jersey-core-1.9.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jersey-client-1.9.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jackson-core-asl-1.9.13.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jackson-mapper-asl-1.9.13.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jackson-jaxrs-1.9.13.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jackson-xc-1.9.13.jar:/usr/local/hadoop/share/hadoop/yarn/lib/guice-servlet-3.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/guice-3.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/javax.inject-1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/aopalliance-1.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/commons-io-2.4.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jersey-server-1.9.jar:/usr/local/hadoop/share/hadoop/yarn/lib/asm-3.2.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jersey-json-1.9.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jettison-1.1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jaxb-impl-2.2.3-1.jar:/usr/loca
l/hadoop/share/hadoop/yarn/lib/jersey-guice-1.9.jar:/usr/local/hadoop/share/hadoop/yarn/lib/zookeeper-3.4.6.jar:/usr/local/hadoop/share/hadoop/yarn/lib/netty-3.6.2.Final.jar:/usr/local/hadoop/share/hadoop/yarn/lib/leveldbjni-all-1.8.jar:/usr/local/hadoop/share/hadoop/yarn/lib/commons-collections-3.2.2.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jetty-6.1.26.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-api-2.7.3.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-common-2.7.3.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-common-2.7.3.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-nodemanager-2.7.3.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-web-proxy-2.7.3.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-applicationhistoryservice-2.7.3.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-resourcemanager-2.7.3.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-tests-2.7.3.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-client-2.7.3.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-sharedcachemanager-2.7.3.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-applications-distributedshell-2.7.3.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-applications-unmanaged-am-launcher-2.7.3.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-registry-2.7.3.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/avro-1.7.4.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jackson-core-asl-1.9.13.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jackson-mapper-asl-1.9.13.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/paranamer-2.3.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/snappy-java-1.0.4.1.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/commons-compress-1.4.1.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/xz-1.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/hadoop-annotations-2.7.3.jar:/usr/local/hadoop/share/hadoop/map
reduce/lib/commons-io-2.4.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jersey-core-1.9.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jersey-server-1.9.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/asm-3.2.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/log4j-1.2.17.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/netty-3.6.2.Final.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/leveldbjni-all-1.8.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/guice-3.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/javax.inject-1.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/aopalliance-1.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jersey-guice-1.9.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/guice-servlet-3.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/junit-4.11.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/hamcrest-core-1.3.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-core-2.7.3.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-common-2.7.3.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-shuffle-2.7.3.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-app-2.7.3.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-2.7.3.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.7.3.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-2.7.3.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.3.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.7.3-tests.jar:/usr/local/hadoop/contrib/capacity-scheduler/*.jar

STARTUP_MSG: build = https://git-wip-us.apache.org/repos/asf/hadoop.git -r baa91f7c6bc9cb92be5982de4719c1c8af91ccff; compiled by 'root' on 2016-08-18T01:41Z

STARTUP_MSG: java = 1.8.0_181

************************************************************/

18/10/04 16:21:27 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

18/10/04 16:21:27 INFO namenode.NameNode: createNameNode [-format]

Formatting using clusterid: CID-f67c3bc3-0ce6-4def-8b59-64af51cb4f35

18/10/04 16:21:28 INFO namenode.FSNamesystem: No KeyProvider found.

18/10/04 16:21:28 INFO namenode.FSNamesystem: fsLock is fair:true

18/10/04 16:21:28 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit=1000

18/10/04 16:21:28 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true

18/10/04 16:21:28 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000

18/10/04 16:21:28 INFO blockmanagement.BlockManager: The block deletion will start around 2018 十月 04 16:21:28

18/10/04 16:21:28 INFO util.GSet: Computing capacity for map BlocksMap

18/10/04 16:21:28 INFO util.GSet: VM type = 64-bit

18/10/04 16:21:28 INFO util.GSet: 2.0% max memory 966.7 MB = 19.3 MB

18/10/04 16:21:28 INFO util.GSet: capacity = 2^21 = 2097152 entries

18/10/04 16:21:28 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false

18/10/04 16:21:28 INFO blockmanagement.BlockManager: defaultReplication = 3

18/10/04 16:21:28 INFO blockmanagement.BlockManager: maxReplication = 512

18/10/04 16:21:28 INFO blockmanagement.BlockManager: minReplication = 1

18/10/04 16:21:28 INFO blockmanagement.BlockManager: maxReplicationStreams = 2

18/10/04 16:21:28 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000

18/10/04 16:21:28 INFO blockmanagement.BlockManager: encryptDataTransfer = false

18/10/04 16:21:28 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000

18/10/04 16:21:28 INFO namenode.FSNamesystem: fsOwner = hadoop (auth:SIMPLE)

18/10/04 16:21:28 INFO namenode.FSNamesystem: supergroup = supergroup

18/10/04 16:21:28 INFO namenode.FSNamesystem: isPermissionEnabled = false

18/10/04 16:21:28 INFO namenode.FSNamesystem: HA Enabled: false

18/10/04 16:21:28 INFO namenode.FSNamesystem: Append Enabled: true

18/10/04 16:21:29 INFO util.GSet: Computing capacity for map INodeMap

18/10/04 16:21:29 INFO util.GSet: VM type = 64-bit

18/10/04 16:21:29 INFO util.GSet: 1.0% max memory 966.7 MB = 9.7 MB

18/10/04 16:21:29 INFO util.GSet: capacity = 2^20 = 1048576 entries

18/10/04 16:21:29 INFO namenode.FSDirectory: ACLs enabled? false

18/10/04 16:21:29 INFO namenode.FSDirectory: XAttrs enabled? true

18/10/04 16:21:29 INFO namenode.FSDirectory: Maximum size of an xattr: 16384

18/10/04 16:21:29 INFO namenode.NameNode: Caching file names occuring more than 10 times

18/10/04 16:21:29 INFO util.GSet: Computing capacity for map cachedBlocks

18/10/04 16:21:29 INFO util.GSet: VM type = 64-bit

18/10/04 16:21:29 INFO util.GSet: 0.25% max memory 966.7 MB = 2.4 MB

18/10/04 16:21:29 INFO util.GSet: capacity = 2^18 = 262144 entries

18/10/04 16:21:29 INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033

18/10/04 16:21:29 INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0

18/10/04 16:21:29 INFO namenode.FSNamesystem: dfs.namenode.safemode.extension = 30000

18/10/04 16:21:29 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10

18/10/04 16:21:29 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10

18/10/04 16:21:29 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25

18/10/04 16:21:29 INFO namenode.FSNamesystem: Retry cache on namenode is enabled

18/10/04 16:21:29 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

18/10/04 16:21:29 INFO util.GSet: Computing capacity for map NameNodeRetryCache

18/10/04 16:21:29 INFO util.GSet: VM type = 64-bit

18/10/04 16:21:29 INFO util.GSet: 0.029999999329447746% max memory 966.7 MB = 297.0 KB

18/10/04 16:21:29 INFO util.GSet: capacity = 2^15 = 32768 entries

18/10/04 16:21:29 INFO namenode.FSImage: Allocated new BlockPoolId: BP-148426469-192.168.33.101-1538641289434

18/10/04 16:21:29 INFO common.Storage: Storage directory /usr/local/hadoop/hadoopdata/namenode has been successfully formatted.

18/10/04 16:21:29 INFO namenode.FSImageFormatProtobuf: Saving image file /usr/local/hadoop/hadoopdata/namenode/current/fsimage.ckpt_0000000000000000000 using no compression

18/10/04 16:21:29 INFO namenode.FSImageFormatProtobuf: Image file /usr/local/hadoop/hadoopdata/namenode/current/fsimage.ckpt_0000000000000000000 of size 353 bytes saved in 0 seconds.

18/10/04 16:21:29 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

18/10/04 16:21:29 INFO util.ExitUtil: Exiting with status 0

18/10/04 16:21:29 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at node101/192.168.33.101

************************************************************/
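The log above is long, and the lines that matter are easy to miss. One way to check for them, assuming the output was captured to a file, is sketched below. The function name `format_succeeded` and the file name `format.log` are hypothetical; the two strings it searches for are taken from the log shown above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `hdfs namenode -format` output on stdin and succeed
# only if it reports a formatted storage directory and an exit status of 0.
format_succeeded() {
    local log
    log="$(cat)"
    printf '%s\n' "$log" | grep -q 'has been successfully formatted' &&
        printf '%s\n' "$log" | grep -q 'Exiting with status 0'
}
```

For example, run `hdfs namenode -format 2>&1 | tee format.log` and then `format_succeeded < format.log && echo "format OK"`. The `2>&1` captures the log whether it is written to stdout or stderr.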

Start Hadoop

Perform these steps as the hadoop user.
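The start scripts reach the other nodes over SSH, so it can be worth confirming passwordless login before starting anything. The following is a minimal sketch, not from the original article: the function name `check_passwordless_ssh` and the `SSH_BIN` override are hypothetical. `BatchMode=yes` makes ssh fail instead of prompting for a password, which is what turns a missing key into a visible failure.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check: try a no-op command on each host over SSH
# without allowing password prompts, and report which hosts fail.
SSH_BIN="${SSH_BIN:-ssh}"

check_passwordless_ssh() {
    local host failed=0
    for host in "$@"; do
        # "true" is a no-op remote command; stdin is detached so ssh cannot
        # swallow input meant for the calling script.
        if "$SSH_BIN" -o BatchMode=yes -o ConnectTimeout=5 "$host" true </dev/null; then
            echo "OK   $host"
        else
            echo "FAIL $host"
            failed=1
        fi
    done
    return "$failed"
}
```

On node101, `check_passwordless_ssh node101 node102 node103 node104` should print four OK lines. A host whose key has not been accepted yet (the yes/no fingerprint question) also shows up as FAIL, since BatchMode cannot answer the prompt.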

Start HDFS

Note: this can be run from any node in the cluster.

[hadoop@node101 hadoop]$ start-dfs.sh

You should see output like the following:

[hadoop@node101 hadoop]$ start-dfs.sh

Starting namenodes on [node101]

The authenticity of host 'node101 (192.168.33.101)' can't be established.

ECDSA key fingerprint is SHA256:jXz9wiErwjKiKaa+PJoCRyecdM3jVnu+AW2PrZucWxk.

ECDSA key fingerprint is MD5:d1:a2:b4:6d:30:21:d7:f8:3c:17:e8:43:93:6c:5e:da.

Are you sure you want to continue connecting (yes/no)? yes

node101: Warning: Permanently added 'node101,192.168.33.101' (ECDSA) to the list of known hosts.

node101: starting namenode, logging to /usr/local/hadoop/logs/hadoop-hadoop-namenode-node101.out

node103: starting datanode, logging to /usr/local/hadoop/logs/hadoop-hadoop-datanode-node103.out

node104: starting datanode, logging to /usr/local/hadoop/logs/hadoop-hadoop-datanode-node104.out

node102: starting datanode, logging to /usr/local/hadoop/logs/hadoop-hadoop-datanode-node102.out

Starting secondary namenodes [node102]

node102: starting secondarynamenode, logging to /usr/local/hadoop/logs/hadoop-hadoop-secondarynamenode-node102.out

Start YARN

Note: this must be run on the master node, because start-yarn.sh starts the ResourceManager on the host where it is run.

[hadoop@node101 hadoop]$ start-yarn.sh

You should see output like the following:

[hadoop@node101 hadoop]$ start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /usr/local/hadoop/logs/yarn-hadoop-resourcemanager-node101.out

node104: starting nodemanager, logging to /usr/local/hadoop/logs/yarn-hadoop-nodemanager-node104.out

node102: starting nodemanager, logging to /usr/local/hadoop/logs/yarn-hadoop-nodemanager-node102.out

node103: starting nodemanager, logging to /usr/local/hadoop/logs/yarn-hadoop-nodemanager-node103.out

Check the server processes

Run the following command on each node:

jps

node101

[hadoop@node101 hadoop]$ jps

15713 Jps

14847 NameNode

15247 ResourceManager

node102

[hadoop@node102 ~]$ jps

15228 NodeManager

14925 DataNode

14989 SecondaryNameNode

15567 Jps

node103

[hadoop@node103 ~]$ jps

14532 DataNode

15143 Jps

14766 NodeManager

node104

[hadoop@node104 ~]$ jps

15217 Jps

14805 NodeManager

14571 DataNode

The processes on each node match those assigned in the cluster deployment plan.
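Comparing the jps output with the plan by eye works for four nodes, but the comparison can also be scripted. The sketch below is an illustration only: the function name `missing_daemons` is hypothetical, and it assumes jps prints one `<pid> <name>` pair per line, as in the output above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read jps output on stdin and print the names of the
# expected daemons (given as arguments) that are not running.
missing_daemons() {
    local jps_output daemon missing=""
    jps_output="$(cat)"
    for daemon in "$@"; do
        # Compare the whole second field, so that "NameNode" does not
        # accidentally match "SecondaryNameNode".
        if ! printf '%s\n' "$jps_output" |
            awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
            missing="$missing $daemon"
        fi
    done
    echo "${missing# }"
}
```

On node101, `jps | missing_daemons NameNode ResourceManager` prints an empty line when both daemons are running. On node102 the expected list would be DataNode, NodeManager, and SecondaryNameNode.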

启动HDFS和YARN的web管理界面

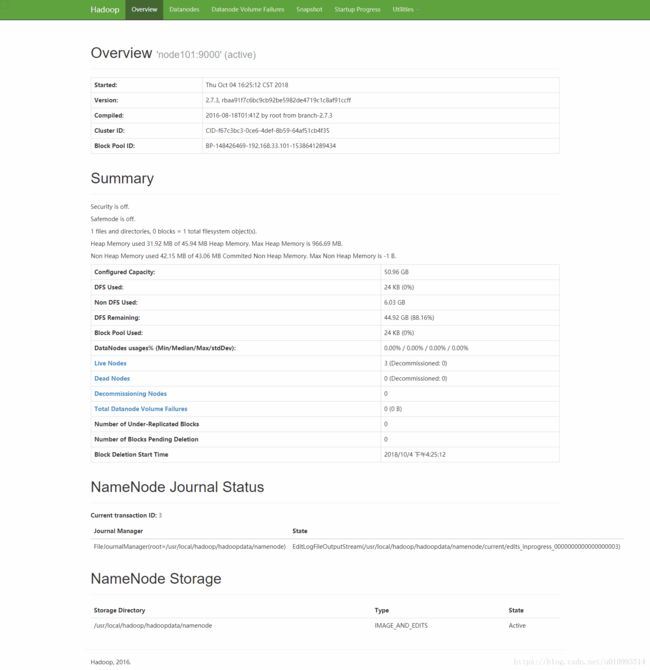

HDFS : http://192.168.33.101:50070

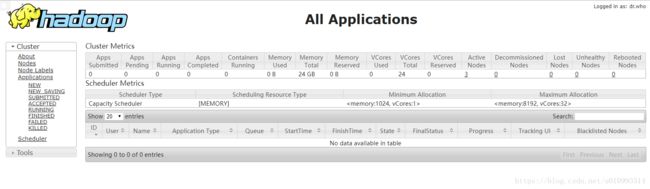

YARN : http://192.168.33.101:8088

HDFS界面

YARN界面
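If no browser is at hand, the two addresses can be probed from a shell instead. This is a rough sketch that assumes curl is installed; the function names `http_status` and `check_web_uis` and the `CURL_BIN` override are hypothetical. It treats any 2xx or 3xx status as a sign that the UI is answering.

```bash
#!/usr/bin/env bash
# Hypothetical helper: report the HTTP status code of each web UI address.
CURL_BIN="${CURL_BIN:-curl}"

http_status() {
    # -s: silent, -o /dev/null: discard the body, -w: print only the status code
    "$CURL_BIN" -s -o /dev/null --max-time 5 -w '%{http_code}' "$1"
}

check_web_uis() {
    local url code failed=0
    for url in "$@"; do
        # A failed connection must not abort the loop, hence "|| true"
        code="$(http_status "$url")" || true
        echo "$url -> ${code:-no response}"
        case "$code" in
            2??|3??) ;;        # 2xx or 3xx: the UI answered
            *) failed=1 ;;
        esac
    done
    return "$failed"
}
```

For this cluster the call would be `check_web_uis http://192.168.33.101:50070 http://192.168.33.101:8088`. A non-zero return value means at least one address did not answer with a 2xx or 3xx status.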

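Related articles

Installing Hadoop on Windows (without a virtual machine)

Deploying Hadoop on CentOS 7 (Standalone)

Deploying Hadoop on CentOS 7 (Pseudo-Distributed)

Deploying a Hadoop Cluster on CentOS 7 (HA, High Availability)

That completes the cluster configuration. If you run into any problems along the way, feel free to leave a comment below!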